Automated Synthesis Platforms: A Cost-Benefit Analysis for Accelerating Drug Discovery

This article provides a comprehensive cost-benefit analysis of automated synthesis platforms for researchers, scientists, and drug development professionals.


Abstract

This article provides a comprehensive cost-benefit analysis of automated synthesis platforms for researchers, scientists, and drug development professionals. It explores the foundational technologies, from robotic hardware to AI-driven synthesis planning, that underpin these systems. The analysis delves into practical methodologies and applications, demonstrating how automation integrates into established workflows like the Design-Make-Test-Analyse (DMTA) cycle to reduce timelines and costs. It addresses critical troubleshooting, optimization challenges, and the evolving trust framework required for robust implementation. Finally, it validates the economic proposition through comparative analysis with traditional methods, budget impact assessments, and real-world case studies, offering a data-driven perspective on the return on investment and strategic value of automation in biomedical research.

The Foundation of Automated Synthesis: Core Technologies and Economic Drivers

Automated synthesis represents a fundamental shift in chemical research, replacing traditional manual processes with robotic and computational systems. This evolution is transforming laboratories from artisanal workshops into automated factories of discovery, accelerating the pace of research across pharmaceuticals, materials science, and biotechnology [1] [2]. The core definition encompasses integrated systems where robotics, artificial intelligence, and specialized hardware work in concert to design, execute, and analyze chemical experiments with minimal human intervention.

The transition toward full automation occurs across multiple levels of sophistication. Researchers at UNC-Chapel Hill have defined a five-level framework that categorizes this progression from simple assistive devices to fully autonomous systems [2]. At Level A1 (Assistive Automation), individual tasks such as liquid handling are automated while humans handle most work. Level A2 (Partial Automation) involves robots performing multiple sequential steps with human supervision. Level A3 (Conditional Automation) enables robots to manage entire processes, requiring intervention only for unexpected events. Level A4 (High Automation) allows independent experiment execution with autonomous reaction to unusual conditions, while Level A5 (Full Automation) represents complete autonomy including self-maintenance and safety management [2]. This framework provides critical context for comparing current platforms and their respective capabilities within the cost-benefit analysis landscape.
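When comparing platforms, the five levels described above can be encoded as an ordered type so that a platform's capability can be checked against what a workflow needs. The following is a minimal sketch; the class name, member names, and the `meets_requirement` helper are illustrative assumptions and are not part of the cited framework.

```python
from enum import IntEnum


class AutonomyLevel(IntEnum):
    """Five automation levels, A1 (lowest) to A5 (highest), as summarized in the text."""

    A1_ASSISTIVE = 1    # single tasks automated, e.g. liquid handling
    A2_PARTIAL = 2      # multiple sequential steps under human supervision
    A3_CONDITIONAL = 3  # whole processes; humans step in for unexpected events
    A4_HIGH = 4         # independent execution, reacts to unusual conditions
    A5_FULL = 5         # complete autonomy, incl. self-maintenance and safety


def meets_requirement(platform: AutonomyLevel, required: AutonomyLevel) -> bool:
    """Return True if the platform's level is at least the required level."""
    return platform >= required


if __name__ == "__main__":
    # Hypothetical comparison: an A2 platform against a workflow needing A3.
    print(meets_requirement(AutonomyLevel.A2_PARTIAL, AutonomyLevel.A3_CONDITIONAL))
```

Using an ordered enum keeps comparisons explicit and avoids passing bare integers around in selection scripts.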

Comparative Analysis of Automated Synthesis Platforms

The market offers diverse automated synthesis solutions ranging from specialized modular systems to flexible mobile platforms. Each architecture presents distinct advantages for specific research applications and budget considerations.

Table 1: Comparative Performance Metrics of Automated Synthesis Platforms

| Platform Type | Key Features | Throughput Capacity | Implementation Cost | Flexibility/Adaptability | Primary Applications |
|---|---|---|---|---|---|
| Modular Systems (e.g., Chemputer [1]) | Standardized modules, XDL language, reproducible protocols | Medium to High | $50,000-$150,000+ [3] | Moderate (module-dependent) | Organic synthesis, pathway optimization |
| Mobile Robots (e.g., Free-roaming systems [4]) | Navigate existing labs, share human equipment, multimodal analysis | Variable (depends on station integration) | $200,000+ (complex setups) | High (utilizes diverse instruments) | Exploratory chemistry, supramolecular assembly |
| Industrial Automation | ATEX-certified, corrosion-resistant, high payload | Very High | $50,000-$300,000+ [3] | Low (fixed processes) | Chemical manufacturing, hazardous material handling |
| Specialized Platforms (e.g., RoboChem [5]) | Integrated flow reactors, in-line analytics, tailored hardware | High for specific chemistry | N/A (often custom) | Low (domain-specific) | Photocatalysis, reaction optimization |

Table 2: Cost-Benefit Analysis of Automation Approaches

| Automation Approach | Initial Investment | ROI Timeline | Personnel Requirements | Data Quality & Reproducibility | Limitations |
|---|---|---|---|---|---|
| Benchtop Lab Systems | $50,000-$150,000 [3] | 18-36 months [3] | Technical specialist | High for standardized protocols | Limited flexibility, specialized maintenance |
| Mobile Robot Platforms | High ($200,000+) | 2-3+ years (research setting) | Interdisciplinary team | Excellent (multimodal validation) [4] | Complex integration, navigation challenges |
| Industrial Manufacturing | $50,000-$300,000+ [3] | 18-24 months (production) | Robotics engineers | Exceptional (GMP compliance) | High upfront costs, rigid workflows |
| Open-Source/DIY Systems (e.g., FLUID [1]) | <$50,000 | N/A (research focus) | Technical expertise | Good (community validation) | Limited support, self-integration required |

Experimental Protocols and Workflows

Protocol 1: Mobile Robotic Platform for Exploratory Synthesis

A landmark study published in Nature demonstrates an integrated workflow for autonomous exploratory synthesis using mobile robots [4]. This protocol exemplifies how flexible automation can navigate complex chemical spaces without predefined targets.

Methodology:

- Platform Configuration: The system integrates a Chemspeed ISynth synthesizer, UPLC-MS, benchtop NMR, and commercial photoreactor stations, linked by mobile robotic agents for sample transport [4].

- Decision Algorithm: A heuristic decision-maker processes orthogonal NMR and UPLC-MS data, applying binary pass/fail criteria determined by domain experts. Reactions must pass both analytical assessments to proceed to subsequent stages [4].

- Experimental Sequence:

  - Parallel synthesis of precursor libraries (e.g., ureas and thioureas via amine-isocyanate condensation)

  - Automated aliquot reformatting for orthogonal analysis

  - Mobile robot transportation to analytical stations

  - Data processing and decision-making for scale-up or diversification

  - Functional assay integration (e.g., host-guest binding evaluation) [4]

Key Performance Metrics: The system successfully executed structural diversification chemistry, supramolecular host-guest chemistry, and photochemical synthesis, demonstrating the capacity to navigate complex reaction spaces where outcomes aren't defined by a single optimization parameter [4].
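The pass/fail decision step in this protocol can be sketched as a simple conjunction of two binary results. This is a minimal illustration, not the published implementation: the `AnalysisResult` type, its field names, and the reaction IDs are hypothetical, and the real criteria that produce each pass/fail flag are set by domain experts and are not reproduced here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AnalysisResult:
    """Binary outcomes of the two orthogonal analyses for one reaction."""

    reaction_id: str
    nmr_pass: bool      # hypothetical flag: expert-defined NMR criterion met
    uplc_ms_pass: bool  # hypothetical flag: expert-defined UPLC-MS criterion met


def select_for_next_stage(results: list[AnalysisResult]) -> list[str]:
    """Return IDs of reactions that pass BOTH analytical assessments."""
    return [r.reaction_id for r in results if r.nmr_pass and r.uplc_ms_pass]


if __name__ == "__main__":
    # Illustrative inputs only; these are not data from the cited study.
    batch = [
        AnalysisResult("urea-01", nmr_pass=True, uplc_ms_pass=True),
        AnalysisResult("urea-02", nmr_pass=True, uplc_ms_pass=False),
        AnalysisResult("thiourea-01", nmr_pass=False, uplc_ms_pass=True),
    ]
    print(select_for_next_stage(batch))
```

Requiring both techniques to agree trades some throughput for confidence: a reaction that looks clean by mass but ambiguous by NMR is held back rather than scaled up.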

Protocol 2: Closed-Loop Optimization for Photocatalysis

The "RoboChem" system exemplifies specialized platforms that integrate continuous-flow reactors with in-line analytics for autonomous reaction optimization [5].

Methodology:

- Reactor Design: Continuous-flow photoreactor with transparent tubing wrapped around UV LEDs, integrated with IoT sensors for real-time monitoring [5].

- Analytical Integration: In-line NMR provides structural confirmation and conversion data without manual intervention.

- Optimization Algorithm: Bayesian optimization algorithms guide experimental parameters based on real-time analytical feedback, creating a fully closed-loop system [5].

- Experimental Sequence:

  - Algorithm selects reaction conditions based on previous results

  - Continuous-flow system executes reaction with precise residence time control

  - In-line NMR analyzes output stream

  - Data feeds back to algorithm for next condition selection

  - Process continues until optimal conditions identified

Key Performance Metrics: RoboChem demonstrated the ability to optimize photocatalytic reactions with higher efficiency and speed than manual approaches, identifying improved conditions in hours rather than days [5].
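The select, execute, analyze, feed-back cycle described in this protocol can be sketched as a short loop. To keep the example self-contained, the proposer below is plain uniform random sampling standing in for Bayesian optimization, and `simulated_yield` is a synthetic function standing in for the flow reactor plus in-line NMR. The parameter names, ranges, and yield surface are all assumptions made for illustration.

```python
import random
from typing import Callable

Conditions = dict[str, float]
Bounds = dict[str, tuple[float, float]]

# Hypothetical search space; names and ranges are illustrative only.
BOUNDS: Bounds = {"residence_min": (1.0, 30.0), "light_fraction": (0.1, 1.0)}


def simulated_yield(c: Conditions) -> float:
    """Synthetic stand-in for 'run the flow reaction, read the in-line NMR'."""
    penalty = (0.3 * (c["residence_min"] - 12.0) ** 2
               + 80.0 * (c["light_fraction"] - 0.7) ** 2)
    return max(0.0, 100.0 - penalty)


def propose(history: list[tuple[Conditions, float]], bounds: Bounds,
            rng: random.Random) -> Conditions:
    """Stand-in proposer: uniform random sampling (NOT Bayesian optimization).

    A real optimizer would use `history` to choose the next point.
    """
    return {name: rng.uniform(lo, hi) for name, (lo, hi) in bounds.items()}


def closed_loop(measure: Callable[[Conditions], float], bounds: Bounds,
                n_iter: int, seed: int = 0) -> tuple[Conditions, float]:
    """Run n_iter propose/measure cycles and return the best (conditions, result)."""
    rng = random.Random(seed)
    history: list[tuple[Conditions, float]] = []
    for _ in range(n_iter):
        conditions = propose(history, bounds, rng)  # select conditions
        result = measure(conditions)                # execute and analyze
        history.append((conditions, result))        # feed back for next round
    return max(history, key=lambda pair: pair[1])


if __name__ == "__main__":
    best_conditions, best_result = closed_loop(simulated_yield, BOUNDS, n_iter=50)
    print(best_conditions, round(best_result, 1))
```

Keeping `propose` as a separate function means a model-based optimizer can replace the random sampler without touching the loop itself.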

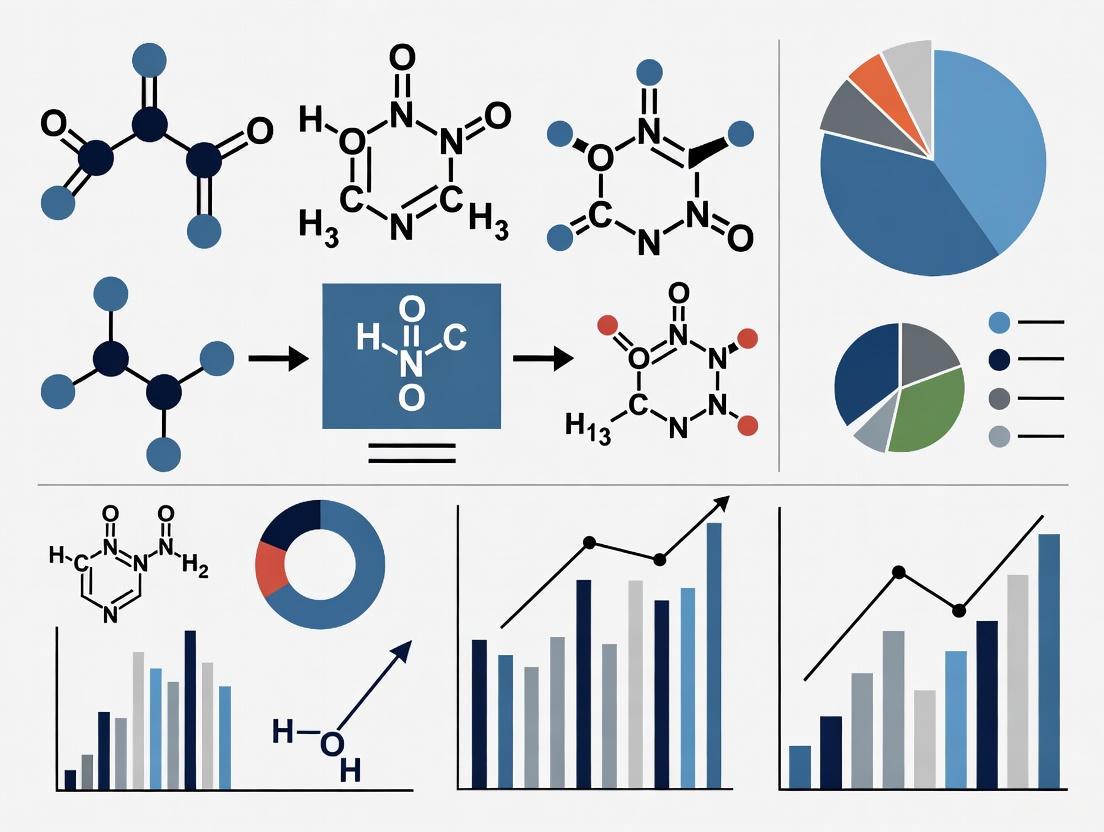

Figure 1: Mobile robotic synthesis workflow integrating modular stations and heuristic decision-making [4].

The Scientist's Toolkit: Essential Research Reagent Solutions

Successful implementation of automated synthesis requires specialized materials and reagents tailored to robotic platforms. The selection of appropriate consumables directly impacts experimental reproducibility and system reliability.

Table 3: Essential Research Reagent Solutions for Automated Synthesis

| Reagent/Category | Function in Automated Workflows | Platform Compatibility Considerations | Impact on Experimental Outcomes |
|---|---|---|---|
| Specialized Phosphoramidites | Solid-phase DNA synthesis building blocks | Automated synthesizer compatibility | Critical for oligonucleotide purity and yield [6] |
| ATEX-Certified Solvents | Reaction media for hazardous chemistry | Explosion-proof robotic systems | Enables safe handling of flammable materials [3] |
| Calibration Standards | Analytical instrument validation | Specific to UPLC-MS/NMR configurations | Ensures data reliability across automated runs [4] |
| Functionalized Solid Supports | Immobilized reagents and catalysts | Flow chemistry reactor compatibility | Enables continuous processes and catalyst recycling [5] |
| Stable Isotope-Labeled Compounds | Reaction mechanism studies | Compatibility with in-line NMR detection | Provides real-time mechanistic insights [5] |
| Enzymatic DNA Synthesis Mix | PCR-based gene assembly | Thermal cycler integration | Reduces chemical waste and improves fidelity [6] |


Technological Foundations and Implementation Architecture

The architecture of modern automated synthesis platforms combines physical robotics with digital intelligence. Understanding these components is essential for effective platform selection and implementation.

Figure 2: Automated synthesis system architecture showing integration across control, physical, analytical, and intelligence layers [1] [4] [5].

Key Architectural Components

Control Systems: Modern platforms utilize specialized languages like XDL (Chemical Description Language) to abstract chemical procedures from specific hardware, enabling protocol transfer across systems [1]. This digital layer orchestrates timing, coordinates robotic movements, and integrates data streams from multiple instruments.
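The idea of separating a chemical procedure from the hardware that runs it can be illustrated with a small sketch. This is not XDL syntax or the Chemputer API: the step types, the `Backend` protocol, and the `LoggingBackend` class are hypothetical names chosen only to show the abstraction.

```python
from dataclasses import dataclass
from typing import Protocol, Union


@dataclass(frozen=True)
class Add:
    """Hardware-agnostic step: add a volume of reagent to a vessel."""
    vessel: str
    reagent: str
    volume_ml: float


@dataclass(frozen=True)
class Stir:
    """Hardware-agnostic step: stir a vessel for a given time."""
    vessel: str
    minutes: float


Step = Union[Add, Stir]


class Backend(Protocol):
    """Anything that can turn an abstract step into a hardware action."""
    def execute(self, step: Step) -> str: ...


class LoggingBackend:
    """Hypothetical backend that only records what real hardware would do."""

    def __init__(self, name: str) -> None:
        self.name = name

    def execute(self, step: Step) -> str:
        return f"[{self.name}] {step}"


def run(procedure: list[Step], backend: Backend) -> list[str]:
    """Execute the same abstract procedure on whichever backend is supplied."""
    return [backend.execute(step) for step in procedure]


if __name__ == "__main__":
    procedure: list[Step] = [Add("reactor", "amine", 5.0), Stir("reactor", 10.0)]
    for line in run(procedure, LoggingBackend("benchtop")):
        print(line)
```

Because the procedure is plain data, the same list of steps can be handed to a different backend without rewriting the chemistry.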

Physical Robotics: Implementation ranges from stationary robotic arms (e.g., UR5e for sample preparation [1]) to mobile platforms that navigate laboratory spaces. These systems employ advanced perception for object recognition and manipulation, enabling handling of standard laboratory glassware and equipment [5].

Analytical Integration: Successful platforms incorporate multiple orthogonal characterization techniques. The combination of UPLC-MS for molecular weight information and NMR for structural analysis provides comprehensive reaction assessment, mimicking the multifaceted approach of human chemists [4].

Decision Intelligence: Systems employ either heuristic algorithms based on domain expertise or machine learning approaches for optimization. Heuristic methods excel in exploratory chemistry where multiple products are possible, while ML optimization shines in parameter optimization for known reactions [4] [5].

Cost-Benefit Analysis and Future Outlook

The adoption of automated synthesis platforms represents a significant investment decision for research organizations. A thorough cost-benefit analysis must consider both quantitative and qualitative factors across the research lifecycle.

Financial Considerations

Initial Investment: Platform costs range from $50,000 for benchtop systems to $300,000+ for industrial configurations, with specialized mobile platforms potentially exceeding this range [3]. Additional expenses include facility modifications, safety systems, and integration with existing instrumentation.

Operational Costs: Annual maintenance typically adds 10-15% of initial investment, with consumables, calibration standards, and specialized reagents representing ongoing expenses. Personnel costs for specialized technicians or robotics engineers must also be factored.

Return on Investment: Most systems deliver ROI within 18-36 months through reduced labor requirements, higher throughput, and improved material efficiency [3]. Additional financial benefits include reduced safety incidents and lower waste disposal costs.
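A simple payback calculation shows how the purchase price, the 10-15% annual maintenance figure, and the 18-36 month ROI window fit together. The sketch below assumes a straight-line payback model (no discounting), and the annual savings figure is a hypothetical input, not a number from the cited sources.

```python
def payback_months(initial_investment: float,
                   annual_gross_savings: float,
                   maintenance_rate: float) -> float:
    """Months to recover the initial investment under a simple payback model.

    maintenance_rate is the annual maintenance cost as a fraction of the
    initial investment (e.g. 0.10-0.15 per the text).
    """
    annual_net = annual_gross_savings - maintenance_rate * initial_investment
    if annual_net <= 0:
        raise ValueError("Net annual savings must be positive to reach payback.")
    return 12.0 * initial_investment / annual_net


if __name__ == "__main__":
    # Hypothetical scenario: $150,000 benchtop system, $90,000/year gross
    # savings (assumed), 12.5% annual maintenance.
    months = payback_months(150_000, 90_000, 0.125)
    print(f"Payback in about {months:.1f} months")
```

In this assumed scenario the payback lands inside the quoted 18-36 month window; halving the assumed savings would push it well beyond that window, which is why the savings estimate deserves the most scrutiny.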

Strategic Benefits Beyond Cost Savings

Accelerated Discovery Timelines: Automated systems can operate continuously without fatigue, dramatically compressing design-make-test-analyze (DMTA) cycles. AI-driven platforms like those from Exscientia report design cycles approximately 70% faster than conventional approaches [7].

Enhanced Reproducibility and Data Quality: Automated logging of experimental parameters creates structured, digital records that enhance reproducibility. Systems consistently execute protocols with precision unattainable through manual methods, reducing human error [1] [5].

Safety Improvements: Enclosed automated systems minimize researcher exposure to hazardous compounds, enabling exploration of potentially toxic or reactive substances with reduced risk [3].

Democratization Potential: Open-source platforms like the Chemputer and FLUID aim to make automation accessible to smaller research groups, potentially leveling the playing field between well-funded institutions and smaller laboratories [1].

Implementation Challenges and Limitations

Technical Complexity: Integration with legacy laboratory equipment presents significant engineering challenges. Many existing instruments lack robotic compatibility, requiring custom interfaces [3].

Workflow Adaptation: Research processes must be reengineered for automation, potentially limiting flexibility for exploratory investigations that require continuous human intuition [4].

Personnel Requirements: Effective operation demands cross-trained researchers with expertise in both chemistry and robotics, creating staffing challenges for traditional academic departments [3] [2].

Maintenance Demands: Chemical environments accelerate wear on robotic components, requiring specialized maintenance protocols and potentially increasing downtime [3].

The automated synthesis landscape offers diverse solutions tailored to different research needs and budgetary constraints. Platform selection should align with specific research objectives:

- For high-throughput optimization of known reactions, specialized flow systems like RoboChem provide exceptional efficiency [5].

- For exploratory chemistry with unpredictable outcomes, mobile robotic platforms with multimodal analysis offer superior flexibility [4].

- For educational institutions or budget-constrained environments, open-source modular systems present a viable entry point [1].

The most successful implementations adopt a phased approach, beginning with partial automation that addresses specific bottlenecks while gradually expanding capabilities. This strategy maximizes ROI while building institutional expertise. Regardless of the specific platform, the future of chemical research clearly lies in collaborative human-robot partnerships that leverage the respective strengths of human intuition and robotic precision [1] [2] [5].

As the field evolves toward higher autonomy levels, researchers must balance efficiency gains against the need for chemical insight and creativity. The most transformative applications of automated synthesis will likely emerge not from simply accelerating existing workflows, but from enabling experimental approaches that were previously impossible through manual methods alone.

The adoption of automated synthesis platforms is reshaping research in chemistry and materials science. The core of this transformation lies in the integration of three distinct technological layers: the robotic hardware that performs physical tasks, the generative AI that plans experiments and analyzes results, and the LLM agents that orchestrate the entire workflow. This guide provides a comparative analysis of the components within this technology stack, framing the selection within a cost-benefit analysis crucial for research-driven deployment.

Robotic Hardware: The Physical Layer of Automation

The robotic hardware forms the foundation of any self-driving lab (SDL), responsible for the physical execution of experiments. Platforms vary significantly in their design, capabilities, and cost, directly influencing the types of scientific questions they can address.

The table below compares the characteristics of different robotic platforms and hardware components as used in automated synthesis.

Table: Comparison of Robotic Hardware Platforms for Automated Synthesis

| Platform / Component | Type / Role | Key Characteristics | Reported Throughput & Performance | Relative Cost & Accessibility |
|---|---|---|---|---|
| Affordable Electrochemical Platform [8] | Integrated SDL Platform | Open-source design; custom potentiostat; automated synthesis | Database of 400 electrochemical measurements [8] | "Cost-effective"; "open science" approach [8] |
| iChemFoundry Platform [9] | Integrated SDL Platform | Intelligent automated system; high-throughput synthesis | "High efficiency, high reproducibility, high flexibility" [9] | Not explicitly stated, part of a global innovation center [9] |
| Standard Bots RO1 [10] | Collaborative Robot (Cobot) | 6-axis arm; ±0.025 mm repeatability; 18 kg payload [10] | Used for CNC tending, palletizing, packaging [10] | $37,000; positioned as affordable [10] |
| Microfluidic Reactor Systems [11] | Reactor & Sampling System | Low material usage; rapid spectral sampling | Demonstrated: ~100 samples/hour; Theoretical: 1,200 measurements/hour [11] | Enables exploration with expensive/hazardous materials [11] |

Experimental Protocols for Hardware Performance Validation

The performance metrics cited in the table above are derived from specific experimental protocols detailed in the source research. A key metric for any SDL is its degree of autonomy, which defines how much human intervention is required.

Figure 1: Levels of Autonomy in Self-Driving Labs. The pathway from human-dependent to fully autonomous operation, with the highest level being theoretical as of 2024 [11].

- Measuring Throughput and Operational Lifetime: The methodology for determining a platform's throughput involves distinguishing between its theoretical maximum and its demonstrated performance in a specific experimental context [11]. Similarly, operational lifetime is quantified as either demonstrated (the maximum/average runtime achieved in a study) or theoretical (the maximum potential runtime without physical constraints like reagent depletion). For example, a microfluidic system might have a theoretical throughput of 1,200 measurements per hour but a demonstrated throughput of 100 samples per hour for longer reactions [11].

- Quantifying Experimental Precision: The precision of an automated platform, defined as the unavoidable spread of data points around a ground truth, is foundational for reliable AI-driven optimization. The established protocol involves conducting unbiased replicates of a single experimental condition. To prevent systematic bias, the test condition is alternated with random condition sets before each replicate, mimicking the conditions of an active optimization loop. Low precision can severely hamper an algorithm's ability to navigate a parameter space effectively [11].
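The replicate protocol for precision can be sketched as follows: before each replicate of the test condition, a randomly chosen condition is run and its result discarded, and the spread of the replicates is then summarized. This is a minimal sketch on a simulated one-parameter platform. The function names, the linear response, and the Gaussian noise model are assumptions for illustration, and the sample standard deviation is used here as one reasonable summary of spread.

```python
import random
import statistics
from typing import Callable


def measure_precision(run_experiment: Callable[[float], float],
                      test_condition: float,
                      bounds: tuple[float, float],
                      n_replicates: int,
                      rng: random.Random) -> tuple[float, float]:
    """Return (mean, sample standard deviation) of interleaved replicates."""
    if n_replicates < 2:
        raise ValueError("At least two replicates are needed for a spread.")
    replicates = []
    for _ in range(n_replicates):
        # Run a random condition first (result discarded) so each replicate
        # follows a different prior state, as in a live optimization loop.
        run_experiment(rng.uniform(*bounds))
        replicates.append(run_experiment(test_condition))
    return statistics.mean(replicates), statistics.stdev(replicates)


def make_simulated_platform(noise_sd: float,
                            rng: random.Random) -> Callable[[float], float]:
    """Hypothetical platform: linear response plus Gaussian measurement noise."""
    def run_experiment(x: float) -> float:
        return 2.0 * x + rng.gauss(0.0, noise_sd)
    return run_experiment


if __name__ == "__main__":
    rng = random.Random(0)
    platform = make_simulated_platform(noise_sd=0.1, rng=rng)
    mean, spread = measure_precision(platform, 0.5, (0.0, 1.0), 20, rng)
    print(f"mean={mean:.3f}, spread={spread:.3f}")
```

On real hardware the interleaved runs also expose carry-over effects, which back-to-back replicates of the same condition would hide.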

Generative AI and LLM Agents: The Digital Brain

The digital layer encompasses the artificial intelligence that controls the robotic hardware. While the terms are often used interchangeably, a distinction can be made: Generative AI refers to models that create content or plans, while LLM Agents are systems that use LLMs to reason, make decisions, and take actions within an environment, such as an SDL.

Comparative Performance of LLMs in Robotic Tasks

The choice of AI model is critical, as it directly impacts the robot's ability to understand commands, reason about its environment, and plan complex tasks. The following table synthesizes performance data from benchmark leaderboards and specific robotics experiments.

Table: LLM & AI Model Comparison for Robotics and Research Applications

| AI Model | Best Suited For (Use Case) | Key Experimental Performance Data | Cost & Efficiency Considerations |
|---|---|---|---|
| Claude (Opus/Sonnet) | Coding for robotic orchestration; complex task planning | 82.0% on SWE-bench (Agentic Coding) [12]; 40% overall accuracy in "pass the butter" robotics test [13] | Higher cost; Claude 4 Sonnet cited as 20x more expensive than Gemini 2.5 Flash for coding [14] |
| Gemini Pro & Flash | Multimodal reasoning; cost-effective coding & automation | 91.8% on MMMLU (Multilingual Reasoning) [12]; 37% accuracy in robotics test [13]; "most cost-effective" for coding [14] | Gemini 2.5 Flash is a low-cost option [14] [12] |
| GPT Models | Everyday assistance; intuitive task understanding | 35.2% on "Humanity's Last Exam" (Overall) [12]; "magical" memory feature for contextual assistance [14] | Not the most cost-effective for large-scale coding tasks [14] |
| Specialized Models (e.g., Gemini ER 1.5) | Robotic-specific tasks | Underperformed general-purpose models (Gemini 2.5 Pro, Claude Opus 4.1, GPT-5) in a holistic robotics test [13] | Investment in specialized models may not yet yield superior performance over general-purpose LLMs [13] |

Experimental Protocols for Evaluating LLM Agents in Robotics

The performance data for LLMs in robotics comes from carefully designed experimental protocols that test reasoning, planning, and physical execution.

- The "Pass the Butter" Protocol: A real-world experiment tested state-of-the-art LLMs embodied in a vacuum robot. The methodology was as follows [13]:

  - Task Decomposition: The single prompt, "pass the butter," was sliced into a series of sub-tasks: find the butter (placed in another room), recognize it among several packages, obtain it, locate the human (who may have moved), deliver it, and wait for confirmation of receipt.

  - Model Scoring: Each LLM was scored on its performance in each individual task segment, and these were combined into a total accuracy score.

  - Baseline Establishment: Three humans were tested using the same protocol to establish a performance baseline (achieving 95%).

- Benchmarking Against Contaminated Data: When evaluating models based on public leaderboards, it is critical to acknowledge benchmark saturation and data contamination. Relying on saturated benchmarks like MMLU offers little differentiation. Instead, focus on newer, frequently updated benchmarks like LiveBench, LiveCodeBench, and SWE-bench, which are designed to be more resistant to data leakage and provide a better measure of a model's true reasoning capabilities on novel problems [15].
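The per-segment scoring used in the "pass the butter" protocol can be sketched as below. The source does not state how segment scores were combined, so this example assumes an unweighted mean over the six sub-tasks; the sub-task identifiers and the example scores are hypothetical.

```python
# Sub-tasks as listed in the protocol description; identifiers are illustrative.
SUBTASKS = (
    "find_butter",
    "recognize_butter",
    "obtain_butter",
    "locate_human",
    "deliver_butter",
    "await_confirmation",
)


def total_accuracy(scores: dict[str, float]) -> float:
    """Combine per-sub-task scores (each in [0, 1]) into one accuracy value.

    Assumption: an unweighted mean. The original study may weight differently.
    """
    missing = set(SUBTASKS) - scores.keys()
    if missing:
        raise ValueError(f"Missing scores for: {sorted(missing)}")
    for task in SUBTASKS:
        if not 0.0 <= scores[task] <= 1.0:
            raise ValueError(f"Score for {task} must be between 0 and 1.")
    return sum(scores[task] for task in SUBTASKS) / len(SUBTASKS)


if __name__ == "__main__":
    # Hypothetical model that finds and delivers the butter but fails the rest.
    example = {task: 0.0 for task in SUBTASKS}
    example["find_butter"] = 1.0
    example["deliver_butter"] = 1.0
    print(f"{total_accuracy(example):.0%}")
```

Reporting the per-segment scores alongside the total is usually more informative than the total alone, since it shows where in the task chain a model breaks down.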

Figure 2: AI and Hardware Workflow. The interaction loop between the user, LLM agents, generative AI, and robotic hardware in a closed-loop SDL.

The Scientist's Toolkit: Essential Research Reagents and Solutions

Beyond the core technology stack, the practical implementation of an automated synthesis platform relies on a suite of research reagents and software solutions.

Table: Key Research Reagents and Solutions for Automated Synthesis Platforms

| Item / Solution | Function / Role | Implementation Example |
|---|---|---|
| ChemOS 2.0 [8] | Software for orchestration and campaign management | Used to orchestrate an autonomous electrochemical campaign [8] |
| Custom Potentiostat [8] | Provides extensive control over electrochemical experiments for characterization | Core of an open, affordable autonomous electrochemical setup [8] |
| High-Value/Hazardous Materials [11] | The target molecules or precursors for synthesis and discovery | Low material usage in microfluidic/SDL platforms expands the explorable parameter space [11] |
| Slack Integration [13] [8] | Enables external communication and monitoring of the robotic agent | Used to capture robot "internal dialog" and task status [13] |
| Surrogate Benchmarks [11] | Digital functions (e.g., for Bayesian Optimization) to evaluate algorithm performance | Used to evaluate algorithm performance without physical experiments, saving cost [11] |


The integration of robotic hardware, generative AI, and LLM agents is creating a new paradigm for scientific discovery. The comparative data shows that there is no one-size-fits-all solution. The optimal technology stack depends on a careful cost-benefit analysis tailored to the research problem.

For labs with limited capital, an open-source hardware approach combined with a cost-effective LLM like Gemini 2.5 Flash offers a compelling entry point [8] [14]. The dominant trend, however, is moving toward closed-loop autonomy, where the synergy between robust hardware and sophisticated AI agents can achieve an experimental throughput and efficiency unattainable through human-led efforts [11]. While general-purpose LLMs currently outperform their robotics-specific counterparts in some holistic tests [13], the rapid evolution of models and the emphasis on contamination-free benchmarks will be crucial for selecting agents capable of genuine discovery in complex, real-world chemical spaces [15].

The integration of artificial intelligence (AI) into chemical and drug synthesis is catalyzing a fundamental shift in research and development (R&D) paradigms. This guide provides an objective comparison of AI-driven synthesis platforms against traditional methods, framed within a cost-benefit analysis. The market for AI in computer-aided synthesis planning (CASP) is projected to experience explosive growth, rising from USD 3.1 billion in 2025 to USD 82.2 billion by 2035, representing a compound annual growth rate (CAGR) of 38.8% [16]. This growth is primarily driven by the urgent need to reduce drug discovery timelines, lower R&D costs, and embrace sustainable green chemistry principles. The following sections detail the quantitative market drivers, compare platform efficiencies through experimental data, and outline the essential toolkit for modern research laboratories.

Market Growth and Financial Projections

The financial investment and projected growth in AI-driven synthesis are clear indicators of its transformative potential. The data underscores a significant shift in R&D spending towards intelligent, data-driven platforms.

Table 1: AI in Computer-Aided Synthesis Planning Market Forecast

| Metric | 2025 Value | 2035 Projected Value | CAGR (2026-2035) |
|---|---|---|---|
| Global Market Size | USD 3.1 billion [16] | USD 82.2 billion [16] | 38.8% [16] |
| Regional Leadership (2035 Share) | - | North America (38.7%) [16] | - |
| Fastest-Growing Region | - | Asia Pacific [16] | 20.0% (2026-2035) [16] |
| Dominant Application | - | Small Molecule Drug Discovery [16] | - |

Table 2: Broader AI and Generative AI Market Context

| Market Segment | 2025 Market Size | 2030/2032 Projected Value | CAGR |
|---|---|---|---|
| Overall AI Market | USD 371.71 billion [17] | USD 2,407.02 billion by 2032 [17] | 30.6% (2025-2032) [17] |
| Generative AI Market | - | USD 699.50 billion by 2032 [18] | 33.0% (2025-2032) [18] |
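The growth rates quoted in the two market tables can be sanity-checked with the standard compound annual growth rate formula, CAGR = (end / start)^(1 / years) - 1. The sketch below assumes 10 compounding years for the synthesis-planning market (2025 to 2035) and 7 for the overall AI market (2025 to 2032); the function name is illustrative.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate as a fraction (0.10 means 10% per year)."""
    if start_value <= 0 or end_value <= 0 or years <= 0:
        raise ValueError("Values and years must be positive.")
    return (end_value / start_value) ** (1.0 / years) - 1.0


if __name__ == "__main__":
    # Figures in USD billions, taken from the tables above.
    synthesis_planning = cagr(3.1, 82.2, 10)  # 2025 -> 2035
    overall_ai = cagr(371.71, 2407.02, 7)     # 2025 -> 2032
    print(f"Synthesis planning: {synthesis_planning:.1%}")
    print(f"Overall AI: {overall_ai:.1%}")
```

Both results agree with the tabulated rates to within rounding, which indicates the start values, end values, and quoted CAGRs are internally consistent.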

Key Market Drivers: A Cost-Benefit Analysis

The remarkable market growth is fueled by several key drivers that directly address the high costs and inefficiencies of traditional research methods.

- Accelerated Discovery Timelines: Traditional drug discovery typically takes 10 to 15 years at an average cost exceeding USD 2.6 billion per drug. AI application can reduce timelines by 30% to 50%, particularly in the preclinical discovery phase [16]. For instance, companies like Insilico Medicine have reported nominating preclinical candidates in an average of ~13 months, a fraction of the traditional 2.5–4 years [19].

- Rising Adoption of Green Chemistry: Regulatory pressures, such as the EU’s Green Deal, are driving the adoption of AI to design more sustainable and environmentally friendly synthesis routes, reducing the ecological footprint of chemical processes [16].

- Substantial Government and Private Investment: Significant funding is de-risking innovation in this sector. Venture capital investment in health AI in the U.S. reached USD 11 billion [16]. This is complemented by global government initiatives, such as the UK’s AI Research Resource (AIRR), aimed at boosting sovereign compute capacity for AI development [20].

Experimental Comparison: AI-Driven vs. Traditional Synthesis

The following experimental protocols and results provide a quantitative comparison of the efficiency gains offered by AI-driven synthesis platforms.

Experimental Protocol: Onepot's Automated Synthesis and AI Validation

This protocol details the methodology used by Onepot to validate its AI chemist, "Phil" [21].

- Objective: To assess the improvement in synthesis speed and efficiency for creating novel small molecules using a fully integrated AI and robotics platform compared to traditional manual methods.

- Platform Components:

  - AI Chemist ("Phil"): An AI system with direct control over the lab environment, capable of planning reactions, interpreting LC/MS data, and generating new hypotheses.

  - Automated Lab Infrastructure: Includes liquid handlers, plate sealers, plate-based reactions, and robotics in a glovebox for handling sensitive chemistries.

- Methodology:

  - Input: Target small molecules for pharmaceuticals or materials science.

  - AI Planning: The AI chemist designs synthesis routes and corresponding experimental procedures.

  - Automated Execution: Robotic systems execute the synthesis, handling reagent supply, reaction setup, workup, and purification.

  - Analysis & Learning: LC/MS and other analytical tools provide structured data back to the AI, which identifies byproducts, validates hypotheses, and refines subsequent experiments in a closed loop.

- Comparison Metric: Time from initial molecular idea to delivery of a physical compound batch was compared against industry-standard timelines for traditional contract research organizations (CROs).

Experimental Protocol: Efficiency Gains in Evidence Synthesis

This systematic review protocol quantifies workload efficiency from applying AI to research synthesis tasks [22].

- Objective: To quantify time and workload efficiencies of using AI-automation tools in systematic literature reviews (SLRs) versus human-led methods.

- AI Tools: Machine learning and natural language processing for screening citations, a rate-limiting step in SLRs.

- Methodology:

  - Dataset: A collection of published studies comparing manual and AI-assisted SLR processes.

  - AI Screening: Use of ML/NLP models to screen titles and abstracts for relevance.

  - Metric Calculation: Key metrics included Work Saved over Sampling at 95% recall (WSS@95%) and the percentage reduction in abstracts requiring human review.

- Comparison Metric: Workload and time-to-completion for the screening phase of SLRs.
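Work Saved over Sampling is commonly defined as WSS@R = (TN + FN) / N - (1 - R), where N is the total number of records and TN + FN is the number left unscreened once recall R has been reached. The sketch below computes it from a ranked list of relevance labels. It assumes reviewers screen in ranked order and stop as soon as the target recall is met; the function name and the example ranking are illustrative.

```python
import math


def wss_at_recall(ranked_labels: list[bool], recall: float = 0.95) -> float:
    """Work Saved over Sampling for records screened in ranked order.

    ranked_labels[i] is True if the i-th ranked record is truly relevant.
    """
    n_total = len(ranked_labels)
    n_relevant = sum(ranked_labels)
    if n_total == 0 or n_relevant == 0:
        raise ValueError("Need at least one record and one relevant record.")
    # Number of relevant records that must be found to reach the target recall
    # (small epsilon guards against floating-point error before rounding up).
    needed = math.ceil(recall * n_relevant - 1e-9)
    found = 0
    screened = 0
    for is_relevant in ranked_labels:
        screened += 1
        found += int(is_relevant)
        if found >= needed:
            break
    unscreened = n_total - screened  # equals TN + FN at the stopping point
    return unscreened / n_total - (1.0 - recall)


if __name__ == "__main__":
    # Hypothetical ideal ranking: 20 relevant records placed ahead of 80 others.
    ideal = [True] * 20 + [False] * 80
    print(round(wss_at_recall(ideal), 2))
```

A value near zero (or negative) means the ranking saved no work compared with screening records in random order.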

Results and Comparative Data

The implementation of the above protocols yielded significant performance improvements.

Table 3: Experimental Results Comparing AI and Traditional Methods

| Experiment | Traditional Workflow Result | AI-Driven Workflow Result | Efficiency Gain |
|---|---|---|---|
| Small Molecule Synthesis (Onepot) [21] | 6-12 weeks for candidate molecules | Receiving new molecules 6-10 times faster | 6x-10x acceleration |
| Literature Review Screening (Systematic Review) [22] | 100% manual abstract screening | 55%-64% decrease in abstracts to review | ~60% workload reduction |
| Work Saved over Sampling (WSS@95%) [22] | Baseline (0% saved) | 6- to 10-fold decrease in workload | Up to 90% workload reduction |

The Scientist's Toolkit: Essential Research Reagent Solutions

AI-driven synthesis relies on a combination of computational and physical components. Below is a table of key materials and their functions in this field.

Table 4: Essential Research Reagent Solutions for AI-Driven Synthesis

| Item | Function | Application Example |

|---|---|---|

| Proprietary AI Platforms (e.g., Schrödinger, BIOVIA, ChemPlanner) [16] | Core software for retrosynthesis analysis, reaction prediction, and molecular design. | Forms the intellectual property backbone for computer-aided synthesis innovation [16]. |

| Cloud-based AI Services (e.g., AWS Bedrock, Google Vertex AI) [17] | Provides scalable computing infrastructure and pre-trained foundation models, eliminating need for in-house data science teams. | Enables small and mid-sized enterprises to adopt enterprise-grade AI for synthesis planning [17]. |

| Liquid Handlers & Robotics [21] | Automated instruments for precise liquid dispensing and reaction setup in high-throughput experimentation. | Used in platforms like Onepot's to run many reactions in parallel without manual intervention [21]. |

| Open-Source Software (e.g., RDKit, DeepChem) [16] | Provides accessible libraries for cheminformatics and deep learning, democratizing AI capabilities. | Allows researchers to model molecular interactions and optimize drug candidates without commercial software licenses [16]. |

| Self-Driving Laboratory (SDL) Orchestration (e.g., ChemOS 2.0) [23] | Software to manage and integrate automated hardware and AI decision-making in a continuous loop. | Used in low-cost, open-source SDLs to coordinate experiments from synthesis to electrochemical characterization [23]. |

Workflow Visualization of an AI-Driven Synthesis Platform

The following diagram illustrates the integrated, cyclical workflow of a modern AI-driven synthesis platform, highlighting the closed-loop learning that enables continuous improvement.

AI-Driven Synthesis Workflow: This diagram illustrates the continuous "build-measure-learn" cycle of an AI-driven synthesis platform, where data from each experiment feeds back to improve the AI model's predictive power and decision-making for subsequent rounds.
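
The closed loop described above can be sketched as a plan, execute, analyze, and learn cycle. This is a toy illustration only: `simulated_yield` is a made-up stand-in for robotic execution plus LC/MS analysis, and the neighbor-search rule is a deliberately simple placeholder for the AI model, not the method used by any platform cited here.

```python
def simulated_yield(temperature_c):
    """Hypothetical response surface standing in for a real experiment (peak at 70 °C)."""
    return max(0.0, 90.0 - 0.05 * (temperature_c - 70.0) ** 2)

def closed_loop(start_temp_c=30.0, step_c=10.0, rounds=8):
    """Run a simple build-measure-learn loop and return the best condition and history."""
    best_temp = start_temp_c
    best_yield = simulated_yield(best_temp)  # initial experiment
    history = [(best_temp, best_yield)]

    for _ in range(rounds):
        # Plan: propose conditions on either side of the current best.
        candidates = [best_temp - step_c, best_temp + step_c]
        # Execute and analyze: "run" each candidate and record the measured yield.
        results = [(t, simulated_yield(t)) for t in candidates]
        history.extend(results)
        # Learn: keep the best condition seen so far; narrow the search if nothing improved.
        top_temp, top_yield = max(results, key=lambda r: r[1])
        if top_yield > best_yield:
            best_temp, best_yield = top_temp, top_yield
        else:
            step_c /= 2.0
    return best_temp, best_yield, history

best_temp, best_yield, history = closed_loop()
print(f"best condition: {best_temp:.1f} °C, yield {best_yield:.1f}%, {len(history)} experiments")
```

The point of the sketch is the data flow: every result, including the poor ones, is appended to `history` and informs the next proposal. A real platform would replace the neighbor search with a trained model or a Bayesian optimizer.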

The process of discovering and developing new therapeutics is underpinned by chemical synthesis. For decades, this foundation has relied on traditional, manual laboratory methods. However, as the pharmaceutical industry grapples with soaring R&D expenditures and declining productivity, the cost and inefficiency of these traditional synthesis methods have become a critical bottleneck. This guide provides an objective comparison between traditional synthesis and emerging automated platforms, framing the analysis within the broader context of the cost-benefit imperative facing modern drug development.

The R&D Productivity Crisis in Biopharma

Current biopharmaceutical R&D is characterized by intense activity but diminishing returns. Understanding this landscape is crucial to appreciating the impact of synthetic efficiencies.

- Rising Costs, Lower Output: The industry invests over $300 billion annually into R&D, supporting a pipeline of over 23,000 drug candidates. Despite this record investment, the success rate for drugs entering Phase 1 trials has plummeted to just 6.7% in 2024, down from 10% a decade ago [24].

- Strained Financials: The internal rate of return on R&D investment has fallen to 4.1%, well below the cost of capital. Furthermore, R&D margins are projected to decline from 29% to 21% of total revenue by 2030, squeezed by the shrinking commercial performance of new launches and rising costs per approval [24].

- The Patent Cliff: Compounding these issues, the industry is approaching the largest patent cliff in history, putting an estimated $350 billion of revenue at risk between 2025 and 2029 [24].

This productivity crisis underscores the urgent need for efficiencies across the R&D pipeline, starting with the foundational step of molecular synthesis.

Quantitative Comparison: Traditional vs. Automated Synthesis

The following tables summarize key performance indicators, highlighting the stark contrast between traditional methods and automated platforms.

Table 1: Comparing Synthesis Performance and Cost Metrics

| Performance Metric | Traditional Synthesis | Automated Synthesis Platforms |

|---|---|---|

| Typical Synthesis Scale | Milligram to gram scale [25] | Picomole scale (low ng to low μg) [25] |

| Time per Reaction | Hours to days [25] | ~45 seconds/reaction [25] |

| Success Rate for Analog Generation | Variable and technique-dependent | 64% (as demonstrated for 172 analogs) [25] |

| Reproducibility | Inconsistent between labs and chemists [26] | High, due to minimized human error and standardized protocols [27] |

| Material Collection Efficiency | N/A (Bulk processing) | 16 ± 7% (for a specific microdroplet system) [25] |

Table 2: Broader R&D and Economic Impacts

| Impact Area | Traditional Synthesis Workflow | Automated Synthesis Workflow |

|---|---|---|

| Drug Discovery Cost | Contributes to an average total of ~$2.5 billion per new drug [28] | Potential for significant cost reduction in early discovery [28] |

| Discovery Timeline | 12-15 years for full R&D pipeline [28] | Accelerated lead identification and optimization [27] |

| R&D Attrition | High; only 9-14% of molecules survive Phase I trials [28] | Aims for higher success rates via better, data-driven candidate selection [28] |

| Key Bottleneck | Long design-make-test cycles [28] | Speed of synthesis and testing enables rapid iteration [25] [27] |

Experimental Protocols: Illustrating the Paradigm Shift

Protocol 1: Traditional Medicinal Chemistry for Lead Optimization

This protocol exemplifies the time and resource-intensive nature of manual synthesis.

- Design: A medicinal chemist analyzes structure-activity relationship (SAR) data to propose new target analogs.

- Manual Synthesis:

- Setup: The reaction is assembled in a round-bottom flask under ambient atmosphere or inert gas.

- Execution: Reagents are added manually. The reaction proceeds with magnetic stirring for a prescribed time (hours to days), often requiring heating or cooling.

- Work-up: The reaction mixture is manually quenched and extracted using separatory funnels.

- Purification: The crude product is purified by manual flash column chromatography.

- Analysis: The final compound is characterized using techniques like NMR and LC-MS.

- Testing: The purified compound is submitted for biological assay (e.g., binding affinity, cellular activity).

This linear process is slow, and the "make" step often becomes the rate-limiting factor, preventing the rapid exploration of chemical space.

Protocol 2: Automated High-Throughput Microdroplet Synthesis

This modern protocol, derived from a recent study, demonstrates the principles of accelerated, automated synthesis [25].

- Precursor Array Preparation: Reaction mixtures are pre-deposited in a spatially defined, two-dimensional array on a solid surface. Each sample consists of multiple 50 nL spots, totaling ~450 nL of reactant mixture [25].

- Automated DESI Reaction and Transfer:

- An automated DESI (Desorption Electrospray Ionization) sprayer emits a stream of charged solvent microdroplets.

- The precursor array is rastered beneath the sprayer. When the spray impacts a sample spot, it desorbs secondary microdroplets containing the reactants.

- On-the-fly chemical transformations occur in these microdroplets during their millisecond-scale flight, accelerated by phenomena at the air-liquid interface [25].

- The reacted microdroplets are collected at the corresponding position on a product array (e.g., filter paper).

- Analysis and Collection: The collected products are analyzed directly from the array using techniques like nanoelectrospray MS or extracted for LC-MS/MS validation [25].

This system performs synthesis, reaction acceleration, and product collection in an integrated, automated fashion, achieving a throughput of approximately one reaction every 45 seconds [25].
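
To put the reported figures in perspective, the short calculation below converts the 45-second cycle time into reactions per hour and estimates the instrument time for the 172-analog set reported in [25]. It assumes reactions run back to back and ignores array preparation and downstream analysis, so it should be read as a lower bound on elapsed time rather than a measured value.

```python
SECONDS_PER_REACTION = 45   # reported cycle time [25]
ANALOGS_ATTEMPTED = 172     # analog set reported in [25]
SUCCESS_RATE = 0.64         # reported success rate [25]

# Assumption: reactions run back to back with no idle time between spots.
reactions_per_hour = 3600 / SECONDS_PER_REACTION
total_minutes = ANALOGS_ATTEMPTED * SECONDS_PER_REACTION / 60
expected_successes = round(ANALOGS_ATTEMPTED * SUCCESS_RATE)

print(f"{reactions_per_hour:.0f} reactions/hour")
print(f"{total_minutes:.0f} min of instrument time for {ANALOGS_ATTEMPTED} analogs")
print(f"~{expected_successes} analogs expected to form product")
```

Under these assumptions the platform runs 80 reactions per hour and covers the full analog set in a little over two hours, with roughly 110 analogs forming product.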

Visualizing the Synthesis Workflows

The following diagrams illustrate the logical relationships and fundamental differences between the two approaches.

Diagram 1: Traditional Synthesis is a linear, manual process with slow iteration, making the "make" step a major bottleneck [24] [28].

Diagram 2: Automated synthesis creates a tight, data-driven cycle where synthesis and analysis are fast and integrated, drastically reducing iteration time [25] [26] [27].

The Scientist's Toolkit: Key Research Reagent Solutions

The implementation of advanced synthesis protocols, particularly automated platforms, relies on specialized reagents and materials.

Table 3: Essential Reagents and Materials for Automated Synthesis

| Research Reagent/Material | Function in Experimental Protocol |

|---|---|

| Precursor Array Plates | Provides a solid support for the spatially defined, nanoliter-scale deposition of reactant mixtures, enabling high-throughput screening [25]. |

| DESI Spray Solvent | The charged solvent (e.g., aqueous/organic mixtures) used to create primary microdroplets that desorb and ionize reactants from the array surface, facilitating both the reaction and transfer [25]. |

| Collection Surface (Chromatography Paper) | Acts as the solid support for the product array, capturing the synthesized compounds after their microdroplet flight for subsequent analysis or storage [25]. |

| Internal Standards (e.g., Naltrexone) | Co-collected with reactants/products to enable accurate quantification of reaction conversion and collection efficiency via mass spectrometry [25]. |

| AI-Driven Synthesis Planning Software | Replaces labor-intensive manual research by using algorithms trained on millions of reactions to propose viable synthetic pathways and rank reagent choices [26]. |

The data and protocols presented herein objectively demonstrate that traditional chemical synthesis constitutes a significant and costly bottleneck in pharmaceutical R&D. Its manual, slow, and resource-intensive nature directly contributes to the industry's productivity crisis. In contrast, automated synthesis platforms offer a compelling alternative, delivering radical improvements in speed, efficiency, and the ability to generate high-quality data. The cost-benefit analysis is clear: integrating automation is no longer a niche advantage but a strategic necessity for de-risking R&D and building a more sustainable and productive drug discovery pipeline.

Core Components of a Cost-Benefit Analysis Framework for Research Platforms

The global synthesis platform market, valued at USD 2.14 billion in 2024, is undergoing a radical transformation driven by artificial intelligence and automation [29]. In pharmaceutical and chemical research, automated synthesis platforms have emerged as critical tools for accelerating discovery, with the AI sector in computer-aided synthesis planning alone projected to grow from USD 3.1 billion in 2025 to USD 82.2 billion by 2035, representing a staggering 38.8% compound annual growth rate [16]. This rapid expansion underscores the strategic importance of implementing rigorous cost-benefit analysis frameworks to guide investment decisions in research technologies.
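
The quoted growth rate can be checked with the standard compound annual growth rate formula, CAGR = (end / start)^(1 / years) − 1. The sketch below applies it to the USD 3.1 billion (2025) and USD 82.2 billion (2035) figures; the helper name `cagr` is an illustrative choice.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate as a fraction (0.10 means 10% per year)."""
    return (end_value / start_value) ** (1.0 / years) - 1.0

rate = cagr(3.1, 82.2, 10)          # USD billions, 2025 to 2035
implied_2026 = 3.1 * (1.0 + rate)   # one year of growth at that rate

print(f"CAGR 2025-2035: {rate:.1%}")
print(f"Implied 2026 market size: USD {implied_2026:.1f} billion")
```

The result reproduces the 38.8% figure, and the implied 2026 value of about USD 4.3 billion matches the forecast reported in [16].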

For researchers, scientists, and drug development professionals, these platforms offer unprecedented capabilities in predictive modeling, high-throughput experimentation, and data-driven discovery. However, their substantial capital requirements—with chemical robotics systems ranging from $50,000 to over $300,000—demand careful financial justification [3]. This guide establishes a comprehensive framework for evaluating automated synthesis platforms, comparing performance metrics across leading technologies, and quantifying both tangible and intangible returns on investment.

Market Context and Growth Drivers

Current Market Landscape

The adoption of automated synthesis technologies is accelerating across multiple research domains, particularly in pharmaceutical development where reducing discovery timelines provides significant competitive advantage. North America currently dominates the market with a projected 38.7% revenue share by 2035, though the Asia Pacific region is expected to expand at the fastest rate, stimulated by increasing adoption of AI-driven drug discovery and innovations in combinatorial chemistry [16].

Table 1: Global AI in Computer-Aided Synthesis Planning Market Forecast

| Metric | 2025 | 2026 | 2035 | CAGR (2026-2035) |

|---|---|---|---|---|

| Market Size | USD 3.1 billion | USD 4.3 billion | USD 82.2 billion | 38.8% |

| Regional Leadership | --- | --- | North America (38.7% share) | --- |

| Fastest Growing Region | --- | --- | Asia Pacific | 20.0% (2026-2035) |

Key Technological Drivers

Several interconnected technological trends are propelling the adoption of automated synthesis platforms:

AI and Machine Learning Integration: Algorithms now enable predictive modeling of synthesis pathways, significantly reducing trial-and-error experimentation. AI-powered platforms can autonomously design novel chemical structures with tailored properties, with some applications reducing specific drug discovery timelines from years to months [16] [30].

High-Throughput Automation: Robotic systems enable continuous operation without manual intervention, dramatically increasing experimental capacity. Modern platforms can perform hundreds of reactions in parallel while systematically recording both successful and failed attempts—creating comprehensive datasets essential for training robust AI models [31].

Data Infrastructure and FAIR Principles: Research Data Infrastructures (RDIs) built on FAIR principles (Findable, Accessible, Interoperable, Reusable) ensure experimental data is structured, machine-interpretable, and traceable across entire workflows [31]. This infrastructure transforms raw experimental data into validated knowledge graphs accessible through semantic query interfaces.

Core Components of Cost-Benefit Analysis

A robust cost-benefit framework for research platforms must account for both quantitative financial metrics and qualitative strategic advantages that impact research productivity and innovation capacity.

Capital and Operational Cost Structure

The total investment required for automated synthesis platforms extends beyond initial hardware acquisition to include integration, training, and ongoing operational expenses.

Table 2: Comprehensive Cost Analysis for Automated Synthesis Platforms

| Cost Component | Typical Range | Key Considerations |

|---|---|---|

| Hardware Acquisition | | |

| Lab-based systems | $50,000 - $150,000 | Benchtop units for automated synthesis, sample preparation |

| Industrial-scale robots | $200,000 - $300,000+ | Explosion-proof, ATEX-certified models for hazardous environments [3] |

| Integration & Installation | 20-40% of hardware cost | Specialized enclosures, corrosion-resistant components, safety systems |

| Annual Maintenance | 10-15% of hardware cost | Specialized training for chemical exposure degradation [3] |

| Operator Training | $5,000 - $20,000 initial | Programming, chemical process safety, emergency response |

| Data Management | Variable | LIMS integration, cloud storage, computational resources |

Quantitative Benefit Metrics

The financial justification for automated synthesis platforms derives from multiple dimensions of improved efficiency and productivity.

Table 3: Quantitative Benefit Metrics and Measurement Approaches

| Benefit Category | Measurement Approach | Typical Impact Range |

|---|---|---|

| Throughput Increase | Experiments per FTE month | 3-5x manual capacity [29] |

| Error Reduction | Batch rejection rates | 40-60% decrease [3] |

| Time Savings | Protocol development and execution | 30-50% reduction in discovery timelines [16] |

| Material Savings | Chemical consumption per experiment | 20-35% reduction through miniaturization |

| Labor Optimization | Researcher hours per experiment | 50-70% decrease in manual tasks [32] |

Qualitative Strategic Benefits

Beyond direct financial metrics, automated platforms deliver strategic advantages that strengthen long-term research capabilities:

Enhanced Reproducibility and Data Integrity: Automated platforms capture complete experimental context (reagents, conditions, instrument parameters, and negative results) in structured, machine-readable formats [31]. This comprehensive data capture supports end-to-end traceability and makes experiments reproducible across research teams.

Accelerated Innovation Cycles: By integrating robotic experimentation with AI-driven planning, researchers can rapidly iterate through design-make-test-analyze cycles. The Swiss Cat+ West hub exemplifies this approach, with automated workflows performing high-throughput chemistry experiments with minimal human input, generating volumes of data far exceeding manual capabilities [31].

Safety and Risk Mitigation: Automated systems reduce human exposure to hazardous materials through enclosed workcells with integrated leak sensors, negative-pressure ventilation, and emergency shutdown systems [3]. This protects personnel and minimizes operational disruptions from safety incidents.

Knowledge Preservation and Transfer: Structured data capture ensures experimental knowledge persists beyond individual researchers' tenure. Semantic modeling using ontology-driven approaches transforms experimental metadata into validated Resource Description Framework (RDF) graphs that remain queryable and reusable indefinitely [31].

Experimental Protocols and Methodologies

High-Throughput Experimentation Workflow

Automated synthesis platforms follow structured workflows that integrate digital planning, robotic execution, and analytical characterization. The following diagram illustrates a standardized protocol for high-throughput chemical experimentation:

High-Throughput Experimentation Workflow

This workflow, implemented at the Swiss Cat+ West hub, demonstrates the integration of automated synthesis with multi-stage analytical characterization [31]. The process begins with digital project initialization through a Human-Computer Interface (HCI) that structures input metadata in JSON format. Automated synthesis then proceeds using Chemspeed platforms under programmable conditions (temperature, pressure, stirring) with all parameters logged via ArkSuite software. Following synthesis, compounds enter a branching analytical path that directs samples based on detection signals and chemical properties, ensuring appropriate characterization while conserving resources on negative results. Crucially, even failed experiments generate structured metadata that contributes to machine learning datasets.
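
The branching analytical path can be pictured as a small routing function that writes its decision into the sample's JSON metadata. The sketch below is a simplified illustration under stated assumptions: the field names, the `route_sample` helper, and the exact decision rules are hypothetical and are not the Swiss Cat+ implementation; only the instrument names and the general LC-first, GC-on-no-detection, SFC-for-chiral logic come from this section.

```python
import json

def route_sample(lc_signal_detected, gc_signal_detected=False, is_chiral=False):
    """Return the ordered list of analytical steps for one sample (illustrative rules)."""
    steps = ["LC-DAD-MS-ELSD"]                   # primary screen for every sample
    if not lc_signal_detected:
        steps.append("GC-MS")                    # secondary screen when LC shows nothing
        if not gc_signal_detected:
            # Nothing detected anywhere: stop early, but still log the negative result.
            steps.append("record-negative-result")
            return steps
    if is_chiral:
        steps.append("SFC-DAD-MS-ELSD")          # chiral separation only where relevant
    return steps

# Hypothetical sample record; the keys are placeholders, not a real schema.
sample = {"sample_id": "demo-001", "lc_signal_detected": False,
          "gc_signal_detected": False, "is_chiral": True}
record = dict(sample, analysis_path=route_sample(
    sample["lc_signal_detected"], sample["gc_signal_detected"], sample["is_chiral"]))
print(json.dumps(record, indent=2))
```

Returning early for undetected samples is what conserves instrument time, while the logged `record-negative-result` step keeps failed experiments in the dataset used for later model training.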

Research Reagent Solutions and Essential Materials

Table 4: Key Research Reagents and Materials for Automated Synthesis

| Material | Function | Application Context |

|---|---|---|

| Chemspeed Synthesis Platforms | Automated parallel synthesis under controlled conditions | High-throughput reaction screening and optimization [31] |

| LC-DAD-MS-ELSD-FC Systems | Multi-detector liquid chromatography for reaction screening | Primary analysis providing quantitative information and retention times [31] |

| GC-MS Systems | Gas chromatography-mass spectrometry for volatile compounds | Secondary screening when LC methods show no detection [31] |

| SFC-DAD-MS-ELSD | Supercritical fluid chromatography for chiral separation | Enantiomeric resolution and stereochemistry characterization [31] |

| ASM-JSON Data Format | Allotrope Simple Model for structured data capture | Standardized instrument output for automated data integration [31] |

| Semantic Metadata (RDF) | Resource Description Framework for knowledge representation | Converting experimental metadata into machine-queryable graphs [31] |

AI-Assisted Research Synthesis Protocol

The integration of AI tools has transformed research synthesis methodologies, particularly for literature-based evidence synthesis:

AI-Assisted Research Synthesis Protocol

This protocol, derived from methodologies discussed at the NIHR CORE Information Retrieval Forum, demonstrates how AI tools are being integrated into evidence synthesis workflows [33]. The process begins with precise research question formulation, followed by AI-assisted search strategy development that can automate the translation of searches across databases with different syntax rules—a task that traditionally requires 5.4 hours on average and up to 75 hours for complex strategies [33]. AI-powered tools then screen retrieved documents, extract relevant data, and assist in evidence synthesis, while maintaining human oversight for validation and critical appraisal to address concerns about AI "hallucinations" and ensure methodological rigor.

Implementation Framework and ROI Analysis

Strategic Decision Framework

Selecting and implementing automated synthesis platforms requires careful consideration of multiple technical and operational factors:

Table 5: Strategic Decision Framework for Platform Selection

| Decision Factor | Critical Evaluation Questions | Red Flags |

|---|---|---|

| Data Requirements | Do we need production-derived or from-scratch data? | One-size-fits-all claims without use case specialization |

| Governance & Compliance | How will we meet EU AI Act and other regulatory obligations? | No clear deployment model or auditability features |

| Technical Capabilities | Can the tool maintain relational integrity across complex data? | Limited conditional sampling or unstable training performance |

| Scalability & Security | What happens when scale or security requirements increase? | Cloud-only vendor without VPC or private deployment options |

| Interoperability | Does the platform support FAIR data principles? | Proprietary data formats that create vendor lock-in |

Return on Investment Calculation

Most chemical robotics systems deliver ROI within 18-36 months, with faster payback in high-throughput environments [3]. The following calculation framework incorporates both direct and indirect benefits:

ROI Calculation Formula: \[ \text{ROI} = \frac{\text{Total Benefits} - \text{Total Costs}}{\text{Total Costs}} \times 100\% \]

Sample Calculation for Mid-Scale Installation:

- Total Costs (3-year horizon): $540,000

- Hardware: $300,000

- Integration: $90,000 (30% of hardware)

- Maintenance: $135,000 (3 years × $45,000/year)

- Training: $15,000

- Total Benefits (3-year horizon): $742,500

- Labor savings: $360,000 (2 FTE × $60,000/year × 3 years)

- Material savings: $157,500 ($52,500/year)

- Error reduction: $225,000 (3 avoided batch failures × $75,000 each)

- ROI: \(\frac{742{,}500 - 540{,}000}{540{,}000} \times 100\% = 37.5\%\)
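
A calculation like this is easiest to audit when the totals are derived from the itemized line items rather than typed in by hand. The sketch below recomputes costs, benefits, and ROI from the line items listed above. The simple payback estimate at the end is an added assumption: it treats hardware, integration, and training as up-front spend recovered from annual benefits net of maintenance.

```python
YEARS = 3
ANNUAL_MAINTENANCE = 45_000

costs = {
    "hardware": 300_000,
    "integration": 90_000,                     # 30% of hardware
    "maintenance": YEARS * ANNUAL_MAINTENANCE,
    "training": 15_000,
}
benefits = {
    "labor_savings": 2 * 60_000 * YEARS,       # 2 FTE x $60,000/year
    "material_savings": 52_500 * YEARS,
    "error_reduction": 3 * 75_000,             # 3 avoided batch failures
}

total_costs = sum(costs.values())
total_benefits = sum(benefits.values())
roi_percent = (total_benefits - total_costs) / total_costs * 100

# Simple payback (assumption): up-front spend divided by net annual benefit.
upfront = costs["hardware"] + costs["integration"] + costs["training"]
net_annual_benefit = total_benefits / YEARS - ANNUAL_MAINTENANCE
payback_months = upfront / net_annual_benefit * 12

print(f"Total costs:    ${total_costs:,}")
print(f"Total benefits: ${total_benefits:,}")
print(f"ROI:            {roi_percent:.1f}%")
print(f"Payback:        {payback_months:.0f} months")
```

Under these assumptions the simple payback works out to 24 months, inside the 18-36 month range cited above [3].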

Key factors that accelerate ROI include continuous 24/7 operation, high material value where waste reduction delivers significant savings, and improved product consistency that reduces rejected batches [3]. Organizations should also factor in strategic benefits such as accelerated time-to-market for new compounds—particularly valuable in pharmaceutical development where AI-assisted platforms can reduce discovery timelines by 30-50% in specific phases [16].

Future Outlook and Emerging Trends

The automated synthesis platform landscape continues to evolve rapidly, with several trends shaping future capabilities and cost-benefit considerations:

Agentic AI and Autonomous Experimentation: AI systems are evolving from assistive tools to autonomous "virtual coworkers" that can plan and execute multistep research workflows [34]. These agentic AI systems promise further reductions in researcher intervention while increasing experimental complexity and discovery potential.

Specialized Hardware Integration: Application-specific semiconductors are emerging to address the massive computational demands of AI-driven synthesis planning [34]. These specialized processors optimize performance for chemical simulation and pattern recognition tasks while managing power consumption and heat generation.

Democratization through Cloud-Based Platforms: Smaller research organizations are gaining access to sophisticated synthesis capabilities through cloud-based platforms and marketplace offerings, such as MOSTLY AI's availability on AWS Marketplace with flat-fee pricing of $3,000 per month [35]. This model reduces upfront capital requirements and makes advanced capabilities accessible to smaller teams.

Hybrid Human-AI Research Models: Successful integration of automated platforms increasingly follows a hybrid approach where AI handles repetitive, high-volume tasks while researchers focus on experimental design, interpretation, and complex decision-making [32] [33]. This model optimizes both efficiency and scientific creativity.

A comprehensive cost-benefit framework for automated synthesis research platforms must extend beyond simple financial calculations to encompass strategic research capabilities, data quality, and long-term innovation capacity. The most successful implementations balance sophisticated automation with human expertise, ensuring that technology augments rather than replaces researcher intuition and creativity. As platforms continue evolving toward greater autonomy and intelligence, organizations that establish rigorous evaluation frameworks today will be best positioned to capitalize on these advancements while maximizing return on research investments.

For research organizations considering automation, a phased implementation approach—beginning with pilot projects targeting specific high-value workflows—provides the opportunity to refine cost-benefit models with real-world data before committing to enterprise-wide deployment. This measured strategy maximizes learning while managing financial exposure, creating a pathway to sustainable research transformation through automation.

Methodology in Action: Integrating Automation into the Research Workflow

The adoption of automated synthesis platforms is transforming research laboratories, offering a compelling value proposition grounded in quantifiable improvements in speed, efficiency, and reproducibility. Within the broader context of a cost-benefit analysis for research institutions, this guide provides an objective comparison between automated platforms and traditional manual methods. The data presented herein, drawn from recent studies and market analyses, offers researchers, scientists, and drug development professionals an evidence-based framework for evaluating the return on investment of this transformative technology. The transition to automation is not merely a matter of convenience but a strategic imperative for enhancing experimental rigor, accelerating discovery timelines, and optimizing resource utilization.

Quantitative Performance Comparison

The performance advantages of automated platforms can be systematically measured and compared against manual techniques across several key metrics. The following tables summarize quantitative data from recent implementations and market forecasts.

Table 1: Comparative Performance of Automated vs. Manual Methods in Recent Studies

| Performance Metric | Manual Method | Automated Platform | Quantified Improvement | Source/Platform |

|---|---|---|---|---|

| Operator Workload | Baseline | 2-3x reduction | 50-67% decrease | AutoFSP [36] |

| Compositional Error | Variable, user-dependent | Within ±5% | High precision across orders of magnitude | AutoFSP (ZnxZr1−xOy) [36] |

| Experimental Throughput | Limited by human speed | Up to 1,200 measurements/hour | Dramatic increase in data generation | Microfluidic Spectral System [11] |

| Data Generation Rate | ~100 samples/hour (demonstrated) | ~1,200 measurements/hour (theoretical) | 12x potential increase | Microfluidic System [11] |

| Synthesis Documentation | Manual, prone to variation | Standardized, machine-readable | Enhanced reproducibility & traceability | AutoFSP [36] |

Table 2: Broader Market and Efficiency Trends in Laboratory Automation

| Metric Category | Specific Metric | Data / Statistic | Implication |

|---|---|---|---|

| Market Growth | Liquid Handling Systems Market (2024) | USD 3.99 billion [37] | Strong and established market presence |

| | Projected CAGR (2025-2034) | 5.69% [37] | Sustained and steady growth demand |

| | Automated Liquid Handling Robots Projected CAGR (2025-2033) | 10% [38] | Rapid adoption in high-throughput applications |

| Operational Efficiency | Operational Lifetime (Demonstrated Unassisted) | Up to 2 days (example) [11] | Requires consideration for continuous processes |

| | Operational Lifetime (Demonstrated Assisted) | Up to 1 month (example) [11] | Highlights potential for long-term studies with minimal intervention |

| Impact of Precision | Optimization Rate with High Precision | Significantly improved [11] | High data quality is critical for efficient algorithm-guided research |

Detailed Experimental Protocols

To understand the data behind the comparisons, it is essential to examine the methodologies of key studies demonstrating automation benefits.

Protocol: End-to-End Synthesis Development using an LLM-Based Framework

This protocol demonstrates a closed-loop system for developing a copper/TEMPO-catalyzed aerobic alcohol oxidation reaction [39].

- Objective: To autonomously guide the synthesis development process from literature search to product purification using a framework of six specialized AI agents (LLM-RDF) [39].

- Methodology:

- Literature Scouter Agent: Initiated with a natural language prompt to search the Semantic Scholar database for "synthetic methods that can use air to oxidize alcohols into aldehydes." The agent identified and recommended the Cu/TEMPO catalytic system based on sustainability, safety, and substrate compatibility criteria [39].

- Information Extraction: The relevant literature document was provided to the same agent, which summarized detailed experimental procedures, reagents, and catalyst options [39].

- High-Throughput Screening (HTS): The process involved several sub-tasks:

- Experiment Designer Agent: Designed the HTS experiments for substrate scope and condition screening.

- Hardware Executor Agent: Executed the experiments on automated experimental platforms.

- Spectrum Analyzer Agent: Performed gas chromatography (GC) analysis on the results.

- Result Interpreter Agent: Analyzed the HTS data to draw conclusions [39].

- Challenge Mitigation: The platform addressed reproducibility challenges such as solvent volatility and catalyst stability inherent in the original manual protocol [39].

- Outcome: The framework successfully managed the entire development process, showcasing its versatility across three distinct chemical reactions, thereby validating its broader applicability [39].

Protocol: Automated Catalyst Synthesis via Flame Spray Pyrolysis (AutoFSP)

This protocol outlines the automated synthesis of inorganic mixed-metal nanoparticles, a process frequently used for catalysts [36].

- Objective: To accelerate the discovery and optimization of nanoparticle catalysts while providing standardized, machine-readable documentation of all synthesis steps [36].

- Methodology:

- Platform Design: A novel robotic platform (AutoFSP) was constructed, integrating automated precursor preparation and flame spray pyrolysis synthesis.

- Precision and Accuracy: The platform was tested for its ability to produce nanoparticles with specific metal ratios, such as ZnxZr1−xOy and InxZr1−xOy, across two orders of magnitude.

- Performance Analysis: The compositional accuracy of the synthesized nanoparticles was analyzed, and the effective molar metal loading was calculated to determine relative error.

- Workload and Documentation: Operator time requirements for both manual and automated processes were tracked and compared. All synthesis parameters and outcomes were automatically recorded in a standardized digital format [36].

- Outcome: The AutoFSP platform achieved a compositional relative error within ±5%, reduced operator workload by a factor of 2-3, and eliminated human experimental error through superior documentation [36].
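The ±5% figure can be made concrete with a short calculation. The sketch below assumes that relative error is defined as (measured fraction − target fraction) / target fraction, where the fraction is the dopant metal's share of total metal; the source does not spell out its exact formula, and the function names and the numbers in the example are hypothetical.

```python
def metal_fraction(n_dopant: float, n_zr: float) -> float:
    """Molar fraction x of the dopant metal M in a mixed oxide of the form MxZr1-xOy."""
    total = n_dopant + n_zr
    if total <= 0:
        raise ValueError("total metal amount must be positive")
    return n_dopant / total


def relative_error(measured_x: float, target_x: float) -> float:
    """Signed relative deviation of the measured fraction from the target (assumed definition)."""
    if target_x == 0:
        raise ValueError("target fraction must be non-zero")
    return (measured_x - target_x) / target_x


def within_tolerance(measured_x: float, target_x: float, tol: float = 0.05) -> bool:
    """True if the absolute relative error is at most tol (0.05 corresponds to ±5%)."""
    return abs(relative_error(measured_x, target_x)) <= tol


# Hypothetical example: target x = 0.10, measured metal amounts in arbitrary molar units.
x_measured = metal_fraction(n_dopant=0.104, n_zr=0.896)
print(round(relative_error(x_measured, 0.10), 3), within_tolerance(x_measured, 0.10))
```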

Workflow and System Diagrams

The following diagrams illustrate the core operational logic of self-driving laboratories and a specific automated synthesis platform.

[Diagram: The Autonomous Experimentation Cycle]

[Diagram: Integrated Workflow of an LLM-Based Development Framework]

The Scientist's Toolkit: Research Reagent Solutions

Successful implementation of automated synthesis relies on a suite of core technologies and reagents. The following table details essential components for a typical automated high-throughput screening (HTS) workflow for chemical synthesis, as referenced in the experimental protocols.

Table 3: Key Research Reagent Solutions for Automated Synthesis

| Item / Solution | Function in Automated Workflow |
|---|---|
| Automated Liquid Handling Robot | Precisely dispenses reagents and catalysts in microliter-to-milliliter volumes for high-throughput reaction setup, enabling massive parallelization [38] [40]. |
| Cu/TEMPO Catalyst System | Serves as a model catalytic system for aerobic oxidations, frequently used in benchmark studies to validate automated platform performance [39]. |
| Metal Salt Precursors | Raw materials (e.g., Zn, Zr, In salts) for the automated synthesis of mixed-metal oxide nanoparticles via routes like flame spray pyrolysis [36]. |
| Modular Liquid-Handling Platform | A flexible workstation that can be equipped with ancillary modules like heaters, shakers, or centrifuges to perform complex, multi-step synthesis protocols without manual intervention [40]. |
| Gas Chromatography (GC) System | An inline or offline analysis instrument integrated into the platform for rapid determination of reaction conversion and yield, providing the data for closed-loop optimization [39]. |
| Laboratory Information Management System (LIMS) | Software that manages sample tracking, experimental data, and workflow definition, ensuring data integrity and reproducibility in regulated environments [40]. |

Accelerating the Design-Make-Test-Analyse (DMTA) Cycle

In modern drug discovery, the Design-Make-Test-Analyse (DMTA) cycle is a critical iterative process for developing new therapeutic compounds. For decades, however, this process has been slowing, a trend known as Eroom's Law: the observation that drug discovery is becoming slower and less productive over time, in direct opposition to the accelerating pace of technology [41]. This bottleneck is particularly pronounced in the "Make" phase, where the synthesis of target compounds often represents the most costly and time-consuming step [42] [43]. The pursuit of accelerated DMTA cycles is no longer merely an operational goal but a strategic necessity for the viability of pharmaceutical research and development.

This guide provides a comparative analysis of contemporary strategies and platforms designed to overcome these bottlenecks. By examining the integration of automation, artificial intelligence (AI), and novel workflows, we will objectively compare the performance of different acceleration approaches. The analysis is framed within a cost-benefit context, crucial for researchers, scientists, and drug development professionals making informed decisions about technology investments. We will summarize quantitative performance data, detail experimental protocols, and visualize key workflows to offer a comprehensive resource for modernizing drug discovery efforts.

Performance Comparison of DMTA Acceleration Strategies

The acceleration of the DMTA cycle can be pursued through two primary, non-mutually exclusive strategies: making each iteration faster, or reducing the number of iterations required to identify a viable clinical candidate [41]. The following table compares the quantitative performance and key characteristics of several advanced platforms and approaches currently reshaping the field.

Table 1: Comparative Analysis of DMTA Acceleration Platforms and Strategies

| Strategy/Platform | Key Technology/Feature | Reported Impact/Performance | Primary DMTA Phase Addressed |
|---|---|---|---|
| AI-Powered Synthesis Planning [42] | Machine Learning (ML), Retrosynthetic Analysis | Reduces planning time; identifies viable synthetic routes for complex molecules. | Design |
| Fully Automated Synthesis Systems [41] | Parallel automated synthesis, liquid handlers | Targets 1-10 mg of final compound; enables high-throughput "Make" phase for hit-to-lead. | Make |
| Direct-to-Biology (D2B) Workflow [44] | Testing unpurified reaction mixtures | Accelerates timelines from months to weeks; high agreement between unpurified/purified compound data. | Make, Test |
| AI-Driven Compound Design [41] | Generative AI models for de novo design | Designs compounds with good activity, drug-like properties, and synthetic feasibility, reducing failed iterations. | Design |
| Automated Data Workflows [45] | Integrated data ecosystems (e.g., Genedata Screener) | Automates data processing & analysis; supports AI/ML-driven candidate prioritization. | Analyse |
| High-Throughput Reaction Analysis [41] | Direct Mass Spectrometry (no chromatography) | Achieves ~1.2 seconds/sample throughput (vs. >1 min/sample for LCMS). | Test, Analyse |
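The per-sample throughput figures in the last row translate into per-plate times with simple arithmetic. The sketch below assumes a 384-well plate and treats 60 seconds per sample as a lower bound for LCMS, since the table only states ">1 min/sample"; the function name is illustrative.

```python
def plate_time_minutes(wells: int, seconds_per_sample: float) -> float:
    """Total analysis time for one plate, in minutes, at a fixed per-sample time."""
    return wells * seconds_per_sample / 60.0


WELLS = 384  # assumed plate format

direct_ms_min = plate_time_minutes(WELLS, 1.2)    # direct MS at ~1.2 s/sample
lcms_floor_min = plate_time_minutes(WELLS, 60.0)  # LCMS lower bound at 1 min/sample

print(f"Direct MS: about {direct_ms_min:.1f} min per plate")
print(f"LCMS: at least {lcms_floor_min / 60:.1f} h per plate")
print(f"Speed-up: at least {lcms_floor_min / direct_ms_min:.0f}x")
```

Under these assumptions a plate takes roughly 8 minutes by direct mass spectrometry versus more than 6 hours by LCMS, a speed-up of at least 50-fold.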

Detailed Experimental Protocols

To ensure reproducibility and provide a clear understanding of the technical foundations, this section outlines the detailed methodologies for two of the most impactful protocols cited in the comparison: the Direct-to-Biology workflow and the high-throughput reaction analysis.

Protocol 1: Direct-to-Biology (D2B) for Targeted Protein Degraders

The D2B protocol bypasses the traditional purification bottleneck, allowing for the rapid biological testing of newly synthesized compounds. The following workflow diagram illustrates the key stages of this process.

[Diagram: Direct-to-Biology (D2B) Experimental Workflow]

1. Design Phase [44]:

- Objective: Design a library of bifunctional targeted protein degraders.

- Procedure:

- Utilize a diverse toolbox of E3 ligase binders (e.g., for CRBN, VHL).

- Design linkers extended from different vectors, incorporating a mix of flexible and rigid linkers of varying lengths.

- Prioritize designs for favorable physicochemical properties and broad applicability.

2. Synthesis & "Make" Phase [44]:

- Objective: Synthesize target compounds in a format compatible with direct biological testing.

- Microscale Synthesis:

- Reaction Vessel: Perform reactions in 96-well or 384-well plates.

- Reaction Scale: Use a reaction mixture volume of approximately 50 μL, containing <1 mg of reactants per well.

- Validation: Determine reaction success (conversion) via LC-MS analysis.

3. Direct-to-Biology Transfer:

- Objective: Prepare the reaction mixture for biological assay without purification.

- Procedure: Directly transfer an aliquot of the unpurified reaction mixture into the biological assay system.

4. Biological and Physicochemical Testing [44]:

- Objective: Assess the biological activity and key properties of the unpurified compounds.

- Biological Assay:

- Assay Type: Employ a suite of Targeted Protein Degradation (TPD) assays.

- Format: Use a multiplex assay that simultaneously measures target degradation (e.g., % degradation, DC50) and cytotoxicity.

- Data Output: Generate single-point degradation data and concentration-response curves (DC50) directly from unpurified mixtures.

- Physicochemical Testing:

- Assay: Perform assays such as EPSA, a chromatographic measure of exposed polar surface area, to assess permeability-related properties.

- Validation: Confirm that results from unpurified compounds show high agreement with data from purified compounds.

5. Analysis and Hit Follow-up [44]:

- Objective: Identify hits and confirm activity.

- Data Analysis: Analyze degradation and cytotoxicity data to establish Structure-Activity Relationships (SAR) and select hits.

- Hit Confirmation:

- Option 1 (Micropurification): Perform rapid micropurification of the active D2B reaction mixtures for retesting.

- Option 2 (Resynthesis): Formally resynthesize and purify the hit compound for definitive validation.

- Success Criteria: Concentration-response curves of unpurified, micropurified, and purified compounds should be in excellent agreement.
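The agreement check in the success criteria can be illustrated by estimating a DC50 from each concentration-response series and comparing the two values. This is a minimal sketch under stated assumptions: it uses log-linear interpolation between the two points that bracket 50% degradation rather than the curve fitting (for example, a four-parameter logistic model) that such data would normally receive, the function names are hypothetical, and the data points are invented purely for illustration. The source does not specify a numerical threshold for "excellent agreement", so none is applied here.

```python
import math
from typing import Sequence


def dc50_log_interp(concs_nM: Sequence[float], pct_degradation: Sequence[float]) -> float:
    """Estimate DC50 by linear interpolation on log10(concentration).

    Assumes concentrations are positive and ascending, and that the response
    rises through 50% degradation between two adjacent points.
    """
    points = list(zip(concs_nM, pct_degradation))
    for (c_lo, d_lo), (c_hi, d_hi) in zip(points, points[1:]):
        if d_lo < 50.0 <= d_hi:
            frac = (50.0 - d_lo) / (d_hi - d_lo)
            log_c = math.log10(c_lo) + frac * (math.log10(c_hi) - math.log10(c_lo))
            return 10 ** log_c
    raise ValueError("response does not cross 50% degradation")


def fold_difference(dc50_a: float, dc50_b: float) -> float:
    """Ratio of the larger to the smaller DC50 (1.0 means identical)."""
    return max(dc50_a, dc50_b) / min(dc50_a, dc50_b)


# Invented illustration data (not from the cited study).
concs = [1.0, 10.0, 100.0, 1000.0]                             # nM
unpurified = dc50_log_interp(concs, [10.0, 30.0, 70.0, 90.0])
purified = dc50_log_interp(concs, [10.0, 35.0, 75.0, 90.0])

print(f"unpurified DC50 ~ {unpurified:.1f} nM, purified DC50 ~ {purified:.1f} nM")
print(f"fold difference ~ {fold_difference(unpurified, purified):.2f}")
```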

Protocol 2: High-Throughput Reaction Analysis via Direct Mass Spectrometry

This protocol, developed by the Blair group, drastically accelerates the analysis of reaction outcomes, which is a common bottleneck in the "Test" phase of synthesis optimization [41].

1. Reaction Setup [41]:

- Objective: Prepare a large number of reaction condition variations for screening.

- Procedure:

- Use automated liquid handlers to set up reactions in a parallel format (e.g., in a 384-well plate).

- The system allows for rapid variation of parameters like catalysts, ligands, solvents, and substrates.

2. Reaction Execution:

- Objective: Carry out the chemical reactions under controlled conditions.

- Procedure: Allow reactions to proceed under the specified conditions (e.g., temperature, time).

3. High-Throughput Sample Analysis [41]:

- Objective: Analyze reaction outcomes with maximum speed.

- Technology: Employ a direct mass spectrometry method that avoids the slow step of liquid chromatography.

- Procedure:

- Sample Introduction: Automatically introduce a small aliquot from each reaction well directly into the mass spectrometer.

- Ionization & Mass Analysis: Use soft ionization techniques to generate ions of the reaction components without significant fragmentation. Observe diagnostic mass-to-charge (m/z) ratios for the desired product, starting materials, and potential by-products.

- Success/Failure Determination: Determine reaction success based on the presence of diagnostic fragmentation patterns or ions corresponding to the desired product.

- Performance: This method achieves a throughput of approximately 1.2 seconds per sample, enabling the analysis of a 384-well plate in about 8 minutes.

4. Data Integration and Model Building:

- Objective: Use the rapid analytical data to inform and improve reaction prediction models.

- Procedure: Feed the high-throughput success/failure data into machine learning models to predict the outcomes of new, untested reactions with accuracy comparable to expert chemists [41].
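The success/failure call in step 3 can be sketched as a tolerance match between observed peaks and the expected m/z of the product ion. This is a simplified illustration, not the cited group's method: it checks only for the presence of a single expected ion (not fragmentation patterns), the 10 ppm tolerance is an assumed default, and all names and m/z values are hypothetical.

```python
from typing import Iterable


def ppm_error(observed_mz: float, expected_mz: float) -> float:
    """Mass error of an observed peak relative to the expected m/z, in parts per million."""
    return (observed_mz - expected_mz) / expected_mz * 1e6


def has_ion(peaks_mz: Iterable[float], expected_mz: float, tol_ppm: float = 10.0) -> bool:
    """True if any observed peak lies within tol_ppm of the expected m/z."""
    return any(abs(ppm_error(mz, expected_mz)) <= tol_ppm for mz in peaks_mz)


def classify_reaction(peaks_mz: Iterable[float], product_mz: float, tol_ppm: float = 10.0) -> str:
    """Binary label of the kind that could be fed to a downstream prediction model."""
    return "success" if has_ion(peaks_mz, product_mz, tol_ppm) else "failure"


# Hypothetical peak lists for two wells and a hypothetical product ion at m/z 300.1000.
PRODUCT_MZ = 300.1000
print(classify_reaction([150.0500, 300.1010], PRODUCT_MZ))  # peak about 3.3 ppm from target
print(classify_reaction([150.0500, 300.2000], PRODUCT_MZ))  # nearest peak about 333 ppm away
```

Reducing each well to a binary label keeps the per-sample computation trivial, which suits an analysis step that runs at about one sample per second.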

Workflow Visualization: The AI-Digital-Physical DMTA Cycle

The most significant evolution in the DMTA cycle is the move towards a fully integrated, data-driven system where the physical, digital, and AI-driven components work in concert. The following diagram maps this interconnected workflow.

[Diagram: Integrated AI-Digital-Physical DMTA Workflow]

This workflow illustrates a modern, bidirectional cycle where:

- The AI & Digital Layer operates continuously, using FAIR (Findable, Accessible, Interoperable, Reusable) data to power predictive models and generative AI for compound design [42] [46].

- The Physical Experimentation Layer executes the designed experiments with high efficiency using automation.

- The key to acceleration is the seamless flow of data from physical experiments back to the digital repository, which refines the AI models, leading to better designs in the next iteration and reducing the number of cycles needed [46].

The Scientist's Toolkit: Essential Research Reagent Solutions

The successful implementation of accelerated DMTA cycles relies on a suite of specific reagents, tools, and platforms. The following table details key solutions that form the backbone of these advanced workflows.

Table 2: Essential Research Reagent Solutions for Accelerated DMTA Cycles

| Tool/Reagent Category | Specific Examples / Key Features | Primary Function in DMTA Cycle |

|---|---|---|

| Building Block (BB) Collections [42] | Enamine, eMolecules, Chemspace; "Make-on-Demand" (MADE) virtual catalogues. | Provides rapid access to diverse, high-quality chemical starting materials for synthesis. |

| AI Synthesis Planning Platforms [42] | Computer-Assisted Synthesis Planning (CASP) using Monte Carlo Tree Search; "Chemical Chatbots". | Augments human intuition for planning viable multi-step synthetic routes to target molecules. |