How Robotic Platforms Are Accelerating Chemical Discovery: AI, Automation, and the Future of Lab Science

Abstract

This article explores the transformative integration of robotic platforms, artificial intelligence, and automation in chemical and drug discovery. Aimed at researchers and development professionals, it details the foundational principles of self-driving labs, their application in methodologies from high-throughput screening to autonomous synthesis, and the practical challenges of implementation. It further examines the growing body of validation data, including accelerated timelines and compounds entering clinical trials, providing a comprehensive overview of how these technologies are reshaping scientific discovery and future research paradigms.

The New Lab Partner: Understanding Robotic and AI Foundations

The field of chemical and materials research is undergoing a profound transformation with the emergence of autonomous laboratories, which represent a fundamental shift from traditional manual, trial-and-error experimentation to an AI-driven, accelerated research paradigm. These self-driving labs (SDLs) are automated robotic platforms integrated with artificial intelligence to execute experiments, interact with robotic systems, and manage data, thereby closing the predict-make-measure discovery loop [1] [2]. This approach addresses a critical challenge in modern research: while computational methods can predict hundreds of thousands of novel materials, experimental validation remains a slow, labor-intensive process [3]. Autonomous laboratories are poised to bridge this gap, dramatically accelerating the discovery of new materials for clean energy, electronics, and pharmaceuticals while significantly reducing resource consumption and waste [4] [2].

By combining robotics, artificial intelligence, and domain knowledge, SDLs reach research velocities that manual work cannot match. Operating continuously and autonomously, these systems can process 50 to 100 times as many samples per day as a human researcher, potentially raising the rate of materials discovery 10- to 100-fold over conventional methods [5] [6]. The gain is not only one of speed: AI-guided systems iterate rapidly through design-make-test-learn cycles and refine their approach after each experimental outcome, which changes how the scientific process itself is organized [5].

Core Architecture of Autonomous Laboratories

Fundamental Components and System Integration

The architecture of an autonomous laboratory is built upon three tightly integrated core components that work in concert to enable closed-loop operation. This integration creates a seamless workflow where computational predictions guide physical experiments, and experimental results inform subsequent computational analysis.

Table 1: Core Components of Autonomous Laboratories

| Component | Function | Key Technologies |
|---|---|---|
| Hardware & Robotics | Executes physical experiments and measurements | Robotic arms, liquid handlers, furnaces, synthesizers, analytical instruments (XRD, spectrophotometers) |
| AI & Machine Learning | Plans experiments, analyzes data, decides next actions | Bayesian optimization, generative models, active learning, natural language processing, computer vision |
| Software & Data Infrastructure | Manages workflow, stores data, facilitates communication | Laboratory Information Management Systems (LIMS), application programming interfaces (APIs), cloud computing platforms |

The hardware component encompasses the physical robotic systems that perform experimental procedures. For inorganic materials synthesis, this might include robotic arms for transferring samples, box furnaces for heating, and automated X-ray diffraction (XRD) stations for characterization [3]. In pharmaceutical applications, liquid handling robots automate the precise mixing of drugs and excipients for formulation discovery [7]. These systems operate in environments designed specifically for automated workflows: the A-Lab at Lawrence Berkeley National Laboratory occupies 600 square feet and contains 3 robotic arms, 8 furnaces, and access to approximately 200 powder precursors [5].

The artificial intelligence component serves as the "brain" of the autonomous laboratory, making critical decisions about which experiments to perform next. Machine learning algorithms, particularly Bayesian optimization (BO), are frequently employed to efficiently navigate complex experimental spaces [7]. These algorithms leverage data from previous experiments to build surrogate models of the experimental landscape, then select subsequent experiments that balance exploration of unknown regions with exploitation of promising areas [2]. For materials synthesis, AI systems may also incorporate natural language processing models trained on historical literature to propose initial synthesis recipes based on analogy to known materials [3].
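The exploration/exploitation trade-off described above can be illustrated with a minimal sketch of a single Bayesian optimization step. It assumes a one-dimensional input, a zero-mean Gaussian-process surrogate with a squared-exponential kernel, and an upper-confidence-bound acquisition rule. The kernel length scale, the `kappa` weight, and the example data are illustrative assumptions, not values from any cited platform.

```python
import math

def rbf(a, b, length=0.2):
    """Squared-exponential kernel between two scalar inputs (length scale is assumed)."""
    return math.exp(-0.5 * ((a - b) / length) ** 2)

def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        s = sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (M[i][n] - s) / M[i][i]
    return x

def gp_posterior(xs, ys, x_new, noise=1e-6):
    """Posterior mean and standard deviation of a zero-mean GP at x_new."""
    K = [[rbf(a, b) + (noise if i == j else 0.0) for j, b in enumerate(xs)]
         for i, a in enumerate(xs)]
    k_star = [rbf(a, x_new) for a in xs]
    alpha = solve(K, ys)          # K^-1 y
    v = solve(K, k_star)          # K^-1 k*
    mean = sum(k * a for k, a in zip(k_star, alpha))
    var = max(rbf(x_new, x_new) - sum(k * w for k, w in zip(k_star, v)), 0.0)
    return mean, math.sqrt(var)

def next_experiment(xs, ys, candidates, kappa=2.0):
    """Pick the candidate with the highest upper confidence bound (mean + kappa * std)."""
    def ucb(x):
        mean, std = gp_posterior(xs, ys, x)
        return mean + kappa * std
    return max(candidates, key=ucb)

# Hypothetical data: three normalized conditions already tested, with measured responses.
xs = [0.1, 0.5, 0.9]
ys = [0.2, 0.8, 0.3]
candidates = [i / 20 for i in range(21)]
print("next condition to test:", next_experiment(xs, ys, candidates))
```

A larger `kappa` favors untested regions where the surrogate is uncertain, and a smaller one favors conditions near the current best. Production systems typically rely on a dedicated optimization library rather than a hand-written surrogate.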

The software infrastructure forms the connective tissue that enables communication between all components. A central management system, often controlled through an application programming interface (API), coordinates the activities of various instruments and robotic systems [3]. This software architecture enables on-the-fly job submission and dynamic reconfiguration of experimental plans based on incoming results. Cloud computing platforms are increasingly integrated into these systems, as demonstrated by Exscientia's implementation of an AI-powered platform built on Amazon Web Services (AWS) that links generative-AI "DesignStudio" with robotic "AutomationStudio" [8].
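As a rough illustration of on-the-fly job submission and dynamic reconfiguration, the following sketch models a central scheduler as an in-memory priority queue. The class, method names, and payload fields are hypothetical and do not reflect the actual A-Lab or Exscientia interfaces.

```python
import heapq
import itertools
from dataclasses import dataclass, field

@dataclass(order=True)
class Job:
    priority: int                      # lower numbers run first
    seq: int                           # submission order breaks priority ties
    station: str = field(compare=False)
    payload: dict = field(compare=False)

class LabScheduler:
    """Hypothetical in-memory job queue standing in for a lab management API."""

    def __init__(self):
        self._heap = []
        self._counter = itertools.count()

    def submit(self, station, payload, priority=10):
        """Queue a job at any time, including while other jobs are pending."""
        job = Job(priority, next(self._counter), station, payload)
        heapq.heappush(self._heap, job)
        return job.seq

    def cancel_for_target(self, target):
        """Drop queued jobs for a target, e.g. once it has been made successfully."""
        self._heap = [j for j in self._heap if j.payload.get("target") != target]
        heapq.heapify(self._heap)

    def next_job(self):
        """Return the most urgent queued job, or None if the queue is empty."""
        return heapq.heappop(self._heap) if self._heap else None

scheduler = LabScheduler()
scheduler.submit("furnace", {"target": "compound_A", "temperature_c": 900})
scheduler.submit("xrd", {"target": "compound_B"}, priority=1)
print(scheduler.next_job().station)  # the higher-priority XRD job is served first
```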

The Closed-Loop Workflow

The defining feature of autonomous laboratories is a continuous closed-loop workflow that iterates through cycles of prediction, experimentation, and learning. This self-correcting, adaptive process is what distinguishes SDLs from laboratories that are merely automated.

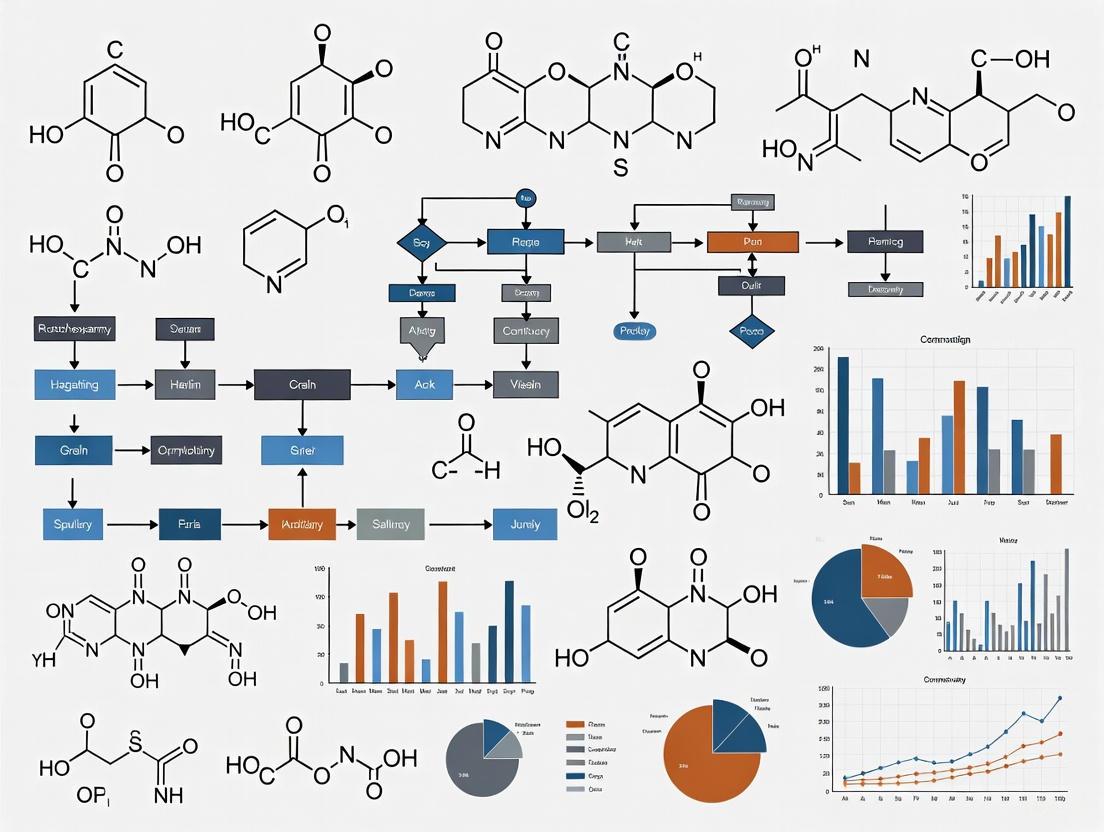

Diagram 1: Closed-Loop Workflow in Autonomous Laboratories

The process begins with researchers defining a clear research goal, such as synthesizing a specific novel material or optimizing a pharmaceutical formulation for maximum solubility [3] [7]. The AI system then proposes an initial set of experiments based on available data, computational predictions, or historical knowledge. For novel materials with no prior synthesis data, the system might use natural language processing models trained on scientific literature to identify analogous syntheses and propose precursor combinations and reaction conditions [3].

Robotic systems subsequently execute the proposed experiments, handling tasks such as dispensing and mixing precursor powders, heating samples in furnaces, or preparing liquid formulations using liquid handling robots [3] [7]. This automation enables continuous operation, with systems like the A-Lab functioning 24/7 for extended periods [5]. After experiments are completed, integrated characterization systems automatically analyze the results. For materials synthesis, this typically involves X-ray diffraction to identify crystalline phases and determine yield, while pharmaceutical applications might use spectrophotometers to measure drug solubility [3] [7].

The data analysis phase employs machine learning algorithms to interpret characterization results. For XRD patterns, probabilistic ML models trained on experimental structures can identify phases and quantify weight fractions automatically [3] [6]. The analyzed results then feed into the AI decision-making engine, which updates its models of the experimental landscape and applies active learning algorithms to propose the next most informative experiments. This continuous learning process enables the system to rapidly converge toward optimal solutions, as demonstrated by the A-Lab's use of its ARROWS³ (Autonomous Reaction Route Optimization with Solid-State Synthesis) algorithm to identify synthesis routes with improved yield when initial recipes failed [3].
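The cycle described in this section can be sketched as a short loop. In this toy version a simulated yield function stands in for robotic synthesis and characterization, and a simple greedy step search stands in for the AI planner; it is not ARROWS³ or Bayesian optimization. The temperature values, the yield model, and the 0.9 stopping threshold are all illustrative assumptions.

```python
def simulated_yield(temperature_c):
    """Stand-in for synthesis plus characterization: yield peaks at 900 °C (assumed)."""
    return max(0.0, 1.0 - abs(temperature_c - 900.0) / 400.0)

def closed_loop(target_yield=0.9, max_iterations=20):
    """Iterate propose -> make/measure -> learn until the goal is met."""
    history = []               # (temperature, yield) for every experiment run
    temperature = 600.0        # initial proposal
    step = 100.0
    for _ in range(max_iterations):
        measured = simulated_yield(temperature)         # make and measure
        history.append((temperature, measured))         # record the result
        best_t, best_y = max(history, key=lambda r: r[1])
        if best_y >= target_yield:                      # goal reached, stop
            break
        tried = {t for t, _ in history}
        options = [t for t in (best_t + step, best_t - step) if t not in tried]
        if not options:                                 # both neighbors tested
            step /= 2
            options = [best_t + step]
        temperature = options[0]                        # next proposal
    return history

for temperature, measured in closed_loop():
    print(f"{temperature:.0f} °C -> yield {measured:.2f}")
```

Each pass feeds the accumulated history back into the next proposal, which is the property that separates a closed loop from a fixed, pre-planned screen.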

Implementation Approaches and Methodologies

Varying Degrees of Autonomy

Autonomous laboratories exist along a spectrum of autonomy, from fully self-driving systems that operate with minimal human intervention to semi-autonomous platforms that combine automated workflows with strategic human guidance. The choice of implementation depends on the specific research domain, available resources, and complexity of the experimental procedures.

Fully self-driving laboratories represent the most advanced implementation, where human researchers have almost no input to the workflow once the research goal is defined [7]. The A-Lab at Lawrence Berkeley National Laboratory exemplifies this approach, having successfully synthesized 41 novel compounds from 58 targets during 17 days of continuous operation without human intervention [3]. Similarly, researchers at North Carolina State University demonstrated a fully autonomous system that used dynamic flow experiments to collect at least 10 times more data than previous techniques while sharply reducing both time and chemical consumption [4]. These systems implement complete design-make-test-analyze cycles, with AI algorithms making all decisions about which experiments to perform next based on incoming data.

Semi-self-driving or semi-closed-loop systems represent a hybrid approach where the bulk of experimental work is automated, but key components still require human intervention [7]. This approach lowers barriers to adoption by reducing the need for comprehensive robotics while still leveraging the power of AI-driven experimentation. An example is the semi-self-driving robotic formulator used for pharmaceutical development, where automated liquid handling robots prepare formulations and spectrophotometers characterize them, but researchers manually transfer well plates between devices and load powder into plates [7]. This system tested 256 formulations from a possible 7776 combinations (approximately 3.3% of the total space) and identified 7 lead formulations with high solubility in just a few days [7].

Experimental Protocols Across Applications

The implementation of autonomous laboratories varies significantly across different research domains, with specialized methodologies developed for specific applications ranging from inorganic materials synthesis to pharmaceutical formulation.

Solid-State Materials Synthesis Protocol

The A-Lab's protocol for synthesizing novel inorganic powders demonstrates the application of autonomous methodology to solid-state materials:

- Target Identification: Researchers select target materials predicted to be stable using computational resources like the Materials Project, filtering for air-stable compounds that will not react with O₂, CO₂, or H₂O during handling [3].

- Recipe Generation: The system proposes up to five initial synthesis recipes using machine learning models that assess target "similarity" through natural-language processing of a large database of literature syntheses [3]. A synthesis temperature is proposed by a second ML model trained on heating data from literature [3].

- Automated Synthesis:

  - Precursor powders are automatically dispensed and mixed by robotic systems

  - Mixtures are transferred to alumina crucibles and loaded into one of four box furnaces

  - Samples are heated according to proposed temperature profiles [3]

- Characterization and Analysis:

  - After cooling, robotic arms transfer samples to an XRD station

  - Samples are ground into fine powder and measured by XRD

  - Probabilistic ML models analyze diffraction patterns to identify phases and weight fractions

  - Automated Rietveld refinement confirms phase identification [3]

- Iterative Optimization: If initial recipes fail to produce >50% yield, the active learning algorithm (ARROWS³) proposes improved follow-up recipes based on observed reaction pathways and thermodynamic driving forces [3].
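The control flow of this protocol (up to five literature-inspired recipes, then follow-up proposals only if none exceeds 50% yield) can be sketched as below. The function names, the follow-up cap, and the example yields are hypothetical; the real follow-up proposals come from ARROWS³, which is represented here only by a caller-supplied function.

```python
YIELD_THRESHOLD = 0.5      # success criterion stated in the protocol (>50% yield)
MAX_INITIAL_RECIPES = 5    # "up to five initial synthesis recipes"

def synthesize_target(initial_recipes, propose_follow_up, measure_yield, max_follow_ups=5):
    """Run the initial recipes, then request follow-ups while no recipe succeeds.

    propose_follow_up(results) returns a new recipe or None; max_follow_ups is an
    assumed safety cap, not a documented A-Lab parameter.
    """
    results = {r: measure_yield(r) for r in initial_recipes[:MAX_INITIAL_RECIPES]}
    follow_ups = 0
    while max(results.values()) <= YIELD_THRESHOLD and follow_ups < max_follow_ups:
        recipe = propose_follow_up(results)
        if recipe is None:             # planner has nothing further to suggest
            break
        results[recipe] = measure_yield(recipe)
        follow_ups += 1
    best = max(results, key=results.get)
    return best, results[best], follow_ups

# Hypothetical yields for three recipes; "C" is only reachable as a follow-up.
fake_yields = {"A": 0.1, "B": 0.3, "C": 0.8}
planner = lambda results: "C" if "C" not in results else None
print(synthesize_target(["A", "B"], planner, fake_yields.get))
```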

Pharmaceutical Formulation Discovery Protocol

The protocol for semi-self-driven discovery of medicine formulations demonstrates how autonomous methodology applies to pharmaceutical development:

- Experimental Space Definition: Researchers define the formulation space by selecting approved excipients (e.g., Tween 20, Tween 80, Polysorbate 188, dimethylsulfoxide, propylene glycol) and concentration ranges (0%, 1%, 2%, 3%, 4%, 5%), creating a potential search space of 7776 combinations for a 5-excipient system [7].

- Seed Dataset Generation: A diverse initial dataset of 96 formulations (generated in triplicate) is created using k-means clustering to ensure broad coverage of the experimental space [7].

- Automated Formulation and Testing:

  - A liquid handling robot automatically prepares formulations according to designed experiments

  - Samples are centrifuged and diluted using liquid-handling robotics

  - A spectrophotometer plate reader characterizes solubility through absorbance measurements [7]

- Bayesian Optimization Loop:

  - An automated script runs Bayesian optimization to design the next experiment batch

  - The algorithm selects 32 formulations per iteration expected to maximize solubility

  - The process repeats for multiple learning loops [7]

- Lead Validation: Promising formulations identified by the system are manually prepared in triplicate and re-characterized to confirm performance [7].
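The numbers in this protocol can be checked with a few lines of arithmetic. The sketch below enumerates the design space from the six concentration levels and five excipients and derives how many 32-formulation batches fit between the 96-formulation seed set and the 256 formulations tested in total. The loop count is derived here from those stated totals rather than quoted from the source.

```python
from itertools import product

LEVELS_PERCENT = (0, 1, 2, 3, 4, 5)   # concentration levels from the protocol
N_EXCIPIENTS = 5
SEED_SIZE = 96                        # k-means-selected seed formulations
BATCH_SIZE = 32                       # formulations proposed per optimization loop
TOTAL_TESTED = 256

# Every combination of one concentration level per excipient.
design_space = list(product(LEVELS_PERCENT, repeat=N_EXCIPIENTS))
optimization_loops = (TOTAL_TESTED - SEED_SIZE) // BATCH_SIZE
coverage = TOTAL_TESTED / len(design_space)

print(len(design_space))              # 7776 combinations (6 ** 5)
print(optimization_loops)             # 5 loops of 32 formulations
print(f"{coverage:.1%}")              # 3.3% of the space sampled
```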

Performance Metrics and Impact Assessment

Quantitative Performance Benchmarks

The transformative potential of autonomous laboratories is evidenced by concrete performance metrics demonstrating accelerated discovery timelines, enhanced experimental efficiency, and reduced resource consumption compared to conventional research approaches.

Table 2: Performance Metrics of Autonomous Laboratories

| Metric | Traditional Methods | Autonomous Laboratories | Improvement Factor |
|---|---|---|---|
| Data Acquisition | Single snapshots per experiment | Continuous data streaming (every 0.5 seconds) | 10-20x more data points [4] |
| Sample Throughput | Limited by human operation (several per day) | 100-200 samples per day [5] | 50-100x increase [5] |
| Formulation Testing | ~35 formulations in 6 days (manual) | 256 formulations in 6 days [7] | 7x more formulations with 75% less human time [7] |
| Chemical Consumption | Conventional quantities required for manual experimentation | "Dramatic" reduction through optimized experimentation [4] | Significant waste reduction [4] [2] |
| Discovery Timeline | Years for materials discovery | Weeks to months for materials discovery [4] | 10-100x acceleration [6] |

The performance advantages of autonomous laboratories extend beyond simple acceleration to encompass more efficient exploration of complex experimental spaces. In pharmaceutical formulation, the semi-self-driving system was able to identify highly soluble formulations after testing only 3.3% of the total experimental space, demonstrating the remarkable efficiency of Bayesian optimization in navigating high-dimensional problems [7]. Similarly, in materials synthesis, the A-Lab successfully produced 71% of target compounds, with analysis suggesting this success rate could be improved to 78% with minor modifications to computational techniques [3].
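The headline ratios quoted here follow directly from the raw counts, as the short calculation below shows. The figure of 45 targets is simply what a 78% success rate would imply for 58 targets; it is derived here, not reported in the source.

```python
synthesized, targets = 41, 58
success_rate = synthesized / targets               # about 0.707, reported as 71%
projected_successes = round(0.78 * targets)        # 45.24 rounds to 45 targets

robot_formulations, manual_formulations = 256, 35
throughput_ratio = robot_formulations / manual_formulations   # about 7.3x

print(f"A-Lab success rate: {success_rate:.1%}")
print(f"Targets implied by a 78% success rate: {projected_successes}")
print(f"Formulation throughput ratio: {throughput_ratio:.1f}x")
```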

Case Studies: From Materials to Medicines

Inorganic Materials Discovery at A-Lab

The A-Lab at Lawrence Berkeley National Laboratory represents one of the most comprehensive implementations of autonomous materials discovery. During its demonstrated operation, the system successfully synthesized 41 of 58 novel target compounds spanning 33 elements and 41 structural prototypes [3]. The lab's active learning capability was particularly evident in its optimization of synthesis routes for nine targets, six of which had zero yield from initial literature-inspired recipes [3]. For example, in synthesizing CaFe₂P₂O₉, the system identified an alternative reaction pathway that avoided the formation of intermediates with small driving forces (8 meV per atom) in favor of a pathway with a much larger driving force (77 meV per atom), resulting in an approximately 70% increase in target yield [3].
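The selection criterion in the CaFe₂P₂O₉ example, preferring the route that retains a large driving force to form the target, can be illustrated with a simple filter-and-rank sketch. This is not the ARROWS³ algorithm: the pathway labels and the 50 meV per atom cutoff are hypothetical, and only the 8 and 77 meV per atom values come from the text.

```python
def select_pathways(driving_forces_mev_per_atom, minimum=50.0):
    """Return pathway names whose driving force meets the cutoff, largest first.

    The cutoff value is an illustrative assumption, not a published parameter.
    """
    viable = {name: dg for name, dg in driving_forces_mev_per_atom.items()
              if dg >= minimum}
    return sorted(viable, key=viable.get, reverse=True)

pathways = {
    "via_low_driving_force_intermediates": 8.0,   # meV per atom, from the text
    "alternative_route": 77.0,                    # meV per atom, from the text
}
print(select_pathways(pathways))
```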

Pharmaceutical Formulation Discovery

In pharmaceutical applications, researchers demonstrated a semi-self-driving system for discovering liquid formulations of poorly soluble drugs, using curcumin as a test case [7]. The system identified 7 lead formulations with high solubility (>10 mg mL⁻¹) after sampling only 256 out of 7776 potential formulations [7]. The discovered formulations were predicted to be within the top 0.1% of all possible combinations, highlighting the efficiency of the autonomous approach in navigating vast experimental spaces [7]. The system operated with significantly enhanced efficiency, testing 7 times more formulations than a skilled human formulator could achieve in the same timeframe while requiring only 25% of the human time [7].

Essential Research Tools and Infrastructure

Research Reagent Solutions and Materials

The experimental workflows in autonomous laboratories rely on specialized reagents, materials, and instrumentation tailored to automated handling and high-throughput experimentation.

Table 3: Key Research Reagent Solutions in Autonomous Laboratories

| Item | Function | Application Examples |
|---|---|---|
| Powder Precursors | Starting materials for solid-state synthesis | ~200 inorganic powders for materials synthesis (e.g., metal oxides, phosphates) [5] |
| Pharmaceutical Excipients | Enable drug formulation and solubility enhancement | Tween 20, Tween 80, Polysorbate 188, dimethylsulfoxide, propylene glycol [7] |
| Characterization Standards | Calibrate analytical instruments for accurate measurements | Reference materials for XRD analysis [3] |
| Solvent Systems | Medium for liquid-phase reactions and formulations | High-purity solvents compatible with automated liquid handling systems [7] |

Critical Instrumentation and Robotic Systems

The hardware infrastructure of autonomous laboratories encompasses specialized robotic systems, synthesis equipment, and characterization instruments that enable continuous, automated operation.

Robotic Manipulation Systems form the physical backbone of autonomous laboratories, handling tasks such as transferring samples between stations, dispensing powders and liquids, and loading samples into instruments. The A-Lab utilizes 3 robotic arms to manage sample movement between preparation, heating, and characterization stations [5]. These systems require precise calibration to handle the diverse physical properties of solid powders, which can vary significantly in density, flow behavior, particle size, hardness, and compressibility [3].

Synthesis and Processing Equipment includes automated systems for conducting chemical reactions and preparing materials. For solid-state synthesis, the A-Lab employs 8 box furnaces for heating samples according to programmed temperature profiles [5]. For solution-based chemistry and pharmaceutical formulation, liquid handling robots like the Opentrons OT-2 enable precise dispensing and mixing of reagents in well plates [7]. Continuous flow reactors represent another important synthesis platform, particularly for applications requiring rapid screening of reaction conditions, with systems capable of varying chemical mixtures continuously and monitoring reactions in real time [4].

Characterization and Analysis Instruments provide the critical data that feeds the autonomous decision-making loop. X-ray diffraction (XRD) serves as a primary characterization technique for materials synthesis, with automated systems capable of grinding samples into fine powders and measuring diffraction patterns without human intervention [3]. For pharmaceutical applications, spectrophotometer plate readers enable high-throughput measurement of drug solubility through absorbance spectroscopy [7]. The integration of these analytical instruments with robotic sample handling enables rapid turnaround between experiment completion and data analysis, which is essential for maintaining continuous operation.

Autonomous laboratories represent a fundamental change in how chemical and materials research is done, shifting from traditional manual experimentation to AI-driven, robot-accelerated discovery. By integrating artificial intelligence with automated robotics and data infrastructure, these systems implement closed-loop workflows that dramatically accelerate the design-make-test-learn cycle. The reported performance metrics indicate that autonomous laboratories can achieve a 10-100x acceleration in discovery timelines while consuming fewer resources and generating less waste than conventional approaches [4] [6].

The architectural framework of these systems—encompassing specialized hardware, AI decision-making engines, and integrative software—enables continuous, adaptive experimentation that becomes increasingly efficient through machine learning. Implementation approaches range from fully self-driving systems requiring minimal human intervention to semi-autonomous platforms that balance automation with researcher expertise, making the technology accessible across different research domains and resource environments [3] [7].

As the technology continues to evolve, future developments are likely to focus on increasing integration across distributed networks of autonomous laboratories, enabling collaborative experimentation across multiple institutions [1]. Advances in AI, particularly in large-scale foundation models tailored to scientific domains, promise to enhance the reasoning and planning capabilities of these systems [1]. The continued reduction in costs for robotic components through 3D printing and open-source designs will further democratize access to this transformative technology [2]. Through these developments, autonomous laboratories are poised to substantially accelerate the discovery of solutions to pressing global challenges in clean energy, medicine, and sustainable materials.

The field of chemical discovery is shifting from traditional, labor-intensive trial-and-error approaches to a paradigm defined by speed, scale, and intelligence. This shift is powered by an integrated core triad of Artificial Intelligence (AI), robotic platforms, and sophisticated data systems. Together, these technologies create closed-loop, autonomous laboratories that can execute and analyze experiments with minimal human intervention, dramatically accelerating the journey from hypothesis to discovery [9] [10]. In chemical research, this triad enables the exploration of vast, multidimensional reaction hyperspaces (spanning variables such as concentration, temperature, and substrate combinations) that are intractable for human researchers alone [11]. This technical guide examines the components, workflows, and implementations of the triad and shows how they accelerate chemical discovery for scientists and drug development professionals.

The Core Components of the Triad

Artificial Intelligence: The Cognitive Engine

AI serves as the planning and learning center of the modern laboratory, moving beyond simple automation to become an active collaborator in the scientific process [12].

- Design and Planning: AI algorithms, particularly large language models (LLMs), can now design experiments and propose synthetic routes based on natural language instructions. Systems like Coscientist and ChemCrow demonstrate the ability to plan complex chemical tasks, such as optimizing palladium-catalyzed cross-couplings or synthesizing an insect repellent, by leveraging tool-using capabilities and expert-designed software [9].

- Optimization and Decision-Making: Machine learning algorithms like Bayesian optimization, Gaussian processes, and genetic algorithms guide the exploration of chemical parameter spaces. These algorithms propose the most informative subsequent experiments, enabling efficient convergence toward optimal conditions or novel discoveries with far fewer trials than traditional methods [10] [11].

- Data Analysis and Interpretation: AI models, including convolutional neural networks, are used to interpret complex characterization data. For instance, at Berkeley Lab's A-Lab, AI analyzes X-ray diffraction patterns to identify synthesized phases, while elsewhere, AI decomposes UV-Vis spectra to quantify yields for multiple products and by-products simultaneously [9] [11].

Robotic Platforms: The Embodied Executor

Robotic systems provide the physical interface to the chemical world, translating digital instructions into tangible experiments.

- Mobile Manipulators: Unlike fixed automation, mobile robots can navigate standard laboratories, transferring samples between specialized stations (e.g., synthesizers, UPLC-MS, NMR spectrometers). This creates a flexible, modular workflow that can be reconfigured for different experimental campaigns [13] [9].

- Robotic Arms and Liquid Handlers: From simple liquid handling to complex manipulations like pouring and weighing, robotic arms perform the repetitive and precise tasks of chemical synthesis. Systems like the A-Lab for solid-state materials and RoboChem for flow chemistry automate the entire process from precursor preparation to product isolation [12] [13].

- Integrated Workstations: Platforms such as the MO:BOT standardize and automate biologically relevant processes like 3D cell culture, improving reproducibility and providing more predictive data for drug discovery [14].

Data Systems: The Central Nervous System

High-performance data infrastructure is the critical glue that binds AI and robotics into a cohesive, intelligent whole.

- High-Speed Data Acquisition and Processing: Streams of data from instruments like electron microscopes or mass spectrometers are fed directly to supercomputers for near-instant analysis. At Berkeley Lab's Molecular Foundry, the Distiller platform streams microscopy data to the Perlmutter supercomputer, enabling researchers to refine experiments in progress [12].

- Data Management and Knowledge Graphs: Structured databases and knowledge graphs organize multimodal data—from proprietary databases to unstructured literature—making it machine-readable and actionable. These systems are essential for training robust AI models and for extracting prior knowledge for experimental planning [10].

- High-Performance Networking: Networks like Berkeley Lab's ESnet use AI to predict and optimize traffic, ensuring seamless, high-speed collaboration and data transfer between geographically distributed research facilities, which is crucial for handling enormous datasets [12].

Integrated Workflow: The Closed-Loop Discovery Engine

The true power of the triad is realized when its components are integrated into a continuous, closed-loop cycle. The following diagram illustrates this self-driving workflow.

Figure 1: The Closed-Loop Autonomous Discovery Workflow. This self-driving cycle integrates AI, robotics, and data systems to accelerate research with minimal human intervention.

This "design-make-test-analyze" loop functions as follows:

- AI Planning & Design: Given a high-level objective (e.g., "discover a novel organic semiconductor"), the AI agent queries knowledge bases and existing literature to propose initial synthetic targets and experimental protocols [9] [10]. In systems like Coscientist, this is initiated through natural language commands [15].

- Robotic Execution: The proposed protocol is translated into machine-readable code (e.g., using the XDL language) that directs robotic platforms to execute the experiment. This includes tasks like dispensing reagents, controlling reaction conditions (temperature, stirring), and terminating reactions [13] [9].

- Automated Data Analysis: Robotic systems transfer the crude product to analytical instruments (e.g., NMR, MS, HPLC). AI models then process the raw data in near real-time—for instance, by deconvoluting UV-Vis spectra to quantify yields of multiple products or identifying crystalline phases from XRD patterns [11] [9].

- AI Model Update & Decision: The results are fed back to the AI system. Using optimization algorithms like Bayesian optimization or active learning, the AI assesses the outcome, updates its internal model of the chemical space, and proposes the most promising set of conditions for the next experiment [9] [10]. This closed loop continues until the objective is met.
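The "Robotic Execution" step above depends on turning a planned protocol into unambiguous machine instructions. The sketch below shows the general idea with a hypothetical step record and a flat text instruction format. It is not XDL syntax, and the action names and parameters are assumptions made for illustration.

```python
from dataclasses import dataclass

@dataclass
class Step:
    action: str     # e.g. "dispense", "heat", "stir", "stop"
    params: dict    # action-specific settings

# Hypothetical set of actions that the (imaginary) robot driver understands.
ALLOWED_ACTIONS = {"dispense", "heat", "stir", "stop"}

def compile_protocol(steps):
    """Validate steps and render them as numbered instruction strings."""
    instructions = []
    for i, step in enumerate(steps, start=1):
        if step.action not in ALLOWED_ACTIONS:
            raise ValueError(f"step {i}: unsupported action {step.action!r}")
        args = " ".join(f"{k}={v}" for k, v in sorted(step.params.items()))
        instructions.append(f"{i:02d} {step.action.upper()} {args}".rstrip())
    return instructions

protocol = [
    Step("dispense", {"reagent": "A", "volume_ul": 50}),
    Step("heat", {"temperature_c": 60}),
    Step("stir", {"rpm": 300, "minutes": 10}),
    Step("stop", {}),
]
print("\n".join(compile_protocol(protocol)))
```

Rejecting unknown actions before anything reaches the hardware is a deliberate choice: an AI-generated plan is checked against what the platform can actually do.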

Quantitative Impact: Data and Metrics

The implementation of the core triad is delivering measurable improvements in the speed, cost, and success of research and development. The following table summarizes key quantitative findings from the field.

Table 4: Quantitative Impact of the AI, Robotics, and Data Triad in Scientific Discovery

| Metric | Traditional Workflow | Triad-Enhanced Workflow | Source & Context |
|---|---|---|---|
| Discovery Timeline | ~5 years (target to preclinical) | 18-24 months (e.g., Insilico Medicine's anti-fibrosis drug) | [8] [16] |
| Experiment Throughput | Manually limited | ~1,000 reactions analyzed per day (robot-assisted UV-Vis mapping) | [11] |
| Design Cycle Efficiency | Baseline | ~70% faster design cycles; 10x fewer compounds synthesized (Exscientia) | [8] |
| Clinical Trial Cost | High baseline | Up to 70% reduction in trial costs through AI-driven optimization | [16] |
| Yield Quantification Cost | High (NMR/LC-MS) | "Cents per sample" (low-cost optical detection) | [11] |
| Synthesis Success Rate | Human-dependent | 71% (41 of 58 predicted materials) achieved autonomously by A-Lab | [9] |

Detailed Experimental Protocol: Hyperspace Mapping of Chemical Reactions

To illustrate the triad in action, this section details a specific experiment for robot-assisted mapping of chemical reaction hyperspaces, as published in Nature [11].

Objective

To reconstruct a complete, multidimensional portrait of chemical reactions by quantifying the yields of major and minor products across thousands of conditions, thereby uncovering unexpected reactivity and product switchovers.

Methodology

Robotic Setup and Execution:

- A house-built robotic platform capable of handling organic solvents and harsh reagents is used.

- The robot pipettes reagents into reaction vials according to a predefined grid spanning the hyperspace of continuous variables (e.g., concentration, temperature, stoichiometry).

- After a set reaction time, the robotic system acquires a UV-Vis absorption spectrum of each crude reaction mixture (~8 seconds per spectrum).
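A predefined grid over continuous variables can be generated as a Cartesian product, and the stated acquisition time of about 8 seconds per spectrum gives a quick estimate of measurement time. The variable ranges and the 10 x 10 x 10 grid size below are illustrative assumptions; only the 8-second figure comes from the text.

```python
from itertools import product

# Hypothetical axes of the reaction hyperspace (10 levels each).
concentrations_m = [round(0.1 * i, 1) for i in range(1, 11)]   # 0.1 to 1.0 M
temperatures_c = list(range(20, 120, 10))                      # 20 to 110 °C
equivalents = [round(0.5 + 0.25 * i, 2) for i in range(10)]    # 0.5 to 2.75 equiv

# One reaction vial per grid point.
grid = list(product(concentrations_m, temperatures_c, equivalents))

SECONDS_PER_SPECTRUM = 8   # approximate UV-Vis acquisition time from the text
acquisition_hours = len(grid) * SECONDS_PER_SPECTRUM / 3600

print(len(grid), "conditions")
print(f"{acquisition_hours:.1f} h of spectral acquisition")
```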

Bulk Product Identification:

- The crude mixtures from all hyperspace points are combined into a single, complex mixture.

- This aggregate mixture is separated by traditional preparative chromatography.

- Isolated fractions are identified using NMR and MS to establish the full "basis set" of products that form anywhere in the explored hyperspace.

Spectral Calibration:

- The UV-Vis absorption spectra of all purified basis-set components (and starting materials) are measured at different concentrations to construct calibration curves.
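A calibration curve of this kind is, in the simplest case, a straight-line fit of absorbance against concentration at a chosen wavelength. The sketch below uses ordinary least squares on made-up data points; the concentrations, absorbances, and the assumption of a linear response are illustrative and not taken from the cited study.

```python
def fit_line(x, y):
    """Ordinary least-squares fit y = slope * x + intercept."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept

def concentration_from_absorbance(absorbance, slope, intercept):
    """Invert the calibration line to estimate concentration."""
    return (absorbance - intercept) / slope

# Hypothetical calibration standards (concentration in mM, absorbance in AU).
concentrations_mm = [0.1, 0.2, 0.4, 0.8]
absorbances = [0.17, 0.32, 0.62, 1.22]

slope, intercept = fit_line(concentrations_mm, absorbances)
print(f"slope={slope:.3f} AU/mM, intercept={intercept:.3f} AU")
print(f"A=0.77 -> {concentration_from_absorbance(0.77, slope, intercept):.2f} mM")
```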

Data Analysis and Yield Quantification:

- The complex UV-Vis spectrum of each individual crude reaction is computationally decomposed (via vector decomposition / spectral unmixing) into a linear combination of the reference spectra from the basis set.

- The fit is constrained by reaction stoichiometry to ensure physically meaningful results.

- An anomaly detection algorithm (using the Durbin-Watson statistic) analyzes the residuals between the experimental and fitted spectra to flag conditions that produce unexpected, unidentifiable products.
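The decomposition and residual check can be illustrated with a small, dependency-free sketch. It makes several simplifying assumptions: the reference spectra are synthetic Gaussian bands, the fit is plain unconstrained least squares (the stoichiometric constraint mentioned above is omitted), and the helper names are hypothetical. The Durbin-Watson statistic is the standard one: values near 2 indicate uncorrelated residuals, while values well below 2 indicate structured residuals, as would be left behind by a product missing from the basis set.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [rhs] for row, rhs in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (M[i][n] - sum(M[i][j] * x[j] for j in range(i + 1, n))) / M[i][i]
    return x

def unmix(spectrum, references):
    """Unconstrained least-squares coefficients via the normal equations."""
    gram = [[dot(ri, rj) for rj in references] for ri in references]
    rhs = [dot(ri, spectrum) for ri in references]
    return solve(gram, rhs)

def durbin_watson(residuals):
    """Sum of squared successive differences divided by sum of squares."""
    num = sum((residuals[t] - residuals[t - 1]) ** 2 for t in range(1, len(residuals)))
    den = sum(e * e for e in residuals)
    return num / den

def gaussian_band(center, width, wavelengths):
    return [math.exp(-((w - center) / width) ** 2) for w in wavelengths]

wavelengths = list(range(250, 600, 5))
ref_a = gaussian_band(320, 30, wavelengths)
ref_b = gaussian_band(450, 40, wavelengths)
unknown = gaussian_band(520, 25, wavelengths)  # a product absent from the basis set

# Crude mixture that contains the unidentified third component.
crude = [0.7 * a + 0.3 * b + 0.2 * u for a, b, u in zip(ref_a, ref_b, unknown)]
coeffs = unmix(crude, [ref_a, ref_b])
fitted = [coeffs[0] * a + coeffs[1] * b for a, b in zip(ref_a, ref_b)]
residuals = [c - f for c, f in zip(crude, fitted)]
dw = durbin_watson(residuals)  # far below 2 here: the residual is smooth, not noise
```

A production version would more likely use a non-negative or otherwise constrained solver, and the cutoff on `dw` used to flag a condition is a tuning choice that this sketch does not attempt to specify.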

Key Reagents and Solutions

Table 2: Research Reagent Solutions for Reaction Hyperspace Mapping

| Item | Function in the Experiment |

|---|---|

| House-Built Robotic Platform | Executes high-throughput pipetting, reaction control, and automated UV-Vis spectral acquisition. |

| UV-Vis Spectrophotometer | Rapid, low-cost analytical core for quantifying reaction outcomes at a throughput of ~100 samples/hour. |

| Vector Decomposition Algorithm | Software tool for deconvoluting complex spectral data into individual component concentrations. |

| Anomaly Detection Algorithm | Identifies regions of hyperspace where unanticipated products are formed, guiding further investigation. |

| Basis Set of Purified Products | Provides the reference spectra required for quantitative spectral unmixing of the crude reaction mixtures. |

Leading Platforms and Implementations

The core triad is being implemented across various global research initiatives and commercial platforms.

- Berkeley Lab's A-Lab: An autonomous materials discovery platform where AI proposes new inorganic compounds, and robots prepare and test them. This tight integration of computation, robotics, and data enabled the autonomous synthesis of 41 of 58 target materials predicted to be stable (71%) [12] [9].

- Coscientist (Carnegie Mellon University): An LLM-powered AI system that can design, plan, and optimize complex chemical reactions by controlling robotic laboratory instruments through natural language commands [15] [9].

- Modular Autonomous Platforms (University of York & Others): Systems using free-roaming mobile robots to connect islands of automation (synthesizers, UPLC-MS, NMR), creating a flexible "self-driving laboratory" for exploratory organic synthesis [13] [9].

- AI-Driven Drug Discovery (Exscientia, Insilico Medicine): Companies that have integrated generative AI with automated precision chemistry to create end-to-end platforms, compressing the early drug discovery timeline from years to months and advancing multiple AI-designed molecules into clinical trials [8].

The data management architecture that supports these platforms is complex and critical to their success, as shown in the following diagram.

Figure 2: Data System Architecture for Autonomous Discovery. This flow shows how disparate data sources are integrated into a knowledge graph that feeds AI models and records results from robotic execution, creating a learning loop.

The integration of AI, robotics, and data systems represents a fundamental shift in the paradigm of chemical discovery. This core triad enables the creation of autonomous laboratories that operate as closed-loop systems, capable of exploring chemical spaces with a speed, scale, and precision far beyond human capability. By delegating repetitive and data-intensive tasks to machines, researchers are empowered to focus on higher-level strategy, creative problem-solving, and interpreting the novel discoveries that these systems generate. As the underlying technologies continue to advance—with more sophisticated AI models, more dexterous robotics, and more interconnected data infrastructures—the acceleration of chemical discovery and drug development will only intensify, heralding a new era of scientific innovation.

The acceleration of chemical discovery research is increasingly driven by the integration of three core technological pillars: robotic arms for physical manipulation, automated liquid handlers for precise fluidic operations, and AI-powered analytics for real-time data interpretation. This section details the specifications, protocols, and synergistic interactions of these components, framing them within a closed-loop, design-make-test-analyze (DMTA) cycle that is transforming the pace of innovation in fields from drug discovery to materials science [17] [3] [12].

Robotic Arms: The Physical Orchestrators

Robotic arms serve as the kinetic backbone of automated laboratories, physically transferring samples and labware between discrete stations to create continuous, hands-off workflows.

Key Functions & Specifications:

- Sample Logistics: Robotic arms with specialized grippers (centric and extended fingers) move microplates, vials, and crucibles between instruments like liquid handlers, incubators, centrifuges, and analytical devices [3] [18].

- Integration Enabler: They bridge standalone instruments into a cohesive workflow. For example, in integrated workstations, a robotic arm may move an assay plate from a liquid handler to an on-deck incubator and then to a plate reader [18].

- Platform Examples: The A-Lab at Lawrence Berkeley National Laboratory employs robotic arms to shuttle powder samples between stations for dispensing, furnace heating, and X-ray diffraction (XRD) characterization [3] [12]. The Tecan Fluent workstation uses a robotic arm to manage labware across its deck [18].

Experimental Protocol: Autonomous Solid-State Synthesis (A-Lab Protocol)

- Planning: An AI agent proposes a target inorganic compound and a synthesis recipe derived from text-mined literature data and thermodynamic calculations [3].

- Sample Preparation: A robotic arm retrieves a crucible. Precursor powders are dispensed and mixed at an automated station.

- Reaction: The arm transfers the crucible to one of four box furnaces for heating according to the recipe.

- Characterization: After cooling, the arm moves the sample to a preparation station for grinding, then to an XRD instrument.

- Analysis & Iteration: XRD patterns are analyzed by machine learning models. If target yield is below 50%, an active learning algorithm (ARROWS3) proposes a modified recipe, and the cycle repeats [3].
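The control logic of this protocol can be sketched as a simple loop. Only the 50% yield threshold comes from the text; the function names, the `max_attempts` cap, and the toy stand-ins for the hardware and for the active-learning step are all assumptions made for illustration and do not reproduce ARROWS3.

```python
from dataclasses import dataclass

YIELD_THRESHOLD = 0.50  # from the protocol: propose a new recipe below 50% yield

@dataclass
class Attempt:
    recipe: dict
    target_yield: float

def run_campaign(initial_recipe, synthesize_and_measure, propose_next, max_attempts=5):
    """Iterate recipe -> synthesis -> measured yield until the threshold is met."""
    history = []
    recipe = initial_recipe
    for _ in range(max_attempts):
        measured = synthesize_and_measure(recipe)
        history.append(Attempt(recipe, measured))
        if measured >= YIELD_THRESHOLD:
            break
        recipe = propose_next(history)
    return history

# Hypothetical stand-in for dispensing, heating, and XRD analysis.
def fake_synthesis(recipe):
    # Toy response surface: yield peaks at 900 C.
    return max(0.0, 1.0 - abs(recipe["temperature_C"] - 900) / 400)

# Hypothetical stand-in for the active-learning recipe proposer.
def fake_proposer(history):
    last = history[-1].recipe
    return {**last, "temperature_C": last["temperature_C"] + 100}

history = run_campaign({"temperature_C": 600}, fake_synthesis, fake_proposer)
```

Passing the synthesis and proposal steps in as functions keeps the loop independent of any particular instrument driver or learning algorithm.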

Liquid Handlers: The Fluidic Precision Engineers

Automated liquid handlers execute precise, high-volume fluid transfers, replacing error-prone manual pipetting and enabling miniaturization and high-throughput experimentation [19] [20].

Core Applications and Quantitative Performance: Liquid handlers are versatile tools central to numerous assays. Their performance can be quantified by precision, volume range, and application suitability.

Table 1: Key Liquid Handling Applications and Technologies

| Application | Description | Key Technologies/Examples | Volume Range & Precision |

|---|---|---|---|

| Plate Replication/Reformatting | Copying or transferring samples between plates of different densities (e.g., 96 to 384-well) [19]. | Multi-channel heads (96-, 384-channel); programmed transfer maps. | Microliters; CVs <5% common. |

| Serial Dilution | Creating concentration gradients for dose-response (IC50/EC50) studies [19]. | Automated dilution protocols with mixing cycles. | Microliters to nanoliters; critical for accuracy. |

| Cherry Picking (Hit Picking) | Selectively transferring active compounds from primary screens for confirmation [19]. | Single- or multi-channel arms with scheduling software; integrated barcode scanners. | Variable. |

| Reagent & Master Mix Dispensing | Uniform addition of common reagents (e.g., PCR master mix, ELISA substrates) [19]. | Bulk dispensers, acoustic droplet ejection (ADE). | Nanoliters to milliliters. |

| NGS Library Prep Normalization | Adjusting DNA/RNA samples to uniform concentration for sequencing [19] [20]. | Integrated workflows with plate readers for quantification. | Microliter scale. |

| qPCR Setup | Dispensing master mix and template DNA for quantitative PCR [19]. | Filtered tips, multi-channel pipettors. | Low microliter volumes; CVs <1.5% achievable [19]. |

| Matrix Combination Assays | Testing all pairwise combinations of two reagent sets (e.g., drug synergy) [19]. | Acoustic dispensers for complex nanoliter transfers. | Nanoliter scale (e.g., 2.5 nL/droplet) [18]. |
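Two of the quantities in the table, serial-dilution concentrations and the coefficient of variation (CV), are simple to compute. The sketch below uses made-up replicate volumes and hypothetical function names.

```python
from statistics import mean, stdev

def serial_dilution(top_conc, dilution_factor, n_points):
    """Concentrations for an n-point series, highest first."""
    return [top_conc / dilution_factor ** i for i in range(n_points)]

def transfer_volume(mixed_volume, dilution_factor):
    """Volume carried into the next well so that transfer plus diluent equals mixed_volume."""
    return mixed_volume / dilution_factor

def percent_cv(values):
    """Coefficient of variation (sample standard deviation / mean) in percent."""
    return 100 * stdev(values) / mean(values)

series = serial_dilution(top_conc=10.0, dilution_factor=2, n_points=8)  # uM
carry_over_ul = transfer_volume(mixed_volume=20.0, dilution_factor=2)

# Made-up replicate dispenses (uL) from a precision check of a single channel.
replicates_ul = [5.02, 4.97, 5.05, 4.99, 5.01, 4.96]
cv = percent_cv(replicates_ul)
```

With these illustrative replicates the CV comes out below 1%, which is the kind of figure the precision column of the table refers to.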

Technology Breakdown:

- Air Displacement & Positive Displacement Pipetting: Standard for general liquid handling. Positive displacement is noted for handling viscous liquids or magnetic beads without compromise [20].

- Acoustic Droplet Ejection (ADE): A contact-free method using sound waves to transfer nanoliter droplets (e.g., 2.5 nL) from a source to a destination plate. It enables ultra-miniaturization, reduces dead volume, and allows for complex reformatting [19] [18].

- Dispensing Technologies: Micro-diaphragm pumps (e.g., in Mantis, Tempest) enable fast, low-volume dispensing with minimal void volume (microliter scale) [18].
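Because acoustic transfer is quantized in droplets, a requested volume is delivered as a whole number of droplets. The sketch below assumes the 2.5 nL droplet size quoted above and rounds to the nearest droplet; the function names and the pairwise layout helper are illustrative only.

```python
DROPLET_NL = 2.5  # droplet size quoted in the text

def droplets_for(volume_nl, droplet_nl=DROPLET_NL):
    """Return (droplet count, delivered volume), rounding to the nearest droplet."""
    n = round(volume_nl / droplet_nl)
    return n, n * droplet_nl

def matrix_transfers(reagents_a, reagents_b):
    """All pairwise (a, b) combinations for a two-reagent matrix assay."""
    return [(a, b) for a in reagents_a for b in reagents_b]

count, delivered = droplets_for(50)          # exactly representable request
count_off, delivered_off = droplets_for(11)  # request that is not a droplet multiple
pairs = matrix_transfers(range(6), range(6))
```

The second call shows why assay designs for acoustic dispensers are usually specified in multiples of the droplet volume: an 11 nL request is delivered as 10 nL.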

Automated Analytics: The Cognitive Core

Automated analytics encompass the software and AI models that interpret experimental data in real-time, transforming raw results into actionable insights that guide the next experiment.

Key Functions:

- Real-Time Data Interpretation: ML models analyze characterization data (e.g., XRD patterns, NMR spectra) as soon as it is generated, immediately assessing experimental success [3] [21].

- Predictive Modeling & Active Learning: AI predicts material stability, compound binding, and reaction outcomes. Active learning algorithms use experimental results to iteratively optimize subsequent conditions [17] [3].

- Workflow Orchestration: Software platforms (LIMS, ELNs) integrate instrument data, AI analytics, and cloud databases to create a "digital twin" of the lab, managing the entire DMTA cycle [17].

Experimental Protocol: AI-Driven NMR Analysis for Reaction Screening

- Reaction Execution: Chemical reactions are performed, either manually or in an automated synthesizer.

- Direct Analysis: The crude, unpurified reaction mixture is analyzed by NMR spectroscopy.

- Spectral Deconvolution: An automated workflow applies statistical algorithms (e.g., Hamiltonian Monte Carlo, a Markov chain Monte Carlo method) to the complex NMR spectrum of the mixture [21].

- Compound Identification: The workflow compares the deconvolved data against a user-generated library or DFT-calculated spectra to identify molecular structures of products, including isomers, and predict their relative concentrations—all without purification [21].

- Outcome & Iteration: Results are fed back to guide the next round of synthesis, closing the discovery loop. This process reduces analysis time from days to hours [21].

Synergy: The Closed-Loop Acceleration Platform

The true acceleration of discovery arises from the seamless integration of these three components into a closed-loop, autonomous system.

Diagram 1: The Closed-Loop Chemical Discovery Platform

Workflow Description: The cycle begins with AI and computational tools proposing a target compound or formulation and a synthesis plan [17] [22]. Robotic arms execute the physical synthesis and transport samples [3]. Liquid handlers then prepare assay plates for high-throughput testing (e.g., biochemical activity, toxicity) [19] [17]. Subsequently, automated analytics (e.g., ML analysis of XRD, NMR, or screening data) interpret the results in real-time [3] [21]. This analysis is integrated at a decision point, which uses active learning to refine the hypothesis. The updated instructions are sent back to the AI design and robotic systems, closing the loop. This integrated DMTA cycle, as exemplified by the A-Lab, can operate continuously, dramatically compressing discovery timelines [17] [3] [12].

Diagram 2: Automated NMR Analysis for Crude Mixtures

The Scientist's Toolkit: Essential Research Reagent Solutions

The efficacy of automated platforms depends on specialized reagents and materials that enable miniaturization, stability, and detection.

Table 2: Key Reagents and Materials for Automated Discovery Workflows

| Item | Function in Automated Workflows | Application Context |

|---|---|---|

| Acoustic-Compatible Plates | Specialized microplates with a fluid interface that enables precise acoustic droplet ejection (ADE). | Contact-free nanoliter dispensing in drug synergy (matrix) assays and compound reformatting [19] [18]. |

| Low-Binding, Low-Dead-Volume Tips & Labware | Minimize reagent loss and sample adhesion during liquid transfers. | Critical for serial dilution and handling precious samples (e.g., NGS libraries) to conserve material [19]. |

| PCR Master Mix | A pre-mixed, optimized solution containing DNA polymerase, dNTPs, buffers, and sometimes probes. | Automated liquid handlers uniformly dispense this mix for high-throughput qPCR setup, ensuring reproducibility [19]. |

| Magnetic Beads | Paramagnetic particles used for nucleic acid purification, cleanup, and size selection. | Automated platforms like firefly use positive displacement technology to reliably handle beads in NGS library prep workflows [20]. |

| Homogeneous Assay Reagents | "Mix-and-read" assay components (e.g., for kinases, ATPases) that require no separation steps. | Foundational for generating high-quality, reproducible data in High-Throughput Screening (HTS), which trains AI models [17]. |

| Stable Isotope-Labeled Standards | Internal standards used in mass spectrometry (MS) for accurate quantification. | Integrated into automated sample prep workflows for metabolomics or pharmacokinetic studies. |

| Advanced Formulation Excipients (e.g., SNAC) | Excipients like sodium N-[8-(2-hydroxybenzoyl)amino]caprylate enhance drug absorption and stability. | Key targets for AI-driven formulation screening to overcome API limitations like poor solubility [22]. |

| Lyo-ready Reagents | Reagents formulated for lyophilization (freeze-drying), enhancing long-term stability. | Enables reliable storage and on-demand rehydration in automated, benchtop reagent dispensers. |


The concerted application of robotic arms, automated liquid handlers, and intelligent analytics is not merely an incremental improvement but a paradigm shift in chemical discovery research. By physically automating execution, fluidically ensuring precision and scale, and cognitively accelerating insight, these integrated platforms create a virtuous cycle of learning and innovation. They empower researchers to explore vast chemical and material spaces with unprecedented speed and rigor, directly addressing the critical challenges of cost, timeline, and success rates in fields from pharmaceuticals to advanced materials [17] [22] [3].

The integration of artificial intelligence and robotic automation is fundamentally accelerating the pace of chemical discovery research. However, the sheer complexity and intuitive nature of scientific innovation necessitate a collaborative approach. The Human-in-the-Loop (HITL) model has emerged as a critical framework that strategically balances the computational power of automation with the irreplaceable domain knowledge of expert scientists. This section explores the core principles, methodologies, and implementations of HITL systems, detailing how they are being successfully applied from molecular generation to materials synthesis. By examining experimental protocols, quantitative outcomes, and key enabling technologies, we demonstrate how this synergistic partnership is overcoming traditional research bottlenecks and creating a more efficient, scalable, and insightful path to discovery.

The traditional drug and materials discovery pipeline is notoriously time-consuming, expensive, and constrained by human-scale experimentation. The advent of artificial intelligence (AI) and robotic automation promised a revolution, offering the potential for high-throughput, data-driven research. Yet, initial approaches that relied solely on automation revealed significant limitations. AI models, often trained on limited or biased historical data, struggle to generalize and can produce results that, while statistically plausible, are scientifically invalid or impractical for synthesis [23]. This gap between computational prediction and real-world application has cemented the role of the expert scientist as an essential component in the discovery loop.

The Human-in-the-Loop (HITL) model is an adaptive framework that formally integrates human expertise into AI-driven and automated workflows. In this paradigm, automation handles repetitive, high-volume tasks and data analysis, while human scientists provide strategic guidance, contextual validation, and creative insight. This is not merely using humans to validate AI output; it is about creating a continuous, iterative feedback cycle where human intuition helps steer computational exploration towards more fruitful and realistic regions of chemical space. As noted in research on ternary materials discovery, previous ML approaches were biased by the limits of known phase spaces and experimentalist bias, a limitation that HITL directly addresses [24]. This model is now being deployed to tackle some of the most persistent challenges in chemical research, from inverse-design of materials with targeted properties to the rapid development of novel polymers and drug candidates.

Core Principles of the Human-in-the-Loop Model

The effectiveness of HITL systems in chemical discovery is governed by several foundational principles:

Iterative Refinement and Active Learning: At the core of HITL is an iterative cycle of prediction, experimentation, and feedback. Machine learning models propose candidate molecules or materials, which are then evaluated—either through simulated oracles, human experts, or real-world experiments. The results of this evaluation are fed back as new training data, refining the model's future predictions. This process often employs active learning (AL) strategies, where the system intelligently selects the most informative experiments to perform next, thereby maximizing the knowledge gain from each cycle and minimizing the number of costly experiments required [23]. The Expected Predictive Information Gain (EPIG) criterion is one such method used to select molecules for evaluation that will most significantly reduce predictive uncertainty [23].

Multi-Modal Knowledge Integration: Advanced HITL frameworks, such as the MolProphecy platform, are designed to reason over multiple types of information. They integrate structured data (e.g., molecular graphs, chemical descriptors) with unstructured, tacit domain knowledge from human experts [25]. This is often achieved through architectural features like gated multi-head cross-attention mechanisms, which effectively align LLM-encoded expert insights with graph neural network (GNN)-derived molecular representations, leading to more accurate and robust predictive models [25].

Human as Validator and Strategic Guide: The human expert's role in the loop is multifaceted. They act as a validator, confirming or refuting AI-generated predictions to correct for model hallucinations or biases [23] [25]. Furthermore, they serve as a strategic guide, defining the objective functions and "radical" parameters that fundamentally alter a problem's difficulty, thereby steering the generative process towards chemically feasible and therapeutically relevant outcomes [23] [26]. This moves the scientist from a manual executor of experiments to a "parameter steward" and "validity auditor" [26].

Experimental Protocols and Methodologies

The implementation of HITL models requires carefully designed experimental protocols. The following methodologies are representative of cutting-edge approaches in the field.

Goal-Oriented Molecular Generation with Active Learning

This protocol, detailed in studies on goal-oriented molecule generation, frames discovery as a multi-objective optimization problem [23].

1. Problem Formulation and Scoring Function Definition:

- The target profile for a new molecule is defined, which may include properties like bioactivity, solubility, and synthetic accessibility.

- A scoring function ( s(\mathbf{x}) ) is constructed as a weighted sum of individual property evaluations:

( s(\mathbf{x}) = \sum_{j=1}^{J} w_j \sigma_j(\phi_j(\mathbf{x})) + \sum_{k=1}^{K} w_k \sigma_k(f_{\theta_k}(\mathbf{x})) )

where ( \mathbf{x} ) is the molecule, ( \phi_j ) are analytically computable properties, ( f_{\theta_k} ) are data-driven QSAR/QSPR models, and ( \sigma ) are transformation functions to normalize scores [23].
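The weighted sum can be written out directly. In the sketch below the molecule is a plain dictionary of precomputed values, the sigmoid is just one possible choice of transformation, and the weights, midpoints, and property names are hypothetical; none of these specifics come from the cited study.

```python
import math

def sigmoid(value, midpoint, steepness):
    """Map a raw property value to (0, 1); one possible choice for sigma."""
    return 1.0 / (1.0 + math.exp(-steepness * (value - midpoint)))

def score(x, analytic_terms, model_terms):
    """Weighted sum of transformed analytic properties and model predictions."""
    total = 0.0
    for weight, transform, prop in analytic_terms + model_terms:
        total += weight * transform(prop(x))
    return total

# Hypothetical molecule described by precomputed values.
molecule = {"mol_weight": 350.0, "predicted_activity": 0.8}

analytic_terms = [
    # Analytic property: reward molecular weight below ~500 (decreasing sigmoid).
    (0.3, lambda v: sigmoid(v, midpoint=500.0, steepness=-0.02), lambda m: m["mol_weight"]),
]
model_terms = [
    # Stand-in for the output of a trained QSAR model, assumed to lie in [0, 1].
    (0.7, lambda v: sigmoid(v, midpoint=0.5, steepness=10.0), lambda m: m["predicted_activity"]),
]

s = score(molecule, analytic_terms, model_terms)
```

Keeping each term as a (weight, transform, property) triple mirrors the structure of the formula, so adding another objective means appending one more triple.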

2. Initial Model Training and Generation:

- A generative model (e.g., a Reinforcement Learning-guided Recurrent Neural Network) is initialized and optimized to propose molecules that maximize the scoring function ( s(\mathbf{x}) ) [23].

3. Active Learning and Human Feedback Loop:

- The top-ranked generated molecules are selected based on an acquisition criterion like EPIG, which prioritizes compounds whose expert evaluation is expected to most reduce the predictor's uncertainty [23].

- These molecules are presented to human experts (medicinal chemists) via an interactive interface (e.g., the Metis UI) [23].

- Experts review the molecules, approving or refuting the predicted properties and optionally providing a confidence level for their assessment.

- The curated feedback is added to the training dataset ( \mathcal{D} ).

4. Model Retraining and Iteration:

- The property predictor ( f_{\theta_k} ) is retrained on the augmented dataset.

- The generative agent is then updated with the refined predictor, and the cycle repeats from step 2, progressively improving the quality and reliability of the generated molecules.
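One feedback round of steps 3 and 4 can be sketched as follows. Note that the acquisition function here is a simple uncertainty proxy (distance of a predicted probability from 0.5), used as a stand-in and explicitly not an implementation of EPIG. The expert function, the molecule names, and the predictions are hypothetical, and retraining is left out.

```python
def uncertainty(prob):
    """Proxy that peaks at p = 0.5. A stand-in for EPIG, not EPIG itself."""
    return 1.0 - abs(2.0 * prob - 1.0)

def select_for_review(candidates, predict, batch_size):
    """Pick the candidates whose predictions the model is least sure about."""
    ranked = sorted(candidates, key=lambda c: uncertainty(predict(c)), reverse=True)
    return ranked[:batch_size]

def hitl_round(candidates, predict, ask_expert, dataset, batch_size=2):
    """Select candidates, query the expert, and append feedback to the dataset."""
    for cand in select_for_review(candidates, predict, batch_size):
        label, confidence = ask_expert(cand)
        dataset.append({"molecule": cand, "label": label, "confidence": confidence})
    return dataset

# Toy predicted probabilities that each molecule has the desired property.
toy_predictions = {"mol_A": 0.95, "mol_B": 0.52, "mol_C": 0.10, "mol_D": 0.45}

def toy_expert(name):
    # Hypothetical chemist verdict: (approve?, confidence in [0, 1]).
    return (name in {"mol_A", "mol_B"}, 0.8)

dataset = hitl_round(list(toy_predictions), toy_predictions.get, toy_expert, dataset=[])
```

In a full loop, the property predictor would be refit on `dataset` after each round and the generator updated against the refit predictor before the next batch is selected.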

Human-in-the-Loop Robotic Polymer Discovery

This protocol, exemplified by the work of Carnegie Mellon and UNC Chapel Hill, physically integrates automation with human insight for materials discovery [27].

1. Design of Experiments (DoE) by AI:

- Researchers input desired property targets (e.g., a polymer that is both strong and flexible) into an AI design tool.

- The AI model suggests an initial series of chemical compositions and synthetic experiments.

2. Robotic Synthesis and Testing:

- Robotic platforms and automated science tools at the partner institution (e.g., UNC Chapel Hill) execute the suggested experiments.

- The system synthesizes the proposed polymers and conducts property measurements (e.g., tensile strength, elasticity).

3. Human-Machine Interaction and Dynamic Adjustment:

- Researchers analyze the experimental results, providing critical feedback to the AI model.

- Unlike a fully autonomous process, scientists interact with the model, questioning its suggestions and combining machine-generated options with their own hypotheses. A researcher described this as "interacting with the model, not just taking directions" [27].

- This human feedback is used to dynamically adjust the AI's search strategy, helping it navigate the complex material design space more effectively.

4. Iteration and Validation:

- The AI proposes a new set of experiments based on the human-refined understanding.

- The loop continues until a material meeting the target specifications is discovered and validated.

Quantitative Outcomes and Performance Data

The implementation of HITL models has yielded significant, measurable improvements in the speed, accuracy, and success rate of discovery campaigns. The following tables summarize key quantitative findings from recent research.

Table 1: Performance of Human-in-the-Loop Models in Molecular Property Prediction

| Model/Framework | Benchmark Dataset | Performance Metric | Result | Improvement Over Baseline |

|---|---|---|---|---|

| MolProphecy [25] | FreeSolv | RMSE | 0.796 | 9.1% reduction |

| MolProphecy [25] | BACE | AUROC | Not Specified | 5.39% increase |

| MolProphecy [25] | SIDER | AUROC | Not Specified | 1.43% increase |

| MolProphecy [25] | ClinTox | AUROC | Not Specified | 1.06% increase |

| HITL Active Learning [23] | Simulated DRD2 Optimization | Accuracy & Drug-likeness | Improved | Improved alignment with oracle & better drug-likeness |

Table 2: Impact of Robotics and Automation in Drug Discovery (Market Analysis)

| Segment | Market Leadership/Rate of Growth | Key Drivers and Applications |

|---|---|---|

| Robot Type | Traditional Robots (Dominant) | Stability, scalability in high-throughput screening (HTS) [28]. |

| Robot Type | Collaborative Robots (Fastest CAGR) | Flexibility, safety, ability to work alongside humans [28]. |

| End User | Biopharmaceutical Companies (Dominant) | Large R&D budgets, need to accelerate timelines [29] [28]. |

| End User | Research Laboratories (Fastest CAGR) | Drive for reproducibility, precision, and efficiency [28]. |

| Regional Adoption | North America (Dominant) | Advanced infrastructure, early automation adoption, strong R&D funding [28]. |

| Regional Adoption | Asia Pacific (Fastest CAGR) | Expanding biotech sector, government support for innovation [28]. |

Visualization of Workflows

The following diagrams, generated using Graphviz, illustrate the logical flow and components of standard HITL methodologies in chemical discovery.

Iterative HITL Molecular Discovery Workflow

Diagram 1: Iterative HITL Molecular Discovery

Integrated Robotic HITL Platform

Diagram 2: Integrated Robotic HITL Platform

The Scientist's Toolkit: Essential Research Reagents and Solutions

The successful execution of HITL discovery relies on a suite of computational and physical tools. The following table details key components of the modern chemist's toolkit.

Table 3: Key Research Reagent Solutions for HITL Discovery

| Tool/Reagent | Type | Function in HITL Workflow |

|---|---|---|

| Generative AI Model | Software | Proposes novel molecular structures or material compositions that satisfy target property profiles, expanding the explorable chemical space [24] [30]. |

| Active Learning Criterion (e.g., EPIG) | Algorithm | Selects the most informative candidates for expert evaluation, optimizing the human feedback loop and improving model generalization [23]. |

| QSAR/QSPR Predictor | Software Model | Provides fast, in-silico estimates of complex properties (e.g., bioactivity, solubility) for scoring molecules during generative optimization [23]. |

| Collaborative Robot (Cobot) | Hardware | Executes physical synthesis and handling tasks safely alongside human researchers, enabling flexible and adaptive automated workflows [28]. |

| High-Throughput Screening (HTS) Robot | Hardware | Rapidly tests thousands of compounds for biological activity or material properties, generating the large-scale data required for training AI models [29]. |

| FAIR Data Platform (e.g., Signals Notebook) | Software | Provides a unified, cloud-native platform that ensures data is Findable, Accessible, Interoperable, and Reusable, which is critical for robust AI training and collaboration [31]. |

| Multi-Modal Fusion Framework (e.g., MolProphecy) | Software Architecture | Integrates structured molecular data (from GNNs) with unstructured expert knowledge (from LLMs/Chemists) to enhance prediction accuracy and interpretability [25]. |


The Human-in-the-Loop model represents a fundamental and necessary evolution in the practice of chemical discovery. It successfully addresses the core weakness of purely automated systems—their lack of contextual wisdom and inability to navigate scientific ambiguity—by forging a synergistic partnership between human and machine intelligence. As evidenced by successful applications in ternary materials discovery, polymer design, and drug candidate generation, this model leads to more accurate predictions, more feasible candidates, and ultimately, a faster transition from concept to validated product.

The future of HITL systems lies in their deeper integration and increasing sophistication. This includes the development of more intuitive interfaces for human-AI collaboration, more robust active learning algorithms capable of handling multiple objectives, and the wider adoption of fully integrated, automated laboratories. By continuing to refine this balance between automation and expert insight, the research community can unlock unprecedented levels of productivity and innovation, dramatically accelerating the delivery of new medicines and advanced materials to society.

From Code to Compound: Methodologies and Real-World Applications

High-Throughput Screening and Sample Management at Scale

The accelerating pace of chemical discovery research is increasingly dependent on sophisticated robotic platforms that transform high-throughput screening (HTS) from a manual, low-volume process to an automated, large-scale scientific capability. These integrated systems enable the rapid testing of hundreds of thousands of compounds against biological or chemical targets, generating massive datasets that drive innovation across pharmaceutical development, materials science, and chemical biology. Within the broader thesis of how robotic platforms accelerate chemical discovery research, this section examines the core infrastructure, methodologies, and data management frameworks that make HTS operations possible at scale. The paradigm shift toward quantitative HTS (qHTS), which tests each library compound at multiple concentrations to construct concentration-response curves, has further increased demands on screening infrastructure, requiring maximal efficiency, miniaturization, and flexibility [32]. By implementing fully integrated and automated screening systems, research institutions can generate comprehensive datasets that reliably identify active compounds while minimizing false positives and negatives—a critical advancement for probe development and drug discovery.

Core Architecture of Robotic Screening Platforms

Integrated System Components

Modern robotic screening platforms represent sophisticated orchestrations of hardware and software components designed to operate with minimal human intervention. These systems typically combine random-access compound storage, precision liquid handling, environmental control, and multimodal detection capabilities into a seamless workflow. A prime example is the system implemented at the NIH's Chemical Genomics Center (NCGC), which features three high-precision robotic arms servicing peripheral units including assay and compound plate carousels, liquid dispensers, plate centrifuges, and plate readers [32]. This configuration enables complete walk-away operation for both biochemical and cell-based screening protocols, with the entire system capable of storing over 2.2 million compound samples representing approximately 300,000 compounds prepared as seven-point concentration series [32].

The sample management architecture is particularly critical for large-scale operations. The NCGC system maintains a total capacity of 2,565 plates, with 1,458 positions dedicated to compound storage and 1,107 positions for assay plate storage [32]. Every storage point on the system features random access, allowing complete retrieval of any individual plate at any given time. This massive storage capacity is complemented by three 486-position plate incubators capable of independently controlling temperature, humidity, and CO₂ levels, enabling diverse assay types to run simultaneously under optimal conditions [32].

Detection and Reading Technologies

The utility of any HTS platform depends fundamentally on its detection capabilities. Modern systems incorporate multiple reading technologies to accommodate diverse assay chemistries and output requirements. As evidenced by the NCGC experience, these commonly include ViewLux, EnVision, and Acumen detectors capable of measuring fluorescence, absorbance, luminescence, fluorescence polarization, time-resolved FRET, FRET, and Alphascreen signals [32]. This detector flexibility enables the same robotic platform to address multiple target types including profiling assays, biochemical assays (enzyme reactions, protein-protein interactions), and cell-based assays (reporter genes, GFP induction, cell death) without hardware reconfiguration [32].

Table 1: Detection Modalities and Their Applications in HTS

| Detection Signal | Measurement Type | Example Applications | Compatible Detectors |
|---|---|---|---|
| Fluorescence | End-point, kinetic read | Enzyme activity, cell viability | ViewLux, EnVision |
| Luminescence | End-point | Reporter gene assays, cytotoxicity | ViewLux |
| Absorbance | End-point, multiwavelength | Cell proliferation, enzyme activity | ViewLux, EnVision |
| Fluorescence Polarization | End-point | Binding assays, molecular interactions | EnVision |
| Time-resolved FRET | End-point | Protein-protein interactions | EnVision |
| AlphaScreen | End-point | Biomolecular interactions | EnVision |
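
A scheduler that routes plates to readers needs the same information in machine-readable form. The following is a minimal sketch that transcribes only the detector column of Table 1; the function name `detectors_for` is hypothetical, and the mapping is not a vendor specification.

```python
# Compatibility map transcribed from Table 1 (illustrative, not a vendor spec).
DETECTORS_BY_SIGNAL = {
    "fluorescence": {"ViewLux", "EnVision"},
    "luminescence": {"ViewLux"},
    "absorbance": {"ViewLux", "EnVision"},
    "fluorescence polarization": {"EnVision"},
    "time-resolved fret": {"EnVision"},
    "alphascreen": {"EnVision"},
}


def detectors_for(signals):
    """Return the detectors that can read every signal in `signals`."""
    sets = [DETECTORS_BY_SIGNAL[s.lower()] for s in signals]
    return sorted(set.intersection(*sets)) if sets else []


print(detectors_for(["Fluorescence", "Absorbance"]))
print(detectors_for(["Luminescence", "AlphaScreen"]))
```

An empty result, as for the second query, indicates that the assay would need more than one reader according to the table.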

Quantitative High-Throughput Screening (qHTS) Implementation

The qHTS Paradigm

Quantitative High-Throughput Screening represents a significant evolution beyond traditional single-concentration screening by testing each compound across a range of concentrations, typically seven or more points across approximately four logarithmic units [32]. This approach generates concentration-response curves (CRCs) for every compound in the library, creating a rich dataset that comprehensively characterizes compound activity. The qHTS paradigm offers distinct advantages: it mitigates the high false-positive and false-negative rates of conventional single-concentration screening, provides immediate potency and efficacy estimates, and reveals complex biological responses through curve shape analysis [32]. Additionally, since dilution series are present on different plates, the failure of a single plate due to equipment problems rarely requires rescreening, as the remaining test concentrations are usually adequate to construct reliable CRCs [32].
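
To make "seven points across approximately four logarithmic units" concrete, the sketch below generates an evenly log-spaced series. The top concentration of 100 µM is a hypothetical value chosen for illustration, and real libraries often use a fixed fold dilution (for example 5-fold) rather than an exact four-log span.

```python
import math


def concentration_series(top_conc, n_points=7, log_span=4.0):
    """Return n_points concentrations, highest first, evenly spaced on a
    log10 scale so that highest / lowest == 10 ** log_span."""
    if n_points < 2:
        raise ValueError("a concentration series needs at least two points")
    step = log_span / (n_points - 1)
    return [top_conc / 10 ** (i * step) for i in range(n_points)]


# Hypothetical top concentration: 100 micromolar, expressed in mol/L.
series = concentration_series(top_conc=100e-6)
dilution_factor = series[0] / series[1]

for conc in series:
    print(f"{conc:.3e} M")
print(f"fold dilution between points: {dilution_factor:.2f}")
```

With seven points and a four-log span, each step is a dilution of about 4.6-fold, which is close to the 5-fold series commonly used in practice.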

The practical implementation of qHTS for cell-based and biochemical assays across libraries of >100,000 compounds requires exceptional efficiency and miniaturization. The NCGC system addresses this challenge through 1,536-well-based sample handling and testing as its standard format, coupled with high precision in liquid dispensing for both reagents and compounds [32]. This miniaturization dramatically reduces reagent consumption, which matters most for expensive or difficult-to-produce biological reagents, while enabling the testing of millions of sample wells within reasonable timeframes and budgets.
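
The scale implied here can be estimated with a short calculation. The sketch assumes an interplate series (one plate set per concentration) and reserves 128 wells per plate for controls; the control-well count is an illustrative assumption, not a figure from the source.

```python
import math


def qhts_plate_count(n_compounds, n_concentrations=7,
                     wells_per_plate=1536, control_wells=128):
    """Estimate assay plates and total wells for an interplate qHTS run.

    control_wells is an assumed reservation for on-plate controls.
    """
    compound_wells = wells_per_plate - control_wells
    plates_per_concentration = math.ceil(n_compounds / compound_wells)
    total_plates = plates_per_concentration * n_concentrations
    return total_plates, total_plates * wells_per_plate


plates, wells = qhts_plate_count(100_000)
print(plates, wells)  # 504 774144
```

Under these assumptions a 100,000-compound library screened at seven concentrations needs about 500 plates per assay, so a few assays are enough to reach millions of wells.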

qHTS Experimental Protocol

Protocol Title: Quantitative High-Throughput Screening (qHTS) for Compound Library Profiling

Principle: Test each compound at multiple concentrations to generate concentration-response curves, enabling comprehensive activity characterization and reliable identification of true actives while minimizing false positives/negatives.

Materials and Reagents:

- Compound library formatted as concentration series (typically 7 points or more)

- Assay reagents specific to target (enzymes, substrates, cells, detection reagents)

- 1,536-well assay plates

- Dimethyl sulfoxide (DMSO) for compound solubilization

- Appropriate buffer systems

Equipment:

- Robotic screening system with compound storage carousels

- High-precision liquid dispensers (solenoid valve technology)

- 1,536-pin array for compound transfer

- Plate incubators with environmental control (temperature, CO₂, humidity)

- Multimode plate readers (capable of fluorescence, luminescence, absorbance detection)

- Plate lidding/delidding system

- Plate centrifuge

Procedure:

Compound Library Preparation:

- Format compound library as interplate concentration series spanning approximately four logarithmic units.

- Store compounds in random-access carousels integrated with robotic system.

Assay Plate Preparation:

- Transfer compounds from storage plates to assay plates using 1,536-pin array.

- Dispense assay reagents using high-precision solenoid valve dispensers.

- For cell-based assays, maintain plates in controlled incubators between reagent additions.

Incubation and Reaction:

- Incubate plates under appropriate conditions (time, temperature, atmospheric control).

- Implement kinetic reads where necessary to capture reaction dynamics.

Detection and Reading:

- Measure assay outputs using appropriate detection modes (fluorescence, luminescence, etc.).

- Utilize multiple readers as needed for different signal types.

Data Capture:

- Automatically transfer raw data to analysis pipeline.

- Record quality control metrics for each plate.

Quality Control:

- Include control compounds on each plate (positive and negative controls).

- Monitor dispensing accuracy through control wells.

- Track environmental conditions throughout screening process.
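
The source does not say which plate-level metrics are recorded. One widely used choice is the Z'-factor, which compares the separation of the positive and negative control means with their combined spread. The sketch below computes it from control wells; the control readings are made-up illustrative numbers.

```python
from statistics import mean, stdev


def z_prime(positive, negative):
    """Z'-factor = 1 - 3 * (sd_pos + sd_neg) / |mean_pos - mean_neg|."""
    separation = abs(mean(positive) - mean(negative))
    if separation == 0:
        raise ValueError("control means are identical; Z' is undefined")
    return 1 - 3 * (stdev(positive) + stdev(negative)) / separation


# Hypothetical control-well signals from a single plate.
positive_controls = [100, 102, 98, 101, 99]
negative_controls = [10, 11, 9, 10, 10]

score = z_prime(positive_controls, negative_controls)
print(f"Z' = {score:.2f}")
```

Values above roughly 0.5 are conventionally taken to indicate a robust assay window, so a per-plate threshold on this score is one simple way to flag plates for review.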

Data Analysis:

- Fit concentration-response curves to data from each compound.

- Classify curve quality and shape (e.g., full response, partial response, inactive).

- Calculate potency (IC₅₀/EC₅₀) and efficacy values.

This qHTS approach has demonstrated remarkable productivity, with the NCGC reporting generation of over 6 million concentration-response curves from more than 120 assays in a three-year period [32].
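
The Data Analysis steps of the protocol can be sketched with a four-parameter Hill model. To stay dependency-free, the example below fixes the two asymptotes from the data extremes and finds the half-maximal concentration and slope by grid search. This is a stand-in for the nonlinear least-squares fitting a production pipeline would normally use, and the classification thresholds are hypothetical rather than the NCGC curve classes.

```python
import math


def hill(conc, base, plateau, ac50, slope):
    """Four-parameter Hill model: `base` at low and `plateau` at high concentration."""
    return base + (plateau - base) / (1.0 + (ac50 / conc) ** slope)


def fit_curve(concs, responses, slopes=(0.5, 1.0, 1.5, 2.0, 3.0), n_grid=201):
    """Coarse least-squares fit by grid search over log10(AC50) and slope."""
    pairs = sorted(zip(concs, responses))
    base, plateau = pairs[0][1], pairs[-1][1]   # asymptotes taken from data extremes
    lo, hi = math.log10(pairs[0][0]), math.log10(pairs[-1][0])
    best = None
    for k in range(n_grid):
        ac50 = 10 ** (lo + (hi - lo) * k / (n_grid - 1))
        for slope in slopes:
            sse = sum((hill(c, base, plateau, ac50, slope) - r) ** 2
                      for c, r in pairs)
            if best is None or sse < best[0]:
                best = (sse, ac50, slope)
    return {"ac50": best[1], "slope": best[2], "efficacy": plateau - base}


def classify(fit, partial=30.0, full=80.0):
    """Label a curve by response size (thresholds in % activity are hypothetical)."""
    size = abs(fit["efficacy"])
    if size < partial:
        return "inactive"
    return "full response" if size >= full else "partial response"


# Synthetic inhibitor: true IC50 of 1 micromolar, seven points over four logs.
concs = [1e-8 * 10 ** (i * 4 / 6) for i in range(7)]
responses = [hill(c, 0.0, -100.0, 1e-6, 1.0) for c in concs]
fit = fit_curve(concs, responses)
print(f"IC50 ~ {fit['ac50']:.2e} M, class: {classify(fit)}")
```

Fixing the asymptotes from the first and last points keeps the search two-dimensional but makes the fit sensitive to noise at the extremes, which is why real pipelines fit all four parameters together.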

Sample Management Infrastructure

Compound Storage and Logistics

Efficient sample management forms the backbone of any successful HTS operation. Large-scale screening campaigns require sophisticated systems for compound storage, retrieval, reformatting, and tracking. The architectural approach taken by leading facilities emphasizes random-access storage with integrated liquid handling to minimize plate manipulation and potential compound degradation. The NCGC system exemplifies this with 1,458 dedicated compound storage positions organized in rotating carousels, providing access to over 2.2 million individual samples [32]. This massive capacity enables the screening of complete concentration series without frequent compound repository access, significantly improving screening efficiency.

Modern systems have evolved beyond simple storage to incorporate just-in-time compound library preparation, eliminating the labor and reagent use associated with preparing fresh compound plates for each screen [32]. Advanced lidding systems protect against evaporation during extended storage periods, while fail-safe anthropomorphic arms manage plate transport and delidding operations. These features collectively ensure compound integrity throughout the screening campaign, which is particularly critical for sensitive biological assays and long-duration experiments.

Data Management and Public Repositories

The massive data output from HTS operations presents significant informatics challenges. Public data repositories such as PubChem have emerged as essential resources for the scientific community, providing centralized access to screening results and associated metadata. PubChem, maintained by the National Center for Biotechnology Information (NCBI), represents the largest public chemical data source, containing over 60 million unique chemical structures and 1 million biological assays from more than 350 contributors [33]. The repository structures data across three primary databases: Substance (SID), Compound (CID), and BioAssay (AID), creating an integrated knowledge system for chemical biology.

For large-scale data extraction, PubChem provides specialized programmatic interfaces such as the Power User Gateway (PUG) and PUG-REST, which enable automated querying and retrieval of HTS data for thousands of compounds [33]. This capability is particularly valuable for computational modelers and bioinformaticians building predictive models from public screening data. The PUG-REST service uses a Representational State Transfer (REST)-style interface, allowing users to construct specific URLs to retrieve data in various formats compatible with common programming languages [33].
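
As an illustration of the URL-construction style described above, the sketch below builds two PUG-REST requests: one for computed properties of a list of compounds and one for the bioassay summary of a single compound. The helper names are hypothetical, and the property names and CID are examples only. The fetch helper performs a network call and is not invoked here.

```python
from urllib.request import urlopen

PUG_REST_BASE = "https://pubchem.ncbi.nlm.nih.gov/rest/pug"


def compound_property_url(cids, properties, fmt="JSON"):
    """URL for selected computed properties of one or more compounds (CIDs)."""
    cid_part = ",".join(str(cid) for cid in cids)
    prop_part = ",".join(properties)
    return f"{PUG_REST_BASE}/compound/cid/{cid_part}/property/{prop_part}/{fmt}"


def assay_summary_url(cid, fmt="CSV"):
    """URL for the bioassay activity summary of a single compound."""
    return f"{PUG_REST_BASE}/compound/cid/{cid}/assaysummary/{fmt}"


def fetch_text(url, timeout=30):
    """Download a PUG-REST response as text (requires network access)."""
    with urlopen(url, timeout=timeout) as response:
        return response.read().decode("utf-8")


# Example CID 2244 (aspirin); swap in the compounds of interest.
print(compound_property_url([2244], ["MolecularFormula", "MolecularWeight"]))
print(assay_summary_url(2244))
```

When looping over thousands of compounds, it is worth batching CIDs into each request and throttling calls to stay within PubChem's published usage limits.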

Table 2: Key Public HTS Data Resources

| Resource | Primary Content | Access Methods | Data Scale |
|---|---|---|---|
| PubChem | Chemical structures, bioassay results | Web portal, PUG-REST API, FTP | >60 million compounds, >1 million assays |
| ChEMBL | Bioactive molecules, drug-like compounds | Web portal, API, data downloads | >2 million compounds, >1 million assays |
| BindingDB | Protein-ligand binding data | Web search, data downloads | ~1 million binding data points |
| Comparative Toxicogenomics Database (CTD) | Chemical-gene-disease interactions | Web search, data downloads | Millions of interactions |

Workflow Visualization

Diagram 1: Quantitative HTS Workflow. This diagram illustrates the sequential stages of the qHTS process, from compound and assay preparation through robotic screening, data analysis, and final probe development.

Diagram 2: Robotic Screening System Architecture. This diagram shows the integrated components of a modern robotic screening platform, highlighting the coordination between storage, liquid handling, detection modules, and robotic manipulation systems.

The Scientist's Toolkit: Essential Research Reagent Solutions

Table 3: Key Research Reagent Solutions for HTS Operations

| Reagent Category | Specific Examples | Function in HTS | Application Notes |
|---|---|---|---|
| Detection Reagents | Fluorescent dyes, Luminescent substrates, FRET pairs | Signal generation for activity measurement | Must be compatible with miniaturized formats and detection systems |
| Cell Viability Assays | MTT, Resazurin, ATP Lite | Measure cell health and proliferation | Critical for cell-based screening and toxicity assessment |
| Enzyme Substrates | Fluorogenic, Chromogenic peptides/compounds | Monitor enzymatic activity | Km values should be appropriate for assay conditions |
| Cell Signaling Reporters | Luciferase constructs, GFP variants | Pathway-specific activation readouts | Enable functional cellular assays beyond simple viability |
| Binding Assay Reagents | Radioligands, Fluorescent tracers | Direct measurement of molecular interactions | Require separation or detection of bound vs. free ligand |
| Buffer Systems | PBS, HEPES, Tris-based formulations | Maintain physiological pH and ionic strength | Optimization critical for assay performance and reproducibility |
| Positive/Negative Controls | Known agonists/antagonists, Vehicle controls | Assay validation and quality control | Included on every plate to monitor assay performance |


Emerging Paradigms: AI and Autonomous Laboratories

The integration of artificial intelligence with robotic platforms represents the next evolutionary stage in high-throughput screening and chemical discovery. Autonomous laboratories, also known as self-driving labs, combine AI, robotic experimentation, and automation technologies into a continuous closed-loop cycle that can efficiently conduct scientific experiments with minimal human intervention [9]. In these systems, AI plays a central role in experimental planning, synthesis optimization, and data analysis, dramatically accelerating the exploration of chemical space.

Recent demonstrations highlight the transformative potential of this approach. The A-Lab platform, developed in 2023, successfully synthesized 41 of 58 computationally predicted inorganic materials over 17 days of continuous operation, achieving a 71% success rate with minimal human involvement [9]. Central to its performance were machine learning models for precursor selection and synthesis temperature optimization, convolutional neural networks for XRD phase analysis, and active learning algorithms for iterative route improvement [9]. Similarly, Bayesian reasoning systems have been developed to interpret chemical reactivity using probability, enabling the autonomous rediscovery of historically important reactions including the aldol condensation, Buchwald-Hartwig amination, Heck, Suzuki, and Wittig reactions [34].
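
The closed-loop idea behind these platforms (propose a condition, run it, analyze the result, update the plan) can be shown with a toy example. The sketch below is not the A-Lab algorithm: it replaces the machine learning planner with a simple shrinking-step local search, and `simulated_yield` is a hypothetical stand-in for a robotic synthesis and characterization run.

```python
def simulated_yield(temp_c):
    """Hypothetical experiment: yield peaks at 850 C and falls off quadratically."""
    return max(0.0, 1.0 - ((temp_c - 850.0) / 300.0) ** 2)


def closed_loop(run_experiment, low, high, budget=20):
    """Propose -> run -> analyze -> update until the experiment budget is spent."""
    best_t = (low + high) / 2
    best_y = run_experiment(best_t)
    history = [(best_t, best_y)]
    seen = {best_t}
    step = (high - low) / 4
    while len(history) < budget and step > 1e-9:
        improved = False
        for candidate in (best_t - step, best_t + step):
            if candidate in seen or not (low <= candidate <= high):
                continue                      # skip repeats and out-of-range conditions
            if len(history) >= budget:
                break                         # budget exhausted mid-round
            result = run_experiment(candidate)
            seen.add(candidate)
            history.append((candidate, result))
            if result > best_y:
                best_t, best_y, improved = candidate, result, True
        if not improved:
            step /= 2                         # refine the search around the current best
    return best_t, best_y, history


best_temp, best_yield, runs = closed_loop(simulated_yield, low=500.0, high=1100.0)
print(f"best temperature ~ {best_temp:.1f} C after {len(runs)} runs")
```

In a real autonomous laboratory the proposal step would come from a learned model that weighs predicted outcome against uncertainty, and the experiment call would dispatch work to robots and instruments rather than evaluate a formula.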

Large language models (LLMs) are further expanding autonomous capabilities. Systems like Coscientist and ChemCrow demonstrate how LLM-driven agents can autonomously design, plan, and execute chemical experiments by leveraging tool-using capabilities that include web searching, document retrieval, code generation, and robotic system control [9]. These systems have successfully executed complex tasks such as optimizing palladium-catalyzed cross-coupling reactions and planning synthetic routes for target molecules [9]. The emerging paradigm of "material intelligence" embodies this convergence of artificial intelligence, robotic platforms, and material informatics, creating systems that mimic and extend how a scientist's mind and hands work [35].