Automated Purification in Synthesis: Integrated Systems Accelerating Drug Discovery


Abstract

This article explores the transformative integration of automated purification systems with synthesis platforms, a key innovation addressing critical bottlenecks in biomedical research and drug development. We examine the foundational principles of technologies like single-use TFF and HPLC-based synthesizers, detail their methodological application across biologics and small molecules, and provide troubleshooting and optimization strategies for robust implementation. By presenting validation data and comparative analyses of leading platforms, this resource equips scientists and drug development professionals with the knowledge to harness these integrated workflows, ultimately enabling faster timelines, improved reproducibility, and accelerated translation of discoveries into clinical applications.

The Rise of Integration: Core Concepts and Technologies Powering Automated Synthesis-Purification

In the relentless pursuit of accelerating drug discovery, the synthesis of novel compounds often captures the spotlight. However, for researchers and drug development professionals, the subsequent purification of these compounds frequently emerges as the most formidable and rate-limiting step. In both biologics and small molecule synthesis, purification is not merely a clean-up process; it is a critical, complex, and resource-intensive operation that dictates the pace, cost, and ultimate success of preclinical research. This application note explains why purification constitutes the primary bottleneck and provides detailed protocols for integrated, automated solutions that can alleviate this constraint.

The challenge is twofold. For biologics, the immense molecular complexity and sensitivity of molecules like monoclonal antibodies (mAbs) and recombinant proteins necessitate gentle yet precise separation from complex mixtures [1]. For small molecules, the demand for high-purity compounds to feed the iterative Design-Make-Test-Analyse (DMTA) cycle creates a relentless logistical pressure on research teams [2]. In both cases, manual purification methods are often unable to keep pace, creating a significant drag on innovation. This note frames these challenges within the context of a broader research thesis on automation, demonstrating how integrated platforms can transform this critical path from a bottleneck into a conduit for accelerated discovery.

The Purification Bottleneck: A Quantitative Analysis

The bottleneck status of purification is not anecdotal; it is grounded in quantitative data on cost and time allocation. The following table summarizes the core challenges and their impacts across therapeutic modalities.

Table 1: Quantitative Comparison of Purification Bottlenecks in Drug Development

| Parameter | Biologics Purification | Small Molecule Purification |
|---|---|---|
| Typical Cost Contribution | Accounts for up to 80% of total manufacturing costs [3] | The "Make" step (synthesis & purification) is the most costly part of the DMTA cycle [2] |
| Primary Technical Challenge | Maintaining structural integrity of large, fragile molecules during separation; overcoming low concentrations in harvest streams [3] [4] | Obtaining high-purity final products and intermediates from complex reaction mixtures, often with multi-step routes [2] |
| Impact of Bottleneck | Limits overall production speed; causes cascading delays in downstream filling and packaging; high cost of goods [3] | Slows DMTA cycle iteration, directly delaying SAR (Structure-Activity Relationship) analysis and lead optimization [2] |
| Common Bottleneck Cause | Time-consuming cleaning and validation of reusable filtration systems; buffer preparation and consumption [3] | Labor-intensive, manual chromatography and compound handling after synthesis [2] |

Automated Solutions and Experimental Protocols

To overcome these bottlenecks, research is increasingly focused on automating purification and integrating it seamlessly with upstream synthesis. The protocols below are designed for use with modern automated platforms.

Protocol for Automated Purification of Biologics Using Single-Pass Tangential Flow Filtration (SPTFF)

This protocol outlines the use of Single-Pass TFF to address the buffer volume and time constraints of conventional diafiltration for monoclonal antibodies or other recombinant proteins [3].

Table 2: Research Reagent Solutions for Automated Biologics Purification

| Item | Function |
|---|---|
| Single-Use TFF Assembly | Pre-sterilized, ready-to-use filtration module eliminates cross-contamination risk and reduces setup/cleaning time [3]. |
| Single-Pass TFF (SPTFF) Module | Achieves desired concentration or buffer exchange in a single pass, drastically reducing buffer consumption and process time [3]. |
| Digital Peristaltic Pumps | Provide precise control of flow rates and transmembrane pressure, critical for handling fragile biologic molecules [3]. |
| In-line pH & Conductivity Sensors | Enable real-time monitoring of buffer exchange efficiency and ensure process consistency within PAT (Process Analytical Technology) frameworks [3]. |

Experimental Methodology:

- System Setup: Connect a single-use SPTFF module to the protein harvest stream. Integrate digital peristaltic pumps and in-line sensors for pressure, conductivity, and pH monitoring. Ensure all components are compatible with the bioreactor output.

- Parameter Calibration: Set the feed flow rate and transmembrane pressure (TMP) according to the manufacturer's specifications for the target protein. Typical TMP values of 5-15 psi are used to minimize shear stress.

- Process Execution: Direct the protein solution through the SPTFF module. The product is concentrated and diafiltered in a single pass without recirculation. Monitor in-line sensors to confirm the target concentration and buffer composition are achieved.

- Product Recovery: Collect the purified and formulated retentate. Flush the system with a small volume of formulation buffer to maximize product recovery.

- System Disposal: Decontaminate and dispose of the single-use assembly according to biohazardous waste protocols, eliminating cleaning validation.
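The in-line transducers report feed, retentate, and permeate pressures rather than TMP itself, so the control layer has to derive TMP before comparing it with the calibration window. A minimal sketch, assuming gauge pressures in psi and the common average-TMP definition; the class and function names are hypothetical, and the default 5-15 psi window is simply the range quoted in the Parameter Calibration step, not a vendor specification:

```python
from dataclasses import dataclass


@dataclass
class PressureReading:
    """Gauge pressures (psi) from in-line transducers on one SPTFF module (hypothetical)."""
    feed_psi: float
    retentate_psi: float
    permeate_psi: float


def transmembrane_pressure(r: PressureReading) -> float:
    """Average TMP: mean of feed and retentate pressures minus permeate pressure."""
    return (r.feed_psi + r.retentate_psi) / 2.0 - r.permeate_psi


def check_tmp(r: PressureReading, low: float = 5.0, high: float = 15.0) -> str:
    """Classify the derived TMP against an operating window (assumed 5-15 psi)."""
    tmp = transmembrane_pressure(r)
    if tmp < low:
        return "below window"
    if tmp > high:
        return "above window"
    return "in window"


reading = PressureReading(feed_psi=20.0, retentate_psi=6.0, permeate_psi=2.0)
print(transmembrane_pressure(reading))  # 11.0
print(check_tmp(reading))  # in window
```

The feed-to-retentate difference (14 psi in this example) is the channel pressure drop; keeping it separate from TMP matters for SPTFF, where TMP varies along the flow path.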

Protocol for Automated Purification of Small Molecules via Integrated LC-MS and Fractionation

This protocol describes an automated workflow for the purification and analysis of small molecule libraries, directly addressing the "Make" bottleneck in the DMTA cycle [5] [2].

Table 3: Research Reagent Solutions for Automated Small Molecule Purification

| Item | Function |
|---|---|
| Liquid Handling Robot | Automates repetitive tasks like sample transfer, injection, and fraction collection, improving reproducibility and freeing scientist time [5]. |
| Preparative HPLC System | The core separation unit, capable of high-resolution chromatographic purification of complex reaction mixtures. |
| Mass Spectrometer (MS) Detector | Provides real-time, mass-directed triggering for fraction collection, ensuring only the desired product is isolated [5]. |
| 96-Well Collection Plates | Standardized format for efficient collection and subsequent downstream processing (e.g., evaporation, dosing) in screening assays. |

Experimental Methodology:

- Synthesis Integration: The liquid handling robot receives crude reaction mixtures in a 96-well plate from the synthesis platform.

- Chromatography Setup: A generic, high-resolution preparative HPLC method is programmed (e.g., 5-95% organic modifier over 10-15 minutes).

- MS-Triggered Fractionation: The eluent from the HPLC is split to the MS detector. A method is set to trigger fraction collection when the ion count for the target mass ([M+H]+ or other adduct) crosses a predefined threshold.

- Automated Collection: The liquid handler collects the MS-triggered peak into designated wells of a new 96-well collection plate.

- Data Documentation: The system automatically records chromatograms, MS spectra, and fraction locations for each sample, ensuring FAIR (Findable, Accessible, Interoperable, Reusable) data principles [2].
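The triggering rule in the MS-Triggered Fractionation step can be illustrated with a short sketch. It assumes centroided spectra delivered as (retention time, {m/z: intensity}) pairs in time order, a singly charged [M+H]+ target, and an illustrative 0.5 m/z extraction window and 1e5-count threshold; commercial systems apply equivalent rules in vendor software, and the function names here are hypothetical:

```python
PROTON_MASS = 1.007276  # Da


def mh_plus(monoisotopic_mass: float) -> float:
    """m/z of the singly protonated [M+H]+ ion."""
    return monoisotopic_mass + PROTON_MASS


def trigger_windows(scans, target_mz, tol=0.5, threshold=1e5):
    """Return (start, end) retention-time windows where the extracted ion
    count (XIC) for target_mz +/- tol is at or above threshold."""
    windows = []
    start = None
    last_t = None
    for t, spectrum in scans:
        xic = sum(i for mz, i in spectrum.items() if abs(mz - target_mz) <= tol)
        if xic >= threshold and start is None:
            start = t  # rising edge: open the collection valve
        elif xic < threshold and start is not None:
            windows.append((start, t))  # falling edge: close the valve
            start = None
        last_t = t
    if start is not None:  # target still eluting when the run ends
        windows.append((start, last_t))
    return windows


# Hypothetical target with monoisotopic mass 194.0804 Da; the 300.1 ion is a co-eluting impurity.
scans = [
    (1.0, {195.09: 1e3}),
    (1.1, {195.09: 5e5}),
    (1.2, {195.09: 8e5, 300.1: 2e6}),
    (1.3, {195.09: 2e4}),
]
print(trigger_windows(scans, mh_plus(194.080376)))  # [(1.1, 1.3)]
```

Because only ions near the target m/z contribute to the XIC, the intense co-eluting impurity does not open the valve, which is the practical advantage of mass-directed over UV-directed collection.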

System Workflow Visualization

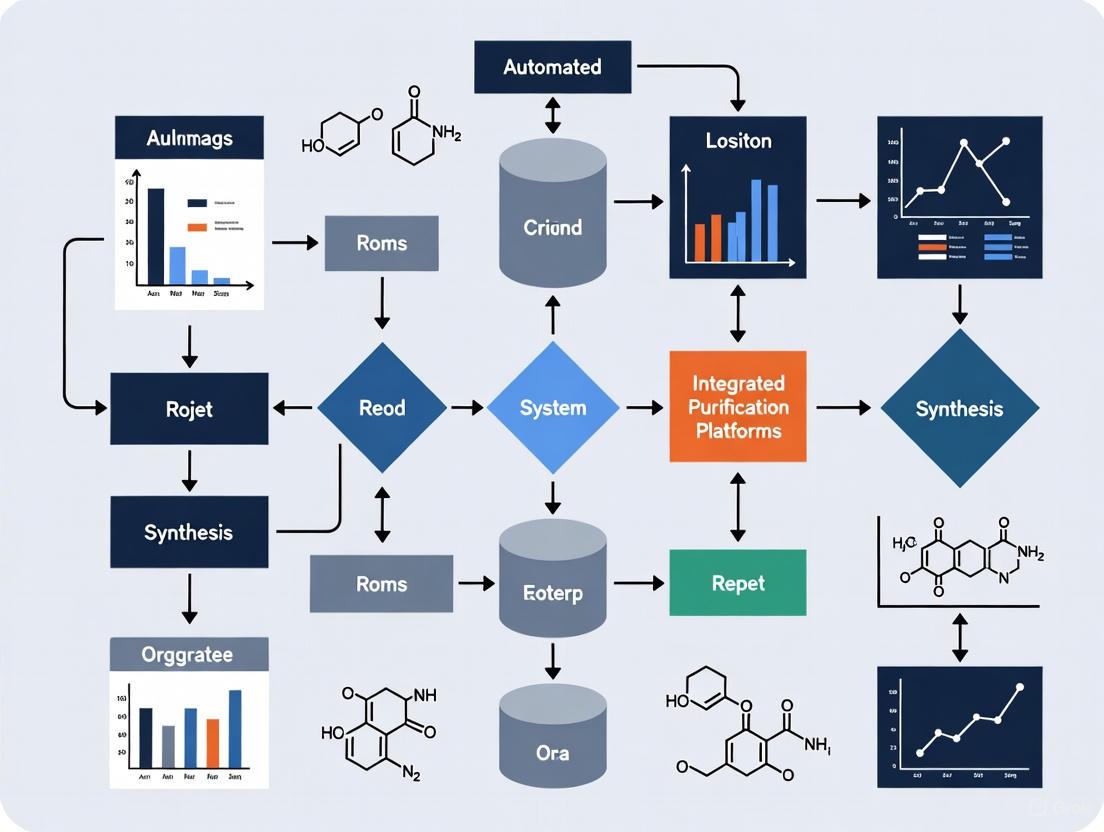

The following diagram illustrates the logical flow of the integrated, automated purification platforms for both biologics and small molecules, highlighting the critical role of automation and real-time analytics.

Diagram 1: Integrated Automated Purification Workflows. The workflows for small molecules (top, yellow) and biologics (bottom, green) demonstrate parallel concepts of integration, automation, and data-rich feedback (red notes). PAT: Process Analytical Technology.

Purification remains the critical path in drug substance development not because of a failure in technique, but because of the escalating demands of modern therapeutics. The path forward for research into automated systems lies in the continued integration of purification with synthesis, the adoption of single-use technologies to eliminate downtime, and the implementation of data-rich, feedback-controlled processes. By treating purification not as a standalone, offline operation but as an integrated component of a continuous workflow, researchers can effectively dismantle this persistent bottleneck, thereby accelerating the delivery of innovative therapies to patients.

The paradigm of biopharmaceutical development is shifting from standalone, manual operations towards fully integrated, automated systems. This transition is built upon core technological pillars that seamlessly connect synthesis, purification, and analysis into continuous workflows. The integration of Single-Use Tangential Flow Filtration (TFF) with advanced purification and synthesis platforms represents a foundational advancement, enabling unprecedented efficiencies in both upstream bioprocessing and downstream purification. These technologies are no longer isolated solutions but critical components within a broader ecosystem of AI-driven discovery and automated biomanufacturing [6] [7].

The drive for integration is underpinned by demonstrated benefits: reduced labor (by 50% or more), dramatically lower buffer and water consumption (by up to 75%), and the elimination of cleaning validation requirements, which collectively accelerate process development and scale-up [8] [9]. For multiproduct facilities, these single-use systems provide a simple and effective method to mitigate cross-contamination risk, enhancing facility flexibility [8]. This application note details the protocols and quantitative performance data for these core technologies, providing a framework for their implementation within modern, automated drug development pipelines.

Technology-Specific Application Notes & Protocols

Single-Use Tangential Flow Filtration (TFF)

Application Note: Single-use TFF has transitioned from a niche technology to a standard for clinical-stage manufacturing and multiproduct facilities, particularly for processes involving monoclonal antibodies (mAbs) and conjugate vaccines [8] [10]. Its primary value proposition lies in simplifying product changeover and reducing total operating costs for campaigns with relatively few batches.

A systematic evaluation comparing a novel Pellicon single-use TFF capsule against traditional multi-use cassettes for the purification of an activated polysaccharide demonstrated equivalent performance in key metrics. The study confirmed that the single-use format provides comparable flux, yield, and clearance of reaction residuals (e.g., periodate/iodate and quenching reagents) while offering significant operational advantages [10].

Table 1: Performance Comparison of Reusable vs. Single-Use TFF Capsules

| Performance Metric | Reusable Cassette | Single-Use Capsule |
|---|---|---|
| Maximum Flux (LMH) | Equivalent performance, reaching plateau at ~25 psi TMP [10] | Equivalent performance, reaching plateau at ~25 psi TMP [10] |
| Product Yield | High (e.g., 96% for MAb X) [8] | Equivalent high yield (e.g., 96% for MAb X) [8] |
| Clearance of Residuals | Effective | Effective, with comparable sieving coefficients [10] |
| Flush Volume Requirement | Larger (≥20 L/m²) [10] | Smaller [10] |
| Pre-use Preparation | Requires cleaning, sanitization, and flushing | Pre-sanitized; requires flushing only (~85% reduction in solution volume) [8] [10] |
| Ease of Setup | Requires compression holder | No compression holder needed; plug-and-play [10] |

Protocol: Implementation of Single-Use TFF for Buffer Exchange

- System Flush and Equilibration: Flush the pre-sanitized single-use TFF capsule with Water for Injection (WFI) using the vendor-recommended flush volume (smaller than the ≥20 L/m² required for reusable cassettes). Equilibrate the capsule with the feed matrix buffer until pH and conductivity of the permeate and retentate streams match the equilibration buffer [10].

- Process Parameter Setting: Set the cross-flow rate to the target value (e.g., 3.5 L/min/m²). For an mAb concentration process, initiate diafiltration against the final formulation buffer [8] [10].

- Diafiltration: Process the solution for the target number of diavolumes (e.g., 20 diavolumes). Maintain constant operating parameters throughout [10].

- Product Recovery: Upon completion, recover the purified product from the retentate line. To maximize yield, perform a system flush using 1 hold-up volume of diafiltration buffer, recirculate, and collect [10].
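The number of diavolumes chosen in the Diafiltration step sets both residual clearance and buffer consumption. Under the textbook assumptions of constant-volume diafiltration (well-mixed retentate, constant sieving coefficient S, with S = 1 for a freely permeating small solute such as periodate), the fraction remaining after N diavolumes is C/C0 = exp(-S·N). A minimal sketch; the function names and example values are illustrative and not taken from the cited study:

```python
import math


def residual_fraction(diavolumes: float, sieving: float = 1.0) -> float:
    """Fraction of a solute remaining after constant-volume diafiltration: exp(-S * N)."""
    return math.exp(-sieving * diavolumes)


def diavolumes_needed(target_fraction: float, sieving: float = 1.0) -> float:
    """Diavolumes required to reduce a solute to target_fraction of its initial level."""
    return -math.log(target_fraction) / sieving


def buffer_volume_l(retentate_volume_l: float, diavolumes: float) -> float:
    """Diafiltration buffer consumed when retentate volume is held constant."""
    return retentate_volume_l * diavolumes


# 20 diavolumes, as in the protocol, on an assumed 5 L retentate:
print(residual_fraction(20))      # ~2.1e-9 of a freely permeating residual remains
print(buffer_volume_l(5.0, 20))   # 100.0 L of buffer
print(diavolumes_needed(1e-3))    # ~6.9 DV would already give 1000-fold clearance
```

The same expression with a small product sieving coefficient (for example an assumed S = 0.001) estimates product loss to permeate, about 2% over 20 diavolumes, which is why the diavolume target is a trade-off rather than "as many as possible".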

Single-Pass Tangential Flow Filtration (SPTFF)

Application Note: SPTFF enables continuous, inline concentration and buffer exchange, aligning with the industry's move towards continuous bioprocessing. It is particularly valuable for debottlenecking downstream operations, reducing tankage constraints, and minimizing protein aggregation caused by repeated pump passes in batch systems [11]. SPTFF modules are designed with long path lengths and often a staged configuration to handle the large variations in flow rate, protein concentration, and transmembrane pressure that occur at high conversions.

The performance of SPTFF is governed by a balance between hydrodynamic conditions and the physical properties of the product. A model accounting for axial variation in pressure and flux, as well as key protein properties like viscosity and osmotic pressure, has been developed and validated, providing a robust framework for module design and process optimization [11].

Table 2: Key Design and Performance Parameters for SPTFF of mAbs

| Parameter | Impact on Performance & Design |
|---|---|
| Module Geometry | Staged designs (e.g., Cadence) with decreasing parallel flow channels balance retentate flow rate reduction at high conversions [11]. |
| Path Length | Long path lengths are essential for achieving high conversion in a single pass [11]. |
| Transmembrane Pressure (TMP) | High conversion leads to large axial TMP variation; optimal design must account for this to maintain efficiency [11]. |
| Protein Concentration | Directly affects osmotic pressure and viscosity, which in turn limit the achievable flux and concentration factor [11]. |
| Feed Flux | A critical parameter; studies show operation at 34, 68, and 136 L/h/m² is feasible for mAb concentration [11]. |

Protocol: Inline Concentration of a Monoclonal Antibody Using SPTFF

- System Configuration: Connect the SPTFF module (e.g., Pall Cadence or a Pellicon 3 cassette series) inline after the upstream unit operation (e.g., a chromatography column outlet).

- Parameter Calibration: Based on the feed stream properties (mAb concentration, buffer composition) and target concentration factor, use established models to determine the optimal feed flow rate and operating pressure profile [11].

- Continuous Processing: Direct the process fluid from the previous step through the SPTFF module. The permeate is continuously removed, and the concentrated retentate is output for the next processing step.

- Monitoring and Control: Monitor the pressure drop across the module and the filtrate flux to ensure stable operation and confirm that the target concentration factor is achieved.
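Any model used in the Parameter Calibration step must satisfy a simple steady-state volume balance. Assuming complete protein retention, the concentration factor equals the ratio of feed to retentate flow (CF = Q_feed / Q_retentate), and the conversion, the fraction of feed leaving as permeate, is X = 1 - 1/CF. The sketch below, with hypothetical names and a feed flux of 68 L/h/m² taken from the values listed above, deliberately omits the axial pressure, viscosity, and osmotic-pressure effects captured by the validated model [11]; it only shows the bookkeeping:

```python
from dataclasses import dataclass


@dataclass
class SptffBalance:
    feed_flow_lph: float
    retentate_flow_lph: float
    permeate_flow_lph: float
    conversion: float
    avg_permeate_flux_lmh: float


def sptff_balance(feed_flux_lmh: float, area_m2: float,
                  concentration_factor: float) -> SptffBalance:
    """Steady-state volume balance for single-pass concentration.

    Assumes complete protein retention, so CF = Q_feed / Q_retentate.
    """
    if concentration_factor < 1:
        raise ValueError("concentration factor must be >= 1")
    q_feed = feed_flux_lmh * area_m2          # L/h entering the module
    q_ret = q_feed / concentration_factor     # L/h leaving as concentrate
    q_perm = q_feed - q_ret                   # L/h removed as permeate
    conversion = q_perm / q_feed              # equals 1 - 1/CF
    return SptffBalance(q_feed, q_ret, q_perm, conversion,
                        conversion * feed_flux_lmh)


# Assumed 0.5 m² module, 5-fold concentration:
print(sptff_balance(68.0, 0.5, 5.0))
# feed 34 L/h, retentate ~6.8 L/h, permeate ~27.2 L/h, conversion ~0.8, mean flux ~54.4 LMH
```

High concentration factors push conversion toward 1, so most of the membrane area operates at low retentate flow and high protein concentration; this is why staged module geometries and long path lengths appear in the design parameters above.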

AI-Integrated Automated Synthesis Platforms

Application Note: The third pillar of modern drug development is the AI-powered, automated synthesis platform. These systems close the loop between compound design, synthesis, and purification, liberating chemists from routine manual tasks. Platforms such as the "Chemputer" and "AI-Chemist" can execute complex multi-step syntheses, including the production of active pharmaceutical ingredients (APIs) like angiotensin-converting enzyme (ACE) inhibitors, with higher yields and purity than traditional manual methods [12].

The integration of synthesis, purification, and sample management into a single platform, directed by a central robot, dramatically accelerates the generation of pharmaceutical candidates [13]. The advent of generalized autonomous platforms, which combine machine learning, large language models, and biofoundry automation, now allows for the engineering of complex biologics like enzymes without the need for human intervention, judgement, or domain expertise [14].

Protocol: Automated Synthesis-Purification for Small Molecule Generation

This protocol outlines a workflow for an integrated flow chemistry–synthesis–purification platform [13].

- Synthesis in Flow Reactors: The synthesis is initiated in automated flow chemistry modules. These reactors offer precise control over reaction parameters such as temperature, residence time, and mixing.

- Robotic Handoff: A central Mitsubishi robot transfers the reaction mixture from the synthesis station to the purification station.

- Inline Purification and Analysis: The crude product is purified using an inline High-Performance Liquid Chromatography (HPLC) system. Fractions are automatically collected based on UV signal thresholds.

- Sample Dispensing and Management: The purified samples are dispensed by the robot into microtiter plates for subsequent purity and quantification analysis (e.g., LC-MS). The robot also manages the "dry-down" of samples and the generation of aliquots for biological screening.
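The robotic hand-offs in this protocol amount to a fixed sequence of stations plus a plate map. A minimal sketch of that bookkeeping, assuming row-major 96-well numbering and six stages mirroring the steps above; the class and function names are hypothetical and are not part of any robot vendor's API:

```python
from enum import Enum


class Stage(Enum):
    SYNTHESIS = 1
    TRANSFER = 2
    PURIFICATION = 3
    DISPENSE = 4
    DRY_DOWN = 5
    ALIQUOT = 6


ROWS = "ABCDEFGH"


def well_id(index: int) -> str:
    """Map a 0-based sample index to a 96-well position, row-major (A1..A12, B1..H12)."""
    if not 0 <= index < 96:
        raise ValueError("index must be in 0..95")
    row, col = divmod(index, 12)
    return f"{ROWS[row]}{col + 1}"


class SampleTracker:
    """Rejects any hand-off that skips or repeats a stage."""

    def __init__(self) -> None:
        self.stage: dict[str, Stage] = {}

    def advance(self, sample: str, to: Stage) -> None:
        current = self.stage.get(sample)
        expected = 1 if current is None else current.value + 1
        if to.value != expected:
            origin = current.name if current else "start"
            raise RuntimeError(f"{sample}: cannot move to {to.name} from {origin}")
        self.stage[sample] = to
```

Keeping the stage order explicit means a failed purification cannot silently pass a crude sample on to dispensing, and the plate map gives each purified compound a reproducible location for the later LC-MS and screening steps.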

The Integrated Workflow

The synergy between the core technological pillars creates a powerful, continuous pipeline for drug substance development. The workflow below illustrates how these components integrate within an automated ecosystem.

Diagram 1: Integrated Automated Workflow

The Scientist's Toolkit: Essential Research Reagent Solutions

Successful implementation of the described protocols relies on a set of key reagents and materials. The following table details essential components for these automated platforms.

Table 3: Key Research Reagent Solutions for Automated Purification & Synthesis

| Item | Function & Application |
|---|---|
| Single-Use TFF Capsule (e.g., Pellicon Capsule with Ultracel membrane) | Pre-sanitized, plug-and-play device for final buffer exchange and concentration; eliminates cleaning validation and reduces setup steps [10]. |
| SPTFF Module (e.g., Cadence Inline Concentrator) | Enables continuous, single-pass ultrafiltration and diafiltration for process intensification and integration [11]. |
| Regenerated Cellulose (RC) or Polyethersulfone (PES) Membranes | The core filtration media; selection of molecular weight cut-off (MWCO) and membrane chemistry (e.g., RC for low binding) is critical for yield and product quality [10]. |
| HiFi Assembly Mix | Enzyme mix for high-fidelity DNA assembly in automated biofoundries; crucial for error-free variant construction in autonomous enzyme engineering campaigns [14]. |
| Automated Synthesis Cartridges | Pre-packaged reagents or catalysts for flow-based synthesis platforms (e.g., iterative cross-coupling); enable reproducible and automated small molecule synthesis [12]. |

The convergence of FAIR (Findable, Accessible, Interoperable, Reusable) data principles and Artificial Intelligence (AI) is fundamentally transforming manufacturing, creating a pathway to fully agile and autonomous operations. This paradigm shift is particularly critical for advanced sectors like integrated synthesis and purification platforms, where traditional data silos and manual processes create significant bottlenecks. This Application Note details a practical framework for implementing a data-driven workflow, demonstrating how FAIR-compliant data infrastructure serves as the foundational catalyst for AI-driven optimization. The protocol describes a closed-loop system in which AI models continuously optimize purification parameters based on real-time, FAIR-formatted sensor data; manufacturers deploying comparable smart manufacturing initiatives report 10-20% improvements in production output and 10-15% increases in unlocked capacity [15]. By providing structured methodologies, quantitative performance data, and standardized reagent solutions, this document equips researchers and manufacturing professionals to accelerate their transition to evidence-based, adaptive manufacturing systems that can dynamically respond to volatile supply chains and complex product demands.

The modern manufacturing landscape, especially in domains requiring integrated synthesis and purification, is characterized by unprecedented volatility and complexity. "Industry 4.0" has promised agile, data-driven operations, yet many organizations remain hindered by inaccessible and poorly structured data, which is unusable for advanced analytics [16] [15]. The FAIR principles directly address this challenge by providing a framework to make data machine-actionable. In an AI-driven world, simply having "open" data is insufficient; data must be richly documented, linked to provenance, and structured for both human and machine consumption to be truly "AI-ready" [17].

The concept of "FAIR Squared" (FAIR²) extends the original principles, defining a formal specification that ensures research and manufacturing data are not only reusable but also structured for deep scientific reuse and aligned with Responsible AI principles [17]. This is achieved by making data compatible with modern AI-ready formats like MLCommons Croissant and integrating essential elements for scientific rigor and reproducibility [17]. For manufacturing, this translates into a robust data foundation that enables key AI applications such as predictive maintenance, AI-optimized production scheduling, and autonomous quality control, ultimately unlocking the door to fully agile manufacturing operations [18].

Protocol for Implementing a FAIR and AI-Driven Agile Workflow

This protocol outlines the complete integration of FAIR data management with an AI-driven control system, specifically for an automated synthesis and purification platform. The entire workflow is designed as a closed-loop system where process data is continuously captured, standardized, and fed back into AI models for ongoing optimization.

The diagram below illustrates the integrated, closed-loop workflow for agile manufacturing, from data acquisition to process optimization.

Materials and Equipment

Research Reagent Solutions

The table below details the essential materials and their functions for establishing an automated synthesis and purification platform.

Table 1: Essential Research Reagent Solutions for Automated Synthesis and Purification

| Item | Function/Application | Key Characteristics |
|---|---|---|
| Single-Use TFF Assembly | Tangential Flow Filtration for biomolecule purification [3]. | Pre-sterilized, integrated sensors, reduces contamination risk and changeover time. |
| Single-Pass TFF System | High-volume concentration and buffer exchange [3]. | Eliminates recirculation loop, cuts buffer consumption, ready for continuous processing. |
| Digital Peristaltic Pumps | Precise fluid transfer and pressure control [3]. | High flow accuracy, live sensor readings, protects fragile molecules (e.g., mAbs, viral vectors). |
| Process Analytical Technology (PAT) | In-line monitoring of Critical Process Parameters (CPPs) [3]. | Tracks protein concentration, pressure, conductivity; enables real-time release. |
| Advanced Membrane Materials | Molecular separation in TFF processes [3]. | High product recovery, low fouling, compatible with diverse biologics. |
| AI-Driven Scheduling Software | Finite capacity production planning and scheduling [18]. | Generates KPI-optimized schedules, simulates "what-if" scenarios, enables rapid replanning. |

Step-by-Step Procedure

Phase 1: Data Acquisition and FAIRification (Duration: 4-6 hours per batch)

- Instrument Integration and Data Capture: Connect all sensors from the purification system (e.g., pressure transducers, flowmeters, conductivity and pH probes) to a Unified Namespace architecture. This ensures a single source of truth for data across the operation [15].

- Automated Metadata Generation: Utilize an AI Data Steward to automatically generate a data dictionary, assign Universally Unique Identifiers (UUIDs) to each data asset, and document provenance, linking data to the specific equipment and batch run [17] [16].

- FAIR² Data Package Creation: Structure the dataset and its metadata into a FAIR² Data Package using the MLCommons Croissant format. This creates an "AI-ready" resource that is richly documented and linked to methodology, ensuring interoperability with major ML frameworks like TensorFlow and PyTorch [17].
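The FAIRification steps can be illustrated with a small wrapper that gives each batch's sensor data a persistent identifier, provenance, and a machine-readable data dictionary. This is a hedged sketch using only the Python standard library: it is not a Croissant-conformant file (Croissant is a JSON-LD vocabulary with its own required properties, and MLCommons provides the mlcroissant library for producing and validating it), and the field names, unit map, and function name are hypothetical:

```python
import json
import uuid
from datetime import datetime, timezone

# Hypothetical channel-to-unit map; a real deployment would read units
# from the instrument configuration rather than hard-coding them.
UNITS = {"tmp_psi": "psi", "flow_lpm": "L/min", "conductivity_mScm": "mS/cm", "ph": "pH"}


def fair_record(readings: list[dict], equipment_id: str, batch_id: str) -> dict:
    """Wrap raw sensor readings with a UUID, provenance, and a data dictionary."""
    fields = sorted({k for r in readings for k in r})
    return {
        "id": f"urn:uuid:{uuid.uuid4()}",
        "provenance": {
            "equipment_id": equipment_id,
            "batch_id": batch_id,
            "created_utc": datetime.now(timezone.utc).isoformat(),
        },
        "data_dictionary": [
            {
                "name": f,
                "unit": UNITS.get(f, "unknown"),
                # Python type of the first reading that contains this field
                "type": type(next(r[f] for r in readings if f in r)).__name__,
            }
            for f in fields
        ],
        "records": readings,
    }


rec = fair_record([{"tmp_psi": 11.0, "ph": 7.2}, {"tmp_psi": 11.4, "ph": 7.1}],
                  equipment_id="SPTFF-01", batch_id="B-0425")
print(json.dumps(rec["data_dictionary"], indent=2))
```

The identifier, provenance, and dictionary travel with the data, so a downstream model or a human reviewer can interpret each column without consulting the original operator.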

Phase 2: AI Processing and Optimization (Duration: 5-15 minutes per optimization cycle)

- Data Aggregation and Model Ingestion: Stream the FAIR-formatted data from the unified data lake to the AI analytics platform. The structured nature of the data minimizes the need for manual pre-processing.

- AI Model Execution: Run pre-trained machine learning models to analyze the real-time process data against historical performance benchmarks. The models predict optimal setpoints for key parameters (e.g., transmembrane pressure, cross-flow rate, diafiltration volume) to maximize yield and purity.

- Command Generation: The AI system generates and transmits optimized control commands (e.g., pump speeds, valve positions) to the Automated Control System.
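Operating TFF near the knee of the flux-versus-TMP curve is a common heuristic: beyond the knee, extra pressure adds little flux and tends to promote fouling. The sketch below uses that heuristic as a transparent stand-in for the pre-trained model in the AI Model Execution step, then packages the result for the Command Generation step. The history format, the 95% fraction, the safety bounds, and the command schema are all assumptions for illustration:

```python
def select_tmp_setpoint(history, fraction_of_max=0.95, bounds=(5.0, 30.0)):
    """Pick the lowest TMP whose observed flux reaches a fraction of the plateau flux.

    history: list of (tmp_psi, flux_lmh) pairs from previous runs.
    Rule-based stand-in for a trained model; the result is clipped to safety bounds.
    """
    if not history:
        raise ValueError("history must not be empty")
    max_flux = max(flux for _, flux in history)
    # Non-empty: the point with max_flux always qualifies.
    candidates = sorted(tmp for tmp, flux in history if flux >= fraction_of_max * max_flux)
    low, high = bounds
    return min(max(candidates[0], low), high)


def make_command(tmp_setpoint):
    """Package the setpoint as a message for the control system (hypothetical schema)."""
    return {"parameter": "tmp_psi", "setpoint": tmp_setpoint, "source": "optimizer"}


# Illustrative flux-TMP history with a plateau near 25 psi:
history = [(5, 20.0), (10, 35.0), (15, 45.0), (20, 52.0), (25, 55.0), (30, 55.5)]
print(make_command(select_tmp_setpoint(history)))
# {'parameter': 'tmp_psi', 'setpoint': 25, 'source': 'optimizer'}
```

Clipping to fixed bounds keeps the optimizer's suggestion inside a pre-validated operating range; a learned model would replace `select_tmp_setpoint` but should still pass through the same guard.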

Phase 3: Process Execution and Validation (Duration: Process dependent)

- Automated Process Execution: The control system executes the purification run (e.g., using Single-use or Single-pass TFF) based on the AI-optimized parameters [3].

- Real-Time Validation and Feedback: Use in-line Process Analytical Technology (PAT), such as IR spectroscopy or automated TLC, to monitor Critical Quality Attributes (CQAs) [12] [3]. The results from this validation step are fed back into the data acquisition phase, closing the loop and providing new, labeled data for continuous improvement of the AI models.
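One simple way to turn in-line CQA measurements into labeled feedback is a Shewhart-style control check against historical runs. A minimal sketch, assuming a scalar CQA such as purity in percent, at least two historical values, and conventional 3-sigma limits; the function names and record layout are hypothetical:

```python
import statistics


def control_limits(history, k=3.0):
    """Shewhart-style limits: mean +/- k sample standard deviations of past results."""
    mean = statistics.fmean(history)
    sd = statistics.stdev(history)  # requires at least two values
    return mean - k * sd, mean + k * sd


def review_result(value, history, k=3.0):
    """Label a new CQA measurement so it can be stored as training data."""
    low, high = control_limits(history, k)
    in_control = low <= value <= high
    return {
        "value": value,
        "label": "in_control" if in_control else "out_of_control",
        "limits": (low, high),
    }


purity_history = [98.1, 98.4, 97.9, 98.2, 98.0]  # illustrative purity results (%)
print(review_result(98.3, purity_history)["label"])  # in_control
print(review_result(96.5, purity_history)["label"])  # out_of_control
```

Both labels are useful to the models: in-control runs refine the predicted optimum, while out-of-control runs mark regions of parameter space the optimizer should avoid.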

Results and Performance Data

Smart manufacturing initiatives of the kind this workflow implements have yielded significant and measurable improvements across key manufacturing performance indicators. The data below, synthesized from industry deployments and surveys, quantifies these benefits.

Table 2: Quantitative Performance Improvements from AI and Smart Manufacturing Initiatives

| Key Performance Indicator (KPI) | Reported Improvement | Context & Source |
|---|---|---|
| Production Output | 10-20% | Average net impact reported by manufacturers deploying smart technologies [15]. |
| Unlocked Capacity | 10-15% | Capacity freed up through efficiency gains from smart manufacturing initiatives [15] [18]. |
| Employee Productivity | 7-20% | Improvement in workforce productivity due to automation and AI decision support [15]. |
| Buffer Consumption | Significant Reduction | Single-pass TFF dramatically cuts buffer use vs. traditional diafiltration [3]. |
| Scheduling Efficiency | Top Investment Priority | 35% of manufacturers rank advanced AI-driven scheduling as a top-2 investment priority to address planning complexities [15]. |

Beyond these quantitative metrics, qualitative operational benefits include a drastic reduction in manual data cleaning, the ability to perform rapid "what-if" analyses for production planning, and enhanced reproducibility due to standardized, FAIR data and automated workflows [7] [18].

Discussion

Strategic Implications and Analysis

The integration of FAIR data principles with AI is not merely a technical upgrade but a strategic imperative that redefines manufacturing agility. This approach directly addresses the "replication crisis" in scientific manufacturing, where inconsistent data and undocumented methods lead to irreproducible results and lost value [16]. By making data AI-ready, organizations can transition from reactive problem-solving to predictive and adaptive operations.

A key outcome is the emergence of the "AI co-pilot" for production planning. Faced with a shortage of skilled planners and increasing market volatility, nearly 35% of manufacturers are prioritizing investments in AI-driven scheduling systems [15] [18]. These tools do not replace human experts but augment them, generating multiple KPI-optimized schedule scenarios (e.g., maximizing on-time delivery vs. minimizing changeovers) for planners to select from, thereby elevating their role from number-crunchers to strategic decision-makers.

Furthermore, this data-driven foundation is crucial for overcoming the traditional "big data barrier" that has locked small and mid-sized manufacturers out of the AI revolution. Modern AI platforms, fed by consistent and well-structured FAIR data, can deliver value without requiring decades of historical data, making advanced optimization accessible to a broader range of organizations [18].

Technological Integration Pathways

The successful implementation of this workflow hinges on several core technologies. The adoption of a Unified Namespace and data standards simplifies data management and creates the necessary agility for real-time analytics [15]. In purification, the shift towards single-use and single-pass TFF is critical for achieving the required speed and flexibility, reducing changeover times from days to hours and enabling continuous processing [3].

The diagram below illustrates the logical architecture that connects FAIR data to manufacturing outcomes through AI, creating a virtuous cycle of improvement.

The transition to fully agile manufacturing is inextricably linked to the maturation of a data-driven workflow built upon the FAIR principles and enabled by AI. This Application Note provides a protocol for establishing this workflow and argues that the deliberate structuring of data to be Findable, Accessible, Interoperable, and Reusable is not an administrative burden but a critical strategic investment. It is the catalyst that unlocks the full potential of AI, transforming manufacturing from a static, sequential process into a dynamic, self-optimizing system capable of responding with unprecedented speed and efficiency to the demands of modern production and complex therapeutic development.

The efficiency of modern drug discovery is critically dependent on the rapid execution of the Design-Make-Test-Analyse (DMTA) cycle. Within this framework, the "Make" phase—the synthesis and purification of target compounds—often represents the most significant bottleneck [2]. The integration of automated purification systems with synthesis platforms is therefore a pivotal innovation, leveraging advanced reactors, sophisticated sensors, automated fraction collectors, and intelligent control software to accelerate this process [2] [19]. This document details the key hardware and software components that constitute these integrated systems, providing application notes and protocols to support their implementation in research laboratories focused on drug development.

Key System Components and Their Functions

Automated purification systems are cyber-physical systems that combine specialized hardware for physical processes with software for digital oversight and control. The synergy between these components enables high-throughput, reproducible, and data-rich experimentation.

Table 1: Core Components of Automated Purification Systems

| Component Category | Specific Examples / Models | Key Functions | Technical Specifications |
|---|---|---|---|
| Reactors | Continuous Flow Reactors (e.g., Vapourtec R Series) [20] | Enable reproducible, scalable synthesis with superior heat/mass transfer [21]. | Flow rates from µL/min to mL/min; temperature control from sub-ambient to >150°C; pressure resistance up to 20 bar [20]. |
| Sensors | UV/Vis Spectrophotometers [20] | Real-time reaction monitoring and product detection. | Flow cells with path lengths of 2-10 mm; wavelength range 200-800 nm. |
| | Mass Spectrometers (MS) [19] | Provides definitive compound identification. | Coupled with HPLC/SFC; electrospray ionization (ESI). |
| | Charged Aerosol Detectors (CAD) [19] | Universal detection for non-chromophoric compounds. | Compatible with gradient elution. |
| Fraction Collectors | Gilson GX-271, GX-241 [20] | Automated collection of purified compound bands. | Compatible with microplates, tubes, and bottles; time-, peak-, or volume-based collection modes. |
| | Teledyne Foxy R2 [22] | High-throughput fraction collection for HPLC. | RFID rack detection; flow rates up to 125 mL/min; dual rack capacity for high sample numbers. |
| Control & Data Software | Vendor-Specific Control Software (e.g., Vapourtec) [20] | Direct hardware control and basic data logging. | Enables real-time reaction list modification and data saving [20]. |
| | Laboratory Information Management Systems (LIMS - e.g., SAPIO LIMS) [19] | Centralized sample tracking, data management, and workflow orchestration. | Customizable to specific project needs (small molecules, peptides, PROTACs). |
| | Data Processing Tools (e.g., Analytical Studio) [19] | Automated processing of chromatographic data (DAD, MS, CAD). | Accelerates decision-making for purification and quality control. |

Integrated Experimental Protocol: High-Throughput Purification for Drug Discovery

This protocol outlines a standardized workflow for the automated analysis and purification of compound libraries, integrating the components described above. It is adapted from established high-throughput purification (HTP) platforms used in industrial R&D settings [19].

Protocol Objectives and Applications

- Primary Objective: To provide a robust, scalable method for the purification of crude reaction mixtures from medicinal chemistry campaigns, enabling rapid compound delivery for biological testing.

- Applications: Supporting the DMTA cycle by purifying small molecules, peptides, and PROTACs on a milligram to gram scale using Reversed-Phase High-Performance Liquid Chromatography-Mass Spectrometry (RP-HPLC-MS) and/or Supercritical Fluid Chromatography-Mass Spectrometry (SFC-MS) [19].

- Key Outcome: Delivery of purified compounds as dimethyl sulfoxide (DMSO) solutions ready for biological assay distribution, with a purity threshold of >95% as confirmed by orthogonal analytical methods [19].

Detailed Step-by-Step Methodology

Step 1: Sample Submission and Registration

- Procedure: The chemist submits crude samples to the HTP platform via the Laboratory Information Management System (LIMS). Each sample is registered with a unique identifier, and relevant metadata (e.g., project code, expected mass, chemical structure) is recorded.

- Critical Parameters: Accurate sample identification and tracking through the LIMS is essential for workflow integrity [19].

Step 2: Pre-Purification Analysis (PreQC)

- Procedure:

- Method Scouting: An automated analytical LC-MS or SFC-MS system screens each sample using a set of generic fast gradients and orthogonal stationary phases (e.g., C18, phenyl, HILIC) to determine the optimal separation conditions [19].

- Data Analysis: The raw chromatographic data (DAD, MS) is automatically processed by data analysis software (e.g., Analytical Studio). The software identifies the target compound peak and assesses the complexity of the mixture.

- Critical Parameters: Selection of mobile phase modifiers (e.g., formic acid, ammonium hydroxide) to influence selectivity and peak shape. The goal is to achieve a resolution (Rs) of >1.5 for the target compound from major impurities [19].
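Once retention times and peak widths have been extracted from the scouting runs, the Rs > 1.5 criterion reduces to simple arithmetic. The sketch below assumes baseline (tangent) peak widths and the textbook relation Rs = 2(tR2 - tR1)/(w1 + w2); the function names and the example peaks are hypothetical and are not part of Analytical Studio or any vendor API.

```python
import math
from typing import Iterable, Tuple

# (retention time, baseline peak width), both in the same unit, e.g. minutes
Peak = Tuple[float, float]


def resolution(a: Peak, b: Peak) -> float:
    """Rs = 2 * |tR2 - tR1| / (w1 + w2), using baseline (tangent) widths."""
    (t1, w1), (t2, w2) = a, b
    if w1 <= 0 or w2 <= 0:
        raise ValueError("peak widths must be positive")
    return 2.0 * abs(t2 - t1) / (w1 + w2)


def worst_resolution(target: Peak, impurities: Iterable[Peak]) -> float:
    """Smallest Rs between the target and any listed impurity (inf if none)."""
    return min((resolution(target, imp) for imp in impurities), default=math.inf)


def method_acceptable(target: Peak, impurities: Iterable[Peak], min_rs: float = 1.5) -> bool:
    """PreQC-style check: the target must be resolved from every major impurity."""
    return worst_resolution(target, impurities) > min_rs


# Hypothetical scouting result: target at 2.40 min, two major impurities
target = (2.40, 0.10)
impurities = [(2.60, 0.12), (3.00, 0.10)]
print(round(worst_resolution(target, impurities), 2))  # 1.82
print(method_acceptable(target, impurities))  # True
```

Taking the minimum over all major impurities means a single co-eluting impurity is enough to reject a scouting condition, which matches the intent of requiring resolution from major impurities rather than from the nearest peak alone.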

Step 3: Method Translation and Purification

- Procedure:

- Method Transfer: The optimal analytical method identified in PreQC is automatically scaled to a preparative method and transferred to the preparative HPLC or SFC system.

- Automated Purification: The preparative system, equipped with a suitable column, executes the purification. A fraction collector is triggered by the MS and/or UV signal to collect the eluent containing the target compound.

- Critical Parameters:

- Fraction Collector Setup: Configure the collector to collect either the entire peak (favoring yield) or only the "steady state" center of the peak (favoring purity) [20].

- Collection Vessel: Use containers appropriate for the subsequent solvent evaporation step.
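The source does not describe how the platform scales an analytical method to preparative conditions. A common approach is geometric scale-up, sketched below under the assumption that the analytical and preparative columns share the same stationary phase and particle size. Flow scales with cross-sectional area, load scales with column volume, and gradient time is adjusted so the gradient spans the same number of column volumes. The class and function names and the column dimensions are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Column:
    diameter_mm: float
    length_mm: float


@dataclass(frozen=True)
class Method:
    flow_ml_min: float
    load_mg: float
    gradient_min: float


def scale_to_prep(method: Method, analytical: Column, prep: Column) -> Method:
    """Geometric scale-up, assuming identical stationary phase and particle size.

    - Flow scales with cross-sectional area (constant linear velocity).
    - Load scales with column volume (area x length).
    - Gradient time keeps the same number of column volumes: t * F / V is constant.
    """
    area_ratio = (prep.diameter_mm / analytical.diameter_mm) ** 2
    volume_ratio = area_ratio * (prep.length_mm / analytical.length_mm)
    prep_flow = method.flow_ml_min * area_ratio
    prep_load = method.load_mg * volume_ratio
    prep_gradient = method.gradient_min * volume_ratio * (method.flow_ml_min / prep_flow)
    return Method(prep_flow, prep_load, prep_gradient)


# Hypothetical 4.6 x 50 mm scouting method transferred to a 19 x 50 mm prep column
prep = scale_to_prep(Method(1.5, 0.1, 3.0), Column(4.6, 50.0), Column(19.0, 50.0))
print(f"{prep.flow_ml_min:.1f} mL/min, {prep.load_mg:.2f} mg, {prep.gradient_min:.1f} min")
# 25.6 mL/min, 1.71 mg, 3.0 min
```

When only the diameter changes, the gradient time stays the same. Lengthening the column extends it proportionally.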

Step 4: Post-Purification Analysis and Quality Control (PostQC & FinalQC)

- Procedure:

- Analysis: An aliquot of the purified fraction is automatically analyzed by LC-MS or SFC-MS using generic gradients to assess purity.

- Orthogonal Confirmation: High-Throughput Nuclear Magnetic Resonance (HT-NMR) analysis is performed, aided by automated Python scripts for data collection and analysis, to confirm structure and assess residual solvent content [19].

- Critical Parameters: Purity is confirmed by multiple detection methods (DAD, MS, CAD) to ensure analytical orthogonality and accuracy.
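One simple way to combine detectors is to require each one to report the target above the purity threshold independently. The sketch below uses plain area-percent purity, which assumes comparable response factors for the target and its impurities. Validated QC methods may apply response corrections instead. The >95% threshold comes from the protocol objectives; the function names and peak areas are hypothetical.

```python
from typing import Dict, Sequence


def area_percent(target_area: float, other_areas: Sequence[float]) -> float:
    """Area-% purity of the target peak in one detector trace."""
    total = target_area + sum(other_areas)
    if total <= 0:
        raise ValueError("total peak area must be positive")
    return 100.0 * target_area / total


def passes_orthogonal_qc(traces: Dict[str, tuple], threshold: float = 95.0) -> bool:
    """Pass only if every detector independently reports purity above the threshold."""
    return all(area_percent(t, others) > threshold for t, others in traces.values())


# Hypothetical integrated areas (arbitrary units) for one purified fraction
traces = {
    "DAD": (96.0, [2.0, 2.0]),
    "MS": (98.0, [2.0]),
    "CAD": (93.0, [4.0, 3.0]),  # e.g. a non-chromophoric impurity more visible by CAD
}
print({k: area_percent(*v) for k, v in traces.items()})
print(passes_orthogonal_qc(traces))  # False: CAD purity is 93%
```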

Step 5: Sample Reformatting and Delivery

- Procedure:

- Solvent Evaporation: The solvent from the collected fractions is removed under reduced pressure using centrifugal evaporators.

- Redissolution: The dried compounds are automatically redissolved in a specified volume of DMSO.

- Delivery: The DMSO stock solutions are submitted to Compound Logistics, ready for replication and distribution to biological assays [19].

- Critical Parameters: Ensure accurate DMSO concentration for direct use in assays.
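The DMSO volume for a given stock concentration follows directly from the recovered mass and the molecular weight: mmol = mass (mg) / MW (g/mol), and volume (L) = mmol / concentration (mM). This is a minimal sketch; the 10 mM target and the example mass and MW are illustrative assumptions. The resulting concentration is only as accurate as the post-evaporation weighing.

```python
def dmso_volume_ul(mass_mg: float, mw_g_per_mol: float, target_mm: float) -> float:
    """Volume of DMSO (uL) needed to reach target_mm (mmol/L) from mass_mg of compound.

    mmol = mass_mg / MW;  volume (L) = mmol / target_mM;  uL = L * 1e6.
    """
    if min(mass_mg, mw_g_per_mol, target_mm) <= 0:
        raise ValueError("inputs must be positive")
    return mass_mg * 1e6 / (mw_g_per_mol * target_mm)


# Hypothetical: 2.3 mg recovered, MW 460.5 g/mol, 10 mM target stock
print(f"{dmso_volume_ul(2.3, 460.5, 10.0):.0f} uL")  # 499 uL
```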

System Workflow Visualization

The following diagram illustrates the logical flow and data integration of the automated high-throughput purification protocol.

Diagram 1: Automated High-Throughput Purification Workflow. This diagram outlines the key steps and decision points in the integrated purification protocol, highlighting the central role of the LIMS for data management.

The Scientist's Toolkit: Essential Research Reagent Solutions

The following table details key reagents, software, and consumables essential for operating the automated purification platform described in this protocol.

Table 2: Essential Research Reagent Solutions for Automated Purification

| Item Name | Type | Function / Application | Notes & Specifications |
|---|---|---|---|
| LC-MS Grade Acetonitrile & Methanol | Solvent | Mobile phase for RP-HPLC-MS analysis and purification. | Low UV cutoff and minimal ion suppression for MS detection [19]. |
| Ammonium Hydroxide & Formic Acid | Mobile Phase Additive | pH modifiers for mobile phases in RP-HPLC. | Used to manipulate selectivity and improve peak shape of ionizable compounds [19]. |
| SAPIO LIMS | Software | Centralized data management and workflow automation. | Customizable platform for tracking samples from submission to final delivery [19]. |
| Analytical Studio | Software | Automated processing of chromatographic data (DAD, MS, CAD). | Critical for high-throughput data review and decision-making in PreQC and PostQC [19]. |
| C18 and Silica Stationary Phases | Consumable | Chromatographic columns for analytical and preparative RP and SFC separations. | Different surface chemistries provide orthogonal selectivity for method screening [19]. |
| Deuterated Solvent (e.g., DMSO-d6) | Reagent | Solvent for HT-NMR analysis. | Used for final quality control and structural confirmation of purified compounds [19]. |

From Theory to Bench: Implementing Integrated Systems for Biologics, Glycans, and Small Molecules

The evolution of automated purification systems integrated with synthesis platforms has positioned advanced Tangential Flow Filtration (TFF) technologies as critical enablers for next-generation biomanufacturing. Single-pass tangential flow filtration (SPTFF) and single-use TFF systems represent transformative approaches that address key bottlenecks in downstream processing for monoclonal antibodies (mAbs) and viral vectors. Unlike traditional TFF systems that operate in recirculation mode, SPTFF concentrates and diafilters products in a single pass through the filtration module, significantly reducing processing time, hold-up volumes, and shear stress on sensitive biologics [23] [24]. Simultaneously, single-use TFF technologies eliminate cross-contamination risks and reduce cleaning validation requirements, making them particularly valuable in multi-product clinical manufacturing facilities [25].

The integration of these technologies within automated synthesis and purification platforms enables continuous processing, which reduces facility footprint by 50-70% while tightening quality variance between production lots [26]. This technical note provides detailed application protocols and performance data for implementing SPTFF and single-use TFF systems in the purification of mAbs and viral vectors, with specific emphasis on operational parameters that ensure optimal yield and product quality within automated biomanufacturing workflows.

Single-Pass TFF (SPTFF) Applications

SPTFF for Monoclonal Antibody Preconcentration

Experimental Protocol for mAb Clarified Cell Culture Fluid (CCF) Preconcentration

- Objective: Achieve 20x volume concentration factor of mAb CCF using SPTFF to reduce Protein A chromatography loading volume and processing time [27].

- Equipment and Materials:

- SPTFF system with Revaclear 400 hollow fiber polyethersulfone membranes (30 kDa MWCO, 1.8 m² surface area) [27]

- Masterflex L/S peristaltic pump with Tygon E-LFL tubing [27]

- Digital pressure gauges (Ashcroft) for pressure monitoring [27]

- Clarified cell culture fluid (CCF) containing mAb (4 g/L titer) [27]

- Phosphate-Buffered Saline (PBS), pH 7.5 for system flushing [27]

- Methodology:

- Flush the membrane module with 150 mM PBS at pH 7.5, ensuring removal of all air bubbles from hollow fibers and tubing [27].

- Conduct flux-stepping experiments in total recycle mode (permeate and retentate returned to feed reservoir) to determine critical flux for fouling:

- For long-term operation, configure SPTFF system for single-pass operation with target concentration factor of 20x [27].

- Maintain TMP below 30 kPa throughout the process to prevent membrane fouling [27].

- Operate system continuously for 24 hours, collecting samples of feed, retentate, and permeate at predetermined intervals for offline analysis [27].
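The two quantities monitored in this protocol can be computed directly from the pressure gauges and pump settings. TMP uses the usual average of feed and retentate pressures minus permeate pressure. In single-pass operation the retentate flow is the feed flow divided by the volume concentration factor. The gauge readings and the 20 mL/min feed flow below are hypothetical; the source does not state the feed flow.

```python
def transmembrane_pressure(p_feed: float, p_retentate: float, p_permeate: float) -> float:
    """TMP = mean of feed and retentate pressures minus permeate pressure (same units)."""
    return (p_feed + p_retentate) / 2.0 - p_permeate


def single_pass_flows(q_feed: float, vcf: float) -> tuple:
    """Split a feed flow for a target volume concentration factor (VCF = Qfeed / Qretentate)."""
    if vcf < 1:
        raise ValueError("VCF must be >= 1")
    q_retentate = q_feed / vcf
    return q_retentate, q_feed - q_retentate


# Hypothetical gauge readings (kPa) and feed flow (mL/min)
tmp = transmembrane_pressure(p_feed=42.0, p_retentate=8.0, p_permeate=0.0)
q_ret, q_perm = single_pass_flows(q_feed=20.0, vcf=20.0)
print(tmp, tmp < 30.0)  # 25.0 True
print(q_ret, q_perm)    # 1.0 19.0
```

At a 20x concentration factor, 95% of the feed leaves as permeate in one pass. This is why the membrane area and feed flow must be matched carefully to keep TMP within the stated limit.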

Table 1: Performance Metrics of SPTFF for mAb CCF Preconcentration

| Parameter | Performance Value | Conditions |
|---|---|---|
| Volume Concentration Factor | 20x | Single-pass operation [27] |
| Maximum mAb Concentration | 9 g/L | Preconcentrated product [27] |
| Operation Duration | 24 hours | Continuous operation [27] |
| Transmembrane Pressure | < 30 kPa | Stable operation without fouling [27] |
| Protein A Resin Savings | Up to 80% | Reduced chromatography loading volume [27] |

SPTFF for Adeno-Associated Virus (AAV) Purification

Experimental Protocol for AAV Clarified Cell Lysate (CCL) Concentration and Purification

- Objective: Concentrate and purify AAV clarified cell lysate while minimizing shear-induced aggregation and fragmentation of viral capsids [23].

- Equipment and Materials:

- SPTFF system with 300 kDa regenerated cellulose (RC) membranes [23]

- Positively-charged adsorptive filter for CCL preconditioning [23]

- AAV clarified cell lysate (produced in HEK293 cells via triple transfection) [23]

- Peristaltic pump with low-shear tubing [23]

- Host Cell Protein (HCP) ELISA and qPCR assays for analytics [23]

- Methodology:

- Precondition AAV CCL by passing through a positively-charged adsorptive filter to reduce foulants and increase critical flux for fouling [23].

- Identify optimal operating parameters by conducting flux-stepping experiments below the critical flux (Jfoul) to maintain stable operation [23].

- Configure SPTFF system with 300 kDa RC membranes for complete AAV retention and high HCP removal [23].

- Operate at permeate fluxes below the critical flux to minimize membrane fouling and maintain process stability [23].

- Monitor AAV recovery yield using qPCR assays for viral genomes [23].

- Quantify impurity removal through HCP ELISA and DNA quantification assays [23].
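One common heuristic for reading a flux-stepping experiment takes the critical flux as the highest flux step at which TMP stays essentially flat over time. The sketch below uses that TMP-drift criterion. The 0.1 kPa/min drift threshold, the step data, and the function names are hypothetical, and reference [23] may use a different criterion.

```python
from typing import List, Sequence, Tuple

# One flux step: (permeate flux in LMH, [(time_min, tmp_kpa), ...] recorded during that step)
FluxStep = Tuple[float, Sequence[Tuple[float, float]]]


def slope(points: Sequence[Tuple[float, float]]) -> float:
    """Ordinary least-squares slope of TMP versus time (kPa/min)."""
    n = len(points)
    if n < 2:
        raise ValueError("need at least two points per step")
    mean_t = sum(t for t, _ in points) / n
    mean_p = sum(p for _, p in points) / n
    sxx = sum((t - mean_t) ** 2 for t, _ in points)
    if sxx == 0:
        raise ValueError("time points must differ")
    sxy = sum((t - mean_t) * (p - mean_p) for t, p in points)
    return sxy / sxx


def critical_flux(steps: List[FluxStep], max_drift_kpa_min: float = 0.1) -> float:
    """Highest flux before the first step whose TMP drift exceeds the threshold (0.0 if none)."""
    stable = 0.0
    for flux, points in sorted(steps, key=lambda s: s[0]):
        if slope(points) > max_drift_kpa_min:
            break
        stable = flux
    return stable


# Hypothetical flux-stepping data: TMP starts to climb at 30 LMH
steps = [
    (10.0, [(0, 5.0), (5, 5.0), (10, 5.1)]),
    (20.0, [(0, 8.0), (5, 8.1), (10, 8.2)]),
    (30.0, [(0, 12.0), (5, 13.5), (10, 15.0)]),
    (40.0, [(0, 18.0), (5, 21.0), (10, 24.0)]),
]
print(critical_flux(steps))  # 20.0
```

Operation is then set below the returned value, as the methodology requires.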

Table 2: Performance Metrics of SPTFF for AAV CCL Concentration and Purification

| Parameter | Performance Value | Conditions |
|---|---|---|
| AAV Recovery Yield | Near-complete retention | 300 kDa RC membrane [23] |
| Host Cell Protein Removal | >90% | Operation below critical flux [23] |
| Shear Sensitivity | Significant reduction vs. batch TFF | Single pump pass [23] |
| Critical Flux Enhancement | Achievable with preconditioning | Adsorptive filter pretreatment [23] |

Single-Use TFF Applications

Single-Use TFF System Implementation

Protocol for Single-Use TFF in Clinical Manufacturing

- Objective: Implement single-use TFF system for intermediate ultrafiltration-diafiltration (UF-DF) of biologics in a cGMP multi-product facility [25].

- Equipment and Materials:

- Millipore SU Mobius FlexReady Solution for TFF model TF2 system [25]

- Single-use flow path (gamma-irradiated) with 5 m² membrane size [25]

- Retentate tank with working volume of 10-50 L [25]

- Ultrasonic permeate flow meter and retentate pressure control valve [25]

- Product-specific buffer solutions for diafiltration [25]

- Methodology:

- System Setup: Install pre-sterilized disposable flow path (approximately 60 minutes installation time) [25].

- Integrity Testing: Perform membrane integrity test using integrated system pumps (no auxiliary pump required) [25].

- Process Parameter Configuration:

- Diafiltration Operation:

- Product Recovery:
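The source does not specify diafiltration volumes. For sizing the buffer, a standard estimate applies to constant-volume diafiltration with a well-mixed retentate: the residual fraction of a permeating species falls as exp(-S * N), where S is its sieving coefficient and N is the number of diavolumes. The sketch below assumes S = 1 for buffer salts, a 99.9% exchange target, and a 10 L retentate at the low end of the tank's working range. All three are assumptions.

```python
import math


def residual_fraction(diavolumes: float, sieving: float = 1.0) -> float:
    """Constant-volume diafiltration: C/C0 = exp(-S * N) for a permeating species."""
    return math.exp(-sieving * diavolumes)


def diavolumes_needed(target_residual: float, sieving: float = 1.0) -> int:
    """Smallest whole number of diavolumes that reaches the target residual fraction."""
    if not 0 < target_residual < 1:
        raise ValueError("target_residual must be between 0 and 1")
    return math.ceil(-math.log(target_residual) / sieving)


# Hypothetical: 99.9% buffer exchange of a 10 L retentate
n = diavolumes_needed(0.001)
print(n, f"{residual_fraction(n):.2e}", f"{n * 10.0:.0f} L buffer")  # 7 9.12e-04 70 L buffer
```

The well-mixed assumption is why retentate mixing (see the mixing-efficiency row in Table 3) matters. Poor mixing makes real exchange slower than this estimate.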

Table 3: Performance Comparison: Single-Use vs. Stainless Steel TFF Systems

| Parameter | Single-Use TFF System | Stainless Steel TFF System |
|---|---|---|
| Setup and Installation Time | 60 minutes [25] | Several hours [25] |
| Pre-use Cleaning | Not required (gamma sterilized) [25] | CIP required (2-step process) [25] |
| Cross-contamination Risk | Minimal (disposable flow path) [25] | Requires validation [25] |
| Data Management | Automated electronic data capture [25] | Manual transcription to paper records [25] |
| Mixing Efficiency | Retentate diverter plate + magnetic agitator [25] | Retentate return flow distribution only [25] |
| Product Recovery | Direct buffer transfer to retentate tank [25] | Additional weighing step required [25] |

High-Throughput Automated TFF for Low-Volume Applications

Protocol for Automated TFF Using aµtoPulse System

- Objective: Implement high-throughput TFF processing for multiple low-volume samples (under 10 mL) with minimal hands-on time and sample loss [28].

- Equipment and Materials:

- Methodology:

- System Configuration:

- Process Setup:

- Operation:

- Recovery:

Integrated System Implementation and Process Control

Integration with Automated Synthesis Platforms

The successful integration of SPTFF and single-use TFF technologies within automated synthesis platforms requires careful attention to system interoperability and process control. Implementation of these technologies enables fully continuous biomanufacturing operations with significantly reduced footprint and operating costs [26]. For mAb production, integrating SPTFF with high-performance countercurrent membrane purification (HPCMP) has demonstrated a 60 ± 8% reduction in host cell proteins and more than 30-fold removal of DNA while maintaining a 94 ± 3% mAb step yield [27]. The buffer requirement for this integrated process was only 76 L/kg mAb, a significant reduction compared with traditional processes [27].
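Percentage reductions and fold-removal figures are easier to compare on the log reduction value (LRV) scale commonly used for impurity clearance. The short sketch below converts the figures reported for the SPTFF-HPCMP process using the standard definitions, fold = 1/(1 - fraction removed) and LRV = log10(fold). The function names are illustrative.

```python
import math


def fold_removal(fraction_removed: float) -> float:
    """Convert a fractional reduction (e.g. 0.60 for 60%) to a fold-removal factor."""
    if not 0 <= fraction_removed < 1:
        raise ValueError("fraction_removed must be in [0, 1)")
    return 1.0 / (1.0 - fraction_removed)


def log_reduction(fold: float) -> float:
    """Log reduction value (LRV) = log10(fold removal)."""
    return math.log10(fold)


# Values reported for the integrated SPTFF-HPCMP process
print(f"HCP: {fold_removal(0.60):.1f}-fold, LRV {log_reduction(fold_removal(0.60)):.2f}")
print(f"DNA: >30-fold, LRV >{log_reduction(30):.2f}")
```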

Machine Learning and Process Control in TFF

Advanced process control strategies incorporating machine learning are emerging as powerful tools for optimizing TFF operations in automated purification systems. These approaches enable real-time monitoring and control of critical parameters including transmembrane pressure, flux rates, and crossflow velocity [29]. Implementation of Process Analytical Technology (PAT) frameworks allows for continuous quality monitoring and control throughout the filtration process, reducing batch failure rates and improving product consistency [29]. Automated TFF systems with integrated analytics can shorten concentration tests by 70% and provide feedback to automated filter-control loops, enhancing process reliability and reducing operator intervention [26].
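The machine-learning strategies cited in [29] are not reproduced here. As a baseline for what an automated filter-control loop does, the following sketch closes a classical proportional-integral (PI) loop on TMP. The plant is a hypothetical first-order model in which TMP responds to retentate-valve closure u (0 to 1). The gains, time constant, and 25 kPa setpoint are illustrative assumptions, not values from the source.

```python
from dataclasses import dataclass


@dataclass
class PIController:
    kp: float
    ki: float
    out_min: float = 0.0
    out_max: float = 1.0
    integral: float = 0.0

    def update(self, setpoint: float, measured: float, dt: float) -> float:
        error = setpoint - measured
        candidate = self.integral + error * dt
        output = self.kp * error + self.ki * candidate
        # Anti-windup: keep the new integral only if the output is not saturated
        if self.out_min <= output <= self.out_max:
            self.integral = candidate
        return min(max(output, self.out_min), self.out_max)


def simulate_tmp(setpoint_kpa: float = 25.0, seconds: float = 100.0, dt: float = 0.5,
                 tau_s: float = 5.0, gain_kpa: float = 50.0) -> float:
    """Hypothetical first-order plant: dTMP/dt = (gain * u - TMP) / tau, u = valve closure."""
    ctrl = PIController(kp=0.01, ki=0.005)
    tmp = 0.0
    for _ in range(int(seconds / dt)):
        u = ctrl.update(setpoint_kpa, tmp, dt)
        tmp += (dt / tau_s) * (gain_kpa * u - tmp)  # explicit Euler step of the plant
    return tmp


print(f"TMP after 100 s: {simulate_tmp():.2f} kPa")
```

A data-driven controller would replace the fixed gains or the plant model, but the loop structure stays the same: measure, compare with setpoint, actuate, and guard against actuator saturation.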

The Scientist's Toolkit: Essential Research Reagents and Materials

Table 4: Key Research Reagent Solutions for TFF Applications

| Item | Function/Application | Specifications |
|---|---|---|
| 300 kDa Regenerated Cellulose (RC) Membranes | Optimal for AAV purification with complete capsid retention and high HCP removal [23] | 300 kDa molecular weight cutoff, low protein binding [23] |
| Polyethersulfone (PES) Membranes | General purpose TFF for mAbs and proteins; low protein-binding with superior flow rates [30] | Various MWCOs (10-500 kDa); high chemical stability [30] |
| Revaclear 400 Hollow Fiber Modules | SPTFF preconcentration of mAb CCF [27] | 30 kDa MWCO, 1.8 m² surface area, PES material [27] |
| Cytiva T-series Delta RC Cassettes | Processing of mRNA, saRNA, and LNP-encapsulated RNA [30] | 100 kDa MWCO, regenerated cellulose, high step yields (80-94%) [30] |
| Positively-Charged Adsorptive Filters | Preconditioning of AAV clarified cell lysate to reduce foulants [23] | Increases critical flux for SPTFF operation [23] |
| Single-Use Flow Path Assemblies | Disposable fluid paths for single-use TFF systems; eliminate cleaning validation [25] | Gamma-irradiated, pre-sterilized, with integrated pressure sensors [25] |
| aµtoPulse Filter Chips | High-throughput, low-volume TFF processing [28] | Dual-membrane design (12 cm² + 3.5 cm²), 180 µL hold-up volume [28] |

The implementation of single-pass and single-use TFF technologies represents a significant advancement in biologics purification, particularly when integrated within automated synthesis platforms. SPTFF provides distinct advantages for both mAb and viral vector processing by reducing shear stress, minimizing hold-up volumes, and enabling continuous operation. Single-use TFF systems offer compelling benefits in multi-product facilities through elimination of cross-contamination risks and reduction of cleaning validation requirements. The protocols and performance data presented in this application note provide researchers and process development scientists with practical methodologies for implementing these technologies in both development and clinical manufacturing environments. As the biopharmaceutical industry continues to evolve toward more integrated and continuous manufacturing processes, these advanced TFF technologies will play an increasingly critical role in enabling efficient, scalable, and robust purification of therapeutic biologics.

The synthesis of complex carbohydrates, or glycans, represents one of the most significant challenges in modern synthetic chemistry due to their structural complexity and the critical need for precise stereochemical control. Automated glycan assembly (AGA) has emerged as a transformative approach to address the bottlenecks associated with traditional manual synthesis, which is labor-intensive, time-consuming, and requires specialized expertise. High-performance liquid chromatography (HPLC)-based platforms have been developed to automate both the synthesis and purification of carbohydrate building blocks and oligosaccharides, significantly accelerating the production of these biologically essential molecules [31] [32] [33].

The demand for robust methods to produce both natural glycans and their mimetics has increased substantially with the improved understanding of glycan functions in health and disease. Carbohydrates play crucial roles in numerous biological processes, including cell-cell recognition, immune response, and pathogen invasion. Despite their importance, complex glycans remain difficult to obtain from natural sources, making chemical synthesis a necessity for advancing glycoscience research and therapeutic development [32] [33]. HPLC-based automation (HPLC-A) platforms address this challenge by providing a reproducible, scalable, and transferable methodology that can be operated even by non-specialists, thereby democratizing access to complex carbohydrate molecules [32].

HPLC-A Platform Architecture and Components

System Configuration and Operational Principles

The modular character of HPLC instrumentation allows specialized attachments and components to be added through a plug-in approach, with operating modes set in software. Modern HPLC-A platforms comprise two main circuits: the reaction circuit (upstream operations) and the separation circuit (downstream operations), both operated by standard HPLC software [31] [33]. This integrated architecture enables a fully automated continuum from reagent delivery to purified compound collection.

The reaction circuit is typically equipped with a quaternary HPLC pump, an autosampler programmed to deliver all necessary reagents, and a reactor with temperature control and stirring capabilities. Recent enhanced setups incorporate commercial jacketed reactors, real-time in-line temperature control/detection systems, and precision reaction mixture transfer systems [31]. The separation circuit features a quaternary pump, disposable flash chromatography cartridges, a UV detector, and an automated fraction collector. A programmable logic controller (PLC) often manages the transfer of the crude reaction mixture from the reactor to the chromatography column, while sensors and solenoid valves control or interrupt flow during this critical transfer process [33].
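The source states that a PLC and a set of sensors and solenoid valves manage the reactor-to-column transfer, but it does not give the control logic. The sketch below shows one plausible interlock, written as a scan-cycle state machine in Python for readability. A real PLC would typically run ladder logic or structured text. The states, the outlet liquid sensor, and the 0.5 bar pressure-stability tolerance are hypothetical.

```python
from enum import Enum
from typing import Sequence, Tuple


class Transfer(Enum):
    WAITING = "waiting"            # reaction running and/or column still equilibrating
    TRANSFERRING = "transferring"  # solenoid valve open, mixture flowing to the column
    DONE = "done"


def pressure_stable(readings_bar: Sequence[float], tolerance_bar: float = 0.5) -> bool:
    """Treat the column as equilibrated when recent pressure readings vary by <= tolerance."""
    return len(readings_bar) >= 2 and max(readings_bar) - min(readings_bar) <= tolerance_bar


def step(state: Transfer, reaction_done: bool, column_pressures: Sequence[float],
         liquid_at_outlet: bool) -> Tuple[Transfer, bool]:
    """One scan of the interlock. Returns (next_state, solenoid_valve_open)."""
    if state is Transfer.WAITING:
        if reaction_done and pressure_stable(column_pressures):
            return Transfer.TRANSFERRING, True
        return Transfer.WAITING, False
    if state is Transfer.TRANSFERRING:
        if not liquid_at_outlet:  # hypothetical line sensor: reactor has emptied
            return Transfer.DONE, False
        return Transfer.TRANSFERRING, True
    return Transfer.DONE, False


state = Transfer.WAITING
scans = [
    (False, [10.0, 10.1], True),  # reaction still running: valve stays closed
    (True, [10.0, 11.2], True),   # column pressure not yet stable: keep waiting
    (True, [10.0, 10.2], True),   # conditions met: open valve, start transfer
    (True, [10.0, 10.2], False),  # outlet dry: close valve
]
for done, pressures, liquid in scans:
    state, valve_open = step(state, done, pressures, liquid)
    print(state.value, valve_open)
```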

Evolution of HPLC-A Platform Generations

HPLC-A technology has evolved through several generations, each offering enhanced automation capabilities:

- Generation A: Provided operational convenience and faster reaction times but remained largely manual in operation [32].

- Generation B: Incorporated an autosampler for delivering glycosylation promoters, reducing manual intervention but still requiring operator assistance for mode switching [32].

- Generation C: Implemented a standard two-way split valve enabling complete "press-of-a-button" automation for solid-phase synthesis [32].

- Current Systems: Feature four-way split valves, automated fraction collectors, and temperature-controlled reactors, enabling multiple sequential syntheses with single-button initiation [32] [33].

Table 1: Evolution of HPLC-A Platform Capabilities

| Generation | Key Features | Automation Level | Primary Applications |
|---|---|---|---|
| Generation A | Faster reaction times, UV monitoring | Semi-manual | Solid-phase glycan synthesis |
| Generation B | Autosampler for promoter delivery | Semi-manual | Solid-phase glycan synthesis |
| Generation C | Two-way split valve | Fully automated | Solid-phase glycan synthesis |
| Current Systems | Four-way split valve, fraction collector, temperature control | Fully automated | Solution-phase synthesis, building block preparation |

Workflow Visualization

The following diagram illustrates the integrated workflow of a modern HPLC-A platform for automated synthesis and purification:

Diagram Title: HPLC-A Platform Workflow

Applications and Performance of HPLC-A Platforms

Synthesis of Carbohydrate Building Blocks

HPLC-A platforms have demonstrated exceptional utility in performing various protecting group manipulations essential for preparing carbohydrate building blocks. These automated systems successfully conduct reactions including silylation, benzylation, benzoylation, and picoloylation under temperature-controlled conditions [31] [33]. The platform's capability to handle diverse chemical transformations streamlines the production of selectively protected monosaccharide derivatives required for oligosaccharide assembly.

In a representative application, the fully automated silylation of a 6-OH derivative using TBDMSCl in DCM, with imidazole in DMF and triethylamine as base, proceeded for 5 hours at room temperature. The subsequent automated purification and fraction collection yielded the 6-O-TBDMS derivative in 81% yield [33]. Similarly, benzoylation and picoloylation reactions provided corresponding products in commendable yields, though benzylation resulted in a lower yield (36%) due to the formation of multiple side products [33]. These results highlight the platform's effectiveness in automating crucial protecting group strategies while identifying areas for further optimization.

Glycosylation and Oligosaccharide Synthesis

The core application of HPLC-A technology lies in the formation of glycosidic bonds to construct oligosaccharides. The platform has been successfully applied to both solid-phase and solution-phase glycosylation strategies. In solid-phase approaches, resin-immobilized acceptors are packed into columns integrated into the HPLC system, with glycosylations performed by recirculating premixed donor and promoter solutions [32] [33]. Solution-phase approaches offer complementary advantages, particularly for accessing multiple targets in parallel.

Optimization studies have revealed that direct translation from manual to automated glycosylation conditions may require parameter adjustments. For instance, initial automated glycosidation of a thioglucoside donor with a primary glycosyl acceptor using NIS/TfOH activation provided the target disaccharide in only 25% yield compared to 93% yield achieved manually [32]. Subsequent optimization through increased TfOH equivalents (0.5 equiv) and extended recirculation time (60 minutes) significantly improved the yield to 84% [32]. This demonstrates the importance of refining reaction parameters specifically for automated environments rather than assuming direct transferability of manual protocols.

Table 2: Performance of HPLC-A Platform in Diverse Applications

| Reaction Type | Specific Transformation | Key Reaction Conditions | Reported Yield | Reference |
|---|---|---|---|---|
| Protecting Group Manipulation | Silylation (6-OH) | TBDMSCl, imidazole, TEA, DCM, 5 h, rt | 81% | [33] |
| Protecting Group Manipulation | Benzoylation | BzCl, pyridine, DCM, rt | Commendable yield | [33] |
| Glycosylation | Disaccharide formation (Thioglycoside) | NIS (2.0 eq), TfOH (0.5 eq), DCM, 60 min | 84% | [32] |
| Glycosylation | Disaccharide formation (Benzoylated acceptor) | Donor (3.0 eq), NIS (2.0 eq), TfOH (0.5 eq) | 87% | [32] |
| Glycosylation | Disaccharide formation (Trichloroacetimidate) | TMSOTf (0.5 eq), DCM, 60 min | 88% | [32] |

Integration with Real-Time Monitoring and Advanced Analytics

Recent advancements in HPLC-A platforms have incorporated real-time monitoring using in-line analytical techniques such as HPLC, Raman spectroscopy, and NMR, which enable closed-loop optimization of reactions [34]. The integration of low-cost sensors for color, temperature, conductivity, and pH provides continuous process monitoring, allowing for dynamic procedure execution and self-correction based on real-time feedback [34].

Vision-based condition monitoring systems further enhance platform autonomy by detecting critical hardware failures, such as syringe breakage, using multi-scale template matching and structural similarity analysis [34]. This comprehensive sensor integration creates a "process fingerprint" that can be used for subsequent validation of reproduced procedures, significantly enhancing reproducibility and reliability [34].
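Reference [34] combines multi-scale template matching with structural similarity analysis. The sketch below covers only the comparison step. It uses a simplified global SSIM computed once over the whole image, whereas the standard metric averages over local windows, and it operates on flattened grayscale pixel lists. The 0.8 alarm threshold and the example images are hypothetical.

```python
from typing import Sequence


def global_ssim(x: Sequence[float], y: Sequence[float], data_range: float = 255.0) -> float:
    """SSIM evaluated once over the whole image (no sliding window).

    SSIM = ((2*mx*my + C1) * (2*cov + C2)) / ((mx^2 + my^2 + C1) * (vx + vy + C2)),
    with the usual constants C1 = (0.01*L)^2 and C2 = (0.03*L)^2.
    """
    if len(x) != len(y) or not x:
        raise ValueError("images must be non-empty and the same size")
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    vx = sum((a - mx) ** 2 for a in x) / n
    vy = sum((b - my) ** 2 for b in y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y)) / n
    c1, c2 = (0.01 * data_range) ** 2, (0.03 * data_range) ** 2
    return ((2 * mx * my + c1) * (2 * cov + c2)) / ((mx ** 2 + my ** 2 + c1) * (vx + vy + c2))


def hardware_alarm(reference: Sequence[float], frame: Sequence[float], threshold: float = 0.8) -> bool:
    """Flag a possible failure (e.g. broken syringe) when the frame departs from the reference."""
    return global_ssim(reference, frame) < threshold


# Hypothetical 16-pixel "images": an intensity ramp and a frame with half the ramp missing
reference = [float(v) for v in range(0, 256, 16)]
damaged = reference[:8] + [0.0] * 8
print(hardware_alarm(reference, reference))  # False
print(hardware_alarm(reference, damaged))    # True
```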

Experimental Protocols

General Protocol for Automated Reactions

The following standardized protocol is adapted from multiple sources detailing HPLC-A operations [31] [32] [33]:

System Preparation:

- Add activated molecular sieves (3Å) to the DCM solvent bottle feeding the quaternary pump.

- Flush the autosampler and delivery capillary tubing with toluene followed by dry DCM.

- Wash the reactor sequentially with acetone and dry DCM.

Reagent Preparation:

- Prepare solutions of all reactants and reagents at specified concentrations in appropriate solvents.

- Load solutions into designated vials in the autosampler tray according to the programmed sequence.

Column Equilibration:

- Engage Pump 2 to pass appropriate solvents through the chromatography column for equilibration.

- Verify system pressure stability before initiating the reaction sequence.

Reaction Execution:

- Initiate the automated sequence via the standard HPLC software interface.

- The autosampler delivers specified volumes of reagents to the reactor in the programmed sequence.

- The reaction proceeds with stirring under temperature control for the predetermined duration.

Reaction Quenching and Transfer:

- When applicable, deliver quenching reagents via the autosampler.

- Open the solenoid valve at the reactor outlet to initiate transfer of the reaction mixture to the chromatography column.

- Rinse the reactor with small amounts of toluene (delivered via Pump 1) and transfer rinses to the column.

Chromatographic Purification:

- Execute the predefined gradient elution method using Pump 2.

- Monitor eluent via UV detector set at appropriate wavelengths for the target compounds.

- Collect fractions automatically based on UV signal thresholds or timed intervals.

Post-Processing:

- Manually combine fractions containing pure product based on TLC or analytical HPLC analysis.

- Concentrate combined fractions under reduced pressure to obtain purified compounds.
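The threshold-based collection in the Chromatographic Purification step amounts to opening a fraction when the UV signal rises above a level and closing it when the signal falls back below that level. The sketch below implements that simple level trigger. It assumes no slope detection and no correction for the delay volume between detector and collector, both of which real fraction-collector software typically handles. The trace values are hypothetical.

```python
from typing import List, Sequence, Tuple


def threshold_fractions(trace: Sequence[Tuple[float, float]],
                        threshold: float) -> List[Tuple[float, float]]:
    """Return (start, end) times of contiguous regions where the UV signal >= threshold."""
    windows: List[Tuple[float, float]] = []
    start = None
    last_t = None
    for t, absorbance in trace:
        if absorbance >= threshold and start is None:
            start = t                        # signal rose above threshold: open a fraction
        elif absorbance < threshold and start is not None:
            windows.append((start, last_t))  # signal fell below: close at previous sample
            start = None
        last_t = t
    if start is not None:                    # peak still eluting at end of run
        windows.append((start, last_t))
    return windows


# Hypothetical 254 nm trace: (time in min, absorbance in AU)
trace = [(0.0, 0.01), (0.1, 0.02), (0.2, 0.30), (0.3, 0.80), (0.4, 0.25),
         (0.5, 0.02), (0.6, 0.50), (0.7, 0.03)]
print(threshold_fractions(trace, threshold=0.10))  # [(0.2, 0.4), (0.6, 0.6)]
```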

Protocol for Automated Glycosylation

This specific protocol for solution-phase glycosylation adapts conditions from Demchenko et al. [32]:

Donor and Acceptor Preparation:

- Prepare solutions of thioglycoside donor (e.g., 1, 3.0 equiv) and glycosyl acceptor (e.g., 4, 1.0 equiv) in anhydrous DCM.

- Prepare promoter solutions: NIS (2.0 equiv) and TfOH (0.5 equiv) in anhydrous DCM.

System Setup:

- Load the Omnifit column with activated 3Å molecular sieves.

- Add activated molecular sieves to the DCM solvent bottle feeding Pump 1.

- Purge the entire system with dry nitrogen to maintain anhydrous conditions.

Reaction Execution:

- Program the autosampler to deliver donor, acceptor, and promoter solutions to the reactor.

- Set the recirculation mode for 60 minutes via Pump line D.

- Maintain the system at room temperature throughout the reaction.

Workup and Purification:

- Transfer the reaction mixture to the chromatography column using the peristaltic pump transfer system.

- Purify using a gradient elution from hexane to ethyl acetate.

- Collect fractions automatically based on UV detection at 254 nm.

Product Isolation:

- Combine fractions containing the pure disaccharide product.

- Concentrate under reduced pressure to obtain the product as a solid or syrup.

- Confirm structure and purity by 1H NMR and TLC analysis.
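Because the autosampler delivers reagents as solutions, the equivalents in this protocol must be converted into draw volumes. The sketch below does that conversion, treating the acceptor as the limiting reagent (1.0 equiv) and using the stated ratios: donor 3.0, NIS 2.0, and TfOH 0.5 equiv. The 0.05 mmol scale and the stock concentrations are hypothetical placeholders.

```python
from typing import Dict

# Equivalents from the glycosylation protocol (acceptor is the limiting reagent)
EQUIV = {"acceptor": 1.0, "donor": 3.0, "NIS": 2.0, "TfOH": 0.5}


def reagent_plan(acceptor_mmol: float, stock_m: Dict[str, float]) -> Dict[str, Dict[str, float]]:
    """mmol of each component and the stock-solution volume (uL) the autosampler must draw.

    volume (mL) = mmol / concentration (mol/L, i.e. mmol/mL); x 1000 for uL.
    """
    plan = {}
    for name, eq in EQUIV.items():
        mmol = acceptor_mmol * eq
        plan[name] = {"mmol": mmol, "volume_ul": 1000.0 * mmol / stock_m[name]}
    return plan


# Hypothetical scale (0.05 mmol acceptor) and stock concentrations (M) in anhydrous DCM
stocks = {"acceptor": 0.10, "donor": 0.30, "NIS": 0.20, "TfOH": 0.05}
for name, row in reagent_plan(0.05, stocks).items():
    print(f"{name}: {row['mmol']:.3f} mmol, {row['volume_ul']:.0f} uL")
```

Keeping the equivalents in one table makes parameter changes during optimization easy to apply consistently, such as raising TfOH to 0.5 equiv as described above.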

The Scientist's Toolkit: Essential Research Reagents and Materials

Table 3: Essential Research Reagents and Materials for HPLC-A Platforms

| Item | Specification | Function/Application | Notes |
|---|---|---|---|
| Solvents | Anhydrous DCM, DMF, toluene | Reaction medium, washing | Distilled from CaH₂ and stored over 3Å molecular sieves [31] |
| Molecular Sieves | 3Å, activated beads | Water scavenging for glycosylation | Added to solvent bottles and integrated columns [32] |
| Glycosyl Donors | Thioglycosides, trichloroacetimidates | Glycosyl donors for chain elongation | Require promoters for activation [32] |
| Promoters | NIS/TfOH, TMSOTf | Activating agents for glycosylation | Delivered via autosampler in solution [32] |
| Protecting Group Reagents | TBDMSCl, BzCl, BnBr | Hydroxyl group protection | Enable selective functionalization [33] |
| Base Reagents | Imidazole, triethylamine, pyridine | Acid scavengers in protection reactions | Critical for silylation, acylation reactions [33] |
| Chromatography Media | SilicaFlash R60, 20–45 μm | Stationary phase for purification | Packed in disposable cartridges [31] |
| Purification Solvents | ACS-grade hexane, ethyl acetate | Mobile phase for chromatography | Used without further purification [31] |

HPLC-based automated platforms represent a significant advancement in carbohydrate synthesis technology, effectively addressing the historical challenges associated with glycan production. By integrating synthesis and purification in a fully automated system, HPLC-A platforms enhance reproducibility, reduce manual intervention, and make complex carbohydrate synthesis accessible to non-specialists. The modular nature of these systems allows for continuous implementation of new components and methodologies through a plug-in approach, ensuring the technology remains at the forefront of glycoscience innovation [31] [33].

Future developments in HPLC-A technology will likely focus on tighter integration with real-time analytical monitoring, including wider use of in-line NMR and MS detection [34]. Machine learning algorithms for reaction optimization and more sophisticated feedback control systems will further increase the autonomy and efficiency of these platforms [34] [35]. The glycobiology market is projected to reach USD 2,497.07 million by 2033, and as it grows, automated synthesis platforms will play an increasingly important role in accelerating therapeutic discovery and development [36]. With continued refinement and adoption, HPLC-A technology promises to open new possibilities in glycoscience research and glycan-based therapeutic development.

The integration of automated synthesis, purification, and testing platforms is revolutionizing the design and optimization of biologics and small molecules. This article presents detailed application notes and protocols for several state-of-the-art, closed-loop workflows that enable rapid iteration from genetic or chemical design to functional protein or compound. Framed within broader research on automated purification systems integrated with synthesis platforms, these case studies highlight how coupling machine learning with high-throughput experimental feedback accelerates discovery cycles for researchers and drug development professionals.

Traditional biological and chemical discovery relies on linear, often disjointed, Design-Build-Test-Learn (DBTL) cycles. Recent work advocates a shift towards fully integrated, closed-loop systems [37] [38]. These platforms consolidate synthesis, purification, and assay into seamless workflows, reducing cycle times from weeks to days or even hours. A key enabler is moving "Learning" to the start of the cycle (LDBT): machine learning models trained on large datasets guide the initial design, enabling more predictive and efficient exploration of sequence or chemical space [37]. The following case studies illustrate this shift with actionable protocols and quantitative benchmarks.

Case Study 1: Protein CREATE – A Computational and Experimental Pipeline for De Novo Binders

Application Note: Protein CREATE (Computational Redesign via an Experiment-Augmented Training Engine) is an integrated pipeline for the closed-loop design of de novo protein binders [39]. It addresses the critical gap between in silico sequence generation and experimental characterization at scale.

Experimental Protocol: Phage Display Binding-by-Sequencing Assay

- Design: Generate initial protein binder sequences using context-dependent inverse folding with models like ESM-IF, given a target receptor structure [39].

- Build (DNA to Display):

  - Clone designed DNA libraries into a phage display vector.

  - Propagate phage to display the encoded protein variants on the capsid.

- Test (Quantitative Binding Selection):

  - Incubate the phage library with target-immobilized beads.

  - Perform wash steps to remove non-binders.

  - Extract genomic DNA from bound phage.

  - Label genomes with a Unique Molecular Identifier (UMI) barcode for accurate variant counting.

  - Perform next-generation sequencing (NGS).

- Learn & Iterate:

  - Calculate enrichment scores for each variant by comparing NGS counts in the bound fraction versus the initial library.

  - Use this quantitative binding data (from thousands of variants in parallel) to retrain or score the generative protein language model.

  - Initiate a new design round with the updated model.
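The UMI counting and enrichment calculation in the Learn step can be sketched as follows. A log2 ratio of variant frequencies with a small pseudocount is one common convention; Protein CREATE's exact scoring may differ [39]. The sketch also assumes that reads have already been demultiplexed into (variant, UMI) pairs. The function names and the pseudocount value are hypothetical.

```python
import math
from collections import defaultdict


def umi_counts(reads):
    """reads: iterable of (variant_id, umi) pairs.

    Counts distinct UMIs per variant, so PCR duplicates of one tagged
    template are counted once.
    """
    seen = defaultdict(set)
    for variant, umi in reads:
        seen[variant].add(umi)
    return {v: len(u) for v, u in seen.items()}


def log2_enrichment(bound, library, pseudocount=0.5):
    """log2(frequency in bound fraction / frequency in input library).

    The pseudocount keeps variants absent from one population finite.
    """
    variants = set(bound) | set(library)
    n_bound = sum(bound.values()) + pseudocount * len(variants)
    n_lib = sum(library.values()) + pseudocount * len(variants)
    scores = {}
    for v in variants:
        f_bound = (bound.get(v, 0) + pseudocount) / n_bound
        f_lib = (library.get(v, 0) + pseudocount) / n_lib
        scores[v] = math.log2(f_bound / f_lib)
    return scores
```

Positive scores mark variants over-represented after selection. Ranking variants by score gives the quantitative binding signal used to retrain or score the model.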

Key Quantitative Data:

- Throughput: Capable of assaying thousands of designed binders in parallel [39].

- Cycle Time: Quantitative binding data collected within 3 days [39].

- Validation: Demonstrated ~13-fold enrichment difference between a strong (KD=18.8 nM) and weak (KD=260 nM) binder, correlating with SPR data [39].

- Success: Identified novel IL-7Rα binders with <60% sequence identity to the parent and dissociation constants within two orders of magnitude of the parent's [39].

Case Study 2: Automated Synthesis–Purification–Bioassay for Small Molecules

Application Note: This platform represents a fully automated, integrated system for the batch-supported synthesis, purification, quantification, and biochemical testing of small-molecule libraries, validating its utility in drug discovery campaigns [40].

Experimental Protocol: Integrated Library Synthesis and Screening

- Design: Plan the library around acylation or Buchwald coupling reactions using commercially available starting cores.

- Build & Purify (Automated Synthesis):

  - Reagents and catalysts are dispensed automatically into reaction vials on a platform such as the Chemspeed SWAVE synthesizer.

  - Reactions proceed under controlled temperature and atmosphere.

  - Reaction mixtures are automatically transferred to an in-line preparative HPLC-MS system.

  - MS-triggered fraction collection isolates the desired products.

- Test & Quantify (Integrated Analysis):

  - A Charged Aerosol Detector (CAD) analyzes an aliquot of each HPLC fraction to determine compound concentration (±20% accuracy vs. NMR) [40].

  - Based on the CAD concentration, a precise volume of each fraction is dispensed into a 96-well assay plate and dried.

  - Compounds are redissolved in DMSO to a precise stock concentration (e.g., 10 mM) using a liquid handler.

  - An 11-point, 3-fold serial dilution is prepared in a 384-well plate.

  - Compounds are transferred via pin tool into a biochemical assay (e.g., a TR-FRET binding assay) and read on a plate reader.

- Learn: Bioassay data (IC50/EC50) are processed automatically and compared with data for conventionally synthesized material to inform the next design cycle.
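The arithmetic behind the CAD-driven dosing steps can be sketched briefly. The sketch assumes that the CAD result is reported as a mass concentration (µg/mL) and that the molar mass of each product is known. The target amount, the maximum dispense volume and all function names are hypothetical parameters, not settings from [40]. Note that the ±20% CAD accuracy carries straight through into the DMSO stock concentration.

```python
def cad_to_mM(conc_ug_per_mL, mw_g_per_mol):
    """Convert a CAD mass concentration to molarity: (ug/mL) / (g/mol) = mM."""
    return conc_ug_per_mL / mw_g_per_mol


def plan_dispense(frac_conc_mM, target_nmol, max_dispense_uL, stock_mM=10.0):
    """Volume of fraction to dry down and DMSO volume to redissolve it.

    1 mM equals 1 nmol/uL, so volumes follow directly from nmol / mM.
    If the target needs more than `max_dispense_uL`, dispense the maximum
    and shrink the DMSO volume so the stock is still `stock_mM`.
    """
    dispense_uL = min(target_nmol / frac_conc_mM, max_dispense_uL)
    nmol = dispense_uL * frac_conc_mM
    dmso_uL = nmol / stock_mM
    return dispense_uL, nmol, dmso_uL


def serial_dilution_mM(top_mM=10.0, points=11, fold=3.0):
    """Concentrations of an n-point serial dilution starting at top_mM."""
    return [top_mM / fold**i for i in range(points)]
```

For example, a fraction measured at 250 µg/mL of a 500 g/mol product is 0.5 mM. Dispensing 200 µL of it and redissolving the residue in 10 µL of DMSO gives a 10 mM stock. From that stock, the 11-point, 3-fold series ends near 0.17 µM.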

Key Quantitative Data:

| Metric | Amide Library (22 members) | Aromatic Amine Library (33 members) |
|---|---|---|
| Total Platform Time | 15 hours | 30 hours |
| Reaction Yield Range | 2% – 71% | 3% – 92% |
| Compounds Passing Purity (>90%) | 19 of 22 | 29 of 33 |
| Data Correlation | Excellent agreement with conventional methods [40] | Excellent agreement with conventional methods [40] |
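Two derived figures help when comparing the libraries: amortized time per compound and purity pass rate. The sketch below only recomputes them from the values in the table above. Because the platform processes compounds in parallel, the per-compound time is an average over the whole run, not the time any single compound spends on the system.

```python
# Values taken from the table above (Case Study 2, [40]).
libraries = {
    "amide": {"members": 22, "hours": 15, "passed": 19},
    "aromatic_amine": {"members": 33, "hours": 30, "passed": 29},
}


def summarize(lib):
    """Amortized minutes per compound and % of compounds passing purity."""
    minutes_per_compound = lib["hours"] * 60 / lib["members"]
    pass_rate_pct = 100 * lib["passed"] / lib["members"]
    return round(minutes_per_compound, 1), round(pass_rate_pct, 1)


for name, lib in libraries.items():
    print(name, summarize(lib))
```

This works out to about 41 min per compound with an 86.4% pass rate for the amide library, and about 55 min per compound with an 87.9% pass rate for the aromatic amine library.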

Case Study 3: Automated Cell-Free Workflow for Transcription Factor Engineering

Application Note: This protocol details a high-throughput, automated cell-free gene expression (CFE) workflow optimized for the Echo Acoustic Liquid Handler, enabling ultra-rapid testing of protein variant libraries directly from DNA [41].

Experimental Protocol: High-Throughput CFE Screening

- Design: Generate a library of transcription factor (TF) variants (e.g., via site-saturation mutagenesis).

- Build (DNA Template Preparation): Use PCR to generate linear expression templates (LETs) encoding TF variants, bypassing cloning.

- Test (Automated Reaction Assembly & Readout):

  - Reagent Optimization: Assign each fluid an Echo fluid type before transfer (e.g., the "B2" preset for DNA in PCR buffer) to ensure precise nanoliter transfers [41].

  - Assembly: The Echo transfers 100 nL of DNA LET and 900 nL of CFE reaction mix (cell extract, energy sources, salts) into a 384-well destination plate to form a 1 μL reaction.

  - Induction: Add a panel of ligand conditions (e.g., 0–100 μM metal ions) to test TF sensor sensitivity and selectivity.

  - Incubation & Readout: Incubate the plate to express the TF and its output fluorescent reporter (e.g., GFP), then measure fluorescence.

- Learn: Calculate fold-change activation for each variant across ligand conditions to identify improved biosensors.
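The assembly step is typically driven by a CSV pick list that tells the acoustic handler which source well to fire into which destination well. The sketch below generates one 100 nL LET plus 900 nL mix transfer pair per reaction. Several details are assumptions: the column headers, plate names, 2.5 nL droplet size and 40 reactions per mix well are illustrative, so check them against the pick-list template and instrument specifications for your Echo model. The function names are also hypothetical.

```python
import csv
import io
import math
import string

DROPLET_NL = 2.5  # ASSUMED droplet size; confirm for your Echo model


def wells_384():
    """Destination wells in row-major order: A1..A24, B1..B24, ..., P24."""
    return [f"{r}{c}" for r in string.ascii_uppercase[:16] for c in range(1, 25)]


def build_picklist(dna_wells, mix_wells, reactions_per_mix_well=40,
                   dna_nl=100, mix_nl=900):
    """CSV pick list: each reaction gets one LET well plus CFE mix (1 uL total)."""
    for vol in (dna_nl, mix_nl):
        if not math.isclose(vol / DROPLET_NL, round(vol / DROPLET_NL)):
            raise ValueError(f"{vol} nL is not a whole number of droplets")
    dest = wells_384()
    n = len(dna_wells)
    if n > len(dest):
        raise ValueError("more reactions than destination wells")
    if math.ceil(n / reactions_per_mix_well) > len(mix_wells):
        raise ValueError("not enough CFE mix source wells")
    buf = io.StringIO()
    writer = csv.writer(buf)
    writer.writerow(["Source Plate Name", "Source Well", "Destination Plate Name",
                     "Destination Well", "Transfer Volume"])
    for i, (src, dst) in enumerate(zip(dna_wells, dest)):
        # Move to the next mix well once the current one has served its quota.
        mix_src = mix_wells[i // reactions_per_mix_well]
        writer.writerow(["DNA_Source", src, "CFE_Reaction", dst, dna_nl])
        writer.writerow(["Mix_Source", mix_src, "CFE_Reaction", dst, mix_nl])
    return buf.getvalue()
```

Capping the number of reactions drawn from each mix well keeps every source well within its working volume: at the assumed 40 reactions, each well supplies 36 µL of mix. The droplet check catches volumes the instrument cannot dispense exactly.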

Key Quantitative Data:

- Throughput: 3,682 unique CFE reactions assayed in <48 hours [41].

- Miniaturization: 1 μL was identified as the optimal reliable reaction volume (Z'-factor > 0.5) [41].

- Precision: Automated dispensing showed linear correlation (R²=0.99) with manual pipetting across volumes [41].
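The Z'-factor threshold quoted above has a standard definition: 1 − 3(σ₊ + σ₋) / |μ₊ − μ₋|, computed from positive and negative control wells. The sketch computes it with sample standard deviations and adds a simple fold-change helper for the Learn step. Which wells served as controls in [41], and how that work normalized fold-change, are not stated here. The control values in the usage note and the function names are therefore hypothetical.

```python
import statistics


def z_prime(positive, negative):
    """Z'-factor: 1 - 3*(sd_pos + sd_neg) / |mean_pos - mean_neg|.

    Uses sample standard deviations of the positive and negative control wells.
    """
    sd_p = statistics.stdev(positive)
    sd_n = statistics.stdev(negative)
    separation = abs(statistics.mean(positive) - statistics.mean(negative))
    return 1 - 3 * (sd_p + sd_n) / separation


def fold_change(induced, uninduced):
    """Ratio of mean reporter fluorescence with vs. without ligand."""
    return statistics.mean(induced) / statistics.mean(uninduced)
```

For instance, controls reading [100, 102, 98, 100] and [10, 12, 8, 10] give a Z' of about 0.89, comfortably above the 0.5 cut-off usually taken to indicate a robust assay.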

Workflow Logic and Pathway Diagrams

Figures: Closed-Loop Design-Build-Test-Learn Cycle; Integrated DNA-to-Protein Experimental Workflow.

The Scientist's Toolkit: Key Research Reagent Solutions

| Item | Function in Closed-Loop Workflow | Example/Citation |
|---|---|---|
| Phage Display System | Display protein variant libraries on viral capsids for affinity selection against immobilized targets. | Core of Protein CREATE binding-by-sequencing [39]. |
| Cell-Free Gene Expression (CFE) System | Lysate-based in vitro transcription/translation system for rapid, high-throughput protein synthesis without live cells. | Enabled 3,682 CFE reactions of TF variants in <48 h [41]. Also cited as a DBTL-cycle accelerator [37]. |
| Linear Expression Templates (LETs) | PCR-amplified DNA encoding gene, promoter, and terminator. Bypasses cloning for direct use in CFE. | Used for rapid TF variant testing [41]. |
| Charged Aerosol Detector (CAD) | "Universal" HPLC detector for mass-based concentration determination of non-chromophoric compounds post-purification. | Enables automated quantitation for bioassay dosing [40]. |
| Unique Molecular Identifier (UMI) | Short, random nucleotide barcode added to each DNA molecule pre-sequencing. Enables accurate digital counting of variants. | Critical for quantitative binding scores in Protein CREATE [39]. |
| Echo Acoustic Liquid Handler | Uses sound waves to transfer nanoliter volumes of reagents contact-free. Enables miniaturized, high-density reaction assembly. | Central to automated CFE workflow assembly [41]. |
| OPC-UA Compatible Hardware | Industry-standard communication protocol for connecting lab equipment (synthesizers, analyzers) to a central control system. | Enables closed-loop optimization in automated flow chemistry [42]. |
| Machine Learning Models (ESM-IF, ProteinMPNN) | Protein language or structure-based models for de novo sequence design or optimization given functional constraints. | Used for zero-shot design in case studies [39] [37]. |

Maximizing System Performance: Strategies for Troubleshooting, Optimization, and Scale-Up

Automated purification systems integrated with synthesis platforms are transformative technologies in modern drug development, accelerating the design-make-test-analyze (DMTA) cycle. However, these integrated systems face significant operational challenges that can compromise efficiency and product quality. Fouling of critical components, microbial contamination, and solvent compatibility issues represent the most common pitfalls that can disrupt automated workflows, reduce throughput, and jeopardize product integrity. This application note provides detailed protocols and strategies to mitigate these challenges, enabling researchers to maintain peak performance of automated purification systems while ensuring the synthesis of high-purity compounds.

Understanding the Key Challenges

Membrane and Resin Fouling

In automated filtration and purification systems, fouling occurs through several mechanisms. Particulate fouling involves the accumulation of suspended solids, colloids, and microorganisms on membrane surfaces, forming a cake layer that restricts flow [43]. Organic fouling results from adsorption of natural organic matter (NOM) and algal by-products that create sticky biofilms [43]. Inorganic scaling occurs when dissolved minerals like calcium carbonate precipitate and form crystalline structures on membrane surfaces, particularly problematic in water sources with high mineral content [43]. In chromatography systems, foulants such as host cell proteins, nucleic acids, and lipids can bind to resins, reducing binding capacity and resolution over multiple cycles [44].
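The flow restriction caused by a cake layer is often described with the resistance-in-series form of Darcy's law. This is standard membrane-filtration background offered as an illustration, not a model taken from [43]:

$$
J = \frac{\Delta P_{\mathrm{TM}}}{\mu \left( R_m + R_c + R_f \right)}
\qquad\Rightarrow\qquad
R_c + R_f = \frac{\Delta P_{\mathrm{TM}}}{\mu J} - R_m
$$

The symbols are defined as follows:

- $J$ is the permeate flux.
- $\Delta P_{\mathrm{TM}}$ is the transmembrane pressure.
- $\mu$ is the permeate viscosity.
- $R_m$ is the clean-membrane resistance, which can be obtained from a clean-water flux measurement.
- $R_c$ is the cake-layer resistance.
- $R_f$ is the resistance from pore blocking and adsorption.

Tracking the rise in $R_c + R_f$ at constant transmembrane pressure is one way to follow fouling build-up between cleaning cycles.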

Microbial Contamination