Automated Synthesis Systems in Drug Discovery: A Comparative Review of Commercial Platforms, Applications, and Future Directions


Abstract

This article provides a comprehensive comparison of commercial automated synthesis systems for researchers, scientists, and drug development professionals. It covers the foundational principles of automation in chemical synthesis, explores the methodologies and real-world applications of various commercial platforms, addresses common troubleshooting and optimization challenges, and offers a validated comparative analysis of system capabilities. By synthesizing the latest advancements in robotic platforms, AI integration, and flow chemistry, this review serves as a strategic guide for selecting and implementing automated synthesis technologies to accelerate the Design-Make-Test-Analyse cycle in medicinal chemistry and nanomaterial development.

The Rise of Automated Synthesis: Core Principles and Technological Evolution

The field of chemical synthesis is undergoing a profound transformation, driven by the integration of robotics, artificial intelligence (AI), and machine learning (ML). Automated synthesis, once limited to simple repetitive tasks, now encompasses a broad spectrum of technologies ranging from basic robotic assistance to fully autonomous self-driving laboratories. This evolution is critically important for researchers and drug development professionals seeking to accelerate the discovery and optimization of novel molecules and materials. In the demanding context of drug discovery, the iterative Design-Make-Test-Analyse (DMTA) cycle relies heavily on the efficient synthesis of target compounds, a process that has traditionally represented a significant bottleneck [1]. The emergence of sophisticated automation addresses this challenge directly, enhancing efficiency, improving reproducibility, and enabling the exploration of chemical spaces that are intractable through manual methods [2].

This guide provides a comparative analysis of automated synthesis systems, framing them within a continuum from basic automation to full autonomy. It objectively examines the capabilities, performance, and applications of these systems by synthesizing data from current literature, including experimental protocols and quantitative results. The aim is to offer a clear, data-driven resource that aids scientists in navigating the expanding landscape of synthetic automation.

Defining the Spectrum of Automation

The term "automated synthesis" is not monolithic; it covers a range of systems with varying levels of intelligence, independence, and capability. Understanding this hierarchy is essential for selecting the appropriate technology for a given research goal.

Table: Levels of Automation in Chemical Synthesis

| Automation Level | Human Role | Key Characteristics | Typical Applications |
|---|---|---|---|
| Basic Automation | Operates equipment; designs all experiments. | Robotic execution of pre-defined, repetitive tasks. High throughput but no decision-making. | Parallel synthesis, sample preparation, repetitive reaction steps [2]. |
| Computer-Assisted Synthesis | Interprets AI proposals; selects and executes experiments. | AI-powered retrosynthesis and condition prediction (CASP). Human required for final decision and physical setup [1]. | Planning routes for novel or complex target molecules [1]. |
| Closed-Loop (Self-Optimizing) | Defines initial problem and optimization goals. | System runs experiments, analyzes data, and selects next conditions autonomously via ML. | Multi-objective optimization of reaction conditions and formulations [3] [4]. |
| Autonomous (Self-Driving Labs) | Supervises system; identifies new research objectives. | Highest degree of autonomy. Integrates synthesis, analysis, and AI-driven goal-seeking with minimal intervention [4]. | Discovery of new materials or reactions without pre-defined targets [4]. |

A critical framework for understanding these levels is the "degree of autonomy," which classifies systems based on the required human intervention [4]:

- Piecewise Systems: The algorithm and physical platform are entirely separate. A researcher must collect data, transfer it to an algorithm, and then manually implement the algorithm's suggested next experiments [4].

- Semi-Closed-Loop Systems: There is direct communication between the platform and the algorithm, but a researcher must still intervene for specific steps, such as sample purification or offline analysis [4].

- Closed-Loop Systems: The entire process—experimental execution, data analysis, and selection of subsequent experiments—is conducted without human intervention. This enables rapid, data-greedy optimization campaigns [3] [4].

- Self-Motivated Systems: A theoretical future level where systems can autonomously define and pursue novel scientific objectives, completely replacing human-guided discovery [4].
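The degree-of-autonomy levels can be reduced to a decision rule with two questions: does the platform communicate directly with the algorithm, and which steps still require a person? The Python sketch below is a hypothetical illustration and not a tool from [4]. The `PlatformProfile` fields are assumptions chosen for this example.

```python
from dataclasses import dataclass, field
from enum import Enum


class Autonomy(Enum):
    PIECEWISE = "piecewise"
    SEMI_CLOSED_LOOP = "semi-closed-loop"
    CLOSED_LOOP = "closed-loop"
    SELF_MOTIVATED = "self-motivated"


@dataclass
class PlatformProfile:
    # Hypothetical description of how a platform and its algorithm interact.
    platform_talks_to_algorithm: bool
    manual_steps: list = field(default_factory=list)  # e.g. ["offline purification"]
    sets_own_objectives: bool = False


def classify(profile: PlatformProfile) -> Autonomy:
    """Map a platform profile onto the degree-of-autonomy levels."""
    if not profile.platform_talks_to_algorithm:
        # A researcher moves data to the algorithm and implements its suggestions by hand.
        return Autonomy.PIECEWISE
    if profile.manual_steps:
        # Direct communication exists, but some steps still need a person.
        return Autonomy.SEMI_CLOSED_LOOP
    if profile.sets_own_objectives:
        # No human steps and the system chooses its own goals.
        return Autonomy.SELF_MOTIVATED
    return Autonomy.CLOSED_LOOP
```

For example, `classify(PlatformProfile(True, ["offline purification"]))` returns `Autonomy.SEMI_CLOSED_LOOP`. In this sketch, any remaining manual step is checked before objective-setting, so a system cannot count as self-motivated while it still depends on a human.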

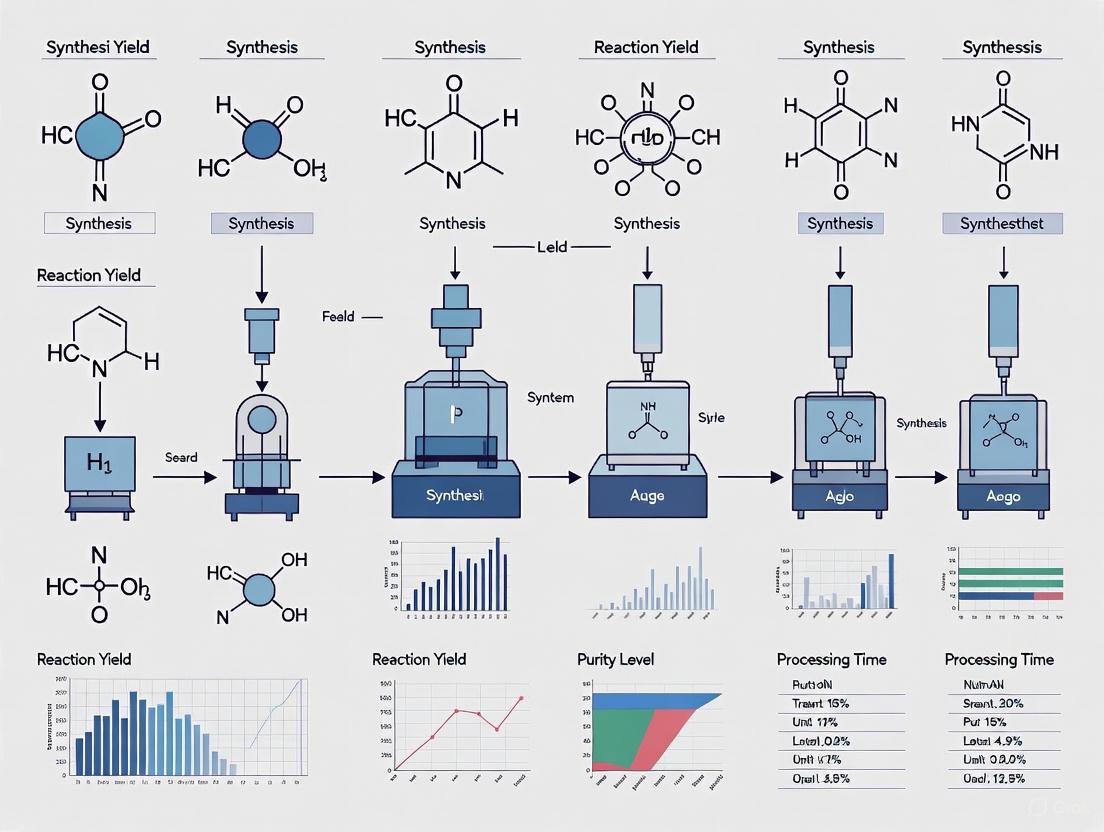

Diagram 1: The spectrum of automation in chemical synthesis, showing increasing delegation of tasks from human to machine.

Performance Metrics and Comparative Data

Evaluating the performance of automated synthesis systems requires a standardized set of metrics beyond simple throughput. Key performance indicators include optimization rate, operational lifetime, throughput, experimental precision, and material usage [4].

Case Study: Many-Objective Optimization of Polymer Nanoparticles

A landmark study demonstrates the power of a closed-loop self-driving laboratory for a complex many-objective optimization. The system synthesized polymer nanoparticles via RAFT polymerisation-induced self-assembly (PISA) and used orthogonal online analytics (NMR, GPC, DLS) to characterize outcomes [3].

- Experimental Protocol: The platform optimized the RAFT polymerisation of diacetone acrylamide mediated by a poly(dimethylacrylamide) macro-CTA. The input variables were temperature (68–80 °C), residence time (6–30 minutes), and the [monomer]:[CTA] ratio (100–600). The multiple, competing objectives were to:

  - Maximize monomer conversion (from NMR).
  - Minimize molar mass dispersity, Đ (from GPC).
  - Target a particle size of 80 nm with minimized polydispersity index (PDI) (from DLS) [3].

- AI Optimization: The study compared cloud-integrated algorithms—TSEMO, RBFNN/RVEA, and EA-MOPSO—to navigate this complex space and generate a Pareto front of optimal solutions [3].

- Performance Outcome: The platform successfully conducted 67 reactions and analyses autonomously over 4 days, mapping the reaction space and identifying optimal conditions that balanced the multiple objectives [3].
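Multi-objective algorithms such as TSEMO report their result as a Pareto front. This is the set of tested conditions that no other tested condition beats on every objective at once. A minimal Python sketch of that non-dominated filter follows. The three outcome tuples are invented for illustration. Encoding the 80 nm size target as the absolute deviation from 80 nm is an assumption and may not match the formulation used in [3].

```python
TARGET_SIZE_NM = 80.0  # particle-size target from the case study


def to_minimization(conversion, dispersity, size_nm, pdi):
    """Convert one experiment's outcomes into a tuple where lower is better."""
    # Conversion is maximized, so it is negated; size is scored by distance from target.
    return (-conversion, dispersity, abs(size_nm - TARGET_SIZE_NM), pdi)


def dominates(a, b):
    """True if a is at least as good as b on every objective and strictly better on one."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def pareto_front(points):
    """Return indices of non-dominated points (simple O(n^2) pairwise scan)."""
    return [i for i, p in enumerate(points)
            if not any(dominates(q, p) for j, q in enumerate(points) if j != i)]


# Hypothetical outcomes: (conversion, dispersity, size in nm, PDI)
runs = [(0.95, 1.25, 95.0, 0.15),   # high conversion, particles too large
        (0.80, 1.15, 82.0, 0.08),   # near-target size, lower conversion
        (0.78, 1.30, 110.0, 0.20)]  # worse than run 1 on every objective
front = pareto_front([to_minimization(*r) for r in runs])
print(front)  # [0, 1]
```

The first two runs trade conversion against size control, so both stay on the front. The pairwise scan is adequate for campaigns of tens of experiments, such as the 67-run study. Larger datasets would normally use a sorting-based algorithm.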

Table: Quantitative Performance Data from SDL Case Studies

| System / Study | Key Performance Metric | Reported Outcome | Context & Notes |
|---|---|---|---|
| Polymer NP SDL [3] | Campaign Duration | 4 days | For 67 experiments with full analysis. |
| | Throughput (Demonstrated) | ~17 experiments/day | Includes synthesis and multi-modal analysis. |
| | Optimization Objectives | 6+ | Monomer conversion, Đ, particle size, PDI, etc. |
| C-CAS / Gomes Lab [5] | Reaction Throughput | 16,000+ reactions | AI-driven system generating over 1 million compounds. |
| Microfluidic Platform [4] | Throughput (Theoretical) | 1,200 measurements/hour | Maximum potential sampling rate. |
| | Throughput (Demonstrated) | 100 samples/hour | Actual rate for studied reactions with longer durations. |
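The derived throughput figures follow from simple arithmetic on the values in the table. The short Python check below uses only those reported numbers.

```python
def throughput_per_day(experiments: int, days: float) -> float:
    """Average experiments completed per day over a campaign."""
    return experiments / days


def utilisation(demonstrated_per_hour: float, theoretical_per_hour: float) -> float:
    """Fraction of the theoretical sampling rate actually achieved."""
    return demonstrated_per_hour / theoretical_per_hour


sdl_rate = throughput_per_day(67, 4)       # polymer NP SDL: 67 experiments in 4 days
micro_fraction = utilisation(100, 1200)    # microfluidic platform: demonstrated vs theoretical
print(f"{sdl_rate:.2f} experiments/day")   # 16.75 experiments/day
print(f"{micro_fraction:.1%} of theoretical")  # 8.3% of theoretical
```

The SDL figure rounds to the ~17 experiments/day in the table. The demonstrated microfluidic rate is about 8% of its theoretical maximum, which matches the note that the studied reactions ran for longer.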

The Impact of Data Quality and FAIR Principles

A critical factor influencing the performance of AI-driven systems is the quality and structure of the underlying data. The adoption of FAIR data principles (Findable, Accessible, Interoperable, Reusable) is emphasized as crucial for building robust predictive models [1]. The "evaluation gap," where model metrics do not always translate to real-world success, is often bridged by enriching models with comprehensive real-world experimental outcomes, including both positive and negative data [1].
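To make "including both positive and negative data" concrete, the Python sketch below shows one way a reaction outcome could be recorded so that failed runs are stored rather than discarded. The `ReactionRecord` schema and its field names are hypothetical and are not a published FAIR standard. Comments indicate which FAIR principle each field is meant to support. Access through a repository or API, the core of the "Accessible" principle, is outside the scope of this sketch.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import Optional


@dataclass
class ReactionRecord:
    record_id: str                 # persistent, unique identifier (Findable)
    reaction_smiles: str           # standard machine-readable notation (Interoperable)
    conditions: dict               # e.g. {"temp_C": 25, "time_min": 60}
    outcome: str                   # "success" or "failed"; failed runs are kept
    yield_pct: Optional[float] = None
    license: str = "CC-BY-4.0"     # explicit reuse terms (Reusable)
    provenance: dict = field(default_factory=dict)  # instrument, operator, date


def to_json(record: ReactionRecord) -> str:
    """Serialize the record to plain JSON, an open text format."""
    return json.dumps(asdict(record), sort_keys=True)


# A hypothetical negative result: esterification that gave no product.
failed = ReactionRecord("rxn-0002", "CC(=O)O.OCC>>CC(=O)OCC",
                        {"temp_C": 25, "time_min": 60}, "failed", yield_pct=0.0)
print(to_json(failed))
```

Recording a zero yield explicitly, instead of omitting the run, gives a predictive model the negative examples it needs to learn where a reaction does not work.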

Essential Tools and Reagents for Automated Workflows

The implementation of effective automated synthesis, particularly in drug discovery, relies on a supporting ecosystem of digital tools, physical robots, and chemical building blocks.

Table: Research Reagent Solutions for Automated Synthesis

| Item / Solution | Function / Description | Role in Automated Workflow |
|---|---|---|
| Building Blocks (BBs) [1] | Diverse monomers and chemical fragments (e.g., carboxylic acids, boronic acids, amines). | Dictate the explorable chemical space for drug candidates; foundational for library synthesis. |
| Pre-weighed BB Support [1] | Suppliers provide building blocks in pre-weighed, solubilized formats. | Eliminates labor-intensive in-house weighing and reformatting, reducing errors and freeing resources. |
| Virtual BB Catalogs [1] | Databases of make-on-demand building blocks (e.g., Enamine MADE). | Vastly expands accessible chemical space beyond physical stock, with synthesis upon request. |
| Chemical ChatBot / LLM [1] [5] | AI interface (e.g., "ChatGPT for Chemists") for intuitive interaction with complex models. | Lowers barrier for chemists to use AI for synthesis planning, route discussion, and SAR exploration. |
| Computer-Assisted Synthesis Planning (CASP) [1] | AI-powered software (e.g., Synthia [6]) for retrosynthetic analysis and route planning. | Generates innovative synthetic routes and evaluates synthetic accessibility during molecular design. |

Workflow Architecture of an Autonomous System

The operational logic of a closed-loop or self-driving laboratory can be abstracted into a core workflow that integrates the physical and digital worlds. This architecture is key to its autonomous function.

Diagram 2: The core closed-loop workflow of a self-driving laboratory, from problem definition to autonomous iteration [3] [4].

Step-by-Step Workflow Protocol:

- Problem Initialization: The researcher defines the input variables (e.g., temperature, concentration, residence time) and their bounds, as well as the target objectives (e.g., maximize yield, minimize dispersity, target particle size). Initial experiments are often selected using a Design of Experiments (DoE) approach or Latin Hypercube Sampling (LHS) [3].

- Automated Synthesis: The robotic platform executes the synthesis under the specified conditions. This often occurs in continuous flow reactors or parallel batch reactors, which are amenable to automation and precise control [3] [2].

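- Orthogonal Online Analytics: Immediately after synthesis, the reaction mixture is automatically characterized. The cited polymer nanoparticle SDL uses NMR spectroscopy for monomer conversion, gel permeation chromatography (GPC) for molar mass dispersity (Đ), and dynamic light scattering (DLS) for particle size and PDI [3].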

- Machine Learning Model Update: The collected data (input conditions and resulting output objectives) is used to train or update a surrogate model (e.g., a Gaussian process) of the experimental space [3].

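- Autonomous Decision Making: A multi-objective optimization algorithm uses the model to propose the next set of experimental conditions most likely to improve the multi-objective goal, thus closing the loop [3]. Examples include TSEMO, which applies Thompson sampling to Gaussian-process surrogates, and RBFNN/RVEA, which pairs a radial basis function neural network surrogate with a reference vector guided evolutionary algorithm.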
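The five steps above can be sketched as a single-objective loop in Python. This is a minimal illustration under stated assumptions and is not the platform's software. The variable bounds come from the polymer nanoparticle case study. However, `run_experiment` is a hypothetical analytic function standing in for synthesis and online analytics. The surrogate is simple inverse-distance weighting, used here in place of a Gaussian process. The proposal rule (predicted value plus a distance-based exploration bonus) replaces TSEMO or RBFNN/RVEA.

```python
import math
import random

# Variable bounds from the polymer nanoparticle case study [3].
BOUNDS = {"temp_C": (68.0, 80.0), "res_time_min": (6.0, 30.0), "ratio": (100.0, 600.0)}
NAMES = list(BOUNDS)


def latin_hypercube(n, rng):
    """Step 1: n initial points, one per stratum in every dimension."""
    columns = []
    for lo, hi in BOUNDS.values():
        strata = list(range(n))
        rng.shuffle(strata)  # independent stratum order per dimension
        columns.append([lo + (s + rng.random()) / n * (hi - lo) for s in strata])
    return [dict(zip(NAMES, row)) for row in zip(*columns)]


def run_experiment(x):
    """Steps 2-3 (hypothetical): stands in for synthesis plus online analytics.

    Returns a score to maximise; the optimum at (76 degC, 20 min, 300) is invented.
    """
    return -(((x["temp_C"] - 76) / 12) ** 2
             + ((x["res_time_min"] - 20) / 24) ** 2
             + ((x["ratio"] - 300) / 500) ** 2)


def _scaled_distance(a, b):
    return math.sqrt(sum(((a[k] - b[k]) / (BOUNDS[k][1] - BOUNDS[k][0])) ** 2
                         for k in NAMES))


def surrogate(x, data):
    """Step 4: inverse-distance-weighted prediction and distance to nearest observation."""
    dists = [_scaled_distance(x, xi) for xi, _ in data]
    nearest = min(dists)
    if nearest < 1e-12:  # x coincides with an observed point
        return data[dists.index(nearest)][1], 0.0
    weights = [1.0 / d ** 2 for d in dists]
    mean = sum(w * y for w, (_, y) in zip(weights, data)) / sum(weights)
    return mean, nearest


def propose(data, rng, n_candidates=500, explore=0.5):
    """Step 5: pick the random candidate with the best predicted value plus exploration bonus."""
    best, best_score = None, -math.inf
    for _ in range(n_candidates):
        x = {k: rng.uniform(lo, hi) for k, (lo, hi) in BOUNDS.items()}
        mean, novelty = surrogate(x, data)
        score = mean + explore * novelty
        if score > best_score:
            best, best_score = x, score
    return best


def closed_loop(n_init=5, n_iter=15, seed=0):
    rng = random.Random(seed)
    data = [(x, run_experiment(x)) for x in latin_hypercube(n_init, rng)]
    for _ in range(n_iter):
        x = propose(data, rng)
        data.append((x, run_experiment(x)))  # the surrogate uses the new point on the next call
    return max(data, key=lambda item: item[1])


best_conditions, best_score = closed_loop()
```

Inverse-distance weighting was chosen only to keep the sketch dependency-free. A practical campaign would use a probabilistic surrogate and a multi-objective selection strategy, as in the algorithms compared in [3].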

The field of automated synthesis is rapidly advancing from systems that simply execute pre-programmed tasks to intelligent partners that can plan and discover autonomously. For researchers and drug development professionals, the choice of system depends heavily on the problem's complexity. Basic automation excels at increasing throughput for well-understood reactions, while closed-loop SDLs are particularly well suited to navigating high-dimensional, multi-objective optimization spaces.

The future points toward greater integration, with "chemical ChatBots" lowering the barrier to using complex AI tools [1], and centralized initiatives like the NSF Center for Computer Assisted Synthesis (C-CAS) fostering collaboration to accelerate the development of these transformative technologies [5]. As these systems become more sophisticated and widespread, they hold the promise of radically shortening discovery timelines, from years to months, and unlocking new frontiers in chemistry and materials science.

The field of chemical synthesis has undergone a revolutionary transformation from manual, labor-intensive processes to highly sophisticated automated systems. This evolution began with Bruce Merrifield's solid-phase peptide synthesis in the 1960s, which introduced the foundational concept of using a solid support to simplify purification and enable automation [7]. This breakthrough earned Merrifield the 1984 Nobel Prize in Chemistry and created a paradigm shift that extended far beyond peptide chemistry, ultimately paving the way for today's AI-driven robotic platforms capable of autonomous synthesis and optimization [8] [9].

The journey from Merrifield's initial concept to modern robotic systems represents a fundamental reimagining of how chemical synthesis is performed. Where traditional organic synthesis has depended on highly trained chemists to create and perform molecular assembly processes manually, automated synthesis has progressively addressed limitations of inconsistent reproducibility, inadequate efficiency, and the time-consuming nature of manual operations [8]. This historical development trajectory has moved through distinct phases: initial automation of simple repetitive steps, integration of computer control and monitoring, and most recently, the incorporation of artificial intelligence and machine learning for autonomous decision-making [10] [11].

For researchers, scientists, and drug development professionals evaluating commercial automated synthesis systems today, understanding this evolutionary pathway provides critical context for comparing system capabilities, identifying appropriate technologies for specific applications, and recognizing both the maturity and limitations of current platforms. This comparison guide examines key developmental milestones, performance characteristics across system generations, and the experimental protocols that demonstrate the capabilities of modern automated synthesis platforms.

Historical Milestones in Automated Synthesis

The Foundational Breakthrough: Solid-Phase Peptide Synthesis

The origins of automated chemical synthesis trace back to R. Bruce Merrifield's pioneering work at The Rockefeller Institute, where he developed and refined solid-phase peptide synthesis (SPPS) between 1959 and 1963 [7]. His revolutionary approach anchored the C-terminal amino acid of the peptide to an insoluble porous resin support, which allowed for the use of excess reagents to drive reactions to completion while enabling simple purification by washing away unreacted materials [7] [9]. This fundamental innovation provided three critical advantages over previous solution-phase methods: it eliminated intermediate purification steps, significantly accelerated synthesis times, and made the process inherently amenable to automation.

Merrifield's method achieved an exceptional chemical reaction efficiency of 99.5%, reducing peptide synthesis from what previously took years to a matter of days [7]. The first automated solid-phase synthesizer emerged in 1968, just five years after Merrifield's initial publication, demonstrating how quickly the technology advanced once the core concept was established [9]. Building on Merrifield's Boc/Bzl protection scheme (1964), subsequent developments such as the Fmoc protecting group (1970) and Wang resin (1973) improved synthesis efficiency and broadened the application scope. Commercial systems progressively incorporated these advances throughout the 1970s and 1980s [9].

Table 1: Key Historical Developments in Solid-Phase Peptide Synthesis

| Year | Development | Significance | Key Researchers/Companies |
|---|---|---|---|
| 1963 | Solid-phase peptide synthesis on crosslinked polystyrene beads | Introduced concept of solid support for simplified purification | Merrifield |
| 1964 | Boc/Bzl protection scheme | Enhanced protection strategy for peptide synthesis | Merrifield |
| 1968 | First automated solid phase synthesizer | Enabled fully automated peptide assembly | Multiple |
| 1970 | Fmoc protecting group introduced | Provided base-labile protection alternative | Carpino and Han |
| 1973 | Wang resin development | Improved cleavage conditions for peptide acids | Wang |
| 1983 | First production synthesizer with preactivation | Industrial-scale peptide synthesis capability | CSBio and others |
| 1987 | First commercial multiple peptide synthesizer | Enabled parallel synthesis for high-throughput | Multiple |
| 2003 | Stepwise preparation of long peptides (~100 AA) | Extended synthesis capability to longer sequences | Multiple |

Expansion Beyond Peptide Synthesis: The Rise of General Automation

While peptide synthesis drove initial automation developments, the underlying principles soon expanded to broader chemical synthesis applications. The 1980s and 1990s saw the emergence of combinatorial chemistry approaches, with simultaneous parallel peptide synthesis (1985) and split-mix synthesis for combinatorial libraries (1988) enabling unprecedented throughput for drug discovery applications [9]. This period also witnessed the development of more sophisticated solid supports and linkers, such as the 2-chlorotrityl chloride resin (1988) and Sieber resin (1987), which expanded the range of molecules that could be efficiently synthesized [9].

The 1990s and 2000s brought major advances in convergent synthesis strategies, most notably Kent's introduction of native chemical ligation in 1994. This method enabled the synthesis of significantly larger proteins and peptides by joining fully synthesized segments [9]. It led to achievements such as the 2007 convergent chemical synthesis of a 203-residue "Covalent Dimer" of the HIV-1 protease enzyme, demonstrating that automated methods could address targets of substantial complexity [9]. Throughout this expansion period, the core principles established by Merrifield (solid support, stepwise synthesis, and automated repetitive cycles) remained fundamental to system designs, even as applications diversified.

Comparative Analysis of Automated Synthesis Systems

Evolution of Technical Capabilities and Performance Metrics

The progression from first-generation automated synthesizers to contemporary AI-integrated robotic platforms has resulted in dramatic improvements across multiple performance dimensions. Early systems focused primarily on automating the repetitive coupling and deprotection steps of solid-phase peptide synthesis, while modern platforms incorporate real-time monitoring, closed-loop optimization, and autonomous decision-making capabilities [8] [11]. The transition from dedicated peptide synthesizers to general-purpose chemical robotics represents perhaps the most significant expansion of capability, with systems like the Chemputer platform demonstrating the ability to synthesize diverse molecular targets beyond peptides, including small molecules and molecular machines [12].

Table 2: Performance Comparison Across Automated Synthesis System Generations

| System Characteristic | First Generation (1970s-1980s) | Second Generation (1990s-2000s) | Modern AI-Integrated Platforms (2010s-Present) |
|---|---|---|---|
| Synthesis Scale | 0.1-0.5 mmol | 0.01-1.0 mmol | 0.001-100 mmol |
| Amino Acid Coupling Time | 60-120 minutes | 20-60 minutes | 5-30 minutes |
| Maximum Peptide Length | 20-30 residues | 30-70 residues | 70-150+ residues |
| Purity for 20-mer | 70-85% | 85-95% | 90-98%+ |
| Monitoring Capabilities | None or basic UV | UV monitoring standard | NMR, LC, MS real-time monitoring |
| Automation Level | Step automation | Full sequence automation | Fully autonomous with optimization |
| Typical Yield | Variable, often low | Consistent, moderate-high | Highly consistent, optimized |

Quantitative performance data demonstrates clear advances across generations. For peptide synthesis, modern systems like those from CSBio can synthesize peptides up to 132 amino acids in length with high purity in a fully automated, unattended operation [13]. This represents a substantial improvement over early systems, which typically maxed out at 20-30 residues with significantly lower purity. The integration of advanced monitoring techniques, including inline NMR and IR spectroscopy, has enabled real-time reaction optimization that was impossible with earlier systems [8] [12]. For general organic synthesis, platforms like the Chemputer have demonstrated the ability to execute complex multi-step syntheses averaging 800 base steps over 60 hours with minimal human intervention [12].

Key Commercial Systems and Their Distinctive Capabilities

The commercial landscape for automated synthesis systems has diversified significantly, with platforms now targeting specific application domains and user requirements. For peptide synthesis, companies like CSBio offer specialized synthesizers ranging from research-scale systems to industrial production units, with capabilities including chilled and heated synthesis, Fmoc/tBu chemistry, and support for difficult peptide sequences through specialized protocols [13]. These systems typically feature fully automated operation, with the ability to complete entire peptide syntheses without user intervention, including automatic solvent and amino acid additions [13].

For broader chemical synthesis applications, platforms such as the Chemputer system represent a more generalized approach to automation. This system uses a chemical description language (XDL) to standardize and automate synthetic procedures, achieving reproducibility across different hardware installations [12]. The integration of online NMR and liquid chromatography provides real-time feedback that enables dynamic adjustment of process conditions, a critical capability for optimizing challenging syntheses [12]. Similarly, the AI-Chemist platform described by Jiang and colleagues incorporates AI for proposal and ranking of synthetic plans, execution of synthetic steps, and monitoring of the entire process through multiple reactions [8].

Table 3: Comparison of Modern Automated Synthesis Platforms

| Platform/System | Primary Application Focus | Key Differentiating Capabilities | Representative Performance Data |
|---|---|---|---|
| CSBio Peptide Synthesizers | Peptide synthesis (research to production) | Fully automated operation; support for long and difficult sequences | 132-mer synthesis unattended; ~300 mg crude peptide from a 15 mL reaction vessel for a 20-mer |
| Chemputer Platform | General organic synthesis; molecular machines | XDL chemical programming language; online NMR/LC monitoring | 800 steps over 60 h for rotaxane synthesis; minimal human intervention |
| AI-Chemist | Broad chemical synthesis with AI integration | Full AI-driven workflow from planning to execution | Autonomous hypothesis testing through 688 reactions in 8 days |
| iChemFoundry (ZJU) | High-throughput chemical discovery | Integration of AI, automation, and high-throughput techniques | Rapid screening and optimization of reaction conditions |
| Radial Flow Synthesizer | Small molecule library generation | Modular continuous flow around central core | Synthesis of rufinamide derivative libraries with inline monitoring |

Experimental Protocols and Methodologies

Standardized Peptide Synthesis Protocol

The fundamental experimental protocol for solid-phase peptide synthesis has kept the same core steps while becoming increasingly optimized through automation. A typical synthesis cycle for each amino acid addition includes deprotection (two treatments, e.g., piperidine in DMF for Fmoc chemistry), DMF washing (six cycles to remove deprotection byproducts), coupling (with activated amino acids), and further DMF washing (two cycles to remove excess coupling reagents) [13]. This cycle repeats for each amino acid in the target sequence, and modern fully automated systems can execute the entire synthesis without user intervention.
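The per-residue cycle above maps naturally onto a step list that a synthesizer controller could execute. The Python sketch below expands a sequence into such a list. The operation names are hypothetical and do not represent a vendor command set. The sketch assumes an Fmoc-protected linker (e.g., Rink amide resin), so every residue, including the C-terminal one, passes through a full cycle. Final N-terminal deprotection and cleavage are omitted.

```python
from typing import List, Tuple

# (operation, repeats) per residue, following the cycle described above [13].
CYCLE = [("deprotect", 2), ("wash_DMF", 6), ("couple", 1), ("wash_DMF", 2)]


def build_program(sequence: str) -> List[Tuple[str, str]]:
    """Expand a peptide (one-letter codes, written N- to C-terminus) into a step list.

    SPPS grows the chain from the C-terminus, so residues are added in reverse order.
    Each step is (operation, residue); only coupling steps carry a residue.
    """
    steps = []
    for residue in reversed(sequence):
        for operation, repeats in CYCLE:
            for _ in range(repeats):
                steps.append((operation, residue if operation == "couple" else ""))
    return steps


program = build_program("GAV")
print(len(program))                                    # 33 steps (3 residues x 11)
print([r for op, r in program if op == "couple"])      # ['V', 'A', 'G']
```

Because the sequence is traversed in reverse, valine, the C-terminal residue of "GAV", is coupled first.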

For a standard 20-mer peptide synthesis using CSBio systems, the detailed methodology involves: (1) loading the appropriate resin into a reaction vessel sized for the desired scale (typically 15 mL at research scale, producing 50-500 mg); (2) preparing protected amino acid solutions in suitable solvents at optimized concentrations; (3) programming the sequence with standard or optimized coupling protocols; (4) initiating automated synthesis, with the system monitoring reagent levels and step completion; (5) final cleavage and deprotection using TFA-based cocktails; and (6) precipitation and purification [13]. The critical parameters for high purity are coupling efficiency (>99.5% per step), adequate washing between steps, and complete deprotection, all of which are continuously optimized in modern systems.
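The >99.5% per-step threshold matters because coupling yields compound. Assume each coupling independently succeeds with probability p. An n-residue peptide built from a pre-loaded C-terminal residue then needs n - 1 couplings, so at most p^(n - 1) of chains reach full length. This simplified model ignores incomplete deprotection and side reactions.

```python
def full_length_fraction(per_step: float, couplings: int) -> float:
    """Upper bound on full-length product if every coupling succeeds with probability per_step.

    Simplified model: ignores incomplete deprotection, side reactions, and losses on cleavage.
    """
    return per_step ** couplings


for residues in (20, 132):
    fraction = full_length_fraction(0.995, residues - 1)  # pre-loaded C-terminal residue
    print(f"{residues}-mer: {fraction:.1%} full length at 99.5% per coupling")
```

At p = 0.995, this gives roughly 91% for a 20-mer but only about 52% for a 132-mer, which is why long sequences demand near-quantitative couplings.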

Advanced Autonomous Synthesis Workflow

For more advanced autonomous platforms like the Chemputer, the experimental protocol incorporates additional layers of monitoring and decision-making. The synthesis of [2]rotaxane molecular machines follows this workflow: (1) synthetic planning using AI-assisted retrosynthesis tools to identify optimal routes; (2) protocol translation into XDL (chemical description language) commands executable by the platform; (3) automated setup with verification of reagent availability and system readiness; (4) execution with real-time monitoring using online NMR and liquid chromatography to track reaction progress; (5) dynamic adjustment of process conditions based on monitoring feedback; (6) automated purification using integrated chromatography systems; and (7) final analysis and data logging for continuous system improvement [12].

This methodology demonstrates how modern systems have expanded beyond simple step automation to incorporate closed-loop optimization. Real-time NMR monitoring enables yield determination at intermediate stages, allowing the system to extend reaction times, adjust temperatures, or modify reagent stoichiometries to maximize outcomes [12]. Integrating multiple purification techniques, including silica gel and size exclusion chromatography, into the automated workflow addresses one of the most persistent bottlenecks in chemical synthesis: product isolation and purification [12].
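The feedback step, in which intermediate NMR readings trigger changes to reaction time or temperature, can be sketched as a simple rule. This is a hypothetical Python illustration and not the Chemputer's control logic. The 95% conversion target, time budget, and temperature limits are placeholder values.

```python
def adjust_conditions(conversion, elapsed_min, temp_C, *, target=0.95,
                      max_time_min=240, step_min=30, max_temp_C=80, temp_step_C=5):
    """Decide the next action from an intermediate conversion reading (hypothetical rule).

    Returns (action, new_time_setpoint_min, new_temp_setpoint_C).
    """
    if conversion >= target:
        return ("proceed_to_workup", elapsed_min, temp_C)
    if elapsed_min + step_min <= max_time_min:
        # Time budget remains: keep going at the same temperature.
        return ("extend", elapsed_min + step_min, temp_C)
    if temp_C + temp_step_C <= max_temp_C:
        # Time budget spent: try a modest temperature increase instead.
        return ("raise_temperature", elapsed_min, temp_C + temp_step_C)
    # No safe adjustment left within the limits: hand over to a chemist.
    return ("flag_for_chemist", elapsed_min, temp_C)


print(adjust_conditions(0.50, 60, 60))  # ('extend', 90, 60)
```

Checking the time budget before temperature reflects a conservative choice to prefer longer reactions over harsher conditions. A real platform might weigh these options differently or also adjust stoichiometry.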

Diagram 1: Autonomous Synthesis Platform Workflow - This diagram illustrates the closed-loop operation of modern AI-integrated synthesis platforms featuring real-time monitoring and dynamic parameter adjustment.

The Scientist's Toolkit: Essential Research Reagent Solutions

Successful implementation of automated synthesis methodologies requires careful selection of specialized reagents and materials that enable efficient and reproducible results. The toolkit has evolved significantly from Merrifield's original polystyrene beads to include diverse solid supports, activating agents, and specialized solvents optimized for automated platforms.

Table 4: Essential Research Reagents for Automated Synthesis Systems

| Reagent/Material | Function | Key Characteristics | Application Notes |
|---|---|---|---|
| Wang Resin | Solid support for peptide acid synthesis | p-alkoxybenzyl alcohol linker; cleavable with TFA | Standard for Fmoc SPPS; good for most standard sequences |
| Rink Amide Resin | Solid support for peptide amide synthesis | TFA-labile linker system | Essential for C-terminal amide peptides |
| 2-Chlorotrityl Chloride Resin | Support for acid-sensitive sequences | Very acid-labile; cleaved with mild acid | Protects acid-sensitive peptides; mild cleavage can release side-chain-protected fragments |
| Fmoc-Protected Amino Acids | Building blocks for chain assembly | Base-labile Fmoc group with appropriate side-chain protection | Standard for Fmoc SPPS protocols |
| HBTU/HATU | Coupling reagents | Activates carboxyl group for amide bond formation | High efficiency with minimal racemization |
| TFA (Trifluoroacetic Acid) | Cleavage and deprotection | Removes peptides from resin and side-chain protecting groups | Standard cleavage cocktail component with scavengers |
| TIS (Triisopropylsilane) | Scavenger | Traps reactive cations during TFA cleavage | Prevents side reactions during final deprotection |
| DIC (Diisopropylcarbodiimide) | Coupling reagent | Activates carboxylic acids for amide bond formation | Often used with Oxyma Pure for enhanced efficiency |
| DMF (Dimethylformamide) | Primary solvent | Dissolves amino acids and reagents; swells resin | High purity essential for successful long syntheses |
| NMP (N-Methyl-2-pyrrolidone) | Alternative solvent | Higher boiling point than DMF; better for some sequences | Useful for difficult couplings or elevated temperatures |

The selection of appropriate reagents fundamentally influences synthesis outcomes, particularly for challenging sequences or specialized targets. For instance, the use of pseudoprolines (introduced in 1996) can dramatically improve the synthesis of difficult peptides by disrupting secondary structure formation that impedes efficient coupling [9]. Similarly, modern coupling reagents like HATU and HBTU provide superior activation with reduced racemization compared to earlier agents, enabling higher purity in the final products [13]. The ongoing development of specialized resins, such as those engineered for specific cleavage profiles or loading capacities, continues to expand the boundaries of what can be successfully synthesized on automated platforms.

The historical development from Merrifield's peptide synthesizer to modern robotic platforms reveals a clear trajectory toward increasingly autonomous, intelligent, and general-purpose synthesis systems. Current challenges facing the field include achieving seamless integration of all modular components, developing more user-friendly interfaces, creating systems with smaller physical footprints suitable for standard laboratories, and reducing costs to broaden accessibility [8]. The ongoing integration of artificial intelligence and machine learning throughout the synthesis workflow, from planning to execution to optimization, represents the most promising avenue for addressing these challenges.

Future developments will likely focus on enhancing the cognitive capabilities of automated platforms, enabling them not only to execute predefined procedures but also to design experiments, interpret results, and formulate new hypotheses based on emerging data [8] [11]. As these systems become more sophisticated and widespread, they promise to free chemists from repetitive manual tasks, allowing greater focus on the creative and strategic aspects of molecular design and discovery [8]. The continued convergence of robotics, artificial intelligence, and chemical synthesis expertise is likely to yield increasingly capable systems that further accelerate discovery and development across pharmaceuticals, materials science, and nanotechnology.

Automated synthesis systems are transforming pharmaceutical research by directly addressing its core challenges: the need for reproducible results, enhanced efficiency, and safer operating environments. This guide objectively compares the performance of different automated approaches—flow chemistry, robotic batch systems, and AI-integrated platforms—using published experimental data to highlight their capabilities and trade-offs.

Performance Comparison of Automated Synthesis Technologies

The table below summarizes quantitative performance data from published studies on various automated synthesis systems, highlighting their impact on key pharmaceutical research drivers.

Table 1: Comparative Performance of Automated Synthesis Systems in Pharmaceutical Applications

| System Type / Study | Application | Reported Yield / Purity | Time Efficiency | Reproducibility & Scalability |
|---|---|---|---|---|
| Automated Flow Chemistry [14] | Multistep synthesis of Diphenhydramine, Lidocaine, Diazepam, Fluoxetine | 82-94% yield (3 compounds); 43% (Fluoxetine) | Diphenhydramine: 15 min (vs. >5 h in batch) | End-to-end integrated platform; demonstrated scalability to thousands of doses |
| Robotic Batch System [15] | Parallel synthesis of 20 BMB-derived nerve-targeting agents | 29% avg. yield; 51% avg. purity | 72 h for 20 compounds (vs. 120 h manual) | High reliability; all 20 compounds successfully synthesized in triplicate |
| AI-Driven LLM Framework [16] | Cu/TEMPO aerobic alcohol oxidation; various other reactions | Not reported (focus on route development and optimization) | Dramatically reduced literature review and experimental planning time | Autonomous, end-to-end development from literature search to purification |
| Automated cDNA Synthesis [17] | cDNA synthesis and labelling for microarrays | N/A | ~5 h for 48 samples | Significantly reduced variance between replicates (Spearman correlation: 0.92 automated vs. 0.86 manual) |

Detailed Experimental Protocols and Methodologies

Automated Flow Chemistry for Active Pharmaceutical Ingredients (APIs)

A reconfigurable, refrigerator-sized continuous flow platform was designed for the end-to-end synthesis of pharmaceutical compounds [14]. The system consisted of an upstream unit (stock containers, pumps, pressure regulators, reactors, separators) and a downstream unit (precipitation, crystallization, formulation), with real-time monitoring via FlowIR [14].

Key Experimental Data:

- Diphenhydramine HCl: 82% yield, 15-minute synthesis (versus >5 hours in batch)

- Lidocaine HCl: 90% yield, 36-minute synthesis (versus 4-5 hours in batch)

- Diazepam: 94% yield, 13-minute synthesis (versus 24 hours in batch)

- Fluoxetine HCl: 43% yield, improved safety profile

This platform demonstrated enhanced reproducibility through precise digital control of flow rates, pressure, and temperature, minimizing human intervention and variability [14].
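The reported times can be turned into approximate speed-up factors. The sketch below takes the batch times from the list above at face value. Where a range or a lower bound is given (">5 hours", "4-5 hours"), it uses the lower value, so those factors are conservative. Fluoxetine is omitted because no batch time is reported for it.

```python
# Flow vs. batch reaction times from the list above, in minutes.
# Where the source gives a range or a lower bound (">5 h", "4-5 h"),
# the lower value is used, so those factors are conservative.
TIMES_MIN = {
    "Diphenhydramine HCl": (15, 5 * 60),
    "Lidocaine HCl": (36, 4 * 60),
    "Diazepam": (13, 24 * 60),
}


def speedup(flow_min: float, batch_min: float) -> float:
    """Fold reduction in synthesis time (batch time / flow time)."""
    return batch_min / flow_min


for name, (flow, batch) in TIMES_MIN.items():
    print(f"{name}: ~{speedup(flow, batch):.0f}x faster in flow")
```

This gives roughly 20x for Diphenhydramine, 7x for Lidocaine, and 111x for Diazepam. Because only reaction times are compared, any work-up or purification time outside the reported figures is not included.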

Robotic Batch System for Parallel Library Synthesis

An integrated robotic system was constructed for solid-phase combinatorial chemistry, comprising five specialized modules: a 360° Robot Arm (RA), Capper-Decapper (CAP), Split-Pool Bead Dispenser (SPBD), Liquid Handler (LH) with heating/cooling capabilities, and a Microwave Reactor (MWR) [15].

Synthesis Protocol for Nerve-Targeting Agents:

- Loading: 4-vinylaniline onto 2-chlorotrityl resin in DCM with DIPEA

- Heck Reaction: Catalyzed by Pd(OAc)₂/P(o-tol)₃/TBAB at 100°C

- Microwave Reaction: Treatment with KOtBu in toluene under microwave conditions

- Cleavage: From beads using 20% TFA/DCM

- Analysis: UPLC and MALDI-TOF MS for characterization

Performance Metrics: The system synthesized 20 BMB derivatives three times with high reliability, achieving an average overall yield of 29% and average library purity of 51%, with 7 compounds exceeding 70% purity [15].
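One way to encode such a protocol for a modular robot is as an ordered list of steps, each bound to the module that executes it. The sketch below is hypothetical. The module abbreviations come from the system description above, but the assignment of steps to modules (for example, running the 100°C Heck step in the heated Liquid Handler) is an assumption for illustration, not the published control scheme.

```python
from dataclasses import dataclass

# Module abbreviations from the system description above.
MODULES = {"RA", "CAP", "SPBD", "LH", "MWR"}


@dataclass(frozen=True)
class Step:
    name: str
    module: str  # hardware module that runs the step (assumed mapping)
    details: str


# Hypothetical encoding of the solid-phase protocol; the module
# assignments are illustrative assumptions, not the published setup.
PROTOCOL = [
    Step("load", "LH", "4-vinylaniline on 2-chlorotrityl resin, DCM/DIPEA"),
    Step("heck", "LH", "Pd(OAc)2/P(o-tol)3/TBAB, 100 C"),
    Step("microwave", "MWR", "KOtBu in toluene"),
    Step("cleave", "LH", "20% TFA/DCM"),
]


def validate(protocol: list[Step]) -> None:
    """Fail fast if a step targets a module the platform does not have."""
    for step in protocol:
        if step.module not in MODULES:
            raise ValueError(f"step {step.name!r}: unknown module {step.module!r}")


def schedule(protocol: list[Step]) -> list[str]:
    """Return a human-readable run sheet, one line per step."""
    validate(protocol)
    return [f"{i}. [{s.module}] {s.name}: {s.details}" for i, s in enumerate(protocol, 1)]


for line in schedule(PROTOCOL):
    print(line)
```

Validating the whole recipe against the available hardware before any reagent is dispensed is what allows the same digital protocol to be rerun in triplicate without manual checks.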

AI-Integrated Synthesis Development Framework

The LLM-based Reaction Development Framework (LLM-RDF) employed six specialized AI agents to autonomously handle synthesis development tasks [16]:

Agent Roles:

- Literature Scouter: Searched academic databases to identify relevant synthetic methodologies

- Experiment Designer: Planned substrate scope and condition screening experiments

- Hardware Executor: Translated experimental designs into automated system commands

- Spectrum Analyzer: Interpreted analytical data (e.g., GC, LC/MS)

- Separation Instructor: Developed purification protocols

- Result Interpreter: Analyzed experimental outcomes and recommended improvements

Application: This framework was successfully demonstrated in developing a copper/TEMPO-catalyzed aerobic alcohol oxidation reaction, from literature search through optimization and scale-up [16].
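The division of labour among the six agents can be pictured as a pipeline that passes a shared state from one role to the next. This is a schematic sketch only. Each agent is a stub function and no language model is called. The function names, state keys, and placeholder values are hypothetical and do not reflect LLM-RDF's actual interfaces.

```python
from typing import Callable

State = dict  # shared record passed from agent to agent

# Each stub stands in for an LLM-backed agent; names and keys are hypothetical.
def literature_scouter(s: State) -> State:
    return {**s, "method": f"literature method for {s['goal']}"}

def experiment_designer(s: State) -> State:
    return {**s, "plan": ["condition A", "condition B"]}

def hardware_executor(s: State) -> State:
    return {**s, "raw_data": {c: f"chromatogram({c})" for c in s["plan"]}}

def spectrum_analyzer(s: State) -> State:
    # Placeholder yields; a real agent would parse GC or LC/MS traces.
    return {**s, "yields": {c: 0.1 * i for i, c in enumerate(s["raw_data"])}}

def separation_instructor(s: State) -> State:
    return {**s, "workup": "purification protocol"}

def result_interpreter(s: State) -> State:
    best = max(s["yields"], key=s["yields"].get)
    return {**s, "recommendation": f"optimize around {best}"}

PIPELINE: list[Callable[[State], State]] = [
    literature_scouter, experiment_designer, hardware_executor,
    spectrum_analyzer, separation_instructor, result_interpreter,
]

def run(goal: str) -> State:
    """Pass the shared state through every agent in order."""
    state: State = {"goal": goal}
    for agent in PIPELINE:
        state = agent(state)
    return state
```

Because the Result Interpreter recommends improvements, its output could seed another design round. The sketch makes a single pass to keep the data flow visible.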

The Scientist's Toolkit: Essential Research Reagent Solutions

Table 2: Key Reagents and Materials for Automated Synthesis Platforms

| Reagent/Material | Function in Automated Synthesis | Example Applications |
|---|---|---|
| 2-Chlorotrityl Resin | Solid support for combinatorial synthesis | Solid-phase synthesis of BMB nerve-targeting agents [15] |
| Pd(OAc)₂/P(o-tol)₃ Catalyst System | Palladium catalyst for cross-coupling reactions | Heck reaction in robotic batch synthesis [15] |
| Cu(I) Salts (CuBr, Cu(OTf)) | Catalyst for aerobic oxidation reactions | Cu/TEMPO catalytic system for alcohol oxidation [16] |
| TEMPO ((2,2,6,6-Tetramethylpiperidin-1-yl)oxyl) | Co-catalyst for selective alcohol oxidation | Sustainable aldehyde synthesis in AI-guided workflow [16] |
| Carboxylic Acid-Coated Paramagnetic Beads | Automated purification of nucleic acids | cDNA cleanup in automated sample preparation [17] |

Experimental Workflows for Automated Synthesis

The following diagrams illustrate the core workflows and decision processes for implementing automated synthesis technologies in pharmaceutical research.

[Diagram: Automated Synthesis System Decision Framework]

[Diagram: End-to-End AI-Driven Synthesis Workflow]

Key Insights for Implementation

Reproducibility Advantage: Automated systems can reduce variability between replicates. Automated cDNA synthesis gave a Spearman correlation of 0.92 between replicates, versus 0.86 for the manual procedure [17].
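The replicate metric cited here is Spearman's rank correlation, which is the Pearson correlation computed on ranks. Below is a minimal pure-Python sketch that assigns average ranks to ties. The two replicate vectors are made-up illustrative numbers, not data from [17].

```python
def ranks(values: list[float]) -> list[float]:
    """1-based ranks, with tied values sharing their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    r = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # average of sorted positions i..j, 1-based
        for k in range(i, j + 1):
            r[order[k]] = avg
        i = j + 1
    return r


def pearson(x: list[float], y: list[float]) -> float:
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    return cov / (vx * vy) ** 0.5


def spearman(x: list[float], y: list[float]) -> float:
    return pearson(ranks(x), ranks(y))


# Hypothetical signal intensities for the same five probes in two replicates.
rep1 = [120.0, 340.0, 55.0, 980.0, 410.0]
rep2 = [130.0, 460.0, 60.0, 1010.0, 450.0]
print(round(spearman(rep1, rep2), 2))  # two probes swap order -> 0.9
```

Ranking makes the statistic insensitive to monotonic differences in scale between runs, so it measures whether replicates agree on ordering. `scipy.stats.spearmanr` computes the same quantity if SciPy is available.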

Efficiency Gains: Automated parallel synthesis can complete 20-compound libraries in 72 hours compared to 120 hours manually—a 40% reduction in synthesis time [15].

Safety Integration: Flow chemistry systems minimize human exposure to hazardous intermediates and enable safer handling of exothermic reactions and high-pressure conditions [14] [18].

The integration of automation with artificial intelligence represents the next frontier, with LLM-based systems now capable of guiding the entire synthesis development process from literature search to experimental execution and optimization [16].

The choice of chemical reactor architecture is a fundamental decision that profoundly impacts the efficiency, safety, and scalability of synthetic processes in research and development. Batch reactors represent the traditional, well-established approach where reactions occur in a closed vessel with all reactants added at the beginning of the process. In contrast, flow reactors (also known as continuous flow reactors) represent a modern paradigm where reactants are continuously pumped through a tube or microstructured system, enabling precise control over reaction parameters. A third category, modular platforms, combines elements of both with advanced automation and robotics to create flexible, programmable synthesis systems. These platforms are increasingly integrated with sophisticated software and real-time analytics, representing the cutting edge of automated chemical synthesis [12].

The global market trends underscore the growing adoption of these technologies. The flow chemistry market, valued at approximately USD 3.5 billion in 2025, is projected to grow at a compound annual growth rate (CAGR) of 8.49% through 2032 [19]. Similarly, the market for fully automated laboratory synthesis reactors is experiencing robust growth, driven by demands for efficiency in pharmaceutical, chemical, and academic research [20]. This guide provides an objective comparison of these core system architectures, focusing on their operational principles, performance characteristics, and suitability for different applications within automated synthesis.

Fundamental Operational Principles

Batch Reactors

Batch processing is a cyclical approach where a specific quantity of reactants is combined in a vessel and exposed to controlled conditions (e.g., heat, pressure) for a defined period. After the reaction is complete, the product is removed, and the reactor is cleaned for the next batch [21] [22]. This method is conceptually simple and highly flexible, allowing for easy changes in reactants or product formulations between batches. Common laboratory-scale batch reactors include pressure reactors and jacketed reactors, often equipped with attachments for monitoring and control [21]. Their primary benefits include excellent quality control for small batches, ease of monitoring, and precise control over reaction time and temperature [22].

Flow Reactors

Flow chemistry involves performing reactions in a continuously flowing stream within a network of tubes, chips, or modules. Plug Flow Reactors (PFRs), a common type, are characterized by minimal back-mixing, with reactants moving in a "plug-like" manner with uniform velocity and consistent residence time [22]. Another type, the Continuous Stirred-Tank Reactor (CSTR), involves continuous addition of reactants and simultaneous removal of products, ideal for reactions requiring thorough mixing [22]. The key advantages of flow systems include superior heat and mass transfer due to high surface-to-volume ratios, enhanced safety when handling hazardous intermediates, access to wider process windows (e.g., high temperatures and pressures), and more straightforward scalability by simply extending operation time ("scale-out") [23] [21].
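Two relations underpin the PFR description above. First, the mean residence time is the reactor volume divided by the volumetric flow rate, τ = V/Q. Second, at steady state the output grows linearly with run time. The sketch below uses an illustrative reactor volume, flow rate, and concentration, and it assumes full conversion for the throughput estimate.

```python
def residence_time_min(volume_ml: float, flow_ml_min: float) -> float:
    """Mean residence time tau = V / Q for an ideal plug flow reactor."""
    return volume_ml / flow_ml_min


def flow_for_residence_time(volume_ml: float, tau_min: float) -> float:
    """Flow rate needed to hit a target residence time: Q = V / tau."""
    return volume_ml / tau_min


def product_mol(flow_ml_min: float, conc_mol_l: float, run_h: float,
                conversion: float = 1.0) -> float:
    """Moles produced by running at steady state for run_h hours."""
    litres = flow_ml_min * 60 * run_h / 1000
    return litres * conc_mol_l * conversion


# Illustrative numbers: a 10 mL coil fed with a 0.5 M solution at 0.5 mL/min.
tau = residence_time_min(10.0, 0.5)          # 20 min
day = product_mol(0.5, 0.5, run_h=24)        # 0.36 mol per day
week = product_mol(0.5, 0.5, run_h=24 * 7)   # seven times as much, same reactor
```

Producing more material here means running longer rather than redesigning the vessel. The residence time and heat-transfer behaviour established at small scale therefore stay the same.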

Modular Automated Platforms

Modular robotic platforms, such as the Chemputer, represent a convergence of hardware automation and digital control. These systems use a chemical description language (χDL) to standardize and automate complex synthetic sequences, integrating various modules for reactions, separations, and purifications [12]. A central innovation is the integration of online analytics like NMR and liquid chromatography, which provide real-time feedback to dynamically adjust process conditions [12]. This enables autonomous execution of multi-step syntheses with minimal human intervention, significantly improving reproducibility for complex and time-consuming procedures, such as the synthesis of molecular machines like rotaxanes [12].
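Online analytics let the platform decide when a step is finished instead of following a fixed timer. The following is a minimal closed-loop sketch. A simulated first-order reaction, with an assumed rate constant, stands in for the NMR or HPLC reading. The hold-time logic is a hypothetical illustration, not the Chemputer's actual control code.

```python
import math

K_PER_MIN = 0.05  # assumed first-order rate constant for the simulation


def online_conversion(t_min: float) -> float:
    """Simulated analytical reading: X(t) = 1 - exp(-k t)."""
    return 1.0 - math.exp(-K_PER_MIN * t_min)


def run_until(target: float, check_every_min: float = 5.0,
              max_min: float = 240.0) -> tuple[float, float]:
    """Extend the reaction in fixed increments until the measured
    conversion reaches the target or the time budget runs out."""
    t = 0.0
    x = online_conversion(t)
    while x < target and t < max_min:
        t += check_every_min
        x = online_conversion(t)
    return t, x


t_stop, x_stop = run_until(0.90)  # stops at the first check with X >= 0.90
```

In a real system, the simulated reading would be replaced by a call to the analytical instrument. The time budget guards against a reaction that stalls below target.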

Table 1: Core Characteristics of Reactor Architectures

| Characteristic | Batch Reactor | Flow Reactor (PFR) | Modular Robotic Platform |
|---|---|---|---|
| Process Nature | Cyclical, closed system | Continuous, steady-state | Programmable, automated workflow |
| Reaction Scale-Up | Volume-based (larger vessels) | Time-based ("scale-out") | Digital recipe replication |
| Heat/Mass Transfer | Moderate (depends on stirring) | Excellent (high surface-to-volume ratio) | Varies with module design |
| Process Control | Discrete parameter control per batch | Precise, continuous control of parameters | Dynamic, with real-time analytical feedback |
| Flexibility | High for changing reactants/products | High for established processes | High, reconfigurable via software |
| Inherent Safety | Limited for exothermic/hazardous reactions | High (small reagent volume at any time) | High, reduces manual handling |

Performance Comparison and Experimental Data

Quantitative Performance Metrics

Direct comparisons in scientific literature provide the most objective performance data. A notable study compared the selective hydrogenation of functionalized nitroarenes, a critical transformation in agrochemical and pharmaceutical industries, using both batch and continuous flow reactors [24]. The data reveals significant differences in performance, particularly regarding selectivity and reaction rate.

Table 2: Comparative Catalytic Performance in Hydrogenation of o-Chloronitrobenzene (o-CNB) [24]

| Catalyst | Operation Mode | Pressure (atm) | Temperature (°C) | Selectivity to o-CAN (o-chloroaniline) | Reaction Rate (mol/(mol metal·h)) |
|---|---|---|---|---|---|
| Pd/C | Batch (Liquid) | 12 | 150 | 86% | 2910 |
| Au/TiO₂ | Batch (Liquid) | 12 | 150 | 100% | 167 |
| Au/TiO₂ | Continuous Flow (Gas) | 1 | 150 | 100% | 12 |
| Au/Mo₂N | Continuous Flow (Gas) | 1 | 220 | 100% | 42 |

The data show that the batch reactor with Pd/C achieved by far the highest reaction rate (2910 mol/(mol metal·h)) but only 86% selectivity. The Au catalysts reached 100% selectivity in both batch and continuous flow, at much lower rates. The flow runs were also carried out in the gas phase at 1 atm rather than 12 atm, so their rates are not directly comparable with the batch values. In this study, selectivity therefore tracks the choice of metal (Pd versus Au) at least as much as the reactor mode. The optimal system depends on the primary objective: maximum speed or maximum purity.
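One way to put the speed/selectivity trade-off on a single axis is to multiply each reported rate by its selectivity. This gives an approximate rate of o-chloroaniline formation. The rough sketch below uses the values from the hydrogenation table above. It ignores the differences in pressure, temperature, and phase between runs, so it compares reported operating points rather than intrinsic catalyst activity.

```python
# (catalyst, conditions, rate in mol/(mol metal*h), selectivity to o-CAN),
# copied from the hydrogenation table above.
RUNS = [
    ("Pd/C", "batch, 12 atm, 150 C", 2910, 0.86),
    ("Au/TiO2", "batch, 12 atm, 150 C", 167, 1.00),
    ("Au/TiO2", "flow (gas), 1 atm, 150 C", 12, 1.00),
    ("Au/Mo2N", "flow (gas), 1 atm, 220 C", 42, 1.00),
]


def selective_rate(rate: float, selectivity: float) -> float:
    """Approximate rate of target-product formation."""
    return rate * selectivity


for cat, mode, rate, sel in RUNS:
    print(f"{cat:8s} {mode:26s} {selective_rate(rate, sel):7.1f}")
```

Even after discounting for selectivity, Pd/C remains the fastest. However, it also forms roughly 407 mol of non-target products per mol of metal per hour (2910 × 0.14), and these must be separated downstream. Whether that matters depends on the purification burden.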

Case Study: Photoredox Reaction Scaling

A detailed study on a flavin-catalyzed photoredox fluorodecarboxylation reaction illustrates a hybrid optimization and scale-up workflow. The process began with High-Throughput Experimentation (HTE) in a 96-well plate batch reactor to screen 24 photocatalysts, 13 bases, and 4 fluorinating agents [23]. After identifying hits, the reaction was optimized using a Design of Experiments (DoE) approach and then transferred to a flow reactor for larger-scale production [23]. The results are summarized below.

Table 3: Scale-Up Performance of a Photoredox Reaction from HTE to Flow [23]

| Scale | Reactor Type | Key Achievements |
|---|---|---|
| Screening/Optimization | 96-well Plate (Batch) | Identification of superior homogeneous photocatalyst and optimal base. |
| Initial Scale-Up (2 g) | Vapourtec Ltd UV150 Photoreactor (Flow) | 95% conversion, demonstrating successful transfer from batch screening. |
| 100 g Scale | Custom two-feed flow setup | Further optimization of light power, residence time, and temperature. |
| Kilo Scale | Production Flow Reactor | 1.23 kg product obtained at 97% conversion, 92% yield (6.56 kg/day throughput). |

This case demonstrates the complementary strengths of each architecture: HTE for rapid, parallel screening and flow reactors for efficient, safe, and highly scalable production.
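The case-study numbers also show why the workflow starts with screening and DoE rather than exhaustive enumeration, and what the kilo-scale throughput means in practice. The quick check below is illustrative. The source does not state that a full factorial screen was run; the count is only a reference point. The run-time figure assumes the reported throughput was constant.

```python
import math

# Screening factors and kilo-scale figures from the case study above.
N_CATALYSTS, N_BASES, N_FLUORINATING = 24, 13, 4
WELLS_PER_PLATE = 96
PRODUCT_KG, THROUGHPUT_KG_PER_DAY = 1.23, 6.56

full_factorial = N_CATALYSTS * N_BASES * N_FLUORINATING      # 1248 reactions
plates_needed = math.ceil(full_factorial / WELLS_PER_PLATE)  # 13 plates

# Equivalent continuous run time for the kilo batch, assuming the
# reported throughput held for the whole run.
run_hours = PRODUCT_KG / THROUGHPUT_KG_PER_DAY * 24           # about 4.5 h
```

Thirteen full plates for one factorial, against a few hours of flow operation for over a kilogram, illustrates the division of labour: parallel batch screening to narrow the space, then flow for production.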

Experimental Protocols for Reactor Comparison

To objectively compare reactor performance, a standardized experimental methodology is essential. The following protocol, based on the hydrogenation study [24], can be adapted for various reactions.

Protocol for Comparative Hydrogenation Study

Objective: To compare the yield, selectivity, and scalability of a model hydrogenation reaction (e.g., halonitrobenzene to haloaniline) in batch versus continuous flow reactors.

Materials:

- Reagents: Halonitrobenzene substrate (e.g., o-chloronitrobenzene), hydrogen gas (H₂), solvent (e.g., ethanol), and catalyst (e.g., Pd/C, Au/TiO₂).

- Equipment: Batch pressure reactor (e.g., 100 mL autoclave), continuous flow fixed-bed reactor (e.g., glass reactor with 15 mm inner diameter), HPLC or GC-MS for analysis, gas mass flow controllers.

Methodology:

- Batch Reaction:

  - Charge the autoclave with a known mass of substrate, solvent, and catalyst.

  - Purge the system with an inert gas (e.g., N₂), then pressurize with H₂ to the target pressure (e.g., 5-12 bar).

  - Heat the reactor to the target temperature (e.g., 150°C) with vigorous stirring.

  - Maintain conditions for a specified time, then cool and depressurize the reactor.

  - Take a sample, separate the catalyst, and analyze for conversion and selectivity.

- Continuous Flow Reaction:

  - Pack the catalyst into the fixed-bed reactor tube.

  - Pre-treat the catalyst under an H₂ stream at the reaction temperature.

  - Prepare a solution of the substrate in the solvent.

  - Feed the substrate solution (by pump) and H₂ gas (via a mass flow controller) at controlled flow rates into the reactor, at the target pressure (e.g., 1 atm) and temperature (e.g., 150-220°C).

  - Allow the system to reach steady state (effluent composition stabilizes).

  - Collect the liquid effluent and analyze for conversion and selectivity.
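The steady-state condition can be made operational with a simple rule: treat the system as settled once the last few effluent measurements agree within a tolerance. The window size, relative tolerance, and sample series below are assumptions. They should be tuned to the noise of the analytical method.

```python
from typing import Optional


def steady_state_index(readings: list[float], window: int = 3,
                       rel_tol: float = 0.02) -> Optional[int]:
    """Index of the first sample at which the last `window` readings
    all lie within rel_tol of their mean; None if never reached."""
    for end in range(window, len(readings) + 1):
        chunk = readings[end - window:end]
        mean = sum(chunk) / window
        if mean > 0 and all(abs(v - mean) <= rel_tol * mean for v in chunk):
            return end - 1
    return None


# Hypothetical conversion readings (%) from successive effluent samples.
conversion = [12.0, 48.0, 71.0, 80.5, 81.2, 80.9, 81.0]
print(steady_state_index(conversion))  # samples 3-5 agree within 2%
```

Samples collected before the returned index belong to the start-up transient and would normally be discarded before computing conversion and selectivity.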

Data Analysis:

- Calculate key performance indicators: Substrate Conversion, Product Selectivity, and Reaction Rate (e.g., mol of converted substrate per mol of metal catalyst per hour).

- Compare operational stability over time, noting any catalyst deactivation across repeated batch runs versus any drift from steady-state conversion in flow.
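The three KPIs follow directly from moles measured before and after reaction. The sketch below assumes that selectivity is defined on converted substrate and that the rate is averaged over the run (a time-averaged turnover frequency). The input numbers are placeholders, not data from [24].

```python
from dataclasses import dataclass


@dataclass
class RunResult:
    substrate_in_mol: float    # substrate charged (batch) or fed (flow)
    substrate_out_mol: float   # unreacted substrate found by HPLC/GC
    product_mol: float         # target haloaniline formed
    metal_mol: float           # moles of active metal in the catalyst
    hours: float               # reaction time (batch) or collection time (flow)


def kpis(r: RunResult) -> dict[str, float]:
    converted = r.substrate_in_mol - r.substrate_out_mol
    return {
        "conversion": converted / r.substrate_in_mol,
        "selectivity": r.product_mol / converted if converted else 0.0,
        # mol substrate converted per mol metal per hour
        "rate": converted / (r.metal_mol * r.hours),
    }


# Placeholder numbers for illustration only.
example = kpis(RunResult(0.010, 0.002, 0.0072, 1e-5, 2.0))
```

Using the same definitions for both reactor modes is what makes the batch and flow numbers comparable. In flow, "substrate in" is the amount fed during the collection window.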

System Workflow and Architecture

The logical workflow for selecting and implementing a reactor system involves assessing chemical requirements, choosing an architecture, and executing the process.

The Scientist's Toolkit: Essential Research Reagent Solutions

The successful implementation of reactor technologies relies on a suite of specialized reagents, catalysts, and materials. The following table details key solutions used in the featured experiments and their functions.

Table 4: Key Research Reagent Solutions for Automated Synthesis

| Reagent/Material | Function | Example Use Case | Compatibility Notes |
|---|---|---|---|
| Supported Metal Catalysts (Pd/C, Au/TiO₂) | Heterogeneous catalyst for hydrogenation reactions. | Selective reduction of nitro groups in halonitroarenes [24]. | Widely used in both batch and flow; choice of metal (Pd vs. Au) critically affects selectivity. |
| Flavin Photocatalysts | Organic photocatalyst for photoredox reactions. | Catalyzes light-driven fluorodecarboxylation reactions [23]. | Enables radical pathways under mild conditions; homogeneous catalysts preferred for flow to avoid clogging. |
| Stainless Steel Reactors | Material for reactor construction. | High-pressure/temperature flow reactions in production scale [19]. | Robust and cost-effective; offers good chemical resistance and thermal stability for many processes. |
| Specialized Solvents (Anhydrous, Deoxygenated) | Reaction medium for air/moisture sensitive chemistry. | Used in HTE for cross-electrophile coupling and organometallic reactions [25]. | Essential for maintaining inert atmosphere in HTE well plates and flow systems. |
| Enzyme Catalysts | Biocatalysts for stereoselective steps. | Used in hybrid organic-enzymatic synthesis planning platforms [26]. | Enables green chemistry principles under mild conditions; integrated into chemoenzymatic workflows. |

The choice between batch, flow, and modular robotic architectures is not a matter of identifying a single superior technology, but rather of selecting the right tool for a specific chemical and operational challenge. Batch reactors remain the versatile and intuitive choice for small-scale R&D, offering unparalleled flexibility. Flow reactors excel in process intensification, safe handling of hazardous chemistry, and scalable production, often leading to higher selectivity and greener processes. Modular automated platforms represent the future of digital synthesis, offering unprecedented reproducibility and autonomy for constructing complex molecules.

The experimental data show that flow systems can match the perfect selectivity of the best batch catalyst, albeit at lower reaction rates. In the hydrogenation case, selectivity depended as much on the catalyst (Au versus Pd) as on the reactor mode. The decision framework for researchers should be guided by the reaction's specific characteristics, the primary goals of the research (e.g., discovery vs. production), and the available infrastructure. The ongoing integration of artificial intelligence and machine learning with these platforms promises to further revolutionize synthetic chemistry, accelerating the discovery and development of new molecules and materials [20] [25].

The advancement of automated synthesis systems is pivotal for accelerating research and development in pharmaceuticals, materials science, and chemical engineering. This guide provides an objective comparison of three core hardware components—robotic arms, liquid handlers, and reactor modules—within the context of commercial automated synthesis systems. Aimed at researchers, scientists, and drug development professionals, the analysis is grounded in recent experimental data and performance metrics to inform strategic investment and integration decisions [20] [26].

Performance Comparison of Core Components

The following tables summarize key quantitative performance indicators and market characteristics for each hardware component, derived from recent studies and reports.

Table 1: Robotic Arm Performance in Visual Servoing & Assembly

| Metric | BFS-Canny-IED Algorithm (RAVS System) [27] | Deep RL for Sequential Fabrication [28] | General AI-Powered Arms [29] |
|---|---|---|---|
| Primary Task | Dynamic visual tracking & servo control | Sequential block assembly (T1: reaching, T2: planning) | Multi-industry tasks (assembly, surgery, harvesting) |
| Key Algorithm/Tech | GPU-accelerated BFS, Canny edge detection, and Harris corner detection | SAC (T1), DDQN (T2) deep reinforcement learning | AI, machine learning, IoT, edge computing |
| Accuracy/Precision | Tracking error converged to a small range; feature detection F1 score >90% | Evaluated via degree and variation indices; DDQN showed strong adaptability | High precision crucial for surgery, electronics assembly |
| Speed/Efficiency | 110 FPS at 4K (4096×2160); avg. run time ≥30.28 ms | Training efficacy and reliability assessed | 24/7 operation; improves throughput in logistics/manufacturing |
| Experimental Context | Robotic Arm Visual Servo (RAVS) system for dynamic targets | Simulated/real block wall assembly for architectural robotics | Case studies in healthcare, logistics, manufacturing, agriculture |

Table 2: Liquid Handler System Market & Technical Trends

| Metric | Automated Liquid Handlers Market [30] [31] | Key Technological Trends [32] [31] | Representative Applications [30] |
|---|---|---|---|
| Market Size (2025) | ~USD 15 billion [31] | N/A | N/A |
| Forecast CAGR (2025-2029/2033) | 5.8% (2025-2029) [30], 10% (2025-2033) [31] | N/A | N/A |
| Dominant Region | North America (est. 35.1% growth contribution) [30] | N/A | N/A |
| Key Drivers | Demand in drug discovery, genomic/proteomic research, clinical diagnostics [30] | AI-driven automation, miniaturization (nanoliter/picoliter), software integration, point-of-care testing [31] | High-throughput screening, assay miniaturization, genomics/ADME-Tox profiling [30] |
| Major Segments | Product: robotic workstations, reagents, accessories. End-user: pharma/biotech, labs [30] | Electronic, automated, manual systems [31] | Drug discovery segment valued at USD 277.6M (2019) [30] |

Table 3: Reactor Module Performance in Chemical Synthesis

| Metric | Fully Automated Lab Synthesis Reactor [20] | OCM Reactor Concepts (Miniplant Scale) [33] | Virtual Synthesis Planning Platform [26] |
|---|---|---|---|
| Reactor Type | Intermittent, Semi-Intermittent, Continuous | Packed Bed (PBR), Packed Bed Membrane (PBMR), Chemical Looping (CLR) | In silico planning (ChemEnzyRetroPlanner) |
| Primary Application | Experimental research, chemical synthesis, catalyst studies [20] | Oxidative Coupling of Methane (OCM) to C2+ hydrocarbons | Hybrid organic-enzymatic retrosynthesis planning |
| Key Performance Indicator | Market CAGR: 8% (2025-2033); valued at ~USD 250M (2025) [20] | C2 Selectivity: PBR (base), PBMR (↑23%), CLR (up to 90%) [33] | Outperforms existing tools in route planning (RetroRollout* algorithm) |
| Innovation Focus | AI/ML integration, high-throughput, miniaturization, continuous flow [20] | Oxygen distribution control to improve selectivity/yield; scalability assessment [33] | AI-driven decision-making, enzyme recommendation, in silico validation |
| Experimental Context | Market analysis and trend assessment [20] | Parametric study of temperature & GHSV on catalyst Mn-Na₂WO₄/SiO₂ [33] | Platform validation across multiple organic compound datasets |

Detailed Experimental Protocols

To contextualize the data in the tables, key experimental methodologies from the cited research are outlined below.

Protocol 1: Validation of Robotic Arm Visual Servo (RAVS) System with BFS-Canny-IED Algorithm [27]

- Objective: To evaluate the real-time performance and tracking accuracy of a visual servo system using a novel image edge detection algorithm.

- System Setup: A robotic arm system integrated with a vision camera. The image processing pipeline was implemented on a GPU for parallel computation.

- Algorithm Steps:

  - Image Acquisition: Capture high-resolution (up to 4K) video feed from the workspace.

  - Feature Extraction: Execute the integrated BFS-Canny-Harris algorithm on the GPU:

    - Apply Gaussian denoising and Sobel gradient calculation.

    - Perform non-maximum suppression and dual thresholding (Canny).

    - Use Breadth-First Search (BFS) to connect weak edges from strong edge "seeds".

    - Simultaneously compute Harris corner detection in parallel threads.

  - Control Law Calculation: Map the extracted 2D feature errors to camera/end-effector velocity commands via an image Jacobian matrix.

- Tracking Experiment: Command the arm to track a dynamic target. Record the positional error over time.

- Metrics Measured: Algorithm frames per second (FPS), average processing time per frame, tracking error convergence, and detection accuracy/recall/F1 scores against ground truth.
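The BFS step is the hysteresis stage of Canny. Pixels above the high threshold seed a breadth-first search, which keeps any connected pixel above the low threshold. The minimal single-threaded sketch below runs on a small gradient-magnitude grid with 8-connectivity. It omits the GPU parallelism, the Gaussian/Sobel stages, non-maximum suppression, and the Harris corners described in [27], and its thresholds are illustrative.

```python
from collections import deque


def hysteresis(mag: list[list[float]], low: float, high: float) -> list[list[bool]]:
    """Keep strong pixels (>= high) plus weak pixels (>= low) that are
    8-connected to a strong pixel, found by breadth-first search."""
    rows, cols = len(mag), len(mag[0])
    edge = [[False] * cols for _ in range(rows)]
    queue = deque()
    for r in range(rows):
        for c in range(cols):
            if mag[r][c] >= high:  # strong pixel: seed the search
                edge[r][c] = True
                queue.append((r, c))
    while queue:
        r, c = queue.popleft()
        for dr in (-1, 0, 1):
            for dc in (-1, 0, 1):
                nr, nc = r + dr, c + dc
                if (0 <= nr < rows and 0 <= nc < cols
                        and not edge[nr][nc] and mag[nr][nc] >= low):
                    edge[nr][nc] = True
                    queue.append((nr, nc))
    return edge


grid = [
    [0, 5, 0, 0, 5],
    [0, 9, 5, 0, 0],
    [0, 0, 0, 0, 0],
]
kept = hysteresis(grid, low=4, high=8)  # the isolated weak pixel at (0, 4) is dropped
```

Each pixel enters the queue at most once, so the search is linear in image size. The strong seeds are independent of one another, which is what makes the step amenable to the parallel GPU implementation described above.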

Protocol 2: Performance Evaluation of OCM Reactor Concepts at Miniplant Scale [33]

- Objective: To compare the selectivity and yield of C2 hydrocarbons (ethane/ethylene) among PBR, PBMR, and CLR configurations.

- Materials: Catalyst (Mn-Na2WO4/SiO2), methane and air/oxygen feeds, tubular reactors, porous α-Alumina membrane (for PBMR), oxygen carrier material (e.g., BSCF for CLR enhancement).

- Procedure:

  - Reactor Operation:

    - PBR: Co-feed CH4 and O2 at set ratios into a catalyst-packed bed.

    - PBMR: Feed CH4 axially through the catalyst bed while distributing O2 radially via a porous membrane to control local concentration.

    - CLR: Operate in cyclic mode: reduce catalyst/oxygen carrier with CH4 (fuel step), then re-oxidize with air (regeneration step).

  - Parameter Variation: Systematically vary temperature (650–950°C) and Gas Hourly Space Velocity (GHSV) for each reactor type.

  - Product Analysis: Analyze effluent gas composition using gas chromatography (GC) to determine concentrations of CH4, O2, C2H4, C2H6, CO, and CO2.

- Data Analysis: Calculate CH4 conversion, C2 selectivity, and C2 yield for each condition. Compare performance maxima and responses to parameter changes across reactor types.
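With GC data in hand, OCM metrics are usually computed on a carbon basis, counting each C2 molecule as two carbons. This minimal sketch makes three assumptions. First, outlet mole flows are available (for example via an internal standard or a known total flow). Second, the carbon products are CH4, C2H4, C2H6, CO, and CO2 only, with other C2+ products ignored. Third, the numbers are illustrative.

```python
def ocm_metrics(ch4_in: float, ch4_out: float, c2h4: float, c2h6: float,
                co: float, co2: float) -> dict[str, float]:
    """Carbon-based OCM metrics from outlet mole flows (same units throughout).

    Selectivity uses carbon found in products, so it does not depend on
    the carbon balance closing exactly.
    """
    conversion = (ch4_in - ch4_out) / ch4_in
    c2_carbon = 2 * (c2h4 + c2h6)
    product_carbon = c2_carbon + co + co2
    selectivity = c2_carbon / product_carbon
    return {
        "CH4 conversion": conversion,
        "C2 selectivity": selectivity,
        "C2 yield": conversion * selectivity,
    }


# Illustrative outlet flows in mmol/min for 100 mmol/min CH4 fed.
m = ocm_metrics(ch4_in=100.0, ch4_out=70.0, c2h4=6.0, c2h6=4.5, co=2.0, co2=7.0)
```

Comparing the converted CH4 (30 mmol/min here) with the product carbon (also 30) gives a quick carbon-balance check before results from the three reactor concepts are compared.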

Protocol 3: Benchmarking Automated Liquid Handler in High-Throughput Screening (HTS) [30] [31]

- Objective: To validate the precision, reproducibility, and throughput of an automated liquid handling workstation in a drug discovery assay.

- Setup: A robotic liquid-handling workstation integrated with a microplate reader and incubator. Use of 96-well or 384-well plates.

- Protocol Steps:

  - System Calibration: Pre-run calibration for volumetric accuracy using dye-based or gravimetric methods at target volumes (nL-µL range).

  - Assay Execution: Automate a standard cell-based or biochemical assay:

    - Dispensing: Serial dilutions of a compound library across plate rows.

    - Reagent Addition: Precise addition of cells, substrates, or detection reagents.

    - Incubation & Reading: Transfer plates to integrated incubator, then to reader.

  - Control Plates: Include manually pipetted plates for comparison.

- Validation Metrics: Measure coefficient of variation (CV%) across replicates for signal intensity, Z′-factor for assay quality, and correlation of dose-response curves (IC50/EC50) between automated and manual runs. Throughput is measured in plates processed per day.
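The two headline QC statistics are simple to compute from control wells. CV% = 100·σ/μ within a set of replicates. The standard Z′-factor is Z′ = 1 − 3(σp + σn)/|μp − μn|, where p and n denote positive and negative controls. The sketch below uses made-up control readings. The threshold Z′ > 0.5 for a robust assay is a common convention rather than part of the protocol above.

```python
import statistics


def cv_percent(values: list[float]) -> float:
    """Coefficient of variation, in percent, using the sample SD."""
    return 100 * statistics.stdev(values) / statistics.mean(values)


def z_prime(pos: list[float], neg: list[float]) -> float:
    """Z'-factor from positive- and negative-control wells."""
    spread = 3 * (statistics.stdev(pos) + statistics.stdev(neg))
    window = abs(statistics.mean(pos) - statistics.mean(neg))
    return 1 - spread / window


# Hypothetical plate-reader signals from control wells.
positive = [1000.0, 980.0, 1020.0, 990.0, 1010.0]
negative = [100.0, 110.0, 90.0, 105.0, 95.0]
print(f"CV(pos) = {cv_percent(positive):.2f}%, Z' = {z_prime(positive, negative):.2f}")
```

Computing both statistics on the automated plates and on the manually pipetted control plates gives a like-for-like comparison of the two dispensing methods.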

System Integration and Workflow Diagrams

[Diagram 1: Integrated Automated Synthesis Workflow]

[Diagram 2: Closed-Loop Experiment Execution Flow]

The Scientist's Toolkit: Key Research Reagent Solutions

This table lists essential materials and their functions as featured in the experimental contexts of the compared hardware systems.

| Component Category | Specific Item / Solution | Primary Function in Experimental Context | Reference |
|---|---|---|---|
| Catalyst & Reaction Materials | Mn-Na₂WO₄/SiO₂ Catalyst | The primary heterogeneous catalyst for the Oxidative Coupling of Methane (OCM) reaction, providing activity and selectivity for C2+ hydrocarbons. | [33] |
| Catalyst & Reaction Materials | Ba0.5Sr0.5Co0.8Fe0.2O3−δ (BSCF) | An oxygen carrier material added to inert packing in a Chemical Looping Reactor (CLR) to enhance the oxygen storage capacity and improve CH4 conversion. | [33] |
| Reactor Hardware | Porous α-Alumina Membrane | A key component of a Packed Bed Membrane Reactor (PBMR) for OCM, enabling controlled, distributed oxygen dosing along the reactor length to improve selectivity. | [33] |
| Image Processing | BFS-Canny-Harris GPU Kernel | A software "reagent" for robotic vision. It is a parallel processing algorithm for high-speed, accurate edge and corner detection, enabling real-time visual servoing. | [27] |
| AI/Planning Algorithm | RetroRollout* Search Algorithm | The core AI search algorithm in the ChemEnzyRetroPlanner platform, used for planning optimal hybrid organic-enzymatic synthesis routes. | [26] |
| Liquid Handling Consumables | High-Precision Microplate & Tips | Essential consumables for automated liquid handlers. Enable accurate nanoliter-to-microliter volume transfers for high-throughput screening assays. | [30] [31] |
| Model & Training Framework | Deep RL Algorithms (SAC, DDQN) | Software frameworks (like Soft Actor-Critic, Double Deep Q-Network) used to train robotic arms for autonomous task learning in sequential fabrication. | [28] |

The Role of AI and Machine Learning in Modern Synthesis Planning

The integration of Artificial Intelligence (AI) and Machine Learning (ML) is fundamentally reshaping the landscape of chemical and biological synthesis planning. This transformation is most evident in the evolution of commercial automated synthesis systems, which are transitioning from manual, expert-driven tools to intelligent, data-driven platforms. This guide provides a comparative analysis of how AI/ML technologies are embedded within modern synthesis systems, evaluating their performance, supported by experimental data and protocols, within the broader context of automated synthesis research.

Comparative Analysis of AI-Driven Synthesis Planning Capabilities

The market for AI in Computer-Aided Synthesis Planning (CASP) is growing rapidly. Published forecasts project growth from USD 2.13 billion in 2024 to USD 68.06 billion by 2034 (a CAGR of about 41.4%), and from USD 3.1 billion in 2025 to USD 82.2 billion by 2035 (a CAGR of about 38.8%) [34] [35]. This growth is driven by the adoption of AI to reduce drug discovery timelines and costs by 30-50% in preclinical phases [35]. The table below compares the core AI functionalities and their implementation across different system types.

Table 1: Comparison of AI/ML Capabilities in Synthesis Planning Systems

| AI/ML Capability | Description & Function | Representative Systems/Approaches | Reported Impact/Performance |
|---|---|---|---|
| Retrosynthesis & Route Prediction | Uses ML/DL models trained on vast reaction databases to propose viable synthetic pathways and predict yields. | CASP Platforms (e.g., Molecule.one, Chematica); LLM-based agents [34] [16]. | Dominates the technology segment with an 80.3% share; enables multi-step route design and ranking by feasibility [34]. |
| Reaction Condition Optimization | Employs algorithms to screen and optimize variables (catalyst, solvent, temperature) for high yield/selectivity. | High-Throughput Experimentation (HTE) integrated with AI; LLM-RDF's Experiment Designer [16] [25]. | AI-driven HTE can simultaneously screen 1536 reactions, accelerating data generation for ML model training [25]. |
| Generative Molecular Design | Leverages generative AI models to design novel chemical structures with desired properties from scratch. | Generative AI models integrated into CASP; used for de novo molecule discovery [35] [36]. | Used to identify novel antibiotic candidates (e.g., Insilico Medicine's Chemistry42); reduces discovery timelines [35]. |
| Experimental Workflow Automation | Coordinates AI planning with robotic hardware for end-to-end, closed-loop "design-make-test-analyze" cycles. | LLM-based Reaction Development Framework (LLM-RDF); integration with lab robotics [34] [16]. | Frameworks like LLM-RDF use specialized agents (e.g., Hardware Executor) to translate plans into automated experiments [16]. |
| Synthetic Data Generation | Creates algorithmically-generated data to augment training sets, protect privacy, and test software. | Synthetic Data Vault; generative models for tabular data [37] [38]. | Over 60% of data for AI applications in 2024 was estimated to be synthetic; used for data augmentation and system testing [38]. |

Small-molecule drug discovery is the dominant application, accounting for 75.2% of the AI-CASP market, and pharmaceutical and biotechnology companies are the primary end-users (70.5% share) [34].
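The CAGR range quoted at the start of this section can be checked against the endpoint values with the standard formula CAGR = (end / start)^(1 / years) - 1. The sketch below assumes that the lower start value pairs with the lower end value over 2024 to 2034, and the higher pair with 2025 to 2035, each spanning 10 years; that pairing is an assumption, not something the cited reports state here.

```python
def cagr(start, end, years):
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    return (end / start) ** (1 / years) - 1

# Endpoints in USD billion as quoted in the text; the pairing and 10-year spans are assumptions
low = cagr(2.13, 68.06, 10)   # assumed 2024 -> 2034 projection
high = cagr(3.1, 82.2, 10)    # assumed 2025 -> 2035 projection
print(f"{low:.1%}, {high:.1%}")  # 41.4%, 38.8%
```

Under this pairing the two projections reproduce both ends of the quoted 38.8%-41.4% range, which suggests the figures are internally consistent.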

Experimental Protocols: Implementing AI in Synthesis Workflows

Protocol: LLM-Agent Driven End-to-End Reaction Development

This methodology, based on the LLM-RDF framework, demonstrates autonomous synthesis development [16].

- Objective: To autonomously develop a synthesis protocol for a target transformation (e.g., Cu/TEMPO aerobic alcohol oxidation).

- Agents & Tools:

  - Literature Scouter: Pre-prompted GPT-4 agent connected to the Semantic Scholar database via retrieval-augmented generation (RAG).

  - Experiment Designer: GPT-4 agent that designs HTE plates for substrate and condition screening.

  - Hardware Executor: Agent that converts experimental designs into instrument commands for automated platforms.

  - Spectrum Analyzer & Result Interpreter: Agents that analyze GC/MS/NMR data and interpret the results.

- Procedure:

  - A natural-language prompt is given to the Literature Scouter to search for relevant methods.

  - The agent retrieves and summarizes papers, recommending a specific catalytic system with extracted conditions.

  - The Experiment Designer receives the conditions and designs a high-throughput screening matrix.

  - The Hardware Executor executes the screening on an automated liquid handling and reactor system.

  - Raw analytical data is processed by the Spectrum Analyzer.

  - The Result Interpreter analyzes yields, identifies trends, and suggests optimization steps.

- Key Data: The framework guided the full development process for the model reaction, including kinetic studies and scale-up, and was further validated on three other distinct reaction types [16].
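The agent hand-offs in this protocol can be sketched as a linear pipeline that passes a shared context from one role to the next. This is a hypothetical illustration, not LLM-RDF's code: every "agent" below is a deterministic stub standing in for an LLM call or instrument driver, and the `Context` structure, substrate names (`alcohol_1`...), ligand labels (`L1`, `L2`) and yields are all invented for the example.

```python
from dataclasses import dataclass, field

@dataclass
class Context:
    """Shared state handed from one agent to the next (hypothetical structure)."""
    prompt: str
    notes: dict = field(default_factory=dict)
    trace: list = field(default_factory=list)

def literature_scouter(ctx):
    # Stub for an LLM with retrieval over literature: returns fixed placeholder conditions.
    ctx.notes["conditions"] = {"catalyst": "Cu/TEMPO", "oxidant": "air"}
    return ctx

def experiment_designer(ctx):
    # Stub for plate design: cross placeholder substrates with placeholder ligands.
    substrates = ["alcohol_1", "alcohol_2", "alcohol_3"]
    ligands = ["L1", "L2"]
    ctx.notes["plate"] = [
        {"substrate": s, "ligand": l, **ctx.notes["conditions"]}
        for s in substrates for l in ligands
    ]
    return ctx

def hardware_executor(ctx):
    # Stub for translating the design into instrument commands (one string per well).
    ctx.notes["commands"] = [
        f"well {i}: add {w['substrate']}, {w['ligand']}, {w['catalyst']}"
        for i, w in enumerate(ctx.notes["plate"])
    ]
    return ctx

def spectrum_analyzer(ctx):
    # Stub for parsing GC/MS/NMR data: invented yields that depend only on the ligand.
    invented = {"L1": 80.0, "L2": 60.0}
    ctx.notes["yields"] = [invented[w["ligand"]] for w in ctx.notes["plate"]]
    return ctx

def result_interpreter(ctx):
    # Pick the best well and phrase a next-step suggestion.
    plate, yields = ctx.notes["plate"], ctx.notes["yields"]
    best = max(range(len(yields)), key=yields.__getitem__)
    ctx.notes["recommendation"] = f"prefer ligand {plate[best]['ligand']} (yield {yields[best]:.0f}%)"
    return ctx

PIPELINE = [literature_scouter, experiment_designer, hardware_executor,
            spectrum_analyzer, result_interpreter]

def run(prompt):
    ctx = Context(prompt)
    for agent in PIPELINE:
        ctx = agent(ctx)
        ctx.trace.append(agent.__name__)  # record hand-off order for auditing
    return ctx

result = run("Develop a protocol for Cu/TEMPO aerobic alcohol oxidation")
print(result.trace)
print(result.notes["recommendation"])
```

In the actual framework each role is backed by an LLM prompt or an instrument interface, with a chemist reviewing outputs; the sketch only shows the sequential hand-off and the kind of state each role adds.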

Protocol: AI-Enhanced High-Throughput Experimentation (HTE) for Optimization

This protocol highlights the synergy between AI and HTE for rapid reaction optimization [25].

- Objective: To optimize a reaction by simultaneously exploring a multi-dimensional condition space.

- Materials: Automated liquid handler, microtiter plates (MTPs), diverse reagent stock solutions, in-line or parallel analysis system (e.g., UPLC-MS).

- Procedure:

  - Strategic Design: Use AI/cheminformatics tools to design a non-random, bias-reduced screening library of reagents and conditions, moving beyond simple grid searches.

  - Automated Execution: Use robotics to dispense nanomole-to-micromole quantities of reagents into MTPs, under an inert atmosphere if required.

  - Parallel Reaction & Analysis: Run reactions in parallel, followed by high-throughput analysis. Data is automatically processed into a structured format.

  - ML Model Training: Use the generated dataset (including "negative" results) to train a predictive ML model (e.g., for yield or selectivity).

  - Closed-Loop Optimization: The model predicts promising, unexplored conditions, which are then tested in the next HTE cycle.

- Key Consideration: Mitigating spatial bias within plates (e.g., edge effects) is critical for reproducibility [25].
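The closed loop described in this protocol can be sketched in a few dozen lines. This is a minimal illustration under stated assumptions, not the method of [25]: the factor levels are placeholders, the "robot plus UPLC-MS" step is a simulated yield function with noise, and the ML model is replaced by a deliberately simple additive main-effects surrogate (global mean plus per-level deviations). Conditions are placed on randomly shuffled interior wells of a 96-well plate as one simple way to keep edge effects from being confounded with any particular condition.

```python
import itertools
import random
from statistics import mean

# Hypothetical factor levels (placeholders, not taken from the source)
CATALYSTS = ["cat_A", "cat_B", "cat_C"]
SOLVENTS = ["solv_1", "solv_2", "solv_3", "solv_4"]
TEMPS_C = [25, 40, 60]
SPACE = list(itertools.product(CATALYSTS, SOLVENTS, TEMPS_C))  # 36 candidate conditions

def interior_wells():
    """Wells B2..G11 of a 96-well plate, i.e. excluding the outer ring prone to edge effects."""
    return [f"{r}{c}" for r in "BCDEFG" for c in range(2, 12)]

def run_plate(conditions, rng):
    """Simulated execution + analysis: an invented yield function plus Gaussian noise."""
    wells = interior_wells()
    assert len(conditions) <= len(wells), "batch larger than usable wells"
    rng.shuffle(wells)  # randomized layout so no condition is tied to one plate position
    results = []
    for well, (cat, solv, temp) in zip(wells, conditions):
        y = {"cat_A": 40, "cat_B": 55, "cat_C": 30}[cat]
        y += {"solv_1": 20, "solv_2": 5, "solv_3": -10, "solv_4": 0}[solv]
        y += 0.3 * (temp - 25) + rng.gauss(0, 2)
        results.append({"well": well, "cond": (cat, solv, temp),
                        "yield": min(100.0, max(0.0, y))})
    return results

def fit_main_effects(results):
    """Additive surrogate: global mean plus the mean deviation of each factor level."""
    mu = mean(r["yield"] for r in results)
    effects = []
    for i in range(3):
        by_level = {}
        for r in results:
            by_level.setdefault(r["cond"][i], []).append(r["yield"])
        effects.append({lvl: mean(ys) - mu for lvl, ys in by_level.items()})

    def predict(cond):
        # Levels never observed contribute 0, i.e. fall back to the global mean.
        return mu + sum(effects[i].get(cond[i], 0.0) for i in range(3))
    return predict

def closed_loop(n_rounds=3, batch=8, seed=0):
    rng = random.Random(seed)
    tested = {}
    # Round 1: random sample (stand-in for a designed, bias-reduced library)
    for r in run_plate(rng.sample(SPACE, batch), rng):
        tested[r["cond"]] = r["yield"]
    for _ in range(n_rounds - 1):
        predict = fit_main_effects([{"cond": c, "yield": y} for c, y in tested.items()])
        untested = [c for c in SPACE if c not in tested]
        next_batch = sorted(untested, key=predict, reverse=True)[:batch]
        for r in run_plate(next_batch, rng):
            tested[r["cond"]] = r["yield"]
    best = max(tested, key=tested.get)
    return best, tested[best], len(tested)

best, best_yield, n_tested = closed_loop()
print(best, round(best_yield, 1), n_tested)
```

The greedy top-k selection only exploits the surrogate's predictions; a practical loop would typically use a richer model and an acquisition rule that also explores uncertain regions of condition space.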

Visualization of an Integrated AI-Driven Synthesis Workflow

Diagram 1: AI-Driven Synthesis Planning & Execution Cycle

The Scientist's Toolkit: Key Research Reagent Solutions

The effectiveness of automated synthesis is contingent on the integration of hardware, software, and high-quality consumables.

Table 2: Essential Research Reagent Solutions for AI-Enhanced Synthesis

| Item Category | Specific Examples | Function in AI/ML Workflow |
|---|---|---|
| Specialized Reagents & Building Blocks | Protected amino acids (Fmoc/Boc), solid-phase synthesis resins, diverse catalyst libraries, proprietary reagent kits (e.g., for peptide synthesis) [39] [40]. | Provide the chemical basis for reactions. AI platforms design syntheses assuming these materials are available, so consistent quality is critical for reproducible HTE and reliable ML training data. |
| Integrated Software/Platforms | AI-CASP software (e.g., Schrödinger, BIOVIA, ChemPlanner), generative AI models (Chemistry42), laboratory information management systems (LIMS) [34] [35]. | Form the "brain" of the operation: they perform retrosynthesis, predict conditions, manage experimental data, and interface with automation hardware. |
| Automated Synthesis Hardware | Automated parallel peptide/DNA synthesizers (e.g., from CEM, Biotage, Twist Bioscience), robotic liquid handlers, modular reactor platforms (e.g., Chemspeed) [41] [39] [40]. | Act as the "hands": they physically execute the experiments designed by AI, enabling the high-throughput data generation that ML depends on. |
| Analytical & Purification Modules | In-line UPLC-MS, GC-MS, automated flash chromatography systems, HPLC purification integrated with synthesizers [39] [25]. | Act as the "eyes": they enable rapid, parallel analysis of reaction outcomes, converting chemical results into digital data for AI/ML analysis and decision-making. |
| Synthetic Data Generation Tools | Platforms such as the Synthetic Data Vault (SDV) for tabular data [38]. | Augment limited experimental datasets, protect sensitive information, and create data for stress-testing synthesis planning algorithms before real-world application. |

Performance Comparison: AI vs. Traditional Methods

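AI-integrated systems show clear advantages over traditional manual approaches across the metrics below, although most entries are reported ranges or qualitative comparisons rather than head-to-head benchmarks.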

Table 3: Performance Metrics: AI-Augmented vs. Traditional Synthesis Planning

| Metric | Traditional Manual Approach | AI-Augmented/Automated Approach | Data Source / Context |
|---|---|---|---|
| Route Design Time | Days to weeks, based on expert literature search and intuition. | Minutes to hours, via algorithmic search of reaction databases and predictive modeling [36] [16]. | LLM agents can complete literature search and data extraction in a single query [16]. |
| Experimental Throughput | Low (one to several reactions per day). | Very high (hundreds to thousands of reactions per day via HTE) [25]. | Ultra-HTE allows 1536 reactions to run simultaneously [25]. |
| Data Generation for Learning | Sparse; often only successful results are reported. | Comprehensive, structured datasets including negative results, well suited to ML [25]. | FAIR data management of HTE output is key to robust ML models [25]. |
| Optimization Cycle Time | Iterative, linear OVAT (one variable at a time) testing. | Parallel, multivariate search with closed-loop AI recommendations. | AI uses HTE data to predict optimal conditions, substantially reducing the number of optimization cycles [34] [25]. |
| Discovery Serendipity | Relies on individual researchers' observations. | Can be engineered through broad, unbiased screening libraries designed by AI [25]. | Strategic plate design can reduce bias and promote the discovery of novel reactivity [25]. |
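The OVAT versus multivariate contrast in Table 3 hinges on factor interactions. The toy example below uses an invented 4-by-4 yield table for two hypothetical factors, A and B, with a strong interaction, and compares a one-variable-at-a-time scan with an exhaustive grid, the simplest multivariate design. The numbers are illustrative only and do not come from any cited study.

```python
import itertools

# Invented yield table (%): row index = level of factor A, column index = level of factor B.
# Strong A x B interaction: raising A lowers yield at the lowest B level but raises it at high B.
Y = [
    [50, 52, 54, 55],
    [45, 55, 65, 72],
    [40, 60, 75, 85],
    [35, 62, 80, 90],
]

def ovat(y, b_start=0):
    """One variable at a time: scan A with B fixed, then scan B at the best A."""
    tested = {(a, b_start) for a in range(len(y))}
    best_a = max(range(len(y)), key=lambda a: y[a][b_start])
    tested |= {(best_a, b) for b in range(len(y[0]))}
    best_b = max(range(len(y[0])), key=lambda b: y[best_a][b])
    return (best_a, best_b), y[best_a][best_b], len(tested)

def full_factorial(y):
    """Test every combination in parallel, as a single HTE plate would allow."""
    points = list(itertools.product(range(len(y)), range(len(y[0]))))
    best = max(points, key=lambda p: y[p[0]][p[1]])
    return best, y[best[0]][best[1]], len(points)

print(ovat(Y))            # ((0, 3), 55, 7)
print(full_factorial(Y))  # ((3, 3), 90, 16)
```

OVAT needs fewer experiments here (7 versus 16) but stops at 55% because it never tests high A together with high B. Exhaustive grids grow quickly with more factors, which is why the designed libraries and model-guided selection described earlier matter for realistic condition spaces.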

In conclusion, AI and ML have moved from an ancillary to a central role in modern synthesis planning, turning automated synthesis systems from passive tools into active, collaborative research partners. The comparative data suggest that systems integrating advanced CASP, generative AI, and robotic execution offer substantial gains in speed, efficiency, and data quality. However, the prevailing model remains "human-in-the-loop", with scientists' expertise guiding and validating the proposals of intelligent algorithms [36] [16]. The future of commercial systems lies in further democratizing access to these capabilities and improving interoperability across the digital-experimental divide.

Commercial Platform Deep Dive: Technologies, Capabilities, and Sector Applications

This guide provides an objective comparison of leading commercial automated synthesis systems, focusing on their operational principles, performance data, and applicability in modern research and drug development.

The following table summarizes the core characteristics of the automated synthesis systems.

| System Name | Primary Approach / Architecture | Key Differentiating Features | Reported Synthesis Capabilities |
|---|---|---|---|
| Chemputer [42] [43] [44] | Chemputation: programmable execution of chemical reaction code on universally reconfigurable hardware, framed as a Chemical Synthesis Turing Machine (CSTM) [42]. | • Universal chemical computer goal: aims to synthesize any stable, isolable molecule in finite time [42]. • Code-driven: reactions are defined in a high-level chemical description language (χDL), and the code is portable between platforms [42] [44]. • Closed-loop error correction: dynamic error-correction routines provide fault-tolerant execution [42]. | • Validated synthesis: autonomously produced the pharmaceuticals diphenhydramine hydrochloride (the active ingredient of Nytol), rufinamide, and sildenafil, with yields comparable to or better than manual synthesis [44]. |
| Chemspeed [45] [46] | Modular, configurable automation: base systems combined with robotic tools, modules, reactors, and software for tailored workflows [45]. | • Scalable design: start with a benchtop system (e.g., SWING) and expand as needed [45]. • Integrated software suite: AUTOSUITE software for experiment design and execution [45]. • Broad toolset: integrated gravimetric solid dispensing, liquid handling, and various reactors [46]. | • High reproducibility: excels at running multiple repetitive and sequenced reactions with high consistency [45] [46]. • End-to-end workflows: runs complex multi-step workflows, including reaction, workup, and analysis, without human intervention [46]. |
| Scintomics GRP | Not documented in the cited sources | Not documented in the cited sources | Not documented in the cited sources |
| Symyx | Not documented in the cited sources | Not documented in the cited sources | Not documented in the cited sources |

Performance and Experimental Data Comparison

Documented Synthesis Performance

| System | Reported Experiment / Molecule | Reported Yield / Performance Data | Key Experimental Conditions |
|---|---|---|---|
| Chemputer | Multi-step syntheses of diphenhydramine hydrochloride (Nytol), rufinamide, and sildenafil [44] | Yields comparable to or better than those achieved manually [44]. | • Full autonomy: no human intervention [44]. • Digital code execution: recipes written in a chemical programming language (χDL) [42] [44]. |
| Chemspeed | High-throughput reaction optimization (e.g., microwave optimization) [46] | Enables automated, sequential experimentation outside of lab working hours [46]. | • Integrated peripherals: gravimetric dispensing, liquid handling, and reactor blocks (e.g., MTP pressure block) [46]. • Software-controlled parameters: temperature, reagent amounts, time [46]. |

Experimental Protocols and Methodologies

1. Chemputer-based Automated Synthesis Protocol [42] [44]

- Step 1: Code-Based Reaction Design: The synthetic route for a target molecule is defined using the χDL chemical programming language. This code describes the reaction graph, including reagents, unit operations, and process variables. [42]

- Step 2: Compilation ("Chempilation"): The high-level χDL code is compiled by the "chempiler" software, which maps the abstract reaction graph onto the specific hardware configuration of the Chemputer rig. [42]

- Step 3: Automated Execution: The compiled code is executed on the modular Chemputer hardware.

  - Reagent Handling: The system automatically handles solid and liquid reagents from designated source vials. [42]

  - Reaction Control: Reactions are carried out in sequence in appropriate reactors, with control over stirring, heating, and cooling. [44]

  - Work-up and Purification: The system can perform subsequent work-up operations, such as liquid-liquid extraction or evaporation, as programmed. [44]

- Step 4: In-Line Analysis and Error Correction: The platform can integrate real-time analysis (e.g., NMR, MS) for feedback. A dynamic error-correction routine provides fault-tolerant execution by keeping per-step fidelity high, which matters because errors compound across the many operations of a multi-step synthesis. [42]

- Step 5: Product Isolation: The final product is delivered in a specified vessel. [44]
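The compilation step (Step 2) is essentially a mapping from hardware-independent operations onto a graph of physical components. The sketch below illustrates that idea only; it is not the chempiler and does not use χDL syntax. The rig layout, component names, step dictionaries (`Add`, `Stir`, `HeatChill` are illustrative labels), the `chempile` function and the command strings are all hypothetical, and route finding uses a plain breadth-first search.

```python
from collections import deque

# Hypothetical rig: flasks and a reactor connected through two selector valves.
HARDWARE = {
    "flask_solvent": ["valve_1"],
    "flask_amine": ["valve_1"],
    "valve_1": ["flask_solvent", "flask_amine", "valve_2"],
    "valve_2": ["valve_1", "reactor"],
    "reactor": ["valve_2"],
}

# Hardware-independent procedure: it names reagents and vessels, never valves.
PROCEDURE = [
    {"op": "Add", "reagent": "THF", "vessel": "reactor", "volume_ml": 10.0},
    {"op": "Add", "reagent": "amine", "vessel": "reactor", "volume_ml": 2.0},
    {"op": "Stir", "vessel": "reactor", "rpm": 500, "time_s": 600},
    {"op": "HeatChill", "vessel": "reactor", "temp_c": 60, "time_s": 3600},
]
REAGENT_LOCATIONS = {"THF": "flask_solvent", "amine": "flask_amine"}

def shortest_path(graph, start, goal):
    """Breadth-first search for the route with the fewest hardware hops."""
    queue = deque([[start]])
    seen = {start}
    while queue:
        path = queue.popleft()
        if path[-1] == goal:
            return path
        for nxt in graph[path[-1]]:
            if nxt not in seen:
                seen.add(nxt)
                queue.append(path + [nxt])
    raise ValueError(f"no route from {start} to {goal}")

def chempile(procedure, locations, graph):
    """Map abstract steps onto this particular rig as low-level command strings."""
    commands = []
    for step in procedure:
        op, vessel = step["op"], step["vessel"]
        if op == "Add":
            src = locations[step["reagent"]]
            path = shortest_path(graph, src, vessel)
            # Set every valve on the route to connect its upstream and downstream neighbours.
            for prev, node, nxt in zip(path, path[1:], path[2:]):
                if node.startswith("valve_"):
                    commands.append(f"{node}: connect {prev} -> {nxt}")
            commands.append(f"transfer {step['volume_ml']} mL {src} -> {vessel}")
        elif op == "Stir":
            commands.append(f"stir {vessel} at {step['rpm']} rpm for {step['time_s']} s")
        elif op == "HeatChill":
            commands.append(f"heat {vessel} to {step['temp_c']} C for {step['time_s']} s")
        else:
            raise ValueError(f"unsupported step: {op}")
    return commands

for cmd in chempile(PROCEDURE, REAGENT_LOCATIONS, HARDWARE):
    print(cmd)
```

Because the procedure never refers to valves, the same `PROCEDURE` list could be compiled against a differently plumbed `HARDWARE` graph, which is the portability argument made for χDL code above.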

2. Chemspeed Workflow Optimization Protocol [46]

- Step 1: Platform Zoning: In the APPLICATION EDITOR software, the user defines the physical layout of the platform by dragging and dropping vial racks, reactor blocks, and solid and liquid reservoirs into virtual "zones". [46]

- Step 2: Macro Task Creation: The user creates a "Macro" task in the task editor, defining a sequence of operations.

  - Transfer Tasks: Specifies gravimetric solid dispensing or volumetric liquid transfer from source to destination zones. [46]

  - Reaction Control: Defines reaction parameters (temperature, time) for the reactor blocks. [46]

  - Workflow Logic: Programs the movement of vials to and from reactors and workup zones. [46]

- Step 3: Application Execution and Monitoring: The method is run via the APPLICATION EXECUTOR. The platform initializes all instruments and then executes the sequence. Progress of reactions can be monitored in real-time, and data (e.g., reaction progress charts) can be exported for analysis. [46]
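The zoning and macro concepts in this protocol can be mirrored in a small data model. This is a hypothetical sketch, not the APPLICATION EDITOR, APPLICATION EXECUTOR or AUTOSUITE interfaces: the class names, task kinds, parameter keys and the validation rule are assumptions chosen to show why defining zones first lets software check a method before any hardware moves.

```python
from dataclasses import dataclass, field

@dataclass
class Zone:
    name: str
    kind: str       # e.g. "solid_reservoir", "liquid_reservoir", "vial_rack", "reactor_block"
    positions: int

@dataclass
class Task:
    kind: str       # e.g. "dispense_solid", "transfer_liquid", "move_vial", "react"
    params: dict

@dataclass
class Macro:
    name: str
    tasks: list = field(default_factory=list)

def validate(macro, zones):
    """Return one message per task parameter that names a zone not defined during zoning."""
    errors = []
    for i, task in enumerate(macro.tasks):
        for key in ("source", "destination", "reactor"):
            zone = task.params.get(key)
            if zone is not None and zone not in zones:
                errors.append(f"task {i} ({task.kind}): unknown zone {zone!r}")
    return errors

def execute(macro, zones):
    """Dry-run executor: refuse invalid macros, 'initialise' every zone, then log tasks in order."""
    errors = validate(macro, zones)
    if errors:
        raise ValueError("; ".join(errors))
    log = ["initialise " + ", ".join(sorted(zones))]
    log += [f"{t.kind} {t.params}" for t in macro.tasks]
    return log

ZONES = {z.name: z for z in [
    Zone("solids", "solid_reservoir", 8),
    Zone("solvents", "liquid_reservoir", 4),
    Zone("rack_A", "vial_rack", 24),
    Zone("reactors", "reactor_block", 16),
]}

screen = Macro("screen_v1", [
    Task("dispense_solid", {"source": "solids", "destination": "rack_A", "mg": 5.0}),
    Task("transfer_liquid", {"source": "solvents", "destination": "rack_A", "ml": 1.0}),
    Task("move_vial", {"source": "rack_A", "destination": "reactors"}),
    Task("react", {"reactor": "reactors", "temp_c": 80, "time_min": 60}),
])

for line in execute(screen, ZONES):
    print(line)
```

A macro that refers to an undefined zone (for example a `react` task pointing at `"oven"`) is rejected by `validate` before execution starts, which mirrors the benefit of declaring the platform layout up front.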

System Workflows and Functional Logic

The core functional logic of the Chemputer and Chemspeed platforms can be visualized in the following diagrams, highlighting their distinct approaches to automating chemical synthesis.

Chemputation Workflow Logic

Chemspeed Application Execution Logic