Automating Biopharma: AI-Driven Strategies for Reaction Scale-Up and Purification

Abstract

This article provides a comprehensive guide for researchers and drug development professionals on integrating automation and artificial intelligence into reaction scale-up and product purification. It covers foundational principles of automated reaction pathway exploration and modern purification technologies such as single-use tangential flow filtration (TFF). The content delivers practical methodologies for implementation, addresses common troubleshooting and optimization challenges, and outlines rigorous validation and comparative analysis frameworks. By synthesizing recent advances, this resource aims to equip scientists to accelerate process development, enhance product quality, and de-risk the transition from lab to production.

Foundations of Automation: From Reaction Pathways to Purification Principles

Automated Exploration of Reaction Pathways and Potential Energy Surfaces

The automated exploration of reaction pathways and Potential Energy Surfaces (PES) is a major advance in computational chemistry, enabling rapid prediction of the reaction mechanisms and kinetics that matter for pharmaceutical development. Traditional methods for mapping a PES (the multidimensional landscape that defines energy as a function of molecular geometry) have relied heavily on chemical intuition and manual intervention, which makes them time-consuming and difficult to scale. Combining machine learning (ML), automated workflow systems, and high-performance computing now allows researchers to discover reaction pathways, transition states, and catalytic cycles systematically with minimal human input [1] [2]. This is particularly valuable in drug development, where understanding complex molecular transformations is essential for optimizing synthetic routes, predicting metabolite pathways, and designing efficient catalysts.

Within the broader thesis of automated reaction scale-up and purification, precise PES knowledge provides the fundamental thermodynamic and kinetic parameters needed to model reactions across scales. Automated exploration bridges quantum-mechanical calculations with industrial application, creating a data-driven pipeline from mechanistic insight to process optimization [3]. This document details the core frameworks, software tools, experimental protocols, and applications that constitute the modern automated PES exploration toolkit for research scientists.

Core Frameworks and Software Tools

Several sophisticated software frameworks now enable automated PES exploration, each employing distinct strategies to navigate chemical space efficiently.

The autoplex Framework

The autoplex framework implements an automated approach to exploring and fitting machine-learned interatomic potentials (MLIPs) to PES data. Its design emphasizes interoperability with existing materials modelling infrastructure and enables high-throughput computation on scalable systems. A key innovation is the integration of random structure searching (RSS) with active learning, where the MLIP is iteratively improved using data from DFT single-point evaluations. This method significantly reduces the need for costly ab initio molecular dynamics simulations by focusing computational resources on the most informative configurations [1].

The framework has been validated across diverse systems, including elemental silicon, TiO₂ polymorphs, and the full titanium-oxygen binary system. For stable and metastable phases, autoplex reaches quantum-mechanical accuracy (errors on the order of 0.01 eV/atom) after a few thousand single-point calculations [1]. Although these validations concern inorganic materials, the same approach is relevant to pre-clinical drug development, where understanding the solid-form landscape of an Active Pharmaceutical Ingredient (API) is critical.

LLM-Guided Pathway Exploration

ARplorer, a program written in Python and Fortran, uses Large Language Models (LLMs) to guide the chemical logic of automated reaction pathway exploration. It combines quantum mechanics with rule-based methods and improves efficiency through active learning in transition-state sampling and through parallel multi-step reaction searches with efficient filtering [2]. Case studies on organic cycloadditions, asymmetric Mannich-type reactions, and organometallic catalysis illustrate its capacity for high-throughput screening, positioning it as a tool for data-driven reaction development and catalyst design [2].

AMS PESExploration Module

The PESExploration module within the Amsterdam Modeling Suite (AMS) automates the discovery of reaction pathways and transition states. It systematically maps the PES to identify local minima, transition states, and entire reaction networks without the need for manual pre-guessing of geometries [4]. Its application to reactions like water splitting on a TiO₂ surface demonstrates how it provides immediate insights into reaction energetics and kinetics through an intuitive interface [4].

Hybrid Mechanistic and Data-Driven Modeling

For process scale-up, a hybrid modeling framework that integrates molecular-level kinetic models with deep transfer learning addresses the challenge of predicting product distribution across different reactor scales. This approach uses a mechanistic model to describe the intrinsic reaction kinetics from lab data and employs transfer learning to adapt to the changing transport phenomena at pilot or industrial scale [3]. A key feature is a specialized deep transfer learning network architecture using Residual Multi-Layer Perceptrons (ResMLPs) that mirror the logic of the mechanistic model, allowing for targeted fine-tuning when process conditions or feedstock compositions change [3].
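To make the three-module layout more concrete, here is a minimal, dependency-free sketch of a residual MLP and of a process/molecule/integrated composition in plain Python. It is not the network from [3]: the layer widths, the concatenation of the two branch outputs, and the softmax output (used here so that predicted product fractions sum to 1) are illustrative assumptions, the weights are random rather than trained, and a practical implementation would use a deep learning library such as PyTorch.

```python
import math
import random


def matvec(W, x):
    """Dense matrix-vector product on nested lists."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def relu(v):
    return [max(0.0, vi) for vi in v]


def random_matrix(rows, cols, rng, scale=0.1):
    return [[rng.uniform(-scale, scale) for _ in range(cols)] for _ in range(rows)]


class ResidualBlock:
    """y = x + W2 @ relu(W1 @ x); the skip connection carries x through unchanged."""

    def __init__(self, dim, hidden, rng):
        self.W1 = random_matrix(hidden, dim, rng)
        self.W2 = random_matrix(dim, hidden, rng)

    def __call__(self, x):
        update = matvec(self.W2, relu(matvec(self.W1, x)))
        return [xi + ui for xi, ui in zip(x, update)]


class ResMLP:
    """Input projection, a stack of residual blocks, and a linear read-out."""

    def __init__(self, in_dim, width, out_dim, n_blocks, rng):
        self.W_in = random_matrix(width, in_dim, rng)
        self.blocks = [ResidualBlock(width, 2 * width, rng) for _ in range(n_blocks)]
        self.W_out = random_matrix(out_dim, width, rng)

    def __call__(self, x):
        h = matvec(self.W_in, x)
        for block in self.blocks:
            h = block(h)
        return matvec(self.W_out, h)


def softmax(v):
    m = max(v)
    e = [math.exp(vi - m) for vi in v]
    s = sum(e)
    return [ei / s for ei in e]


class HybridNet:
    """Process branch and molecule branch, concatenated and passed to an integrated head."""

    def __init__(self, n_process, n_feed, n_products, rng, width=8):
        self.process_net = ResMLP(n_process, width, width, 2, rng)
        self.molecule_net = ResMLP(n_feed, width, width, 2, rng)
        self.integrated_net = ResMLP(2 * width, 2 * width, n_products, 2, rng)

    def __call__(self, process_conditions, feed_composition):
        # List concatenation of the two branch embeddings.
        z = self.process_net(process_conditions) + self.molecule_net(feed_composition)
        return softmax(self.integrated_net(z))  # product fractions that sum to 1


net = HybridNet(n_process=3, n_feed=5, n_products=4, rng=random.Random(0))
fractions = net([0.5, 0.1, 0.2], [0.2, 0.2, 0.2, 0.2, 0.2])
```

Keeping the process and molecule branches as separate objects is what makes targeted fine-tuning possible later: one branch can be frozen while the other is updated.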

Table 1: Quantitative Performance of the autoplex Framework for Selected Systems [1]

| System | Target Structure/Phase | DFT Single-Point Evaluations to Reach ~0.01 eV/atom Accuracy | Final Energy Error (eV/atom) |
|---|---|---|---|
| Silicon (Elemental) | Diamond-type | ~500 | ~0.01 |
| | β-tin-type | ~500 | ~0.01 |
| | oS24 allotrope | Few thousand | ~0.01 |
| Titanium-Oxygen (Binary Oxide) | Rutile (TiO₂) | ~1,000 | ~0.01 |
| | Anatase (TiO₂) | ~1,000 | ~0.01 |
| | TiO₂-Bronze | Several thousand | ~0.01 |
| Full Ti-O System | Ti₂O₃ | Several thousand | ~0.001 |
| | TiO (Rocksalt) | Several thousand | ~0.001 |

Experimental Protocols

This section provides detailed methodologies for implementing automated PES exploration, from initial setup to data analysis.

Protocol 1: Automated PES Exploration with Active Learning

This protocol describes the general workflow for setting up an automated PES exploration run using an active-learning framework like autoplex or ARplorer.

3.1.1 Reagents and Computational Resources

- Hardware: High-performance computing (HPC) cluster with multiple nodes, each with ≥ 16 CPU cores and ≥ 128 GB RAM. GPU acceleration is beneficial for MLIP training.

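- Software: an automated exploration framework (e.g., autoplex [1] or ARplorer [2]), an MLIP fitting code such as GAP [1], a workflow manager such as atomate2 [1], and a reference electronic-structure (DFT) code.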

- Initial Data: Initial molecular geometry/structures as 3D Cartesian coordinates in XYZ file format or Crystallographic Information File (CIF).

3.1.2 Step-by-Step Procedure

System Definition:

- Define the chemical system, including all constituent elements.

- Specify the initial structure(s) or a composition for random structure generation.

- Set the calculation parameters for the reference electronic structure method (e.g., DFT functional, basis set, cut-off energy).

Workflow Configuration:

- Configure the active learning loop parameters: batch size (e.g., 100 structures per iteration), total number of iterations, and convergence criteria for the MLIP (e.g., target energy error).

- Define the RSS parameters for generating new candidate structures, such as cell and atomic displacement magnitudes.

Initial Model Generation (Optional):

- If no initial data exists, perform a short, initial RSS using a generic potential or DFT to generate a small, diverse training set.

MLIP Training:

- Train an initial MLIP on the available training data (from the initial model generation step, or provided by the user).

Active Learning Loop:

- Step A: Exploration. Use the current MLIP to perform RSS, generating thousands of candidate structures.

- Step B: Selection. Evaluate all explored structures with the MLIP and select a batch of candidates for DFT validation. Selection is based on uncertainty quantification (e.g., structures with a high estimated prediction error) or on energy-based criteria aimed at finding novel minima or transition states.

- Step C: Validation. Perform single-point DFT calculations on the selected candidates to obtain accurate energies and forces.

- Step D: Augmentation. Add the newly validated data points to the training dataset.

- Step E: Retraining. Retrain the MLIP on the augmented dataset.

- Repeat Steps A-E until the convergence criteria are met (e.g., no new low-energy structures are found, or MLIP error is below a threshold).

Analysis:

- Cluster the final set of structures to identify all unique local minima (reactants, products, intermediates).

- Perform nudged elastic band (NEB) or dimer calculations between minima to find transition states and confirm connectivity, using the robust final MLIP to accelerate the process.

- Calculate thermodynamic and kinetic properties (reaction energies, barrier heights) for the constructed reaction network.
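The loop in Protocol 1 can be illustrated end to end on a toy one-dimensional problem. This is a sketch under stated assumptions, not the API of autoplex or ARplorer: a hypothetical double-well function stands in for DFT single points, a zero-mean Gaussian-process regressor (the model class behind GAP, here with fixed, hand-picked hyperparameters) stands in for the MLIP, uniform random sampling stands in for RSS, and the GP predictive standard deviation is the uncertainty used for selection. The analysis step is reduced to a grid scan; in one dimension the highest point between two minima plays the role that a NEB or dimer search plays in many dimensions.

```python
import math
import random


def reference_energy(x):
    """Hypothetical 1D double well, standing in for a DFT single-point calculation."""
    return x**4 - 2.0 * x**2 + 0.3 * x


def rbf(a, b, length=0.4, variance=4.0):
    """Squared-exponential kernel with fixed (not optimized) hyperparameters."""
    return variance * math.exp(-0.5 * ((a - b) / length) ** 2)


def cholesky(K):
    """Lower-triangular L with L @ L^T = K."""
    n = len(K)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = K[i][j] - sum(L[i][k] * L[j][k] for k in range(j))
            L[i][j] = math.sqrt(s) if i == j else s / L[j][j]
    return L


def forward_sub(L, b):
    """Solve L x = b."""
    x = []
    for i, row in enumerate(L):
        x.append((b[i] - sum(row[k] * x[k] for k in range(i))) / row[i])
    return x


def back_sub(L, b):
    """Solve L^T x = b without forming the transpose."""
    n = len(L)
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (b[i] - sum(L[k][i] * x[k] for k in range(i + 1, n))) / L[i][i]
    return x


class GPSurrogate:
    """Zero-mean GP regression: a minimal stand-in for a GAP-style MLIP."""

    def __init__(self, xs, ys, noise=1e-6):
        self.xs = list(xs)
        n = len(self.xs)
        K = [[rbf(self.xs[i], self.xs[j]) + (noise if i == j else 0.0) for j in range(n)]
             for i in range(n)]
        self.L = cholesky(K)
        self.alpha = back_sub(self.L, forward_sub(self.L, list(ys)))  # K^-1 y

    def mean(self, x):
        return sum(rbf(x, xi) * a for xi, a in zip(self.xs, self.alpha))

    def std(self, x):
        v = forward_sub(self.L, [rbf(x, xi) for xi in self.xs])
        return math.sqrt(max(rbf(x, x) - sum(vi * vi for vi in v), 0.0))


def active_learning(seed=0, batch=3, n_candidates=100, max_iter=20, tol=0.05):
    rng = random.Random(seed)
    train_x = [rng.uniform(-2.0, 2.0) for _ in range(3)]  # small initial training set
    train_y = [reference_energy(x) for x in train_x]
    for _ in range(max_iter):
        model = GPSurrogate(train_x, train_y)                               # (re)train
        candidates = [rng.uniform(-2.0, 2.0) for _ in range(n_candidates)]  # A: exploration
        ranked = sorted(candidates, key=model.std, reverse=True)            # B: rank by uncertainty
        if model.std(ranked[0]) < tol:                                      # convergence criterion
            break
        picked = []
        for x in ranked:  # greedy batch that skips near-duplicate candidates
            if all(abs(x - p) > 0.2 for p in picked):
                picked.append(x)
            if len(picked) == batch:
                break
        train_x += picked                                                   # C + D: label, augment
        train_y += [reference_energy(x) for x in picked]
    return GPSurrogate(train_x, train_y)                                    # E: final retrain


def analyze(model, n=401, lo=-2.0, hi=2.0):
    """Two lowest grid minima, the highest point between them, and the barrier height."""
    grid = [lo + (hi - lo) * i / (n - 1) for i in range(n)]
    e = [model.mean(x) for x in grid]
    minima = [i for i in range(1, n - 1) if e[i] < e[i - 1] and e[i] < e[i + 1]]
    a, b = sorted(sorted(minima, key=lambda i: e[i])[:2])
    top = max(range(a, b + 1), key=lambda i: e[i])
    return grid[a], grid[b], grid[top], e[top] - min(e[a], e[b])


x_left, x_right, x_ts, barrier = analyze(active_learning())
```

For this toy function the exact minima lie near x ≈ -1.036 and x ≈ 0.960, with the barrier top near x ≈ 0.075, so the surrogate's answers can be checked directly against the reference.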

Protocol 2: Cross-Scale Modeling via Transfer Learning

This protocol uses hybrid modeling and transfer learning to adapt a lab-scale kinetic model for pilot-scale prediction, crucial for reaction scale-up.

3.2.1 Reagents and Computational Resources

- Data:

- Source Domain: High-fidelity laboratory-scale dataset with detailed molecular-level product distributions under various conditions.

- Target Domain: Limited pilot-scale dataset, typically comprising bulk property measurements (e.g., boiling point distribution, density).

- Software: Python with deep learning libraries (e.g., PyTorch, TensorFlow), and computational fluid dynamics (CFD) software for data generation.

3.2.2 Step-by-Step Procedure

Develop Mechanistic Model:

- Build a molecular-level kinetic model for the complex reaction system (e.g., fluid catalytic cracking) using laboratory-scale data [3].

- Generate a comprehensive dataset of molecular compositions and product distributions under varied lab-scale conditions using this model.

Design Neural Network Architecture:

- Construct a dual-input network architecture as described in [3]:

- Process-based ResMLP: Takes process conditions (temperature, pressure, residence time) as input.

- Molecule-based ResMLP: Takes feedstock molecular composition as input.

- Integrated ResMLP: Combines outputs from both networks to predict product molecular composition.


Train Laboratory-Scale Model:

- Train the entire neural network on the dataset generated from the lab-scale mechanistic model. This model now serves as a fast, data-driven surrogate for the lab-scale reactor.

Incorporate Property-Formation Equations:

- Integrate mechanistic equations for calculating bulk properties (e.g., cetane index, octane number) into the output layer of the neural network. This bridges the gap between molecular-level predictions and available pilot-scale data [3].

Transfer Learning Fine-Tuning:

- Freeze the layers of the network that are deemed scale-invariant (e.g., the Molecule-based ResMLP if the feedstock is unchanged).

- Fine-tune the remaining layers (e.g., Process-based and Integrated ResMLPs) using the limited pilot-scale bulk property data. This adapts the model to the new reactor geometry and associated transport phenomena.

Pilot-Scale Prediction and Optimization:

- Use the fine-tuned model to predict product distribution and properties at the pilot scale.

- Connect the model to a multi-objective optimization algorithm to identify optimal pilot plant conditions for target objectives (e.g., maximizing yield, minimizing impurities) [3].
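The freeze-then-fine-tune step, with a property layer bridging molecular-level predictions and bulk pilot data, can be sketched with scalar stand-ins for the three modules. Everything below is a hypothetical toy rather than the model in [3]: the "molecule module" is a single weight `a`, the "process module" is a logistic conversion curve with weights `b` and `c`, the integrated output is their product, the property layer is an assumed linear blending rule with made-up pure-lump property values, and gradients are taken by central finite differences instead of backpropagation.

```python
import math

# Hypothetical pure-lump property values for an assumed linear blending rule.
P_FEED, P_PRODUCT = 60.0, 90.0


def molecule_module(params, feed_frac):
    """Scale-invariant part: reactive fraction of the feed (frozen during transfer)."""
    return params["a"] * feed_frac


def process_module(params, temp_scaled):
    """Scale-dependent part: conversion as a logistic function of scaled temperature."""
    return 1.0 / (1.0 + math.exp(-(params["b"] * temp_scaled + params["c"])))


def predict_product_fraction(params, feed_frac, temp_scaled):
    """Integrated output: molecular-level product fraction."""
    return molecule_module(params, feed_frac) * process_module(params, temp_scaled)


def bulk_property(y):
    """Property-formation layer: a fixed equation with no trainable weights."""
    return y * P_PRODUCT + (1.0 - y) * P_FEED


def pilot_loss(params, data):
    """Mean squared error against pilot bulk-property measurements only."""
    errors = [bulk_property(predict_product_fraction(params, f, t)) - p for f, t, p in data]
    return sum(e * e for e in errors) / len(errors)


def fine_tune(params, data, trainable, lr=1e-3, steps=3000, h=1e-5):
    """Gradient descent on the trainable subset; frozen parameters are never touched."""
    params = dict(params)
    for _ in range(steps):
        grads = {}
        for name in trainable:
            up, down = dict(params), dict(params)
            up[name] += h
            down[name] -= h
            grads[name] = (pilot_loss(up, data) - pilot_loss(down, data)) / (2 * h)
        for name, g in grads.items():
            params[name] -= lr * g
    return params


# Illustrative "lab-trained" parameters, plus synthetic pilot data generated from shifted
# process parameters to mimic different transport behavior at the larger scale.
LAB_PARAMS = {"a": 0.8, "b": 3.0, "c": 0.5}
_PILOT_TRUTH = {"a": 0.8, "b": 2.0, "c": -0.5}
PILOT_DATA = [
    (f, t, bulk_property(predict_product_fraction(_PILOT_TRUTH, f, t)))
    for f in (0.6, 0.75, 0.9)
    for t in (-1.0, -0.5, 0.0, 0.5, 1.0)
]

tuned = fine_tune(LAB_PARAMS, PILOT_DATA, trainable=("b", "c"))
```

Because `a` is excluded from `trainable`, it keeps its lab value exactly, while `b` and `c` absorb a scale-dependent shift that is visible only through the bulk property.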

Applications in Pharmaceutical Research and Scale-Up

Automated PES exploration tools are catalyzing advances in several key areas of drug development:

Reaction Mechanism Elucidation and Optimization: Tools like ARplorer and AMS PESExploration can automatically map out complex multi-step reaction pathways, including those involving organocatalysis or transition metal catalysis, which are ubiquitous in API synthesis [2]. This provides a fundamental understanding of reaction selectivity and helps identify strategies to suppress impurity formation.

Solid Form Landscape Assessment: Extending the efficient, high-accuracy phase exploration demonstrated by autoplex to polymorphs, hydrates, and co-crystals of an API would support intellectual property protection and help ensure the stability and bioavailability of the final drug product [1].

Accelerated Process Scale-Up: The hybrid transfer learning approach directly addresses the "scale-up gap" [3]. By enabling accurate prediction of pilot-scale performance from lab data, it reduces the need for expensive and time-consuming trial-and-error campaigns, accelerating the transition from bench to production.

Integration with Purification Protocols: Understanding the reaction network and impurity profile generated from PES studies allows for the proactive development of purification methods. Software like Chrom Reaction Optimization 2.0 can then be used to fine-tune analytical and preparative chromatography methods for isolating the API and key intermediates from complex reaction mixtures [5].

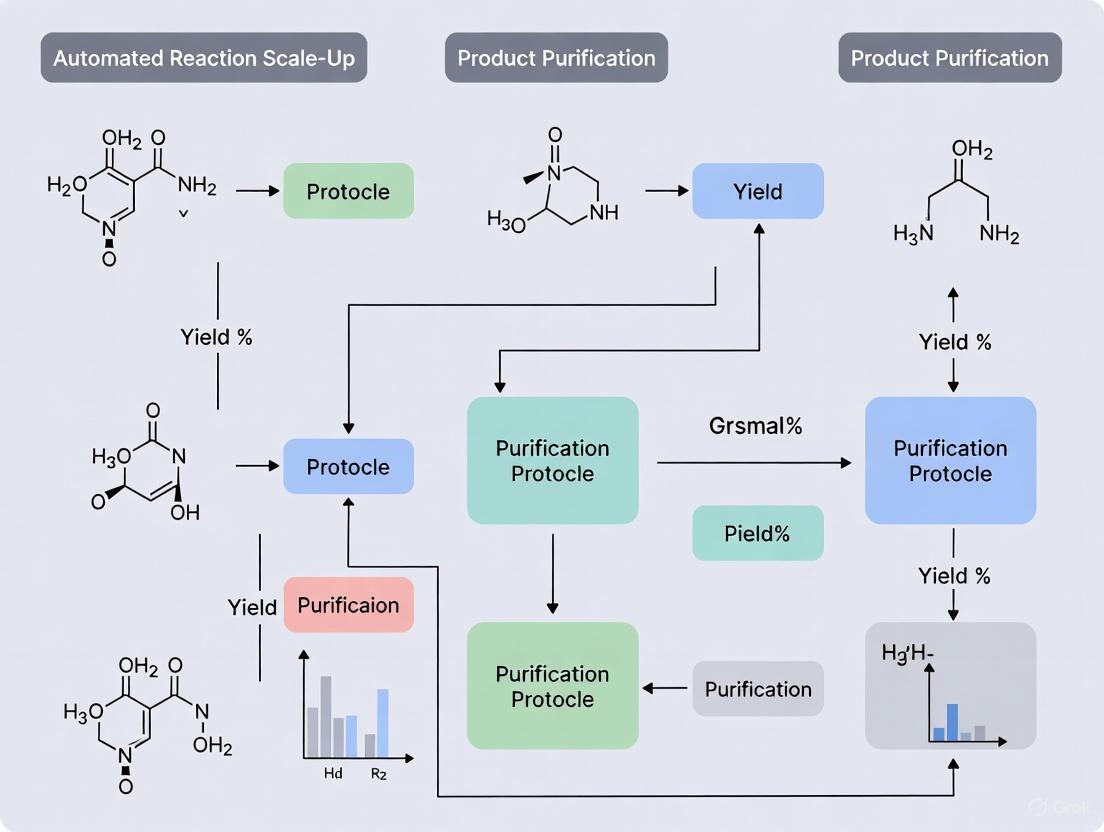

Workflow and Signaling Diagrams

The following diagrams illustrate the logical flow of the automated PES exploration and scale-up protocols.

Automated PES Exploration Workflow

Hybrid Model for Reaction Scale-Up

The Scientist's Toolkit: Essential Research Reagents and Solutions

This section details key software and computational tools essential for implementing the described protocols.

Table 2: Key Research Reagent Solutions for Automated PES Exploration

| Tool/Solution Name | Type | Primary Function | Application Context |
|---|---|---|---|
| autoplex [1] | Software Framework | Automated exploration and fitting of ML interatomic potentials. | High-throughput PES exploration for materials and molecular crystals. |
| ARplorer [2] | Software Program | LLM-guided automated reaction pathway exploration. | Mechanistic study of organic and organometallic catalytic reactions. |
| AMS PESExploration [4] | Commercial Software Module | Automated discovery of reaction pathways and transition states. | General-purpose reaction mechanism analysis for molecular systems. |
| Gaussian Approximation Potential (GAP) [1] | ML Potential Framework | Data-efficient regression of PES using Gaussian process regression. | Building accurate MLIPs for active learning within frameworks like autoplex. |
| atomate2 [1] | Workflow Manager | Automation and management of computational materials science workflows. | Orchestrating high-throughput DFT and MLIP calculations on HPC clusters. |
| Chrom RO 2.0 [5] | Analytical Software | Optimization of chromatographic methods for reaction analysis. | Quantifying reaction components and impurities for model validation. |
| Residual MLP (ResMLP) [3] | Neural Network Architecture | Deep transfer learning for complex reaction systems. | Adapting lab-scale kinetic models for pilot-scale prediction (scale-up). |

LLM-Guided Chemical Logic for Efficient Reaction Discovery

The acceleration of reaction discovery is a critical objective in modern chemical research, particularly within drug development. Traditional methods often rely on extensive experimental screening or computationally expensive simulations, which can be slow and resource-intensive. The integration of Large Language Models (LLMs) offers a transformative approach by leveraging their advanced reasoning capabilities to guide exploration. This document details the application notes and protocols for employing LLM-guided frameworks to streamline reaction discovery and optimization, contextualized within automated reaction scale-up and product purification research. These frameworks augment the traditional research pipeline by introducing intelligent, reasoning-guided hypothesis generation and validation, thereby reducing the experimental burden and accelerating the path from discovery to production.

Multi-Agent Frameworks for Autonomous Chemical Optimization

A prominent approach for applying LLMs to chemical problems involves multi-agent systems, where specialized AI agents collaborate to solve complex tasks. One such framework, built upon the AutoGen platform, employs multiple specialized agents with distinct roles to autonomously infer operating constraints and guide chemical process optimization [6] [7]. This system is designed to function even when operational constraints are initially ill-defined, a common scenario in novel reaction discovery.

Agent Roles and Workflow

The framework utilizes a team of five specialized agents that operate in two primary phases: an initial autonomous constraint generation phase, followed by an iterative optimization phase [6]. The table below summarizes the core functions of each agent.

Table 1: Specialized Agents in a Multi-Agent Optimization Framework

| Agent Name | Core Function | Role in Workflow |
|---|---|---|
| ContextAgent | Infers realistic variable bounds and generates process context from minimal descriptions [6]. | Operates independently in the first phase to establish feasible operating parameters. |
| ParameterAgent | Introduces initial parameter-value pairs as starting points for the optimization [6]. | Initiates the iterative optimization cycle; initial guesses can be arbitrary. |
| ValidationAgent | Serves as a checkpoint, evaluating proposed parameters against generated constraints [6]. | Identifies constraint violations and redirects invalid proposals for correction. |
| SimulationAgent | Executes the process evaluation by running a predefined simulation model [6]. | Calculates key performance metrics (e.g., cost, yield) for a given parameter set. |
| SuggestionAgent | Maintains optimization history and proposes refined parameter sets [6]. | Acts as the optimization engine, using historical data to suggest improvements. |

The workflow proceeds in a structured cycle: ParameterAgent introduces values → ValidationAgent checks feasibility → SimulationAgent evaluates performance → SuggestionAgent analyzes results and proposes improvements [6]. This cycle repeats autonomously until performance convergence is detected. This approach has demonstrated a 31-fold speedup compared to traditional grid search methods, converging to an optimal solution in under 20 minutes for a hydrodealkylation process case study [6] [7].

Tool-Augmented LLMs for Complex Chemical Tasks

Beyond multi-agent systems, another powerful paradigm is augmenting a single LLM with expert-designed chemistry tools. This approach equips the LLM with the ability to perform precise chemical operations, bridging the gap between abstract reasoning and domain-specific execution.

The ChemCrow Framework

ChemCrow is an LLM chemistry agent integrated with 18 expert-designed tools, using GPT-4 as the underlying reasoning engine [8]. It is designed to accomplish tasks across organic synthesis, drug discovery, and materials design. The operational logic of ChemCrow follows the ReAct (Reasoning-Acting) paradigm, where the LLM reasons about a task, uses a tool to act, and observes the result in an iterative loop until a solution is reached [8].
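The ReAct control flow itself is short; what ChemCrow adds is GPT-4 as the reasoning step and expert tools as the actions. The sketch below shows only the loop, with deliberate simplifications: a scripted policy replaces the LLM, and the two tools (a tiny hypothetical name-to-formula lookup and a molar-mass calculator) are stand-ins, not ChemCrow's tools or interfaces.

```python
import re

# Hypothetical tool set; no real ChemCrow or OPSIN interface is used here.
ATOMIC_MASS = {"C": 12.011, "H": 1.008, "N": 14.007, "O": 15.999}
NAME_TO_FORMULA = {"N,N-diethyl-3-methylbenzamide": "C12H17NO"}  # toy lookup (DEET)


def name_to_formula(name):
    return NAME_TO_FORMULA[name]


def molar_mass(formula):
    """Molar mass in g/mol for a simple formula without parentheses."""
    return sum(ATOMIC_MASS[el] * (int(n) if n else 1)
               for el, n in re.findall(r"([A-Z][a-z]?)(\d*)", formula))


TOOLS = {"name_to_formula": name_to_formula, "molar_mass": molar_mass}


def scripted_policy(question, scratchpad):
    """Stand-in for the LLM: returns (thought, action, action_input) from what was observed."""
    if not scratchpad:
        return "I need the molecular formula first.", "name_to_formula", question
    if len(scratchpad) == 1:
        return "Now I can compute the molar mass.", "molar_mass", scratchpad[0][2]
    return "I have what I need.", "final_answer", f"{scratchpad[1][2]:.2f} g/mol"


def react(question, policy, tools, max_steps=5):
    """Reason, act, observe; stop when the policy emits a final answer."""
    scratchpad = []  # (thought, action, observation) triples fed back to the policy
    for _ in range(max_steps):
        thought, action, action_input = policy(question, scratchpad)  # reason
        if action == "final_answer":
            return action_input, scratchpad
        observation = tools[action](action_input)                     # act
        scratchpad.append((thought, action, observation))             # observe
    raise RuntimeError("no final answer within the step budget")


answer, trace = react("N,N-diethyl-3-methylbenzamide", scripted_policy, TOOLS)
```

The step budget (`max_steps`) is a simple guard against a policy that never converges, a practical concern when the policy is a real LLM.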

Table 2: Selected Tools and Functions in the ChemCrow Framework

| Tool Name / Category | Specific Function | Application in Reaction Discovery |
|---|---|---|
| Synthesis Planning | Plans synthetic routes for target molecules [8]. | Autonomously planned and executed syntheses of an insect repellent and organocatalysts. |
| RoboRXN Platform | A cloud-connected robotic synthesis platform for executing chemical reactions [8]. | Allows the agent to transition from digital planning to physical execution in an automated lab. |
| Molecular Property Prediction | Predicts properties like solubility and drug-likeness [8]. | Informs the selection of viable candidate molecules during discovery. |
| IUPAC-to-Structure Conversion | Converts IUPAC names to molecular structures (e.g., via OPSIN) [8]. | Overcomes a key limitation of LLMs in handling precise chemical nomenclature. |

This tool-augmented approach has been successfully validated in complex scenarios. For instance, ChemCrow autonomously planned and executed the synthesis of an insect repellent (DEET) and three distinct thiourea organocatalysts on the RoboRXN platform [8]. In a collaborative discovery task, ChemCrow was instructed to train a machine-learning model to screen a library of candidate chromophores. The agent successfully loaded, cleaned, and processed the data, trained a model, and proposed a novel chromophore structure, which was subsequently synthesized and confirmed to have absorption properties close to the target [8].

Experimental Protocols & Data Presentation

Protocol: Multi-Agent Optimization for Reaction Condition Screening

This protocol outlines the steps for using a multi-agent LLM framework to optimize reaction conditions, such as temperature, pressure, and reactant concentration [6] [7].

- Process Description Input: Provide a natural language description of the reaction system to the ContextAgent. The description should include key components and known qualitative constraints.

- Autonomous Constraint Generation: The ContextAgent processes the description using embedded domain knowledge to infer realistic lower and upper bounds for all decision variables (e.g., 150 °C ≤ T ≤ 300 °C).

- Iterative Optimization Cycle:

a. Parameter Proposal: The ParameterAgent or SuggestionAgent proposes a set of reaction conditions.

b. Constraint Validation: The ValidationAgent checks the proposed parameters against the generated constraints. If violations occur, the proposal is sent back for revision.

c. Simulation & Evaluation: Validated parameters are passed to the SimulationAgent, which runs a simulation (e.g., using an IDAES model) to calculate performance metrics.

d. Analysis and Refinement: The SuggestionAgent records the parameters and results. It then analyzes the trend and uses reasoning to propose a new, improved set of conditions.

- Termination: The cycle continues until the SuggestionAgent determines that performance metrics (e.g., yield, cost) have converged to an optimum based on a pre-defined threshold for diminishing returns.
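The control flow of this protocol can be prototyped without any LLM by replacing each agent with a deterministic function, which is a convenient way to test the orchestration before wiring in AutoGen agents. All names below are hypothetical: `context_agent` returns fixed bounds instead of inferring them, the toy `simulation_agent` stands in for an IDAES flowsheet, the `SuggestionAgent` is a compass (pattern) search rather than LLM reasoning, and a rejected proposal is "revised" by clipping it into bounds. Termination uses a diminishing-returns rule: stop when the last `patience` evaluations improve the best score by less than `tol`.

```python
def context_agent(description):
    """Stand-in for the ContextAgent: fixed, hypothetical bounds for T (deg C) and P (bar)."""
    return {"T": (150.0, 300.0), "P": (1.0, 5.0)}


def parameter_agent():
    """Arbitrary initial guess, deliberately outside the bounds to exercise validation."""
    return {"T": 350.0, "P": 0.5}


def validation_agent(params, bounds):
    """Names of variables that violate their bounds."""
    return [k for k, v in params.items() if not bounds[k][0] <= v <= bounds[k][1]]


def revise(params, bounds):
    """Revision of a rejected proposal: clip every variable into its bounds."""
    return {k: min(max(v, bounds[k][0]), bounds[k][1]) for k, v in params.items()}


def simulation_agent(params):
    """Toy performance score standing in for a flowsheet run; maximal at T=240, P=3.5."""
    return 1.0 - ((params["T"] - 240.0) / 50.0) ** 2 - ((params["P"] - 3.5) / 1.5) ** 2


class SuggestionAgent:
    """Keeps the history and proposes points by compass search around the best one so far."""

    def __init__(self, steps):
        self.steps = dict(steps)
        self.history = []          # (params, score) in evaluation order
        self._probes = []          # pending proposals for the current round
        self._round_start = None   # best score when the current round was generated

    def record(self, params, score):
        self.history.append((dict(params), score))

    def best(self):
        return max(self.history, key=lambda item: item[1])

    def propose(self):
        best_params, best_score = self.best()
        if not self._probes:
            if self._round_start is not None and best_score <= self._round_start:
                self.steps = {k: s / 2.0 for k, s in self.steps.items()}  # no gain: refine
            self._round_start = best_score
            self._probes = [{**best_params, k: best_params[k] + sign * s}
                            for k, s in self.steps.items() for sign in (1.0, -1.0)]
        return self._probes.pop(0)


def optimize(description, patience=8, tol=1e-6, max_evals=200):
    bounds = context_agent(description)                     # phase 1: constraint generation
    agent = SuggestionAgent({"T": 40.0, "P": 1.0})
    proposal = parameter_agent()
    while True:
        if validation_agent(proposal, bounds):              # b. reject and revise violations
            proposal = revise(proposal, bounds)
        agent.record(proposal, simulation_agent(proposal))  # c. simulate, d. record
        scores = [s for _, s in agent.history]
        if len(scores) > patience and max(scores[-patience:]) - max(scores[:-patience]) < tol:
            break                                           # diminishing returns: stop
        if len(scores) >= max_evals:
            break
        proposal = agent.propose()                          # a. next proposal
    return agent.best(), agent.history


(best_params, best_score), history = optimize("toy reactor, hypothetical description")
```

Swapping any stub for an LLM-backed agent leaves the loop unchanged, which is the main point of separating proposal, validation, simulation, and suggestion.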

Quantitative Performance Metrics

The performance of optimization frameworks is typically evaluated against established benchmarks using key chemical engineering metrics.

Table 3: Quantitative Performance Comparison of Optimization Methods

| Optimization Method | Key Characteristics | Performance on HDA Process | Computational Efficiency |
|---|---|---|---|
| LLM-guided Multi-Agent | Autonomous constraint generation; reasoning-guided search [6]. | Competitive with conventional methods on cost, yield, and yield-to-cost ratio [6] [7]. | 31x faster than grid search; converges in <20 minutes [6] [7]. |
| Grid Search | Exhaustive search; evaluates all parameter combinations in a discretized space [6]. | Serves as a baseline for global optimization performance [6]. | Computationally expensive; requires thousands of iterations [6]. |
| Gradient-Based Solver (IPOPT) | Requires smooth, differentiable objective functions and predefined constraints [6]. | A state-of-the-art benchmark when constraints are well-defined [6]. | High efficiency for problems meeting its mathematical requirements [6]. |

Visualization of LLM-Guided Discovery Workflows

The following diagrams, generated using Graphviz, illustrate the logical workflows of the described frameworks.

Multi-Agent Chemical Optimization

Tool-Augmented LLM Logic (ReAct)

The Scientist's Toolkit: Essential Research Reagent Solutions

The following table details key computational tools and resources that form the foundation for implementing LLM-guided chemical discovery protocols.

Table 4: Essential Research Reagent Solutions for LLM-Guided Discovery

| Tool / Resource Name | Type | Primary Function in Protocol |
|---|---|---|
| AutoGen | Software Framework | Enables the development of the multi-agent conversation framework used for collaborative optimization [6]. |
| IDAES Platform | Process Simulation | Provides the high-fidelity process models and equation-oriented optimization capabilities used by the SimulationAgent [6]. |
| RDKit | Cheminformatics Library | Often used as a backend tool for molecular manipulation, property calculation, and reaction handling [8]. |
| OPSIN | Parser Library | Converts IUPAC names to structured molecular representations (e.g., SMILES), overcoming an LLM limitation [8]. |
| RoboRXN | Cloud-Lab Platform | Allows for the physical execution of designed synthesis protocols, bridging digital discovery and automated experimentation [8]. |
| ChemCoTBench Dataset | Benchmarking Data | Provides a dataset for training and evaluating LLMs on chemical reasoning tasks via modular operations [9]. |

The Shift to Automated, Agile Biopharma Manufacturing

The biopharmaceutical industry is undergoing a significant transformation driven by the integration of automation and digitalization. This shift is moving traditional batch processing toward intelligent, continuous, and flexible manufacturing operations. The modern "digital plant" leverages connected systems and data-driven decision-making to accelerate process development, enhance quality control, and enable agile responses to market demands [10]. This paradigm is crucial for advancing complex therapeutics, where traditional methods struggle with variability and scale-up challenges. The core of this transformation lies in the synergistic application of process analytical technology (PAT), advanced modeling, and robotic automation to create a closed-loop control environment that ensures product quality and operational integrity from development through commercial manufacturing [11] [10].

Automated Reaction Modeling and Scale-Up

Kinetic Modeling for Reaction Development

The foundation of predictable scale-up lies in developing accurate kinetic models that describe reaction behavior across different conditions. Reaction Lab software exemplifies this approach by enabling chemists to quickly develop kinetic models from laboratory data, significantly accelerating project timelines [12]. This platform allows researchers to:

- Copy and paste chemical structures directly from ChemDraw or Electronic Lab Notebooks (ELNs) to define reaction components [12].

- Fit chemical kinetics and unknown relative response factors (RRFs) to establish quantitative relationships between process parameters and outcomes [12].

- Explore response surface and design space for yield optimization and impurity control through virtual Design of Experiment (DoE) studies [12].

- Leverage full value from data-rich experiments by integrating HPLC area, area percent, and RRF data directly into model development [12].

User feedback indicates that this intuitive approach to kinetic modeling can be mastered in as little as four hours, making sophisticated modeling accessible to bench chemists and facilitating wider adoption in day-to-day reaction development activities [12].
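The idea of fitting kinetics together with an unknown RRF can be illustrated with the simplest possible case. This is not Reaction Lab's algorithm or interface; it assumes a single first-order step A → B, a closed mass balance with the initial concentration of A normalized to 1, and A as the RRF reference (RRF defined here as HPLC area per unit concentration, with RRF_A = 1). Under those assumptions, k follows from a log-linear fit of A, and the unknown RRF of B from a least-squares fit of B's area against c_B = 1 - c_A. The "HPLC areas" are synthetic, generated from assumed values k = 0.25 h⁻¹ and RRF_B = 1.4.

```python
import math


def simulate_hplc(k, rrf_b, times):
    """Synthetic (area_A, area_B) pairs for A -> B, first order, c_A(0) = 1, RRF_A = 1."""
    rows = []
    for t in times:
        c_a = math.exp(-k * t)
        rows.append((c_a, rrf_b * (1.0 - c_a)))  # area = RRF x concentration
    return rows


def fit_first_order(times, areas):
    """Return (k, rrf_b): k from ln(c_A) vs t, RRF_B from area_B vs c_B = 1 - c_A."""
    c_a = [area_a for area_a, _ in areas]  # RRF_A = 1, so area_A equals c_A
    y = [math.log(c) for c in c_a]
    t_bar, y_bar = sum(times) / len(times), sum(y) / len(y)
    slope = (sum((t - t_bar) * (yi - y_bar) for t, yi in zip(times, y))
             / sum((t - t_bar) ** 2 for t in times))
    c_b = [1.0 - c for c in c_a]  # closed mass balance
    rrf_b = (sum(area_b * cb for (_, area_b), cb in zip(areas, c_b))
             / sum(cb * cb for cb in c_b))  # least squares through the origin
    return -slope, rrf_b


times_h = [0.0, 1.0, 2.0, 4.0, 8.0]
k_fit, rrf_fit = fit_first_order(times_h, simulate_hplc(0.25, 1.4, times_h))
```

With noise-free synthetic data both parameters are recovered essentially exactly; with real chromatograms the same two-stage fit would be a starting point for a joint nonlinear regression.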

Hybrid Mechanistic-AI Modeling for Cross-Scale Prediction

For complex molecular reaction systems, a unified modeling framework that integrates mechanistic understanding with artificial intelligence (AI) addresses fundamental scale-up challenges. Recent research demonstrates a hybrid mechanistic modeling and deep transfer learning approach that successfully predicts product distribution across scales for systems like naphtha fluid catalytic cracking [3].

This methodology develops a molecular-level kinetic model from laboratory-scale experimental data, then employs a deep neural network to represent the complex reaction system. To bridge the data discrepancy between laboratory and pilot scales, a property-informed transfer learning strategy incorporates bulk property equations directly into the neural network architecture [3].

Table 1: Hybrid Modeling Framework Components for Cross-Scale Prediction

| Component | Function | Application in Scale-Up |
|---|---|---|
| Molecular-Level Kinetic Model | Describes intrinsic reaction mechanisms from lab data | Provides foundational understanding of reaction pathways |
| Deep Neural Network | Represents complex molecular reaction systems | Enables rapid prediction of product distributions |
| Transfer Learning | Adapts model knowledge across different scales | Accounts for differences in transport phenomena between lab and production reactors |
| Property-Informed Strategy | Incorporates bulk property equations | Bridges the gap between molecular-level lab data and bulk-property production data |

The network architecture specifically designed for complex reaction systems integrates three residual multi-layer perceptrons (ResMLPs) that mirror the computational logic of mechanistic models, allowing targeted parameter fine-tuning during transfer learning based on process changes [3].

Experimental Protocol: Hybrid Model Development for Reaction Scale-Up

Objective: To develop and validate a hybrid mechanistic-AI model for predicting pilot-scale product distribution from laboratory-scale reaction data.

Materials and Equipment:

- Laboratory-scale reactor system with temperature and pressure control

- Analytical instrumentation (e.g., HPLC, GC-MS) for detailed product characterization

- Computational environment with deep learning frameworks (e.g., TensorFlow, PyTorch)

- Software for mechanistic modeling of reaction kinetics

Methodology:

- Laboratory-Scale Data Generation: Conduct experiments across a designed range of process conditions (temperature, pressure, catalyst concentration) and feedstock compositions. Collect detailed product distribution data at molecular level [3].

- Mechanistic Model Development: Build a molecular-level kinetic model using laboratory-scale data. Validate model predictions against experimental results not used in parameter estimation [3].

- Data Set Generation: Use the validated mechanistic model to generate comprehensive molecular conversion datasets across varied compositions and conditions, creating the training set for the neural network [3].

- Neural Network Architecture Implementation: Design a network with three specialized ResMLP modules:

- Process-based ResMLP for processing reactor conditions

- Molecule-based ResMLP for capturing feedstock compositional features

- Integrated ResMLP for predicting final product molecular composition [3]

- Laboratory-Scale Model Training: Train the neural network using the data generated in the Data Set Generation step. Validate predictions against holdout laboratory experimental data.

- Transfer Learning Implementation: Fine-tune specific ResMLP modules using limited pilot-scale data:

- Freeze Molecule-based ResMLP when feedstock composition remains unchanged

- Fine-tune Process-based and Integrated ResMLPs to adapt to new reactor configurations and operating conditions [3]

- Model Validation: Compare hybrid model predictions with independent pilot-scale experimental results. Evaluate accuracy of product distribution and bulk property predictions.
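The Data Set Generation step amounts to evaluating the validated mechanistic model over a designed set of conditions and feed compositions. As a stand-in for a molecular-level model, the sketch below uses a hypothetical two-step series reaction A → B → C with Arrhenius rate constants (all kinetic parameters are made up) and its analytic solution. Each row pairs inputs with model outputs, which is the shape of training set the ResMLP modules would consume.

```python
import itertools
import math

R_GAS = 8.314  # J/(mol K)


def arrhenius(k_ref, ea, temp_k, t_ref_k=623.15):
    """Rate constant (1/s) relative to a reference temperature."""
    return k_ref * math.exp(-ea / R_GAS * (1.0 / temp_k - 1.0 / t_ref_k))


def series_model(temp_c, tau_s, feed_a):
    """A -> B -> C (both first order), analytic outlet composition; parameters are made up."""
    t_k = temp_c + 273.15
    k1, k2 = arrhenius(0.8, 90e3, t_k), arrhenius(0.2, 120e3, t_k)
    a = feed_a * math.exp(-k1 * tau_s)
    # Analytic intermediate concentration; valid only while k1 != k2.
    b = feed_a * k1 / (k2 - k1) * (math.exp(-k1 * tau_s) - math.exp(-k2 * tau_s))
    return a, b, feed_a - a - b


def generate_dataset(temps_c, taus_s, feeds_a):
    """Full-factorial design: one row of inputs and model outputs per combination."""
    return [
        {"T_C": t, "tau_s": tau, "feed_A": f, "out_ABC": series_model(t, tau, f)}
        for t, tau, f in itertools.product(temps_c, taus_s, feeds_a)
    ]


dataset = generate_dataset((300.0, 350.0, 400.0), (1.0, 5.0, 20.0), (0.5, 1.0))
```

A full-factorial grid keeps the example simple; a space-filling design over the same ranges would typically cover the input space more efficiently for the same number of model runs.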

Figure 1: Workflow for Developing Hybrid Scale-Up Model
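The module layout and the freeze/fine-tune rule described above can be illustrated without a deep learning framework. The pure-Python sketch below is a minimal illustration only. The block form x + W2·tanh(W1·x), the hidden width, the random initialization, and the names `HybridScaleUpNet`, `configure_transfer` and `apply_update` are all hypothetical and are not the architecture from [3]. Gradients are passed in precomputed; in practice they would come from TensorFlow or PyTorch autodiff. The sketch therefore shows only how freezing the molecule-based block restricts which weights a pilot-scale update can change.

```python
import math
import random


def residual_block(x, w1, w2):
    """Residual MLP block: returns x + W2 @ tanh(W1 @ x)."""
    hidden = [math.tanh(sum(w * xi for w, xi in zip(row, x))) for row in w1]
    update = [sum(w * h for w, h in zip(row, hidden)) for row in w2]
    return [xi + ui for xi, ui in zip(x, update)]


def init_block(dim, hidden, rng):
    """Small random weights; W1 maps dim -> hidden, W2 maps hidden -> dim."""
    w1 = [[rng.gauss(0.0, 0.1) for _ in range(dim)] for _ in range(hidden)]
    w2 = [[rng.gauss(0.0, 0.1) for _ in range(hidden)] for _ in range(dim)]
    return {"w1": w1, "w2": w2}


class HybridScaleUpNet:
    """Hypothetical three-module network: process, molecule, integrated."""

    def __init__(self, n_process, n_molecule, hidden=8, seed=0):
        rng = random.Random(seed)
        self.blocks = {
            "process": init_block(n_process, hidden, rng),
            "molecule": init_block(n_molecule, hidden, rng),
            # Operates on the concatenated process + molecule features.
            "integrated": init_block(n_process + n_molecule, hidden, rng),
        }
        self.trainable = set(self.blocks)  # lab-scale training: all modules

    def forward(self, conditions, composition):
        p = residual_block(conditions, **self.blocks["process"])
        m = residual_block(composition, **self.blocks["molecule"])
        # For brevity the output keeps the concatenated length; a real model
        # would add an output head sized to the product composition vector.
        return residual_block(p + m, **self.blocks["integrated"])

    def configure_transfer(self, feedstock_changed):
        """Pilot-scale fine-tuning: freeze the molecule block if feed is unchanged."""
        self.trainable = {"process", "integrated"}
        if feedstock_changed:
            self.trainable.add("molecule")

    def apply_update(self, grads, lr=1e-3):
        """Plain gradient-descent step applied only to unfrozen blocks."""
        for name in self.trainable:
            for key in ("w1", "w2"):
                weights, g = self.blocks[name][key], grads[name][key]
                for i, row in enumerate(weights):
                    for j in range(len(row)):
                        row[j] -= lr * g[i][j]
```

Calling `configure_transfer(feedstock_changed=False)` before fine-tuning mirrors the rule in the methodology. Only the process-based and integrated blocks then receive pilot-scale updates.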

Advancements in Automated Purification Protocols

Digitalization and Process Control in Downstream Processing

Downstream processing has seen significant innovation through digitalization strategies that enhance efficiency and product quality. Modern purification platforms incorporate multiple technologies working in concert:

- Model-Based DSP Design: Integration of host cell protein (HCP) profiling and characterization enables more effective optimization of the purification strategy, enhancing product purity and process efficiency [11].

- Digital Twins for Chromatography: Automated generation of mechanistic chromatography models creates digital shadows of processes, enabling improved real-time monitoring of chromatographic elution through Kalman filtering techniques that combine real-time data with mechanistic modeling [11].

- Buffer Recycling: Implementation of buffer recycling in chromatography significantly reduces consumption of water and chemicals, addressing sustainability concerns while maintaining product quality [11].

- Multi-PAT Sensor Integration: Advanced process analytical technologies, including through-vial impedance spectroscopy (TVIS) for freeze-drying and automated Raman spectroscopy, provide in-line monitoring of critical quality attributes like protein aggregation during downstream processing [11].
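To show how Kalman filtering can fuse a mechanistic prediction with in-line measurements, the sketch below implements a generic scalar Kalman filter that tracks outlet concentration during elution. It is not the method used in [11]. The random-walk noise model, the noise variances `q` and `r`, and the choice to propagate the estimate using the change in a precomputed mechanistic profile are assumptions made for brevity.

```python
def kalman_track(model_profile, measurements, q=1e-4, r=1e-2, x0=0.0, p0=1.0):
    """Fuse a mechanistic concentration profile with noisy in-line readings.

    model_profile: predicted outlet concentration at each sample time
    measurements:  measured (e.g., UV-derived) concentration at the same times
    q, r:          assumed process- and measurement-noise variances
    Returns the filtered concentration estimate at each time.
    """
    x, p = x0, p0
    prev_model = model_profile[0]
    estimates = []
    for model_k, z in zip(model_profile, measurements):
        # Predict: shift the estimate by the change the mechanistic model expects.
        x += model_k - prev_model
        p += q
        prev_model = model_k
        # Update: blend in the measurement according to the Kalman gain.
        gain = p / (p + r)
        x += gain * (z - x)
        p *= 1.0 - gain
        estimates.append(x)
    return estimates
```

The ratio `q / r` controls how much weight the filter gives the measurements. A smaller ratio trusts the model more, and a larger ratio follows the sensor more closely.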

Experimental Protocol: Automated Target Enrichment for Sequencing Workflows

Objective: To implement an automated target enrichment protocol for hands-off library preparation with increased reproducibility and reduced error rates.

Materials and Equipment:

- SPT Labtech firefly+ platform with Firefly Community Cloud access

- Agilent SureSelect Max DNA Library Prep Kits

- Agilent Target Enrichment panels (e.g., Exome V8, Comprehensive Cancer Panel)

- Standard laboratory reagents for nucleic acid processing

Methodology:

- Platform Configuration: Access the automated target enrichment protocols through the Firefly Community Cloud. Ensure the firefly+ platform is calibrated according to manufacturer specifications [13].

- Reagent Preparation: Thaw and prepare Agilent SureSelect Max DNA Library Prep reagents according to kit specifications. Ensure all solutions are properly mixed and free of particulates [13].

- Sample Loading: Transfer normalized DNA samples to designated input positions on the platform. Include appropriate control samples as required by the experimental design.

- Protocol Selection: Choose the appropriate target enrichment protocol based on the Agilent panel being utilized (e.g., Exome V8, Comprehensive Cancer Panel) [13].

- Automated Processing: Initiate the hands-off library preparation protocol. The system automatically performs:

  - DNA fragmentation and size selection

  - End repair and A-tailing

  - Adaptor ligation

  - Library amplification

  - Target enrichment hybridization

  - Post-capture cleanup and amplification [13]

- Quality Assessment: Verify library quality and quantity using appropriate methods (e.g., fragment analyzer, qPCR) before sequencing.

- Data Analysis: Process sequencing data according to standard bioinformatics pipelines for the specific application.

This automated approach addresses key bottlenecks in sequencing workflows, enabling laboratories to scale sequencing faster and more reliably while reducing hands-on time and variability associated with manual processing [13].

Critical Steps in Natural Product Purification

For complex natural products and novel biotherapeutics, purification requires specialized approaches that maintain compound integrity while ensuring regulatory compliance. The critical steps include:

- Raw Material Sourcing & Pre-treatment: Careful selection of well-characterized biological sources with confirmation of species, origin, and harvest time to ensure process stability. Pre-treatment steps such as drying, grinding, or defatting remove contaminants and reduce batch variability [14].

- Extraction Optimization: Selection of appropriate extraction methods (e.g., supercritical fluid, ultrasound-assisted, microwave-assisted) with optimization of solvent type, temperature, and pressure to maximize yield while preserving compound integrity [14].

- Chromatographic Resolution: Optimization of stationary/mobile phases, flow rate, temperature, and detection to ensure high-purity separation and removal of structurally similar impurities [14].

- Concentration & Drying: Implementation of processes like rotary evaporation, lyophilization, or spray drying to remove solvents and moisture while ensuring product stability and controlling physical form [14].

Table 2: Automated Purification Technologies and Applications

| Technology | Primary Function | Key Benefit | Representative Implementation |
|---|---|---|---|
| Membrane Chromatography | Purification via flow-through membranes | Rapid processing, reduced buffer consumption | Implementation at 2 kL scale for clinical manufacturing [11] |
| Automated Raman Spectroscopy | In-line monitoring of protein aggregation | Real-time CQA tracking during DSP | Case study for chromatographic process monitoring [11] |
| Process Analytical Technology (PAT) | Multi-sensor monitoring of critical process parameters | Enhanced process control and understanding | Hamilton Flow Cell COND 4UPtF for conductivity measurement [11] |
| Automated Library Preparation | Hands-off target enrichment for sequencing | Reproducibility, reduced error rates | firefly+ platform with Agilent SureSelect kits [13] |

Figure 2: Automated Purification Workflow Steps

The Scientist's Toolkit: Essential Research Reagent Solutions

Table 3: Key Research Reagents and Materials for Automated Bioprocessing

| Reagent/Material | Function | Application Context |
|---|---|---|
| Agilent SureSelect Max DNA Library Prep Kits | Preparation of sequencing libraries with robust chemistry | Automated target enrichment on firefly+ platform [13] |
| LightCycler 480 SYBR Green I Master Mix | Fluorescent detection of amplified DNA in qPCR | Real-time PCR amplification and quantification [15] |
| Specialized Chromatography Resins | High-resolution separation of complex mixtures | Purification of novel modalities (viral vectors, RNA therapies) [11] |
| Process Analytical Technology Sensors | Real-time monitoring of critical process parameters | In-line conductivity measurement in chromatography [11] |
| Single-Use Bioreactor Systems | Flexible cell culture with integrated monitoring | Process intensification and continuous processing [10] |

Core Challenges in Traditional Scale-Up and Purification

In biologics and complex chemical manufacturing, transitioning a process from the laboratory to industrial production presents significant scientific and operational hurdles. While upstream production often receives greater attention, downstream purification frequently becomes the critical bottleneck, directly impacting yield, cost, and time to market [16]. In the context of automated reaction scale-up, these challenges are exacerbated by discrepancies in data types and process behaviors across different scales [3]. This application note details the core challenges in traditional scale-up and purification, provides structured quantitative data, and outlines detailed experimental protocols to aid researchers and drug development professionals in navigating this complex landscape. The focus is on understanding these limitations to better inform the development of automated, robust scale-up and purification protocols.

Core Scale-Up Challenges: A Quantitative Analysis

The table below summarizes the primary bottlenecks encountered during the scale-up of purification processes, particularly in biologics manufacturing.

Table 1: Key Challenges in Traditional Purification Scale-Up

| Challenge Category | Specific Bottlenecks | Impact on Manufacturing |
|---|---|---|
| Chromatography Scale-Up | Decreased resin performance, high resin costs, limited reusability, longer processing times [16]. | Increased financial strain, risk of product degradation, reduced yield. |
| Filtration & Separation | Membrane clogging, increased pressure damaging sensitive molecules, batch-to-batch variability [16]. | Process inconsistency, loss of product quality and integrity. |
| Overall Process Limitations | Slow throughput, yield loss with each step, inflexible facilities for varied products [16]. | Inability to match upstream production pace, high cumulative product loss, lack of agility. |
| Data & Modeling Gaps | Discrepancies in data types at various scales (e.g., molecular-level lab data vs. bulk property plant data) [3]. | Hinders accurate cross-scale prediction and modeling, making scale-up time-intensive and expensive. |
| Economic & Environmental Impact | High buffer consumption in chromatography [11]. | Increased cost of goods (COGs) and significant environmental footprint. |

Detailed Experimental Protocols for Investigating Scale-Up Challenges

Protocol 1: Assessing Chromatography Resin Performance Across Scales

Objective: To evaluate the performance and binding capacity decay of chromatography resins when scaling from laboratory-scale columns to pilot-scale columns.

Materials:

- Resin: Cation-exchange chromatography resin (e.g., POROS).

- Columns: Pre-packed laboratory column (e.g., 1 mL bed volume) and pilot-scale column (e.g., 100 mL bed volume).

- Protein Solution: Monoclonal antibody (mAb) solution at a defined concentration.

- Buffers: Equilibration buffer (e.g., 50 mM Sodium Phosphate, pH 7.4) and elution buffer (e.g., 50 mM Sodium Phosphate + 1 M NaCl, pH 7.4).

- Equipment: ÄKTA or equivalent chromatography system, UV detector, conductivity meter, fraction collector.

Methodology:

- Column Packing Qualification: Perform height equivalent to a theoretical plate (HETP) and peak asymmetry analysis on both columns to ensure packing uniformity and operational efficiency [17].

- Dynamic Binding Capacity (DBC) Determination:

  - Equilibrate both columns with 5 column volumes (CV) of equilibration buffer.

  - Load the mAb solution at a constant flow rate, monitoring UV absorbance at 280 nm at the column outlet.

  - Calculate the DBC as the amount of protein loaded when the breakthrough curve reaches 10% of the inlet concentration, normalized to the column volume (mg protein per mL resin) so that the two scales can be compared directly.

- Process Repeatability: Execute a minimum of three consecutive purification cycles on each column, measuring DBC and product yield in each cycle.

- Data Analysis: Compare the DBC and yield decay rates between the laboratory and pilot-scale columns. A significant drop in DBC or faster yield decay at the larger scale indicates a scale-up performance issue.
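The packing-qualification and DBC calculations above can be scripted so that both columns are evaluated identically in every cycle. This is a minimal sketch under several assumptions. It uses the half-height plate-count formula N = 5.54 (t_R / w_h)², measures asymmetry at 10% of peak height, interpolates linearly between breakthrough data points, and takes the system dead volume as a user-supplied input. The function names are illustrative.

```python
def plate_count(t_r, w_half):
    """Theoretical plates from retention time and peak width at half height."""
    return 5.54 * (t_r / w_half) ** 2


def hetp(bed_height_cm, t_r, w_half):
    """Height equivalent to a theoretical plate, in the units of bed height."""
    return bed_height_cm / plate_count(t_r, w_half)


def asymmetry(front_half_width, back_half_width):
    """Peak asymmetry As = b / a, both widths taken at 10% of peak height."""
    return back_half_width / front_half_width


def dbc_at_breakthrough(volumes_ml, c_out, c_feed, column_volume_ml,
                        dead_volume_ml=0.0, fraction=0.10):
    """Dynamic binding capacity (mg/mL resin) at a given breakthrough fraction.

    volumes_ml: cumulative load volume at each UV reading
    c_out:      outlet concentration (mg/mL) derived from the A280 trace
    c_feed:     load concentration (mg/mL)
    """
    target = fraction * c_feed
    points = list(zip(volumes_ml, c_out))
    for (v0, c0), (v1, c1) in zip(points, points[1:]):
        if c0 < target <= c1:
            # Linear interpolation for the volume at which the target is reached.
            v_bt = v0 + (target - c0) * (v1 - v0) / (c1 - c0)
            return (v_bt - dead_volume_ml) * c_feed / column_volume_ml
    raise ValueError("breakthrough threshold not reached in the data")
```

Running `dbc_at_breakthrough` on each cycle for the 1 mL and 100 mL columns yields per-cycle DBC series. The decay rates can then be compared as described in the Data Analysis step.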

Protocol 2: Evaluating Tangential Flow Filtration (TFF) Membrane Fouling

Objective: To quantify the propensity for membrane fouling and its impact on process efficiency and product recovery during TFF.

Materials:

- TFF System: Single-use TFF assembly with a defined molecular weight cutoff (MWCO) membrane.

- Feed Stream: High-density microbial culture supernatant containing the target biologic.

- Buffers: Formulation buffer for diafiltration.

- Equipment: Peristaltic pump, pressure sensors, load cells for volume measurement.

Methodology:

- System Setup: Install the single-use TFF cartridge and equilibrate with an appropriate buffer.

- Process Operation: Recirculate the feed stream through the TFF system, aiming for a target concentration factor. Monitor and record the transmembrane pressure (TMP) and permeate flux at regular intervals.

- Fouling Analysis: The decline in permeate flux over time at a constant TMP (or the rise in TMP required to maintain a constant flux) is a direct indicator of membrane fouling.

- Product Analysis: Assess the final retentate for target protein concentration and purity. Analyze for signs of product shear damage or aggregation, which can be caused by increased pressure requirements [16].

- Comparative Studies: Repeat the protocol using different feed stream pre-treatments (e.g., different clarification methods) or membrane materials to identify optimal conditions that minimize fouling.
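The fouling metrics in this protocol reduce to a few formulas. The sketch rests on four assumptions. It uses the common definition TMP = (P_feed + P_retentate)/2 - P_permeate and expresses flux in L/m²/h (LMH). It derives permeate volume from load-cell mass using an assumed density of 1.0 kg/L. When TMP is not held constant, it uses normalized permeability (flux/TMP) as the fouling indicator.

```python
def transmembrane_pressure(p_feed, p_retentate, p_permeate=0.0):
    """Average TMP across the module (same pressure units as the inputs)."""
    return (p_feed + p_retentate) / 2.0 - p_permeate


def flux_lmh(permeate_mass_kg, interval_h, area_m2, density_kg_per_l=1.0):
    """Permeate flux in L/m^2/h from a load-cell mass increment."""
    return (permeate_mass_kg / density_kg_per_l) / (interval_h * area_m2)


def permeability(flux, tmp):
    """Normalized permeability, e.g. LMH/bar when TMP is in bar."""
    return flux / tmp


def fractional_decline(values):
    """Relative loss from the first to the last reading (0 = no fouling)."""
    return 1.0 - values[-1] / values[0]
```

Normalizing by TMP lets runs made at constant TMP and runs made at constant flux be compared on the same scale across pre-treatments or membrane materials.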

Workflow and Logical Pathway Visualizations

Traditional Scale-Up Investigation Workflow

The following diagram outlines the logical workflow for a systematic investigation into traditional scale-up challenges, from initial problem identification to data-driven solution development.

Data and Modeling Disconnect in Scale-Up

This diagram illustrates the central data-related challenge in cross-scale process development, where the rich molecular data from the laboratory must be reconciled with the bulk property data from larger scales.

The Scientist's Toolkit: Research Reagent Solutions

The following table lists key materials and technologies critical for conducting scale-up and purification research, as featured in the cited experiments and industry standards.

Table 2: Essential Research Reagents and Materials for Purification Studies

| Item | Function/Application | Specific Example |
|---|---|---|
| Chromatography Resins | Separate biomolecules based on properties like charge, hydrophobicity, or affinity. High-cost and reusability are key study factors [16]. | Cation-exchange resin (e.g., POROS) for aggregate clearance [17]. |
| TFF Membranes | Concentrate and diafilter biological products; fouling propensity is a critical parameter under investigation [18]. | Single-use TFF assemblies with polyethersulfone (PES) membranes. |
| Single-Use Assemblies | Disposable filtration systems and pre-packed columns to reduce setup times, eliminate cleaning validation, and minimize contamination risk [16] [18]. | Pre-sterilized, ready-to-use TFF pods and chromatography columns. |
| Process Analytical Technology (PAT) | In-line sensors for real-time monitoring of critical process parameters (CPPs) and critical quality attributes (CQAs) [11]. | Conductivity and UV flow cells for monitoring chromatography elution [11]. |
| Hybrid Modeling Tools | Software integrating mechanistic models with AI/transfer learning to predict product distribution across scales with limited data [3]. | Physics-informed neural networks (PINNs) for cross-scale computation. |

Tangential Flow Filtration (TFF) and Chromatography represent two foundational pillars in the purification and analysis of biologics and pharmaceuticals. TFF is a size-based separation technique ideal for concentrating biomolecules and exchanging buffers, while chromatography separates components based on differential interactions with a stationary phase. Within the context of automated reaction scale-up, a deep understanding of these unit operations is critical for developing robust, reproducible, and efficient purification protocols. This document details the fundamental principles, provides structured experimental protocols, and presents quantitative data to guide researchers and drug development professionals in integrating these techniques into advanced production workflows.

Tangential Flow Filtration (TFF) Fundamentals and Protocols

Core Principles and Workflow

Tangential Flow Filtration, also known as cross-flow filtration, separates and purifies biomolecules based on molecular size. Unlike dead-end filtration, where the feed flow is perpendicular to the filter, TFF directs the feed stream tangentially across the surface of a filter membrane [18]. This cross-flow movement minimizes the accumulation of retained molecules on the membrane surface, reducing fouling and enabling sustained filtration efficiency over longer process times [19]. The process results in two streams: the permeate, which contains molecules small enough to pass through the membrane, and the retentate, which contains the concentrated product of interest [19].

The standard TFF workflow can be broken down into six key steps, from system preparation to final product recovery [19]. The following diagram illustrates this logical sequence and the critical decision points within a purification protocol.

Key TFF Performance Parameters and Membrane Types

Successful TFF operation requires careful monitoring of several critical parameters. Transmembrane Pressure (TMP) is the driving force for filtration and must be optimized to balance flux with product stability. The concentration factor indicates the degree of sample concentration, and the yield quantifies the recovery of the target molecule [19]. Membrane selection is equally crucial and depends on the application, such as clarifying cell culture broth, concentrating proteins, or purifying viral vectors.

Table 1: Key TFF Membrane Types and Their Applications

| Membrane Type | Pore Size Range | Primary Applications | Common Materials |
|---|---|---|---|
| Microfiltration | ≥ 0.1 µm | Removal of cells, cell debris, and large particles [19]. | Polyethersulfone (PES) |
| Ultrafiltration | < 0.1 µm | Concentration and desalting of proteins, nucleic acids, and viruses; buffer exchange [20] [19]. | Regenerated Cellulose, Polyvinylidene Fluoride (PVDF) |
| Nanofiltration | Molecular Weight Cut-Off (MWCO) specific | Removal of small viruses, endotoxins, and fine particulates [20]. | Polyethersulfone (PES) |

Detailed TFF Protocol: Concentration and Diafiltration

This protocol outlines a standard process for concentrating a protein solution and exchanging its buffer using a benchtop TFF system with a cassette membrane.

Title: Concentration and Buffer Exchange of a Recombinant Protein using Tangential Flow Filtration.

Objective: To concentrate a clarified protein solution 10-fold and transfer it from a low-salt Buffer A to a high-salt Buffer B.

Materials:

- TFF System: Peristaltic pump, pressure gauges (inlet and outlet), feed reservoir, and associated tubing.

- TFF Cassette: 100 kDa MWCO, Polyethersulfone (PES), 100 cm² surface area.

- Buffers: Buffer A (50 mM Tris, 50 mM NaCl, pH 7.4), Buffer B (50 mM Tris, 300 mM NaCl, pH 7.4).

- Sample: 1000 mL of clarified cell culture supernatant containing the target protein.

- Equipment: Conductivity meter, graduated cylinders, and sterile containers.

Method:

- System Preparation: Flush and prime the entire TFF system with Buffer A to remove air bubbles and storage solution. Ensure all connections are secure.

- Equilibration: Circulate Buffer A through the system for at least 10 minutes at the target process flow rate. Record the initial system pressures.

- Sample Loading: Introduce the 1000 mL clarified sample into the feed reservoir. Begin recirculation at a low cross-flow rate, gradually increasing to the target rate while monitoring the TMP to prevent foaming or shear stress.

- Concentration: Open the permeate line to begin filtration. Continue the process until the retentate volume is reduced to approximately 100 mL, achieving a 10X concentration factor. Monitor the process by tracking the retentate volume over time.

- Diafiltration: Once concentrated, initiate diafiltration to exchange the buffer. Add Buffer B to the feed reservoir at the same rate as the permeate flow, maintaining a constant retentate volume. Continue until 5-7 diavolumes of Buffer B have been added, i.e., 5-7 times the concentrated retentate volume (500-700 mL). Monitor the conductivity of the retentate to confirm it matches that of Buffer B.

- Final Concentration: After diafiltration, stop adding Buffer B and continue filtering with the permeate line open until the retentate reaches the desired final product volume (e.g., 50 mL); then close the permeate line.

- Product Recovery: Drain the system and carefully recover the retentate from the reservoir and the cassette itself using Buffer B to flush out any remaining product. Filter the final product through a 0.22 µm sterilizing-grade filter into a sterile container.
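The volumes in this protocol follow from two relations that could also be used to set automated endpoints. The first is the concentration factor CF = V_initial / V_retentate. The second applies to constant-volume diafiltration: the residual fraction of a permeating solute is C/C₀ = exp(-S·N), where N is the number of diavolumes and S is the solute's sieving coefficient. The sketch assumes S = 1 for the Buffer A salts. Solutes with S < 1 need proportionally more diavolumes.

```python
import math


def concentration_factor(initial_volume_ml, retentate_volume_ml):
    return initial_volume_ml / retentate_volume_ml


def permeate_volume_for_cf(initial_volume_ml, target_cf):
    """Permeate to remove to reach the target concentration factor."""
    return initial_volume_ml * (1.0 - 1.0 / target_cf)


def residual_fraction(diavolumes, sieving=1.0):
    """Fraction of a permeating solute left after constant-volume diafiltration."""
    return math.exp(-sieving * diavolumes)


def diavolumes_needed(target_residual, sieving=1.0):
    return -math.log(target_residual) / sieving


initial_ml, retentate_ml = 1000.0, 100.0
print(concentration_factor(initial_ml, retentate_ml))   # 10.0
print(permeate_volume_for_cf(initial_ml, 10.0))          # 900.0
for n in (5, 7):
    buffer_b_ml = n * retentate_ml
    # prints "5 500.0 0.67%" and then "7 700.0 0.09%"
    print(n, buffer_b_ml, f"{residual_fraction(n):.2%}")
```

Under the S = 1 assumption, the 5-7 diavolume range in the protocol leaves roughly 0.1-0.7% of the original buffer salts in the retentate.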

Chromatography Fundamentals and Protocols

Core Principles and Modes of Separation

Chromatography is a powerful analytical and preparative technique that separates components in a mixture based on their differential distribution between a stationary phase and a mobile phase [21]. A fundamental parameter is the retention factor, k = (t_R - t_0)/t_0, where t_R is the solute's retention time and t_0 is the column dead time; k is the ratio of the time a solute spends in the stationary phase to the time it spends in the mobile phase. The core principle of adsorption chromatography is described by isotherms, such as the Langmuir model, which quantifies the relationship between the concentration of a solute in the mobile phase and its concentration on the stationary phase at equilibrium [22].

Real-world chromatographic surfaces, especially for complex biomolecules, are often heterogeneous. The bi-Langmuir isotherm model accounts for this by describing adsorption as the sum of interactions with two distinct types of sites: a large population of non-selective, high-capacity sites (Type I) and a smaller population of selective, chiral-discriminating sites (Type II) [22]. Understanding this heterogeneity is key to optimizing separations, particularly under the overloaded conditions common in preparative chromatography.
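The retention factor and the isotherm models described above can be written down directly. In this sketch `q_s` is the saturation capacity and `b` is the equilibrium (association) constant of each site type. The parameter values in the test are arbitrary illustrations. At low concentration the bi-Langmuir isotherm becomes linear, with an initial slope (Henry constant) of q_s1·b1 + q_s2·b2. This is the regime where a small population of high-affinity sites can still account for a large share of retention.

```python
def retention_factor(t_r, t_0):
    """k = (t_R - t_0) / t_0: time in stationary phase relative to mobile phase."""
    return (t_r - t_0) / t_0


def langmuir(c, q_s, b):
    """Single-site Langmuir isotherm: q = q_s * b * c / (1 + b * c)."""
    return q_s * b * c / (1.0 + b * c)


def bi_langmuir(c, q_s1, b1, q_s2, b2):
    """Sum of two independent Langmuir site populations (Type I + Type II)."""
    return langmuir(c, q_s1, b1) + langmuir(c, q_s2, b2)


def henry_constant(q_s1, b1, q_s2, b2):
    """Initial (linear) slope of the bi-Langmuir isotherm as c -> 0."""
    return q_s1 * b1 + q_s2 * b2
```

Take illustrative values q_s1 = 100, b1 = 0.01 (Type I) and q_s2 = 2, b2 = 1.0 (Type II). The Type II sites then supply two thirds of the low-concentration slope but less than 4% of the bound amount at c = 100. This is why overloaded preparative conditions behave so differently from analytical ones.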

Table 2: Common Chromatography Modes and Their Applications

| Chromatography Mode | Separation Basis | Typical Stationary Phase | Common Applications |
|---|---|---|---|
| Affinity Chromatography | Specific biological interaction (e.g., Protein A-antibody) [23]. | Ligand-coupled resin (e.g., Protein A, immobilized metal) | High-purity capture of antibodies and tagged proteins [23]. |
| Ion Exchange (IEX) | Net surface charge | Charged functional groups (e.g., DEAE, Carboxymethyl) | Separation of proteins, nucleotides, peptides. |
| Size Exclusion (SEC) | Molecular size/hydrodynamic volume | Porous particles | Buffer exchange, polishing step, aggregate removal. |
| Hydrophobic Interaction (HIC) | Surface hydrophobicity | Weakly hydrophobic ligands (e.g., Phenyl) | Separation of proteins based on hydrophobic patches. |
| Reversed-Phase (RPC) | Hydrophobicity | Strong hydrophobic ligands (e.g., C18) | Analysis and purification of peptides, oligonucleotides. |

Advanced Concepts: Adsorption Energy and Biosensor Insights

The Adsorption Energy Distribution (AED) is a powerful tool for characterizing the heterogeneity of a chromatographic surface beyond simple model fitting. It provides a detailed "fingerprint" of the distribution of binding energies available on the stationary phase, helping to identify the true physical adsorption model and guiding the selection of optimal separation conditions [22].

Furthermore, research in biosensor techniques like Surface Plasmon Resonance (SPR) provides direct, real-time insight into the kinetics of molecular interactions. The association (k_a) and dissociation (k_d) rate constants measured by biosensors can be directly applied to improve mechanistic models of chromatographic separations, moving the field from empirical methods toward predictive separation science [22].
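One concrete route from biosensor rate constants to a chromatography model is the kinetic 1:1 adsorption rate law, dq/dt = k_a·c·(q_max - q) - k_d·q. Its equilibrium is the Langmuir isotherm with b = k_a/k_d. The sketch below integrates this rate law with a simple explicit Euler step at constant free concentration and compares the result with the Langmuir value. The rate constants and concentration are illustrative assumptions. A full column model would couple this term to mass-transport equations.

```python
def simulate_binding(c, k_a, k_d, q_max, t_end, dt):
    """Explicit-Euler integration of dq/dt = k_a*c*(q_max - q) - k_d*q.

    c:   free analyte concentration in M (held constant)
    k_a: association rate constant, 1/(M*s); k_d: dissociation rate, 1/s
    """
    q = 0.0
    for _ in range(int(round(t_end / dt))):
        q += dt * (k_a * c * (q_max - q) - k_d * q)
    return q


def langmuir_from_rates(c, k_a, k_d, q_max):
    """Equilibrium bound amount implied by the rate constants (b = k_a / k_d)."""
    b = k_a / k_d
    return q_max * b * c / (1.0 + b * c)


c, k_a, k_d, q_max = 1e-8, 1e5, 1e-3, 100.0
q_sim = simulate_binding(c, k_a, k_d, q_max, t_end=20000.0, dt=1.0)
q_eq = langmuir_from_rates(c, k_a, k_d, q_max)
print(round(q_sim, 3), round(q_eq, 3))  # both close to 50.0 (c equals K_D = k_d/k_a)
```

The same k_a and k_d also set how quickly binding approaches equilibrium. That approach rate is the information a purely equilibrium isotherm discards.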

Detailed Chromatography Protocol: Affinity Capture

This protocol describes the affinity capture of a monoclonal antibody from clarified cell culture supernatant using a Protein A column, a critical step in many antibody purification processes.

Title: Capture of Monoclonal Antibody using Protein A Affinity Chromatography.

Objective: To isolate a monoclonal antibody from clarified harvest with high purity and yield.

Materials:

- Chromatography System: ÄKTA or similar FPLC system with UV and conductivity monitors.

- Column: Pre-packed Protein A affinity column (e.g., MabSelect SuRe, 1 mL or 5 mL column volume).

- Buffers: Equilibration/Wash Buffer (50 mM Tris, 150 mM NaCl, pH 7.4), Elution Buffer (100 mM Citric Acid, pH 3.0), Neutralization Buffer (1 M Tris-HCl, pH 9.0), Storage Buffer (20% Ethanol).

- Sample: 50 mL of clarified cell culture supernatant.

- Equipment: 0.22 µm filter, pH meter, sterile tubes.

Method:

- System and Column Preparation: Equilibrate the chromatography system and column with 5-10 column volumes (CV) of Equilibration Buffer at the recommended flow rate until the UV baseline and conductivity are stable.

- Sample Loading: Filter the clarified harvest through a 0.22 µm filter. Load the 50 mL sample onto the column at a flow rate of 1-2 mL/min. Collect the flow-through and re-apply if necessary to maximize binding.

- Washing: Wash the column with 5-10 CV of Equilibration Buffer to remove unbound and weakly bound contaminants. Continue washing until the UV signal returns to baseline.

- Elution: Elute the bound antibody by applying 5-10 CV of Elution Buffer. Collect 1 mL fractions into tubes containing a pre-measured amount of Neutralization Buffer (e.g., 100 µL per tube) to immediately adjust the pH and prevent antibody degradation.

- Strip and Regeneration: After elution, wash the column with a stripping buffer (e.g., 0.1 M Glycine, pH 2.5-3.0) to remove any tightly bound impurities.

- Column Cleaning-in-Place (CIP) and Storage: Clean the column according to the manufacturer's instructions (e.g., with 0.5 M NaOH) to remove residual impurities and endotoxins. Finally, store the column in 20% ethanol.
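Before running this capture step, it is worth checking residence time and column loading. Depending on titer, a 50 mL harvest can exceed the capacity of a 1 mL column. The sketch assumes a hypothetical harvest titer of 2 g/L and a hypothetical dynamic binding capacity of 30 mg/mL resin, with a default cap of 80% of DBC. Substitute the measured titer and the DBC specified for the resin and flow rate actually used.

```python
def residence_time_min(column_volume_ml, flow_ml_min):
    """Residence time = column volume / volumetric flow rate."""
    return column_volume_ml / flow_ml_min


def load_fraction_of_dbc(titer_mg_ml, load_volume_ml, column_volume_ml, dbc_mg_ml):
    """Mass loaded as a fraction of the column's dynamic binding capacity."""
    return titer_mg_ml * load_volume_ml / (column_volume_ml * dbc_mg_ml)


def min_column_volume_ml(titer_mg_ml, load_volume_ml, dbc_mg_ml, max_fraction=0.8):
    """Smallest column that keeps the load at or below max_fraction of DBC."""
    return titer_mg_ml * load_volume_ml / (dbc_mg_ml * max_fraction)


TITER, LOAD_ML, DBC = 2.0, 50.0, 30.0  # hypothetical: 2 g/L titer, 30 mg/mL DBC
for cv in (1.0, 5.0):
    print(cv, residence_time_min(cv, 1.0),
          round(load_fraction_of_dbc(TITER, LOAD_ML, cv, DBC), 2))
print(round(min_column_volume_ml(TITER, LOAD_ML, DBC), 2))
```

With these assumed numbers, the 1 mL column would be loaded to about 3.3 times its DBC, while a 5 mL column sits near 67%. This shows why the flow-through may need to be re-applied or a larger column chosen.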

The logical workflow for this affinity capture step is summarized below.

Process Intensification and Scale-Up in Purification

Integrated and Intensified Processes

To address the bottleneck of downstream purification in biomanufacturing, the industry is moving toward process intensification. A key innovation is Single-Pass Tangential Flow Filtration (SPTFF), which concentrates a product in a single pass through a membrane or series of membrane modules without recirculation [23] [18]. This reduces residence time, lowers the risk of product degradation, and dramatically cuts buffer consumption compared to traditional diafiltration [18].

Integrating SPTFF inline with other purification steps, such as affinity chromatography, can create significant efficiencies. A pilot-scale study integrating SPTFF with affinity chromatography for Adeno-associated virus (AAV) purification demonstrated an 81% reduction in total operating time, a 36% improvement in affinity resin utilization, and an 8.5-fold increase in overall productivity compared to a batch process [23]. These improvements translate directly to reduced raw material costs and faster timelines in automated scale-up workflows.

Table 3: Quantitative Benefits of an Integrated SPTFF and Chromatography Process for AAV Purification (from [23])

| Performance Metric | Batch Process (Baseline) | Integrated SPTFF + Affinity Process | Improvement |
|---|---|---|---|
| Total Operating Time | Baseline | - | 81% Reduction |
| Resin Utilization | Baseline | - | 36% Improvement |
| Overall Productivity | Baseline | - | 8.5-Fold Increase |
| Host Cell Protein Removal | - | 37% - 48% (depending on scale) | - |
| AAV Yield | - | > 99% | - |

The Scientist's Toolkit: Essential Research Reagent Solutions

The following table details key materials and reagents essential for implementing the TFF and Chromatography protocols described in this document.

Table 4: Essential Research Reagents and Materials for Purification Protocols

| Item Name | Function/Description | Example Application |
|---|---|---|
| TFF Cassette (100 kDa PES) | A flat-sheet membrane format for ultrafiltration, offering high surface area and scalability [23]. | Concentration of viral vectors like AAV [23] and proteins. |
| Protein A Affinity Resin | Stationary phase with immobilized Protein A ligand that binds specifically to the Fc region of antibodies. | Primary capture step in monoclonal antibody purification [23]. |
| Regenerated Cellulose Membrane | A hydrophilic membrane material with low protein binding, minimizing product loss [23] [20]. | Ultrafiltration and concentration of sensitive proteins. |
| Chromatography Buffers (Tris, Citrate) | Mobile phase components that create the chemical environment (pH, ionic strength) for binding and elution. | Equilibration (neutral pH) and elution (low pH) in Protein A chromatography. |
| Single-Use TFF Assembly | A pre-sterilized, integrated flow path for TFF, eliminating cleaning validation and reducing cross-contamination risk [18]. | Multiproduct facilities and purification of high-potency molecules. |

Applied Automation: Protocols for Scale-Up and Purification

Implementing the Complete Similarity Approach for Reaction Scale-Up

Scaling up chemical reactions from the laboratory to industrial production is a core challenge in pharmaceutical development. Traditional scale-up methods, based on partial similarity, often preserve only a single, dominant mixing timescale (e.g., micro or meso mixing), which can lead to unreliable results and unexpected changes in product distribution when the dominant mechanism shifts [24]. The Complete Similarity Approach (CSA) offers a rigorous alternative by maintaining the dynamic similarity of all relevant physical and chemical timescales simultaneously [24]. This ensures that the internal distribution of mixing time scales remains consistent between small- and large-scale reactors, providing a more reliable and concise foundation for scaling automated reaction and purification protocols [24].

This Application Note details the practical implementation of CSA, providing a structured methodology, experimental protocols, and scaling rules designed for researchers and drug development professionals working within automated development workflows.

Theoretical Foundation of Complete Similarity

Core Principle: Dynamic Similarity of Timescales

In a competitively fast chemical reaction, the final product distribution is determined by the interplay between the reaction kinetics and the various stages of the mixing process. The CSA mandates that the ratios of all relevant time constants remain constant across scales, unlike the Partial Similarity Approach (PSA), which keeps only one timescale constant [24].

The key timescales involved are:

- Chemical Reaction Time (τ_rxn): Governed by reaction kinetics.

- Micro-Mixing Time (τ_micro): The time for the final mixing at the molecular level, closely related to the Kolmogorov microscale. Example definitions are the engulfment time τ_micro,en = 17.3 (ν/ε)^0.5 or the viscous-convective viscous-diffusive (VCVD) time τ_micro,VCVD = 0.5 (ν/ε)^0.5 ln(Sc), where ν is the kinematic viscosity, ε is the specific energy dissipation rate, and Sc is the Schmidt number [24].

- Meso-Mixing Time (τ_meso): Represents the coarse-scale mixing of feed streams with their surroundings, often related to turbulent dispersion or convective eddy disintegration. A common scaling is τ_meso ~ d_jet / ū_jet for a confined-impinging jet mixer (CIJM) [24].
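The micro- and meso-mixing definitions above can be evaluated directly. This sketch uses the engulfment, VCVD and jet-based expressions as stated. The fluid properties (water-like ν = 1e-6 m²/s, Sc = 1000) and the ε, d_jet and ū_jet values are illustrative assumptions. τ_meso is also taken as equal to d_jet / ū_jet, although the text gives only a proportionality.

```python
import math


def tau_engulfment(nu, eps):
    """Engulfment micromixing time: 17.3 * (nu / eps) ** 0.5, in s."""
    return 17.3 * math.sqrt(nu / eps)


def tau_vcvd(nu, eps, schmidt):
    """Viscous-convective viscous-diffusive time: 0.5 * (nu/eps)**0.5 * ln(Sc)."""
    return 0.5 * math.sqrt(nu / eps) * math.log(schmidt)


def tau_meso_jet(d_jet, u_jet):
    """Meso-mixing scale for a CIJM, taking the proportionality constant as 1."""
    return d_jet / u_jet


nu, sc = 1e-6, 1000.0   # m^2/s and Schmidt number for a water-like liquid
eps = 1.0               # W/kg, assumed local energy dissipation rate
print(f"{tau_engulfment(nu, eps):.4f} s")    # 0.0173 s
print(f"{tau_vcvd(nu, eps, sc):.5f} s")      # 0.00345 s
print(f"{tau_meso_jet(1e-3, 1.0):.4f} s")    # 0.0010 s (1 mm jet at 1 m/s)
```

Both micromixing times scale as ε^-0.5. Quadrupling the energy dissipation rate therefore halves them, whereas τ_meso depends only on the jet geometry and velocity.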

The Criterion of Damköhler Number Constancy

The central scaling parameter in CSA is the Damköhler number (Da), the ratio of the characteristic mixing time to the characteristic chemical reaction time (equivalently, the reaction rate relative to the mixing rate) [24]. For complete similarity, the Damköhler number must be kept constant during scale-up:

Da = τ_mixing / τ_reaction = Idem

This requires that if the mixing time increases upon scale-up (as it typically does), the chemical reaction time must increase by the same factor. For competitive chemical model reactions (CCMRs) such as the Villermaux-Dushman reaction, this can be achieved by adjusting the reactant concentrations, which changes the apparent reaction rate [24]. Holding Da constant in this way keeps the product distribution consistent across scales.
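Keeping Da constant fixes how much slower the reaction must become when mixing slows. The following is a minimal sketch. It assumes the mixing and reaction times at each scale are already known, for example from the mixing-time definitions and kinetic data. The numeric values are hypothetical.

```python
def damkohler(tau_mixing, tau_reaction):
    """Da = tau_mixing / tau_reaction."""
    return tau_mixing / tau_reaction


def required_tau_reaction(tau_mix_small, tau_rxn_small, tau_mix_large):
    """Reaction time needed at large scale so that Da_large == Da_small."""
    return tau_rxn_small * tau_mix_large / tau_mix_small


tau_mix_small, tau_rxn_small = 0.002, 0.010   # s, hypothetical lab CIJM
tau_mix_large = 0.008                          # s, hypothetical larger mixer
tau_rxn_large = required_tau_reaction(tau_mix_small, tau_rxn_small, tau_mix_large)
print(round(tau_rxn_large, 6))                 # 0.04
print(round(damkohler(tau_mix_small, tau_rxn_small), 6),
      round(damkohler(tau_mix_large, tau_rxn_large), 6))  # 0.2 0.2
```

Here a fourfold increase in mixing time requires a fourfold longer reaction time. The concentration change that delivers this depends on the rate law of the chosen model reaction.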

Scaling Rules and Quantitative Framework

The following table summarizes the key scaling parameters and their respective treatment under the Partial Similarity Approach (PSA) and the Complete Similarity Approach (CSA) for a generic geometrically similar confined-impinging jet mixer (CIJM).

Table 1: Scale-Up Rules for Mixing-Sensitive Competitive Reactions

| Scale-Up Criterion | Partial Similarity Approach (PSA) | Complete Similarity Approach (CSA) |
|---|---|---|
| Governing Principle | Keep dominant mixing timescale constant [24] | Keep all mixing timescales chemically and dynamically similar [24] |
| Meso-Mixing Similarity | (d_jet / ū_jet)_large = (d_jet / ū_jet)_small [24] | (d_jet / ū_jet)_large = (d_jet / ū_jet)_small |
| Micro-Mixing Similarity | ε_large = ε_small [24] | ε_large = ε_small |
| Chemical Reaction Similarity | Not maintained | Da_large = Da_small [24] |
| Key Implication | Dominant mechanism can switch during scale-up, leading to unreliable product distribution [24] | All time scales remain in same proportion; product distribution is preserved [24] |
| Primary Application | Industrial production processes [24] | Competitive Chemical Model Reactions (CCMRs) for mixer characterization and fundamental studies [24] |

For other reactor types, such as batch adsorption reactors, similar logic applies: kinetic similarity can be achieved by holding the power-to-volume ratio (P/V) constant while setting the remaining parameters (mixing time, suspension speed, and adsorbent loading) according to the criteria in Table 2 [25].

Table 2: Scaling Parameters for Batch Adsorption Reactors [25]

| Parameter | Scale-Up Criterion | Rationale |
|---|---|---|
| Power per Unit Volume (P/V) | Idem (constant) | Controls shear rate and the liquid-film mass transfer coefficient (k) via the Kolmogorov scale [25]. |
| Dimensionless Mixing Time (θ) | θ = t_m N = Idem | Ensures similar bulk homogenization (macro-mixing) across scales [25]. |
| Impeller Speed for Suspension (N_JS) | N_JS ∝ D^{-0.85} (Zwietering correlation; D = impeller diameter) | Ensures complete suspension of solid particles [25]. |
| Kinetic Similarity (for combined mass transfer) | (m/V) = Idem and N D^{0.667} = Idem | Achieves C(t)_Bench = C(t)_Industrial for systems with intraparticle and liquid-film resistance [25]. |
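The criteria in Table 2 translate into simple scale-up arithmetic. For geometrically similar vessels in the turbulent regime (constant power number), P ∝ N³D⁵ and V ∝ D³, so P/V = Idem reduces to N·D^(2/3) = Idem, which is the N D^{0.667} criterion in the table. The sketch below assumes that regime, uses D for impeller diameter, and treats the function name and all values as hypothetical.

```python
from typing import Optional


def scale_adsorption_reactor(
    n_small: float,      # bench impeller speed, rev/s
    d_small: float,      # bench impeller diameter, m
    d_large: float,      # large-scale impeller diameter, m
    mass_small: float,   # bench adsorbent mass, kg
    n_js_small: float,   # bench just-suspended speed, rev/s
    theta: Optional[float] = None,  # dimensionless mixing time t_m * N
) -> dict:
    """Hypothetical helper applying the Table 2 criteria to geometrically similar vessels."""
    scale = d_large / d_small
    # P/V = Idem -> N * D**(2/3) = Idem (turbulent regime, constant power number assumed)
    n_large = n_small * scale ** (-2.0 / 3.0)
    # Zwietering: N_JS proportional to D**-0.85 for the same slurry
    n_js_large = n_js_small * scale ** (-0.85)
    # m/V = Idem with V proportional to D**3
    mass_large = mass_small * scale ** 3
    result = {
        "n_large": n_large,
        "n_js_large": n_js_large,
        "suspended": n_large >= n_js_large,
        "mass_large": mass_large,
    }
    if theta is not None:
        # theta = t_m * N = Idem -> t_m = theta / N
        result["t_mix_large"] = theta / n_large
    return result


if __name__ == "__main__":
    r = scale_adsorption_reactor(5.0, 0.1, 0.4, 0.002, 4.0, theta=20.0)
    for key, value in r.items():
        print(key, value)
```

Because the exponent 2/3 is smaller than Zwietering's 0.85, holding P/V constant lowers the impeller speed more gently than the just-suspended speed falls, so under these assumptions a bench condition that suspends the solids should still do so at the larger scale; the trade-off is a longer absolute mixing time t_m = θ/N.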

Experimental Protocol: CSA Validation via Villermaux-Dushman Reaction

This protocol outlines the steps to validate the Complete Similarity Approach using the Villermaux-Dushman reaction in geometrically similar Confined-Impinging Jet Mixers (CIJMs).

Research Reagent Solutions

Table 3: Essential Reagents and Materials for Villermaux-Dushman Protocol

| Item | Function / Description |
|---|---|
| Confined-Impinging Jet Mixers (CIJMs) | Geometrically similar mixers at different size scales (e.g., varying jet diameter, d_jet). The characteristic length and velocity are d_jet and ū_jet, respectively [24]. |
| Villermaux-Dushman Reagents | A competitive parallel reaction system in which a fast and a slow reaction compete for a common educt: the quasi-instantaneous neutralization of borate (H₂BO₃⁻ + H⁺) competes with the slower iodide-iodate reaction for H⁺. Used to quantify mixing efficiency [24]. |
| Peristaltic or Syringe Pumps | To ensure equal inlet volume flows of the reactant streams into the CIJMs [24]. |
| UV-Vis Spectrophotometer | For analyzing the product distribution (triiodide, I₃⁻, concentration) to determine the selectivity of the competing reactions [24]. |
| Data Acquisition System | To record and control process parameters such as flow rates and pressures. |

Step-by-Step Procedure

- Reactor Setup: Install at least two geometrically similar CIJMs of different scales. Define the characteristic jet diameter (d_jet) and average inlet velocity (ū_jet) for each.
- Baseline Determination (Perfect Mixing): At the small scale, perform the Villermaux-Dushman reaction under highly efficient mixing (very high ε) and measure the product distribution. This is the baseline in which only the fast reaction occurs.
- Small-Scale Characterization:
  - Conduct the reaction at various ū_jet (and thus various ε) on the small-scale CIJM.
  - For each condition, measure the product distribution (e.g., the selectivity X).
  - Plot the product distribution X against the Damköhler number Da for the small-scale reactor.
- CSA Scale-Up Calculation:
  - Select a target operating point (Da_target, X_target) from the small-scale data.
  - For the large-scale reactor, calculate the ū_jet,large required to achieve ε_large = ε_small (micro-mixing similarity).
  - Simultaneously, ensure (d_jet / ū_jet)_large = (d_jet / ū_jet)_small (meso-mixing similarity).
  - Crucially, adjust the concentrations of the Villermaux-Dushman reactants in the large-scale experiment so that Da_large = Da_target [24].
- Large-Scale Validation:
  - Run the reaction on the large-scale CIJM using the calculated ū_jet,large and the adjusted reactant concentrations.
  - Measure the product distribution X_large.
- Data Analysis and Comparison: Compare X_large with X_target. CSA is validated if the product distributions match across scales within experimental uncertainty.
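The final comparison step can be sketched as interpolating the small-scale X = f(Da) curve at the target Da and testing whether the large-scale result lies within a tolerance. This is a minimal sketch assuming linear interpolation between measured points and a user-chosen absolute tolerance; the data values, tolerance, and function names are hypothetical.

```python
from bisect import bisect_left
from typing import Sequence


def interpolate_x(da_points: Sequence[float], x_points: Sequence[float], da: float) -> float:
    """Linearly interpolate X at a given Da from small-scale data sorted by increasing Da."""
    if len(da_points) != len(x_points) or len(da_points) < 2:
        raise ValueError("need at least two matching (Da, X) points")
    if not da_points[0] <= da <= da_points[-1]:
        raise ValueError("Da lies outside the calibrated small-scale range")
    i = bisect_left(da_points, da)
    if da_points[i] == da:
        return x_points[i]  # exact match with a measured point
    d0, d1 = da_points[i - 1], da_points[i]
    x0, x1 = x_points[i - 1], x_points[i]
    return x0 + (x1 - x0) * (da - d0) / (d1 - d0)


def csa_validated(x_large: float, x_target: float, abs_tol: float) -> bool:
    """True if the large-scale product distribution matches the target within abs_tol."""
    return abs(x_large - x_target) <= abs_tol


if __name__ == "__main__":
    da_small = [0.01, 0.1, 1.0]     # hypothetical small-scale Da values
    x_small = [0.002, 0.02, 0.15]   # hypothetical measured selectivities X
    x_target = interpolate_x(da_small, x_small, 0.5)
    print(f"X_target = {x_target:.4f}")
    print("validated:", csa_validated(0.080, x_target, abs_tol=0.005))
```

Refusing to extrapolate outside the measured Da range is a deliberate choice: a target outside the small-scale data has no experimental reference to validate against.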

The workflow below visualizes this multi-step scale-up and validation process.

Integration with Automated Workflows

The CSA provides an ideal, model-based foundation for automating reaction scale-up. The deterministic scaling rules can be codified into software and coupled with automated platforms.

- Data-Rich Experimentation: Automated high-throughput screening (HTS) platforms can rapidly generate the small-scale kinetic and mixing data required to build the X = f(Da) model [26]. LLM-based agents or other AI tools can assist in designing these experiments and extracting information from the literature [26].
- Model-Based Scale-Up: Software tools such as Dynochem or Reaction Lab can use the fundamental data to create kinetic and mixing models [27]. These models can automatically predict large-scale performance and calculate the required CSA parameters (e.g., new concentrations and ū_jet).
- Automated Purification: Scaling up the reaction also requires scaling downstream purification. Integrated platforms can automatically handle post-reaction processing, such as collecting and reformatting purified fractions for analysis and biological testing, as demonstrated in high-throughput pharmaceutical workflows [28] [29].
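As an illustration of codifying the CSA rules in software, the sketch below checks a proposed large-scale operating point against a small-scale reference for meso-mixing, micro-mixing, and Damköhler similarity. It assumes ε and the governing mixing and reaction times have already been estimated (for example, from correlations or CFD); the class, function, and 5 % relative tolerance are hypothetical choices, not part of Dynochem, Reaction Lab, or any other named tool.

```python
import math
from dataclasses import dataclass


@dataclass
class OperatingPoint:
    d_jet: float         # jet diameter, m
    u_jet: float         # mean inlet velocity, m/s
    eps: float           # specific energy dissipation rate, W/kg (estimated separately)
    tau_mixing: float    # governing mixing time, s
    tau_reaction: float  # characteristic reaction time, s


def csa_check(small: OperatingPoint, large: OperatingPoint, rtol: float = 0.05) -> dict:
    """Report which CSA similarity criteria hold within a relative tolerance."""
    return {
        # Meso-mixing similarity: (d_jet / u_jet) equal at both scales
        "meso": math.isclose(small.d_jet / small.u_jet, large.d_jet / large.u_jet, rel_tol=rtol),
        # Micro-mixing similarity: equal specific energy dissipation rate
        "micro": math.isclose(small.eps, large.eps, rel_tol=rtol),
        # Chemical similarity: Da = tau_mixing / tau_reaction equal
        "damkohler": math.isclose(
            small.tau_mixing / small.tau_reaction,
            large.tau_mixing / large.tau_reaction,
            rel_tol=rtol,
        ),
    }


if __name__ == "__main__":
    small = OperatingPoint(d_jet=1e-3, u_jet=2.0, eps=10.0, tau_mixing=0.01, tau_reaction=0.1)
    large = OperatingPoint(d_jet=2e-3, u_jet=4.0, eps=40.0, tau_mixing=0.04, tau_reaction=0.4)
    print(csa_check(small, large))
```

In this hypothetical example the meso and Damköhler criteria hold but the micro-mixing criterion does not, which is the kind of mismatch an automated workflow would flag before committing material to a large-scale run.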

The following diagram illustrates this integrated, automated development cycle.

The Complete Similarity Approach moves beyond the limitations of traditional scale-up by ensuring dynamic similarity across all relevant physical and chemical timescales. By maintaining a constant Damköhler number in addition to meso- and micro-mixing similarity, CSA enables reliable and predictable scaling of competitive chemical reactions. Although it is most powerful when model reactions are used to characterize equipment, its core principle of holding every relevant timescale ratio constant applies more broadly. When integrated with modern automated synthesis, modeling software, and purification platforms, CSA provides a robust, data-driven framework that can significantly de-risk scale-up, accelerate process development, and improve the reliability of automated scale-up protocols in pharmaceutical research and development.

Deploying Single-Use and Single-Pass Tangential Flow Filtration

Single-Pass Tangential Flow Filtration (SPTFF) is an advanced downstream processing technology that enables continuous concentration and buffer exchange of biological products in a single pass through the filter assembly, eliminating the need for retentate recycling typical of traditional batch TFF operations [30]. This technology is increasingly deployed in modern biomanufacturing due to its compact footprint, compatibility with single-use systems, and ability to integrate directly into continuous processing workflows [31]. Within the context of automated reaction scale-up and product purification, SPTFF represents a critical unit operation that enhances process intensification, reduces hold volumes, and improves overall manufacturing efficiency for therapeutic proteins, vaccines, and other biologics [32].

Key Principles and Comparative Advantages

Fundamental Operational Differences

SPTFF fundamentally differs from traditional TFF in its flow configuration. Traditional TFF operates in batch or fed-batch mode, passing the retentate back through the same filter many times, whereas SPTFF achieves the desired concentration in a single, continuous pass by configuring multiple filtration modules in series [30]. This serial configuration creates an elongated feed channel path, which increases residence time and conversion, defined as the fraction of the feed flow that leaves as permeate. The basic principle underlying SPTFF is that increased residence time in the feed channel directly results in increased conversion of feed material to permeate [30].
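The link between serial sections and conversion can be shown with a short mass balance. The sketch assumes that each section's conversion is expressed relative to the flow entering that section, that the product is fully retained, and that all flows share consistent units (e.g., L/min); the function name and numbers are hypothetical.

```python
from typing import Sequence


def series_performance(feed_flow: float, section_conversions: Sequence[float]) -> dict:
    """Mass balance for SPTFF sections in series (retentate of one feeds the next)."""
    if feed_flow <= 0:
        raise ValueError("feed flow must be positive")
    retentate = feed_flow
    for x in section_conversions:
        if not 0.0 <= x < 1.0:
            raise ValueError("each section conversion must lie in [0, 1)")
        retentate *= 1.0 - x          # flow leaving this section as retentate
    permeate = feed_flow - retentate  # total permeate from all sections
    return {
        "permeate": permeate,
        "retentate": retentate,
        "conversion": permeate / feed_flow,
        # Volumetric concentration factor; equals the product concentration
        # factor only if the product is fully retained (assumed here).
        "concentration_factor": feed_flow / retentate,
    }


if __name__ == "__main__":
    for n in (1, 2, 3, 4):
        r = series_performance(1.0, [0.5] * n)
        print(n, "sections: conversion =", round(r["conversion"], 4),
              "CF =", round(r["concentration_factor"], 1))
```

Under these assumptions, four sections at 50 % conversion each give an overall conversion of about 94 % and a 16-fold volumetric concentration, illustrating why adding sections in series raises conversion.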

Advantages for Automated Purification Protocols

The implementation of SPTFF within automated purification protocols offers several distinct advantages:

- Process Intensification: SPTFF systems conserve valuable cleanroom production space, offering a small footprint compared with traditional batch-mode TFF systems [31].

- Continuous Processing: As a continuous processing system, SPTFF effectively links upstream and downstream operations, enabling true continuous biomanufacturing [32].

- Reduced Operator Intervention: Automated SPTFF systems facilitate real-time monitoring and control of key process parameters, decreasing the need for operator intervention and reducing the risk of errors [32].

- Scalability: SPTFF technology can be scaled from laboratory to commercial manufacturing while maintaining process consistency [30].

Table 1: Comparison of Traditional TFF vs. Single-Pass TFF

| Parameter | Traditional TFF | Single-Pass TFF |
|---|---|---|
| Operation Mode | Batch/fed-batch with recirculation | Continuous, single-pass |
| Footprint | Larger due to hold tanks | Compact, space-efficient |
| Process Integration | Discrete unit operation | Enables continuous processing |
| Automation Potential | Moderate | High, with real-time monitoring |
| Buffer Consumption | Higher | Lower |
| Hold-up Volume | Significant | Minimal |

Implementation Protocol for SPTFF

System Configuration and Setup

Implementing SPTFF using commercially available capsules or cassettes involves three fundamental steps [30]:

1. Filter Assembly Configuration

- Install filtration devices (e.g., Pellicon Capsules) in series rather than in parallel

- Each section must be of equal membrane area for optimal process exploration

- Connect the retentate of the first capsule directly to the feed of the second, alternating subsequently for each additional capsule

- This serial configuration creates the elongated feed channel necessary for increased conversion

2. Establishing Operating Conditions

- Determine the optimal retentate pressure at a baseline test flow rate (typically 1 LMM, i.e., 1 L/min per m² of membrane area)

- Conduct feed flux excursions to obtain the target conversion

- Incrementally increase retentate pressure until the maximum desirable conversion is reached or the system becomes unstable

- Allow sufficient time for polarization to fully develop and flux to stabilize (generally 1-30 minutes)

3. Confirming Process Stability

- Conduct a single-pass process simulation at the target conversion

- Operate until conversion and pressure profile become steady

- For robust process validation, run for the target duration using fresh feed material
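Deciding when conversion and the pressure profile have become steady can be automated. The sketch below applies one possible rule, assumed here for illustration: a signal is steady when the spread (maximum minus minimum) of its most recent readings is within a set fraction of their mean. The window size, tolerance, and function names are hypothetical and would need to be chosen for the actual system and sampling rate.

```python
from typing import Sequence


def is_steady(series: Sequence[float], window: int = 10, rel_tol: float = 0.02) -> bool:
    """True if the last `window` readings span no more than rel_tol of their mean."""
    if len(series) < window:
        return False  # not enough data collected yet
    recent = list(series[-window:])
    center = sum(recent) / window
    spread = max(recent) - min(recent)
    if center == 0:
        return spread == 0
    return spread / abs(center) <= rel_tol


def process_steady(conversion: Sequence[float], pressures: Sequence[Sequence[float]],
                   window: int = 10, rel_tol: float = 0.02) -> bool:
    """Steady only when conversion and every monitored pressure signal are steady."""
    return is_steady(conversion, window, rel_tol) and all(
        is_steady(p, window, rel_tol) for p in pressures
    )


if __name__ == "__main__":
    conversion = [0.70, 0.74, 0.77, 0.79] + [0.80] * 10   # illustrative log
    feed_p = [32.0, 31.0, 30.5] + [30.0] * 11             # psi, illustrative
    retentate_p = [12.0, 11.0] + [10.0] * 12              # psi, illustrative
    print("steady:", process_steady(conversion, [feed_p, retentate_p]))
```

In practice the same check would be run on each logged signal (for example feed, retentate, and permeate pressures) at the system's sampling interval.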

Experimental Design and Optimization

Optimal Retentate Pressure Determination

The optimal retentate pressure is application-specific and depends on feed composition and concentration. More dilute feeds generally require lower retentate pressure for a given conversion [30]. The methodology involves:

- Setting the feed flux to 1 LMM

- Starting from the lowest retentate pressure (retentate valve fully open)

- Increasing pressure incrementally (e.g., in 1-2 psi steps)

- Monitoring conversion until the maximum is reached or the system becomes unstable

- Identifying the inflection point in the conversion (or permeate flux) versus retentate pressure curve, where flux stops increasing significantly
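The inflection-point search can be automated with a simple slope rule. This sketch assumes permeate flux (or conversion) readings taken at increasing retentate pressure and flags the first pressure step whose incremental gain falls below a chosen fraction of the initial gain; the 20 % threshold and the function name are hypothetical, and the data are illustrative.

```python
from typing import Sequence


def find_optimal_pressure(
    pressures: Sequence[float], fluxes: Sequence[float], slope_fraction: float = 0.2
) -> float:
    """Return the pressure beyond which further increases add little flux."""
    if len(pressures) != len(fluxes) or len(pressures) < 3:
        raise ValueError("need at least three matching pressure/flux readings")
    slopes = [
        (fluxes[i + 1] - fluxes[i]) / (pressures[i + 1] - pressures[i])
        for i in range(len(pressures) - 1)
    ]
    initial = slopes[0]
    if initial <= 0:
        raise ValueError("flux should increase over the first pressure step")
    for i, slope in enumerate(slopes):
        # First step whose gain per psi drops below the threshold marks the plateau onset.
        if slope < slope_fraction * initial:
            return pressures[i]
    return pressures[-1]  # no plateau detected within the tested range


if __name__ == "__main__":
    p = [2, 4, 6, 8, 10, 12]       # retentate pressure, psi (illustrative)
    j = [10, 30, 45, 52, 54, 55]   # permeate flux, LMH (illustrative)
    print("optimal retentate pressure ~", find_optimal_pressure(p, j), "psi")
```

A slope rule is used rather than curve fitting because it needs no model of the flux-pressure relationship; with noisy data, a small moving average before computing slopes may be worth considering.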

Feed Flux Excursions

Once the optimal retentate pressure is established, feed flux excursions determine the operating parameters for the target conversion:

- Hold the retentate pressure constant at the established optimum

- Start at 1 LMM (or higher if conversion is too high at 1 LMM)

- Record individual permeate flows to calculate conversion for each section

- Plot conversion against feed flux to identify the feed flow rate that gives the desired conversion
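The excursion data can be reduced to an operating point in two steps: compute conversion from the recorded permeate flows, then interpolate the feed flux that gives the target conversion. This sketch assumes per-section conversion is defined relative to the flow entering each section and that conversion varies monotonically with feed flux over the tested range; the function names and numbers are hypothetical.

```python
from typing import List, Sequence, Tuple


def conversion_from_flows(feed_flow: float, permeate_flows: Sequence[float]) -> Tuple[List[float], float]:
    """Per-section and overall conversion from measured permeate flows (same units as feed)."""
    per_section = []
    remaining = feed_flow  # flow entering the current section
    for p in permeate_flows:
        per_section.append(p / remaining)
        remaining -= p     # retentate passed on to the next section
    return per_section, sum(permeate_flows) / feed_flow


def flux_for_target(fluxes: Sequence[float], conversions: Sequence[float], target: float) -> float:
    """Linearly interpolate the feed flux (LMM) that yields the target overall conversion."""
    pairs = sorted(zip(fluxes, conversions))
    for (f0, c0), (f1, c1) in zip(pairs, pairs[1:]):
        if min(c0, c1) <= target <= max(c0, c1):
            if c0 == c1:
                return f0
            return f0 + (target - c0) * (f1 - f0) / (c1 - c0)
    raise ValueError("target conversion not bracketed by the excursion data")


if __name__ == "__main__":
    per, total = conversion_from_flows(1.0, [0.5, 0.25, 0.125])   # L/min, illustrative
    print("per-section:", per, "overall:", total)
    flux = flux_for_target([0.5, 1.0, 1.5, 2.0], [0.95, 0.9, 0.8, 0.7], target=0.85)
    print(f"feed flux for 85 % conversion ~ {flux:.2f} LMM")
```

The interpolation reflects the trend in Table 2 below: lower feed flux means longer residence time and higher conversion, so the target is found between the two excursion points that bracket it.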

Table 2: Key Process Parameters and Their Effects on SPTFF Performance

| Parameter | Effect on Process | Optimization Guidance |
|---|---|---|
| Retentate Pressure | Directly impacts conversion; too high a pressure causes membrane fouling | Find the inflection point where the flux increase plateaus |
| Feed Flux (LMM) | Determines residence time and final conversion | Lower flux increases residence time and conversion |
| Number of Sections | Affects path length and overall conversion | More sections in series increase conversion |
| Feed Concentration | Influences the optimal pressure setpoint | Dilute feeds require lower pressure |
| Membrane Material | Affects flux and fouling behavior | PES offers low protein binding and high flow rates |

Scaling Considerations and Case Studies

Scalability of SPTFF Systems

Scaling SPTFF processes between different device formats or sizes can be achieved by maintaining consistent feed flux and pressure drop across the feed channel [30]. The fundamental principle for scale-up involves:

- Keeping feed flux (set by the pump) constant between scales

- Maintaining equivalent pressure drop across the feed channel (set by retentate valve)

- This approach ensures consistent conversion without re-establishing optimal retentate pressure

- Scaling is possible within device families or between different formats (capsules to cassettes)
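Holding feed flux constant turns the large-scale flow rates into simple arithmetic. The sketch assumes both scales normalize feed flux to membrane area in the same way, use the same number of sections in series, and run at the same feed-channel pressure drop, so the conversion found at small scale carries over; the function name and values are hypothetical.

```python
def scale_sptff(feed_flow_small: float, area_small: float, area_large: float,
                conversion: float) -> dict:
    """Scale SPTFF flows at constant feed flux (flows in L/min, areas in m^2)."""
    if not 0.0 <= conversion < 1.0:
        raise ValueError("conversion must lie in [0, 1)")
    feed_flux = feed_flow_small / area_small   # LMM, held constant between scales
    feed_flow_large = feed_flux * area_large   # pump setpoint at the large scale
    permeate_large = conversion * feed_flow_large
    return {
        "feed_flux_lmm": feed_flux,
        "feed_flow_large": feed_flow_large,
        "permeate_large": permeate_large,
        "retentate_large": feed_flow_large - permeate_large,
    }


if __name__ == "__main__":
    # Illustrative: 0.3 m^2 lab assembly at 0.3 L/min scaled to 3.0 m^2 at 90 % conversion
    print(scale_sptff(0.3, 0.3, 3.0, conversion=0.9))
```

The retentate valve is then adjusted at the large scale to reproduce the small-scale feed-channel pressure drop; that step depends on the hardware and is not modeled here.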

Case Study: Concentration of Monoclonal Antibody