Benchmarking AI-Driven Synthesis Planning: A Comprehensive Guide for Pharmaceutical Research

Abstract

This article provides a comprehensive framework for benchmarking AI-driven synthesis planning algorithms, a critical capability for accelerating drug discovery. Tailored for researchers and drug development professionals, it explores the foundational principles, methodological approaches, and key performance metrics essential for robust evaluation. The content addresses common optimization challenges, including data quality and model generalizability, and offers insights into comparative analysis and validation against real-world pharmaceutical applications. By synthesizing current trends and future directions, this guide aims to equip scientists with the knowledge to effectively validate and implement these transformative tools in biomedical research.

The Foundation of AI Synthesis Planning: Core Concepts and Industry Impact

Defining AI-Driven Synthesis Planning and Retrosynthetic Analysis

Artificial Intelligence (AI) is fundamentally reshaping the methodologies of organic synthesis and chemical discovery. For researchers, scientists, and drug development professionals, understanding the performance and applicability of the current generation of AI-driven synthesis tools is crucial. AI-driven synthesis planning refers to computational systems that propose viable synthetic routes for target molecules, while retrosynthetic analysis is the specific problem of deconstructing a target molecule into simpler precursor molecules [1]. This guide provides a comparative benchmark of leading algorithms, framing their performance within the broader thesis of ongoing research to establish robust, standardized evaluation protocols for these rapidly evolving tools. The transition from traditional, expert-driven workflows to intelligence-guided, data-driven processes marks a pivotal shift in molecular catalysis and chemical research [1].

Performance Comparison of Leading AI Models

Benchmarking on standardized datasets is essential for evaluating the performance of retrosynthesis algorithms. The table below summarizes the Top-1 accuracy—the percentage of cases where the model's first prediction is correct—of state-of-the-art models on common benchmark datasets.

Table 1: Top-1 Accuracy Comparison on Benchmark Datasets

| Model | USPTO-50k Accuracy | USPTO-MIT Accuracy | USPTO-FULL Accuracy | Key Characteristic |
|---|---|---|---|---|
| RSGPT [2] | 63.4% | Not reported | Not reported | Generative transformer pre-trained on 10B+ data points |
| RetroExplainer [3] | 54.2% (class known) | Competitive results per [10] | Competitive results per [10] | Interpretable, molecular-assembly-based |
| LocalRetro [3] | ~53% (class known) | Not reported | Not reported | Not a benchmark leader in [10] |
| R-SMILES [3] | ~41% (class unknown) | Not reported | Not reported | Not a benchmark leader in [10] |

Beyond single-step prediction accuracy, AI planners can also be evaluated on broader, multi-step planning tasks. A 2025 study assessed frontier Large Language Models (LLMs) such as GPT-5 on planning problems written in the Planning Domain Definition Language (PDDL), a formal language for specifying planning tasks. GPT-5 solved 205 of 360 tasks, making it competitive with a specialized classical planner (LAMA, which solved 204) on standard benchmarks. However, when the domains were "obfuscated" (all semantic clues removed from names), the performance of all LLMs degraded, indicating that their reasoning still relies partly on semantic cues rather than pure symbolic manipulation [4].

Experimental Protocols for Benchmarking

To ensure fair and meaningful comparisons, the following experimental protocols are commonly employed in benchmarking AI synthesis planners.

Dataset Splitting and Preparation

The USPTO (United States Patent and Trademark Office) datasets are the most widely used benchmarks. The standard USPTO-50k contains approximately 50,000 reaction examples. Performance is typically evaluated under two scenarios:

- Reaction Class Known: The reaction type of the test example is provided to the model.

- Reaction Class Unknown: The model receives no reaction-type information and must predict the reactants from the product alone [3].

To prevent scaffold bias and information leakage from the training set to the test set, a Tanimoto similarity splitting method is recommended. This approach ensures that molecules in the test set have a structural similarity (based on Tanimoto coefficient) below a specific threshold (e.g., 0.4, 0.5, or 0.6) compared to all molecules in the training set, providing a more rigorous assessment of model generalizability [3].
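The filtering step can be sketched as follows. This is a minimal illustration, assuming each molecule's fingerprint is already available as a set of on-bit indices; in practice those would come from a cheminformatics toolkit (for example ECFP/Morgan fingerprints), a step omitted here. The function names and toy bit sets are hypothetical.

```python
from typing import Dict, List, Set


def tanimoto(a: Set[int], b: Set[int]) -> float:
    """Tanimoto coefficient |A & B| / |A | B| between two sets of fingerprint on-bits."""
    union = len(a | b)
    return len(a & b) / union if union else 0.0


def similarity_filtered_test_set(
    train_fps: Dict[str, Set[int]],
    candidate_fps: Dict[str, Set[int]],
    threshold: float = 0.4,
) -> List[str]:
    """Return IDs of candidates whose highest similarity to any training
    molecule is below `threshold`; candidates at or above it are excluded."""
    kept = []
    for mol_id, fp in candidate_fps.items():
        nearest = max((tanimoto(fp, t) for t in train_fps.values()), default=0.0)
        if nearest < threshold:
            kept.append(mol_id)
    return kept


# Toy on-bit sets standing in for real fingerprints (hypothetical data).
train = {"t1": {1, 2, 3, 4}, "t2": {10, 11, 12}}
candidates = {"c1": {1, 2, 3, 5}, "c2": {20, 21, 22}, "c3": {10, 30, 31, 32}}
print(similarity_filtered_test_set(train, candidates, threshold=0.4))  # ['c2', 'c3']
```

Here `c1` is dropped because its similarity to `t1` is 0.6. Raising the threshold to 0.5 or 0.6 admits more test molecules and makes the split less strict; an equivalent variant removes overly similar molecules from the training side instead.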

Plan Validation and Metrics

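For single-step retrosynthesis, the primary metric is top-k exact-match accuracy: the fraction of test reactions for which at least one of the model's k highest-ranked predictions is correct. A prediction is considered correct only if its set of proposed reactant SMILES strings exactly matches the reactants recorded in the dataset (typically after both are converted to canonical SMILES) [2] [3].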
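A minimal sketch of the metric follows. To stay dependency-free it assumes every SMILES fragment is already canonical and only sorts the dot-separated fragments so that reactant order does not matter; a real evaluation would canonicalize each fragment with a cheminformatics toolkit first. Function names and the toy reactions are illustrative.

```python
from typing import Sequence, Tuple


def reactant_key(smiles: str) -> Tuple[str, ...]:
    """Order-insensitive key for a dot-separated reactant SMILES.

    Assumption: every fragment is already in canonical SMILES form; a real
    evaluation would canonicalize each fragment with a cheminformatics toolkit.
    """
    return tuple(sorted(frag.strip() for frag in smiles.split(".") if frag.strip()))


def top_k_accuracy(
    ranked_predictions: Sequence[Sequence[str]],  # per test reaction, best guess first
    ground_truth: Sequence[str],                  # recorded reactants per test reaction
    k: int,
) -> float:
    """Fraction of test reactions whose true reactant set appears among the top-k predictions."""
    if not ground_truth or len(ranked_predictions) != len(ground_truth):
        raise ValueError("need one ranked prediction list per ground-truth entry")
    hits = 0
    for ranked, truth in zip(ranked_predictions, ground_truth):
        target = reactant_key(truth)
        if any(reactant_key(p) == target for p in ranked[:k]):
            hits += 1
    return hits / len(ground_truth)


# Toy example with hand-written SMILES (illustrative only).
truth = ["OCC.CC(=O)O", "CC(=O)Cl.NCC", "Brc1ccccc1.OB(O)c1ccccc1"]
preds = [
    ["CC(=O)O.OCC", "CC(=O)Cl.OCC"],                          # hit at rank 1 (fragment order ignored)
    ["CC(=O)O.NCC", "CC(=O)Cl.NCC"],                          # hit at rank 2
    ["Ic1ccccc1.OB(O)c1ccccc1", "Clc1ccccc1.OB(O)c1ccccc1"],  # miss
]
print(top_k_accuracy(preds, truth, k=1), top_k_accuracy(preds, truth, k=2))  # 1/3 and 2/3
```

Top-1 accuracy, as reported in Table 1, is simply the `k=1` case.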

For multi-step synthesis planning and broader AI planning evaluations, the key metrics are:

- Coverage: The number of tasks (or target molecules) for which a valid plan (or synthetic route) is successfully generated [4].

- Plan Validation: All generated plans must be validated using sound automated tools. In formal planning, the VAL tool is used [4]. In chemistry, reaction validation can be performed with tools like RDChiral, which checks the chemical rationality of the proposed reaction based on reaction rules [2].
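Once every plan passes through a validator, coverage reduces to a simple ratio. The sketch below assumes a hypothetical `validate` callable standing in for a sound checker (VAL for PDDL plans, or a reaction-level check for synthetic routes); the stub validator is illustrative only. With the counts quoted earlier, 205 solved tasks out of 360 corresponds to roughly 56.9% coverage.

```python
from typing import Callable, Dict, Optional, Sequence

Plan = Sequence[str]  # e.g. a list of actions or reaction steps


def coverage(
    plans: Dict[str, Optional[Plan]],       # task id -> generated plan (None if the planner gave up)
    validate: Callable[[str, Plan], bool],  # hypothetical sound validator
) -> float:
    """Fraction of tasks whose generated plan exists AND passes validation."""
    if not plans:
        raise ValueError("no tasks to score")
    solved = sum(
        1 for task, plan in plans.items() if plan is not None and validate(task, plan)
    )
    return solved / len(plans)


# Illustrative stub: treat any non-empty plan as valid.
def stub_validate(task: str, plan: Plan) -> bool:
    return len(plan) > 0


plans = {"task1": ["step-a", "step-b"], "task2": None, "task3": [], "task4": ["step-c"]}
print(coverage(plans, stub_validate))  # 0.5
```

Counting only validated plans matters because an unvalidated "plan" from an LLM can look complete while containing an illegal step.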

Architectural and Methodological Approaches

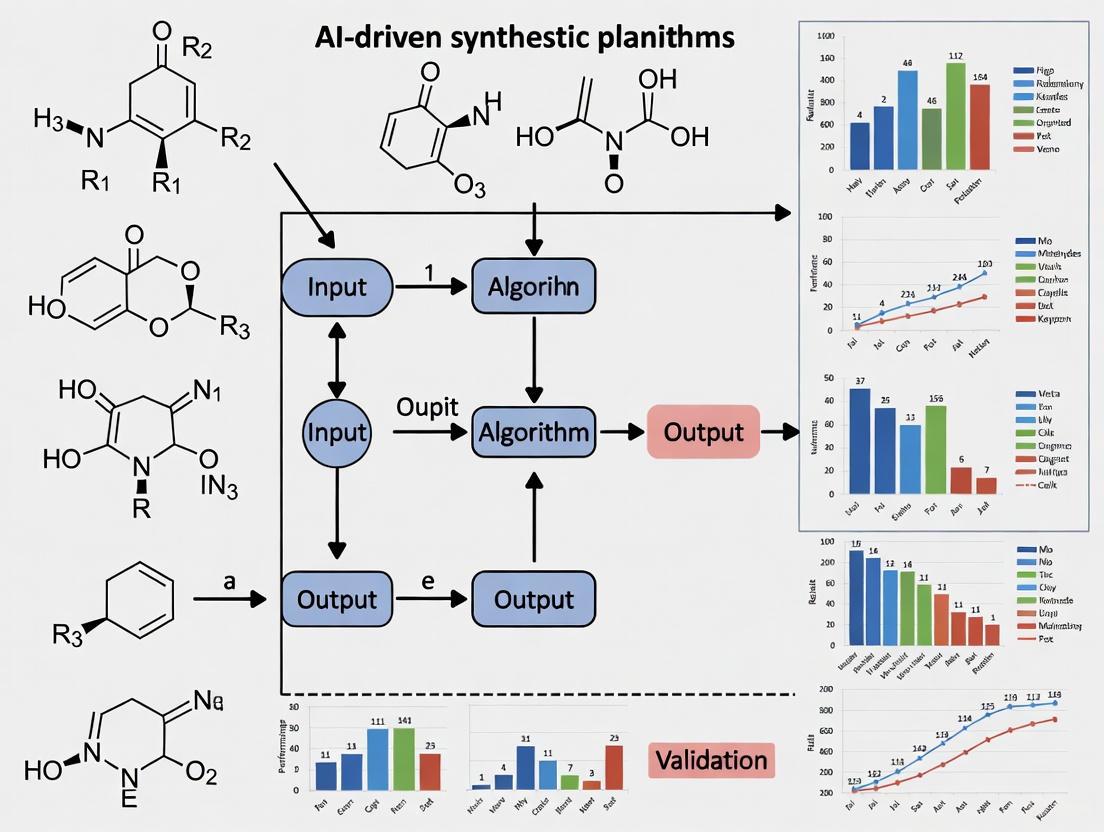

AI models for retrosynthesis can be categorized by their underlying methodology, each with distinct strengths and limitations. The logical relationship between these approaches and their core characteristics is summarized in the accompanying figure.

Template-Based Methods

These methods rely on libraries of reaction templates—expert-defined or automatically extracted rules that describe the core structural transformation of a reaction [1]. A model identifies the reaction center in the target product and matches it with a template to trace back to possible reactants.

- Strengths: High interpretability, as predictions are linked to known chemical rules [3].

- Weaknesses: Limited generalization; they cannot predict reactions outside their template library. They can also be computationally intensive for complex molecules [1].

- Examples: ASKCOS, AiZynthFinder [1].

Semi-Template-Based Methods

This approach represents a middle ground. It first identifies the reaction center to create synthons (hypothetical intermediate structures), which are then completed into realistic reactants.

- Strengths: Reduces template redundancy and can handle a broader range of reactions than purely template-based methods [2].

- Weaknesses: Can struggle with complex, multi-center reactions [2].

- Examples: SemiRetro, Graph2Edits [2].

Template-Free Methods

These models directly generate reactant structures from the product without explicitly using reaction rules, treating the task as a machine translation problem where the product's molecular representation is "translated" into the reactants' representations.

- Strengths: High scalability and the potential to discover novel, non-template reactions [2] [1].

- Weaknesses: Early models suffered from generating invalid chemical structures and acted as "black boxes" with poor interpretability [3].

- Examples: RSGPT is a premier example—a generative transformer pre-trained on 10 billion data points that treats SMILES strings as a language [2]. RetroExplainer is another advanced model that formulates retrosynthesis as an interpretable molecular assembly process [3].
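To make the "translation" framing concrete, the sketch below splits product and reactant SMILES into atom-level tokens, which become the source and target sequences of a sequence-to-sequence model. The regular expression is adapted from the SMILES tokenization pattern popularized by the Molecular Transformer work; it is an illustrative assumption, and the tokenizers actually used by RSGPT or RetroExplainer may differ.

```python
import re
from typing import List, Tuple

# Bracket atoms, two-letter halogens, organic-subset atoms, bonds, branches, ring closures.
SMILES_TOKEN_RE = re.compile(
    r"(\[[^\]]+]|Br?|Cl?|N|O|S|P|F|I|b|c|n|o|s|p|\(|\)|\.|=|#|-|\+|\\|\/|:|~|@|\?|>|\*|\$|\%[0-9]{2}|[0-9])"
)


def tokenize_smiles(smiles: str) -> List[str]:
    """Split a SMILES string into tokens, failing loudly on characters the pattern skips."""
    tokens = SMILES_TOKEN_RE.findall(smiles)
    if "".join(tokens) != smiles:
        raise ValueError(f"untokenizable SMILES: {smiles!r}")
    return tokens


def make_translation_pair(product: str, reactants: str) -> Tuple[List[str], List[str]]:
    """Retrosynthesis direction: source = product tokens, target = reactant tokens."""
    return tokenize_smiles(product), tokenize_smiles(reactants)


src, tgt = make_translation_pair("CC(=O)OCC", "CC(=O)O.OCC")
print(src)  # ['C', 'C', '(', '=', 'O', ')', 'O', 'C', 'C']
print(tgt)
```

Note that `Cl` and `Br` are kept as single tokens, so the model never has to learn that `C` followed by `l` means chlorine.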

LLM-Based Planners

With the rise of large language models (LLMs), there is growing research into using them for end-to-end planning. These models, such as GPT-5, are prompted to generate a sequence of actions (a plan) from a formal description of a domain and a goal [4].

- Strengths: Have demonstrated strong performance across diverse planning domains and are highly flexible [4].

- Weaknesses: Performance can degrade on purely symbolic reasoning tasks without semantic clues, and they are not guaranteed to be complete or sound [4].

The Scientist's Toolkit: Research Reagents & Solutions

The following table details key software tools and resources that constitute the essential "research reagents" for scientists working in AI-driven synthesis planning.

Table 2: Essential Research Reagents and Software Solutions

| Tool / Resource Name | Type / Category | Primary Function in Research |
|---|---|---|
| USPTO Datasets [2] [3] | Benchmark Dataset | Provides standardized, real-world reaction data for training and evaluating retrosynthesis models. |
| RDChiral [2] [1] | Chemical Tool | An automated tool for extracting and applying reaction templates; used to validate chemical rationality and generate synthetic data. |
| VAL [4] | Validation Tool | A sound validator for plans described in PDDL; ensures the correctness of generated plans in classical planning benchmarks. |
| AiZynthFinder [1] | Retrosynthesis Software | An open-source, template-based tool for rapid retrosynthetic route search and expansion. |
| ASKCOS [1] | Retrosynthesis Platform | An integrated software platform that incorporates template-based planning and has been demonstrated in robotic synthesis workflows. |
| Viz Palette [5] | Accessibility Tool | A tool to test color palettes in data visualizations for accessibility, ensuring interpretations are clear to audiences with color vision deficiencies. |

Case Studies and Integrated Workflows

The ultimate test for AI synthesis planning is its integration into full-cycle, automated workflows. A prominent case study combined computer-aided retrosynthesis planning with a robotically reconfigurable flow apparatus for the on-demand synthesis of 15 small-molecule compounds, including the nonsteroidal anti-inflammatory drug Celecoxib and the blood thinner Warfarin [6] [1]. This end-to-end workflow demonstrates the practical potential of these technologies.

In this workflow, the AI first proposes a de novo synthesis plan. Crucially, a human expert then refines the plan and the chemical recipe files to address practical considerations such as precise stereochemistry, solvent choices, and incompatibilities with the microfluidic flow system. Finally, the robotically controlled system executes the synthesis [6]. This highlights the current paradigm of human-AI collaboration, where AI augments the chemist's capabilities by handling vast literature searches and generating initial proposals, while the chemist provides critical expert oversight to ensure experimental feasibility [6] [1].

Another significant case is the BigTensorDB system, which introduces a tensor database to empower AI for science. It addresses a key limitation in existing retrosynthetic analysis—the frequent omission of reaction conditions—by designing a tensor schema to store all key information, including reaction conditions. It supports a full-cycle pipeline from predicting reaction paths to feasibility analysis, aiming to reduce user cost and improve prediction accuracy [7].

Future Outlook

The field of AI-driven synthesis is rapidly advancing, with several key trends shaping its future:

- Reinforcement Learning (RL): Inspired by strategies in LLMs, reinforcement learning from AI feedback (RLAIF) is being used to better capture the relationships between products, reactants, and templates, as seen in the training of RSGPT [2].

- Addressing Data Scarcity: The use of synthetically generated data is a pivotal innovation. Models like RSGPT are pre-trained on billions of algorithmically generated reactions to overcome the bottleneck of limited real-world data [2].

- The Push for Autonomy: The next evolutionary step is the move from automated synthesis (requiring human input to define parameters) to truly autonomous synthesis—self-governing systems that can adjust to parameters like stereoselectivity and yield in real-time without human intervention [6].

- Interpretability: Tools like RetroExplainer are leading a push towards more interpretable AI, providing quantitative attributions and transparent decision-making processes to build trust and provide deeper insights for chemists [3].

In conclusion, while AI-driven synthesis planners have reached a level of performance competitive with both human experts and classical algorithms in specific domains, the most powerful applications arise from their integration into the chemist's workflow. The future points toward more autonomous, data-efficient, and interpretable systems that will continue to transform the landscape of chemical discovery and drug development.

The rapid integration of artificial intelligence into scientific domains, particularly computer-aided synthesis planning (CASP), has created an urgent need for rigorous performance benchmarking. For researchers, scientists, and drug development professionals, selecting the appropriate AI technology is no longer a matter of trend-following but of empirical validation against specific research problems. The global AI in CASP market, valued at USD 3.1 billion in 2025 and projected to reach USD 82.2 billion by 2035, reflects both the explosive investment in and expectations for these technologies [8]. This growth is fueled by demonstrated successes, such as AI-driven reduction of drug discovery timelines by 30% to 50% in preclinical phases and the application of generative AI models for novel molecule discovery [8].

Within this context, this guide provides an objective, data-driven comparison of three foundational AI technologies: Machine Learning (ML), Deep Learning (DL), and Neural Networks (NNs). It focuses on their performance characteristics for synthesis planning applications. By synthesizing current benchmark results and experimental protocols, we aim to equip researchers with an evidence-based framework for technology selection that aligns computational capabilities with research objectives in molecular design and reaction optimization.

Technology Definitions and Core Architectural Differences

Conceptual Hierarchy and Relationships

Artificial Intelligence encompasses techniques that enable machines to mimic human intelligence. Within this field:

- Machine Learning (ML) is a subset of AI focused on building systems that learn from data with minimal human intervention. ML algorithms automatically discover patterns and insights from data to make predictions or classifications [9].

- Deep Learning (DL) is a specialized subfield of machine learning that utilizes artificial neural networks with many layers. The "deep" in deep learning refers to these multiple layers within the network that enable learning increasingly abstract features directly from raw data [10].

- Neural Networks (NNs) form the architectural foundation of deep learning models. Inspired by the human brain's network of neurons, these interconnected layers of artificial neurons are the computational engines that allow deep learning models to recognize complex patterns [10].

Key Architectural and Operational Characteristics

The fundamental differences between these technologies manifest in their data handling, feature engineering requirements, and architectural complexity:

Table: Fundamental Characteristics of AI Technologies

| Characteristic | Machine Learning (ML) | Deep Learning (DL) | Neural Networks (NNs) |
|---|---|---|---|
| Data Representation | Relies on feature engineering; domain experts often manually extract relevant features [9] | Automates feature engineering; learns hierarchical representations directly from raw data [9] | Computational units organized in layers; complexity varies from simple to deep architectures |
| Model Complexity | Fewer parameters and simpler structure (e.g., decision trees, SVM) [9] | Highly complex with many interconnected layers and nodes [9] | Architecture-dependent (from single-layer perceptrons to deep networks with millions of parameters) |
| Feature Engineering | Requires significant human expertise and domain knowledge for feature selection [10] | Minimal feature engineering needed; models discover relevant features automatically [10] | Feature learning capability scales with network depth and architecture |
| Interpretability | Generally more interpretable; easier to understand decision processes [10] [9] | "Black-box" nature makes interpretation challenging [10] [9] | Varies from interpretable shallow networks to complex deep networks with limited transparency |

Figure 1: Hierarchical relationship between AI technologies and their application in synthesis planning. Neural Networks form the architectural foundation for Deep Learning, which is itself a specialized subset of Machine Learning.

Performance Benchmarking: Experimental Data and Comparative Analysis

Comprehensive Benchmark Studies on Structured Data

Recent large-scale benchmarking provides crucial insights for synthesis planning applications, where data often involves structured molecular representations and reaction parameters. A comprehensive 2024 benchmark evaluating 111 datasets with 20 different models for regression and classification tasks revealed that deep learning models do not universally outperform traditional methods on structured data [11]. The study found DL performance was frequently equivalent or inferior to Gradient Boosting Machines (GBMs), with only specific dataset characteristics favoring deep learning approaches [11].

A 2024 study in Scientific Reports, although set outside chemistry, compared models on highly stationary time-series data (vehicle flow prediction) and found that XGBoost significantly outperformed RNN-LSTM models, particularly on MAE and MSE [12]. This finding shows that less complex algorithms can achieve superior performance on data with consistent statistical properties, challenging the assumption that deeper models always deliver better results.

Quantitative Performance Comparison

Table: Performance Benchmarking Across AI Technologies

| Performance Metric | Traditional ML | Deep Learning | Experimental Context |
|---|---|---|---|
| Structured Data Accuracy | Frequently outperforms or matches DL [11] | Equivalent or inferior to GBMs on many tabular datasets [11] | Benchmark on 111 datasets with 20 models [11] |
| High Stationarity Data Prediction | XGBoost: Superior MAE/MSE [12] | RNN-LSTM: Higher error rates [12] | Vehicle flow prediction (stationary time series) [12] |
| Training Data Requirements | Performs well with smaller datasets (hundreds to thousands of examples) [10] [9] | Requires large datasets (thousands to millions of examples) [10] [9] | General model performance characteristics |
| Computational Resources | Lower requirements; can run on standard CPUs [10] | High requirements; typically needs powerful GPUs [10] | Training time and hardware requirements |
| Training Speed | Faster training cycles [9] | Slower training due to complexity and data volume [9] | Time to model convergence |
| Interpretability | High; transparent decision processes [10] [9] | Low; "black-box" character [10] [9] | Ability to explain model decisions |

Specialized Performance in Synthesis Planning Applications

In CASP-specific applications, deep learning has demonstrated particular value for complex pattern recognition tasks. The rise of generative AI models for novel molecule discovery has shown significant promise, with platforms like Insilico Medicine's Chemistry42 successfully identifying novel antibiotic candidates [8]. For reaction prediction and retrosynthesis planning, deep learning models processing raw molecular representations have achieved state-of-the-art performance by learning complex structural relationships that challenge manual feature engineering approaches.

The 2025 AI performance landscape shows that smaller, more efficient models are increasingly competitive. Microsoft's Phi-3-mini with just 3.8 billion parameters achieves performance thresholds that required 540 billion parameters just two years earlier, making sophisticated AI more accessible for research institutions with limited computational resources [13].

Experimental Protocols and Methodologies

Benchmarking Framework for Synthesis Planning Algorithms

Robust evaluation of AI technologies for synthesis planning requires standardized methodologies that mirror real-world research challenges. The following experimental workflow provides a structured approach for comparative technology assessment:

Figure 2: Standardized experimental workflow for benchmarking AI technologies in synthesis planning applications

Detailed Methodological Components

Data Curation and Partitioning

- Dataset Composition: Curate diverse reaction datasets encompassing successful syntheses, failed reactions, and yield data. The CASP market analysis indicates pharmaceutical companies increasingly leverage proprietary reaction databases [8].

- Leakage-Aware Splitting: Implement time-based or cluster-based splitting to prevent data leakage and ensure realistic performance estimation. For stationary data similar to that in the Scientific Reports study, random splitting may suffice [12].

- Benchmark Scale: Comprehensive benchmarks should include sufficient datasets (dozens to hundreds) to enable statistically significant conclusions about performance differences [11].
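A time-based split can be sketched as follows, assuming each reaction record carries a publication or filing year; the record layout and the cutoff are hypothetical. Training on earlier reactions and testing on later ones approximates prospective use and keeps later chemistry out of the training data.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass(frozen=True)
class ReactionRecord:
    reaction_smiles: str  # "reactants>>product"
    year: int             # hypothetical field: publication or filing year


def time_split(
    records: Sequence[ReactionRecord], cutoff_year: int
) -> Tuple[List[ReactionRecord], List[ReactionRecord]]:
    """Train on reactions before `cutoff_year`, test on reactions from `cutoff_year` onward."""
    train = [r for r in records if r.year < cutoff_year]
    test = [r for r in records if r.year >= cutoff_year]
    return train, test


records = [
    ReactionRecord("CC(=O)O.OCC>>CC(=O)OCC", 2010),
    ReactionRecord("CC(=O)Cl.NCC>>CC(=O)NCC", 2015),
    ReactionRecord("Brc1ccccc1.OB(O)c1ccccc1>>c1ccc(-c2ccccc2)cc1", 2018),
    ReactionRecord("CC(=O)O.OC>>CC(=O)OC", 2021),
]
train, test = time_split(records, cutoff_year=2018)
print(len(train), len(test))  # 2 2
```

A cluster-based split follows the same pattern, with a cluster label (for example from fingerprint clustering) taking the place of the year.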

Feature Engineering Protocols

- ML Approaches: Implement domain-informed feature extraction including molecular descriptors (MW, logP, HBD/HBA), fingerprint representations (ECFP, MACCS), and reaction conditions (temperature, catalyst, solvent).

- DL Approaches: Utilize raw or minimally processed inputs (SMILES strings, molecular graphs, reaction SMARTS) allowing models to learn relevant representations automatically [9].
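On the ML side, hand-engineered features end up as one fixed-length numeric vector per reaction. The sketch below assumes precomputed descriptor values and small, hypothetical vocabularies of solvents and catalysts; a real pipeline would compute descriptors and fingerprints with a cheminformatics toolkit and usually scale the numeric columns.

```python
from typing import Dict, List, Sequence

# Hypothetical closed vocabularies; a real project would derive these from its data.
SOLVENTS = ["DMF", "THF", "toluene", "water"]
CATALYSTS = ["none", "Pd(PPh3)4", "CuI"]
DESCRIPTOR_ORDER = ("MW", "logP", "HBD", "HBA")


def one_hot(value: str, vocabulary: Sequence[str]) -> List[float]:
    """Encode a categorical condition as a 0/1 vector over a fixed vocabulary."""
    if value not in vocabulary:
        raise ValueError(f"{value!r} is not in the vocabulary")
    return [1.0 if v == value else 0.0 for v in vocabulary]


def reaction_features(
    descriptors: Dict[str, float],  # precomputed molecular descriptors of the product
    temperature_c: float,
    solvent: str,
    catalyst: str,
) -> List[float]:
    """Concatenate descriptors, temperature and one-hot conditions into one vector."""
    desc = [float(descriptors[name]) for name in DESCRIPTOR_ORDER]
    return desc + [float(temperature_c)] + one_hot(solvent, SOLVENTS) + one_hot(catalyst, CATALYSTS)


# Illustrative descriptor values, not computed from a real structure here.
vec = reaction_features({"MW": 180.16, "logP": 1.19, "HBD": 1, "HBA": 4}, 80.0, "toluene", "Pd(PPh3)4")
print(len(vec))  # 12
```

A fingerprint such as ECFP or MACCS would be appended as an additional block of 0/1 entries; the fixed column order is what lets models like XGBoost consume the result directly.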

Model Training and Validation

- Hyperparameter Optimization: Employ Bayesian optimization or grid search with appropriate cross-validation strategies. The 2024 benchmark emphasized the importance of rigorous hyperparameter tuning for fair comparisons [11].

- Validation Metrics: Track multiple metrics including accuracy, precision-recall curves, and chemical validity of outputs. For regression tasks (e.g., yield prediction), include MAE, MSE, and R² values [12].

- Statistical Testing: Implement appropriate statistical tests (e.g., paired t-tests, Wilcoxon signed-rank tests) to establish significance of performance differences observed between approaches [11].
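The regression metrics and a paired significance test can be computed without external dependencies. The sketch below implements MAE, MSE and R² directly, plus an exact two-sided Wilcoxon signed-rank test that enumerates every sign assignment. That enumeration is practical only for about 20 or fewer paired results (for example per-dataset scores of two models); for larger studies a library routine such as `scipy.stats.wilcoxon` would normally be used. All scores in the usage example are made up.

```python
from itertools import product
from statistics import mean
from typing import List, Sequence


def mae(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    return mean(abs(t - p) for t, p in zip(y_true, y_pred))


def mse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    return mean((t - p) ** 2 for t, p in zip(y_true, y_pred))


def r2(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    mu = mean(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mu) ** 2 for t in y_true)
    if ss_tot == 0:
        raise ValueError("R^2 is undefined for constant targets")
    return 1.0 - ss_res / ss_tot


def _average_ranks(values: Sequence[float]) -> List[float]:
    """Rank values 1..n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1  # 1-based average rank of the tie group
        i = j + 1
    return ranks


def wilcoxon_exact(a: Sequence[float], b: Sequence[float]) -> float:
    """Exact two-sided p-value of the Wilcoxon signed-rank test for paired scores."""
    diffs = [x - y for x, y in zip(a, b) if x != y]  # zero differences are dropped
    n = len(diffs)
    if n == 0:
        return 1.0
    ranks = _average_ranks([abs(d) for d in diffs])
    w_obs = sum(r for r, d in zip(ranks, diffs) if d > 0)
    center = sum(ranks) / 2  # expected W+ under the null hypothesis
    extreme = 0
    for signs in product((0, 1), repeat=n):  # each sign pattern is equally likely under H0
        w = sum(r for r, s in zip(ranks, signs) if s)
        if abs(w - center) >= abs(w_obs - center) - 1e-12:
            extreme += 1
    return extreme / 2 ** n


y_true = [50.0, 60.0, 70.0, 80.0]  # e.g. measured yields (%)
y_pred = [52.0, 58.0, 71.0, 77.0]
print(mae(y_true, y_pred), mse(y_true, y_pred), r2(y_true, y_pred))

model_a = [0.81, 0.77, 0.90, 0.68, 0.73, 0.85]  # hypothetical per-dataset scores
model_b = [0.78, 0.75, 0.86, 0.66, 0.70, 0.80]
print(wilcoxon_exact(model_a, model_b))  # 0.03125: model_a wins on all 6 datasets
```

The Wilcoxon test is often preferred over a paired t-test across benchmark datasets because per-dataset score differences are rarely normally distributed.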

Table: Essential Resources for AI-Driven Synthesis Planning Research

| Resource Category | Specific Tools & Platforms | Research Application | Technology Alignment |
|---|---|---|---|
| ML Libraries | Scikit-learn, XGBoost | Traditional ML model implementation for structured reaction data | Machine Learning |
| DL Frameworks & Cheminformatics Toolkits | TensorFlow, PyTorch, DeepChem (DL); RDKit, OpenEye (cheminformatics) | Neural network development for molecular pattern recognition [8] | Deep Learning |
| Molecular Representation | SMILES, SELFIES, Molecular Graphs, 3D Conformers | Input data preparation for reaction prediction | Deep Learning |
| Benchmarking Platforms | MLPerf, Hugging Face Transformers, OpenChem | Performance evaluation and model comparison [14] | All Technologies |
| Specialized CASP Software | ChemPlanner (Elsevier), Chematica (Merck KGaA), Schrödinger Suite | Commercial synthesis planning and reaction prediction [8] | Integrated Approaches |
| Computing Infrastructure | GPU Clusters, Cloud Computing (AWS, Azure, GCP), High-Performance Computing | Model training, particularly for deep learning approaches [10] | Deep Learning |

The benchmarking data presented reveals a nuanced technology landscape where no single approach universally dominates synthesis planning applications. Traditional machine learning methods, particularly Gradient Boosting Machines like XGBoost, demonstrate superior performance on structured datasets with stationary characteristics, offering greater interpretability and computational efficiency [11] [12]. Deep learning approaches excel at processing raw molecular representations and identifying complex patterns in large, diverse reaction datasets, enabling end-to-end learning without extensive feature engineering [9].

For research teams embarking on AI-driven synthesis planning projects, the optimal technology selection depends critically on specific research objectives, data characteristics, and computational resources. ML approaches provide a robust starting point for well-defined prediction tasks with structured data, while DL methods offer powerful capabilities for novel chemical space exploration and complex pattern recognition in large, heterogeneous reaction datasets. As the 2025 AI landscape evolves, the convergence of model performance and emergence of more efficient architectures continues to expand the accessible toolkit for drug development professionals [13].

The pharmaceutical industry faces a persistent challenge: the exorbitant cost and protracted timeline of bringing new therapeutics to market. Traditional drug discovery remains a labor-intensive process, typically requiring 14.6 years and approximately $2.6 billion per approved drug [15]. This inefficiency creates significant barriers to delivering novel treatments to patients. Artificial intelligence (AI), particularly in Computer-Aided Synthesis Planning (CASP), is emerging as a transformative response to this challenge. The global AI in CASP market, valued at $2.13–3.1 billion in 2024–2025, is projected to grow at a compound annual growth rate (CAGR) of 38.8–41.4%, reaching $68.06–82.2 billion by 2034–2035 [8] [16]. This growth reflects the increasing reliance on AI-driven approaches to streamline the drug development pipeline. AI-enabled workflows are demonstrating concrete potential to reduce discovery timelines by 30–50% and cut associated costs by up to 40% by accelerating target identification and compound design and by optimizing synthetic routes [8] [15]. This guide provides a performance benchmark of current AI-driven synthesis planning algorithms, comparing their methodologies, efficacy, and practical implementation to guide researchers in adopting these technologies.

Benchmarking AI-Driven Synthesis Planning Algorithms

Performance Metrics and Market Context

AI-driven CASP tools are benchmarked on their ability to accelerate the identification of viable synthetic pathways, reduce reliance on traditional trial-and-error experimentation, and lower overall development costs. The adoption of these tools is concentrated in pharmaceutical and biotechnology companies, which constitute over 70% of the current market [16]. This dominance is driven by immense pressure to improve R&D productivity. According to a 2023 survey, 75% of 'AI-first' biotech firms deeply integrate AI into discovery, whereas traditional pharma companies lag with adoption rates approximately five times lower [15].

Table 1: Global Market Overview for AI in Computer-Aided Synthesis Planning

| Metric | 2024/2025 Value | 2034/2035 Projection | CAGR | Key Drivers |
|---|---|---|---|---|
| Global Market Size | $2.13–3.1 Billion [8] [16] | $68.06–82.2 Billion [8] [16] | 38.8–41.4% [8] [16] | Demand for faster drug discovery, cost reduction [8] |
| North America Market Share | 42.6% ($0.90 Billion) [16] | Projected 38.7% share by 2035 [8] | ~39.8% (U.S. specific) [16] | High R&D expenditure, strong digital infrastructure [8] [16] |
| Software Segment Share | 65.8% of market by offering [16] | 65.5% by 2035 [8] | (Aligned with overall market) | Reliance on proprietary AI platforms and algorithms [8] |
| Small Molecule Drug Discovery | 75.2% of market by application [16] | Dominance through 2035 [8] | (Aligned with overall market) | AI's capability to significantly shorten discovery timelines [8] [15] |
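The CAGR range in Table 1 can be cross-checked against its start and end values. The sketch assumes a 10-year horizon for both ranges (2025 to 2035, and 2024 to 2034); under that assumption the formula reproduces the reported 38.8% and 41.4%.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    if start_value <= 0 or end_value <= 0 or years <= 0:
        raise ValueError("values and horizon must be positive")
    return (end_value / start_value) ** (1.0 / years) - 1.0


def project(start_value: float, rate: float, years: float) -> float:
    """Value after compounding `rate` annually for `years` years."""
    return start_value * (1.0 + rate) ** years


# Market sizes in USD billions, taken from Table 1.
print(f"{cagr(3.1, 82.2, 10):.1%}")    # 2025 -> 2035: 38.8%
print(f"{cagr(2.13, 68.06, 10):.1%}")  # 2024 -> 2034: 41.4%
```

The same two functions make it easy to see how sensitive such projections are: a few points of CAGR over a decade changes the end value by billions.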

Comparative Analysis of Leading AI-CASP Platforms and Algorithms

Different AI-CASP platforms employ varied technological approaches, from evidence-based systems using large knowledge graphs to generative AI models that propose novel synthetic routes. The performance of these systems is typically measured by their throughput, accuracy, and ability to identify efficient, scalable, and green chemistry-compliant pathways.

Table 2: Benchmarking Key AI-CASP Platforms, Algorithms, and Performance

| Platform/Algorithm | Technology/Core Approach | Reported Performance & Benchmarking Data | Key Advantages | Documented Limitations/Challenges |
|---|---|---|---|---|
| ASPIRE AICP [17] | Evidence-based synthesis planning using a knowledge graph of 1.2M chemical reactions. | Identified viable synthesis routes for 2,000 target molecules in ~40 minutes via query optimization and data engineering [17]. | High-throughput capability; based on known, validated reactions. | Scalability can be challenging with extremely large or complex knowledge graphs. |
| Generative AI & Deep Learning Models (e.g., Chemistry42, Centaur Chemist) [8] [15] [18] | Generative models (e.g., Transformer networks, GANs) for novel molecule and pathway design. | Reduced drug discovery timelines from 5 years to 12-18 months in specific cases [15]. Insilico Medicine identified a novel antibiotic candidate using its generative AI platform [8]. | Capable of proposing novel, non-obvious disconnections and structures. | Can propose routes that are synthetically challenging; "black box" nature can lack transparency. |
| Retrosynthesis Platforms (e.g., Synthia, IBM RXN) [18] | Machine Learning combined with expert-encoded reaction rules or transformer networks. | IBM RXN predicts reaction outcomes with over 90% accuracy [18]. Synthia reduced a complex drug synthesis from 12 steps to 3 [18]. | Provides realistic, lab-ready pathways; high accuracy. | Performance is tied to the quality and breadth of the underlying training data and rules. |
| Explainable AI (XAI) Approaches [16] | Focus on providing transparent and interpretable synthesis recommendations. | Emerging trend driven by regulatory demands for clarity in AI-generated synthetic routes [16]. | Aids in regulatory compliance and chemist trust. | May trade off some level of complexity or novelty for interpretability. |

Experimental Protocols for Benchmarking AI-CASP Performance

A critical component of integrating AI-CASP into pharmaceutical R&D is the rigorous, standardized evaluation of its performance. The following protocols outline a benchmark framework adapted from recent high-throughput and validation studies.

Protocol 1: High-Throughput Synthesis Route Finding

This protocol is designed to benchmark the speed and scalability of AI-CASP systems, as demonstrated in the ASPIRE Integrated Computational Platform (AICP) study [17].

- Objective: To measure the time and computational resources required for an AI-CASP platform to generate viable synthetic routes for a large, diverse set of target molecules.

- Target Molecule Set: A predefined set of 2,000 target molecules with varying complexity, molecular weight, and structural features is used as the benchmark query [17].

- Data Source and Engineering: The platform's knowledge graph must be constructed from large, curated reaction datasets (e.g., derived from USPTO and SAVI reaction datasets). Domain-driven data engineering and query optimization techniques are critical success factors [17].

- Performance Metrics:

- Total Execution Time: The time elapsed from query initiation to the generation of a complete set of suggested routes. The benchmark threshold is ~40 minutes for 2,000 molecules [17].

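- Throughput (Molecules per Minute): The number of target molecules processed per minute of total execution time. For example, 2,000 molecules in ~40 minutes corresponds to ~50 molecules/minute.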

- Computational Resource Utilization: Monitoring of CPU, GPU, and memory usage during the process.

- Validation: Generated routes should be evaluated by expert chemists for synthetic feasibility, or preferably, a subset should be validated through experimental testing in the laboratory.
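The timing and resource metrics above can be captured with a small harness. This sketch is illustrative only, and it rests on several assumptions:

- `plan` is a hypothetical stand-in for a query to whichever AI-CASP platform is being benchmarked. No real platform API is assumed.
- `tracemalloc` tracks only Python-heap allocations, not total process or GPU memory.
- GPU utilization would need separate vendor tooling such as `nvidia-smi`.

```python
import time
import tracemalloc
from typing import Callable, Iterable, List


def throughput_per_minute(n_molecules: int, total_minutes: float) -> float:
    """Molecules processed per minute of total execution time."""
    return n_molecules / total_minutes


def benchmark_route_finding(plan: Callable[[str], List[object]],
                            targets: Iterable[str]) -> dict:
    """Run a (hypothetical) planner over every target and record time and memory.

    `plan` stands in for one AI-CASP query: it receives a target SMILES string
    and returns a list of proposed routes (empty if no route was found).
    """
    targets = list(targets)
    n_with_routes = 0
    tracemalloc.start()                   # Python-heap allocations only
    wall_start = time.perf_counter()      # wall-clock timer
    cpu_start = time.process_time()       # CPU time of this process
    try:
        for smiles in targets:
            if plan(smiles):
                n_with_routes += 1
    finally:
        _, peak_bytes = tracemalloc.get_traced_memory()
        tracemalloc.stop()
    wall_minutes = (time.perf_counter() - wall_start) / 60.0
    cpu_seconds = time.process_time() - cpu_start
    return {
        "n_targets": len(targets),
        "n_with_routes": n_with_routes,
        "total_minutes": wall_minutes,
        "molecules_per_minute": (throughput_per_minute(len(targets), wall_minutes)
                                 if wall_minutes > 0 else float("inf")),
        "cpu_seconds": cpu_seconds,
        "peak_python_heap_mb": peak_bytes / 1e6,
    }


# Toy usage: a dummy planner that "finds" a route only when the SMILES contains "N".
def dummy_plan(smiles: str) -> List[object]:
    return ["route"] if "N" in smiles else []


report = benchmark_route_finding(dummy_plan, ["CCO", "CCN", "CC(=O)N", "CC(=O)O"])
```

With the ASPIRE figures, `throughput_per_minute(2000, 40)` gives 50.0 molecules per minute.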

Protocol 2: Validation with Synthetic Data and Equivalence Testing

This protocol uses synthetic data to test whether the conclusions of a benchmark study are robust and reproducible. It is adapted from a computational study protocol originally developed for microbiome data [19].

- Objective: To test whether performance conclusions about the methods being compared still hold on synthetic data that mimics the real experimental data. Here those methods are AI-CASP tools; in the original microbiome study they were differential abundance tests.

- Synthetic Data Generation: Employ at least two distinct statistical or AI-based tools to generate synthetic datasets that mirror the characteristics of 38 or more experimental datasets used in an original study [19].

- Equivalence Testing: Conduct statistical equivalence tests on a non-redundant subset of 46 or more data characteristics to compare the synthetic data with the original experimental data. This is complemented by principal component analysis (PCA) for an overall assessment of similarity [19].

- Methodology Application: Apply the AI-CASP tools or methods being evaluated (e.g., 14 different analysis methods) to both the synthetic and the original experimental datasets.

- Performance Cross-Checking: Evaluate the consistency in significant feature identification and the correlation in the number of significant features found per tool between the synthetic and experimental data. This validates whether the original study's conclusions hold under controlled conditions [19].
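The cross-checking step can be made concrete with two simple statistics:

- the Jaccard overlap of each method's significant features between real and synthetic data;
- a Spearman rank correlation of the per-method counts of significant features.

This is a minimal sketch. It assumes each method's output has already been reduced to a set of significant feature identifiers. The method and feature names are hypothetical, and the original protocol [19] may use different consistency measures.

```python
import math
from typing import Dict, List, Set


def jaccard(a: Set[str], b: Set[str]) -> float:
    """Overlap of two sets of significant features (1.0 if both are empty)."""
    union = a | b
    return len(a & b) / len(union) if union else 1.0


def _average_ranks(values: List[float]) -> List[float]:
    """1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def _pearson(x: List[float], y: List[float]) -> float:
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy) if sx > 0 and sy > 0 else math.nan


def cross_check(experimental: Dict[str, Set[str]],
                synthetic: Dict[str, Set[str]]) -> dict:
    """Compare each method's significant features on real vs. synthetic data."""
    methods = sorted(experimental)
    overlap = {m: jaccard(experimental[m], synthetic[m]) for m in methods}
    exp_counts = [float(len(experimental[m])) for m in methods]
    syn_counts = [float(len(synthetic[m])) for m in methods]
    # Spearman correlation = Pearson correlation of the ranks.
    rho = _pearson(_average_ranks(exp_counts), _average_ranks(syn_counts))
    return {"jaccard_per_method": overlap, "spearman_counts": rho}


# Toy example with three hypothetical methods.
exp = {"tool_a": {"f1", "f2", "f3"}, "tool_b": {"f1"}, "tool_c": {"f1", "f2"}}
syn = {"tool_a": {"f1", "f2", "f4"}, "tool_b": {"f1"}, "tool_c": {"f2"}}
result = cross_check(exp, syn)
# result["spearman_counts"] is sqrt(3)/2, about 0.866
```

High overlap and a strong positive correlation would support the original study's conclusions. Low values would flag conclusions that depend on quirks of the experimental data.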

Workflow Visualization: AI-Driven Synthesis Planning in Drug Discovery

The integration of AI-CASP into the drug discovery pipeline creates a more efficient, iterative, and data-driven workflow. The diagram below illustrates the logical flow and feedback loops from initial target identification to final compound synthesis.

AI-Driven Drug Discovery Workflow

The Scientist's Toolkit: Essential Research Reagents and Solutions

The effective implementation and experimental validation of AI-generated synthesis plans rely on a suite of computational and laboratory resources. The following table details key components of this modern toolkit.

Table 3: Essential Research Reagents and Solutions for AI-CASP Implementation

| Tool/Reagent Category | Specific Examples | Function & Application in AI-CASP |
|---|---|---|
| Proprietary AI-CASP Software/Platforms | Synthia (Merck KGaA), ChemPlanner (Elsevier), Schrödinger Suite, Centaur Chemist (Exscientia), Chemistry42 (Insilico Medicine) [8] [18] | Core platforms for performing retrosynthetic analysis, predicting reaction outcomes, and generating viable synthesis pathways. The primary intellectual property driving AI-assisted chemistry. |
| Cheminformatics Libraries | RDKit, DeepChem, Chemprop (open source); OpenEye toolkits (commercial) [8] [18] | Open-source libraries democratize access to AI capabilities. These libraries are used for modeling molecular interactions, optimizing drug candidates, predicting properties (e.g., solubility, toxicity), and building custom models. |
| Large-Scale Reaction Databases | USPTO, SAVI [17] | Curated datasets of chemical reactions used to train and validate machine learning models and build evidence-based knowledge graphs for synthesis planning. |
| Laboratory Automation & Robotics | Integrated automated synthesis platforms (e.g., from Synple Chem) [8] | Enable high-throughput experimental validation of AI-proposed synthesis routes, translating digital plans into physical experiments rapidly and reliably. |
| Cloud Computing & SaaS Solutions | Cloud-based AI platforms (e.g., IBM RXN for Chemistry) [16] [18] | Provide scalable computational power and facilitate global collaboration, allowing researchers to access powerful CASP tools without specialized local hardware. |

The benchmarking data and experimental protocols presented here indicate that AI-driven synthesis planning has moved beyond speculation. It is now a practical, high-performance tool for the pharmaceutical goal of reducing timelines and costs. Reported results show that modern AI-CASP systems can cut route-finding time from weeks to minutes. In one case, a complex synthesis was shortened from 12 steps to 3, directly affecting cost and time to clinic [18]. The first AI-designed drug candidates are already in clinical trials, some reaching Phase I in roughly half the typical timeline. This suggests substantial potential for industry-wide transformation [15] [18].

Future advancements will hinge on overcoming key challenges, including the need for explainable AI (XAI) to build trust and meet regulatory standards, better integration with laboratory automation for closed-loop design-make-test-analyze cycles, and continued focus on green chemistry principles [8] [16]. Furthermore, as the field matures, the emergence of standardized benchmarking frameworks—similar to the one proposed for the ASPIRE platform—will be crucial for the objective evaluation and continuous improvement of these powerful tools [17]. For researchers and drug development professionals, the strategic adoption and critical evaluation of AI-CASP platforms is now a vital component of maintaining a competitive edge in the relentless pursuit of new therapeutics.

The integration of artificial intelligence (AI) into Computer-Aided Synthesis Planning (CASP) is fundamentally reshaping the landscape of drug research and development (R&D). The market is growing rapidly. It is projected to expand from approximately $2-3 billion in 2024-2025 to roughly $68-82 billion by 2034-2035, a compound annual growth rate (CAGR) of 38-43% [8] [16] [20]. This growth is driven primarily by AI's demonstrated capacity to accelerate drug discovery timelines and reduce R&D costs. AI also enables exploration of chemical spaces that traditional methods could not reach. This guide compares the performance of AI-driven CASP technologies objectively, detailing market trajectories, key experimental methodologies for benchmarking, and the essential tools behind these advances.

Market Size and Growth Projections

The AI in CASP market is on a steep upward trajectory, with its value expected to multiply more than twenty-fold over the next decade. The tables below summarize the key growth metrics and regional dynamics.

Table 1: Global AI in CASP Market Size and Growth Forecasts

| Base Year | Base Year Market Size (USD Billion) | Forecast Year | Projected Market Size (USD Billion) | Compound Annual Growth Rate (CAGR) | Source |
|---|---|---|---|---|---|
| 2024 | $2.13 | 2034 | $68.06 | 41.4% | [16] |
| 2025 | $3.10 | 2035 | $82.20 | 38.8% | [8] |
| - | - | 2029 | - | 43.8% | [20] |
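The growth rates in Table 1 follow from the standard compound annual growth rate formula, CAGR = (end / start)^(1 / years) - 1. The short check below re-derives them from the table's endpoints. It is a sanity check on the reported figures, not part of the cited forecasts.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


def project(start_value: float, rate: float, years: float) -> float:
    """Value after `years` of compounding at a constant annual `rate`."""
    return start_value * (1.0 + rate) ** years


# Re-derive the growth rates implied by the Table 1 endpoints (USD billion).
rate_16 = cagr(2.13, 68.06, 10)   # 2024 -> 2034, source [16]
rate_8 = cagr(3.10, 82.20, 10)    # 2025 -> 2035, source [8]
```

Both values round to the reported 41.4% and 38.8%, so the two forecasts are internally consistent even though they start from different base years.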

Table 2: Regional Market Dynamics and Key Application Segments

| Region | Market Share / Dominance | Key Drivers |
|---|---|---|
| North America | Largest share (38.7%-42.6%) [8] [16] | Substantial R&D investments, robust federal funding, advanced digital infrastructure, and presence of key industry players. |
| Asia-Pacific | Fastest-growing region (CAGR ~20.0%) [8] | Increasing AI adoption in drug discovery, innovations in combinatorial chemistry, and growing investments. |

| Application Segment | Market Dominance | Rationale |
|---|---|---|
| Small Molecule Drug Discovery | Dominant application [8] | AI's strong capability to accelerate therapeutic development and reduce discovery timelines for small molecules. |
| Organic Synthesis | Leading segment [20] | AI's pivotal role in enhancing the efficiency and accuracy of organic chemistry processes for pharmaceuticals and chemicals. |

Performance Benchmarking: AI-Driven CASP vs. Traditional Methods

Quantitative data from industry reports and peer-reviewed studies report substantial gains for AI-driven approaches over traditional, manual-heavy R&D processes in head-to-head comparisons.

Table 3: Performance Comparison: AI-Driven vs. Traditional Drug Discovery

| Performance Metric | Traditional Drug Discovery | AI-Driven Drug Discovery | Data Source / Context |
|---|---|---|---|
| Exploratory Research Phase | 4-5 years, over $100M [21] | Reduced to ~$70M [21] | Biomedical research efficiency |
| Success Rate | Lower comparative baseline [21] | 80%-90% for AI-discovered drugs [21] | Clinical trial success |
| Target Identification to Candidate | 4-6 years [8] | As low as 12 months [8] | Example: Exscientia's DSP-1181 |
| Small Molecule Optimization | ~5 years [8] | ~24 months [8] | Example: Exscientia's EXS-21546 |
| Compound Synthesis Time and Cost | Months per compound, thousands of dollars [22] | Compressed to days [22] | Onepot AI's service model |

Experimental Protocols for Benchmarking AI CASP Algorithms

Benchmarking the performance of AI-driven synthesis planning systems requires rigorous, standardized experimental protocols. The following section details a key methodology cited in recent research.

Protocol: Evaluating Synthetic Route Quality with Conditional Residual Energy-Based Models (CREBMs)

This protocol is based on a framework proposed to overcome the limitations of one-step retrosynthesis models, which often lack long-term planning and cannot control routes based on cost, yield, or step count [23].

1. Objective: To enhance the quality of synthetic routes generated by various base strategies by integrating an additional energy-based function that evaluates routes based on user-defined criteria.

2. Materials & Data:

- Base Model: Any existing data-driven retrosynthesis model (e.g., a one-step model trained on reaction databases).

- Benchmarking Dataset: A standardized set of target molecules for evaluation (e.g., from USPTO or Pistachio databases).

- Evaluation Criteria: Defined metrics such as Material Cost, Cumulative Predicted Yield, Number of Steps, and Feasibility (a binary metric indicating practical executability).

3. Methodology:

- Step 1 - Route Generation: The base model is used to generate a set of potential synthetic routes for each target molecule.

- Step 2 - Preference-Based Training: The CREBM is trained using a preference-based loss function. The model learns from existing data on synthetic routes to rank different options according to the desirable attributes. The training aims to ensure the model assigns a lower "energy" (i.e., higher probability) to routes that better satisfy the specified criteria [23].

- Step 3 - Quality Enhancement: The trained CREBM acts as a plug-and-play module. It re-ranks and refines the most probable synthetic routes generated by the base strategy, boosting the quality of the top-ranked outputs without altering the base model's core architecture [23].

- Step 4 - Validation: The top routes proposed by the enhanced system are evaluated computationally and, in advanced benchmarking, through wet-lab experimentation to confirm feasibility and yield.

4. Key Performance Indicators (KPIs):

- Top-1 Accuracy: The percentage of target molecules for which the top proposed route is deemed feasible and high-quality.

- Route Quality Score: A composite score based on the weighted evaluation criteria (cost, yield, steps).

- Improvement Margin: The performance boost (e.g., in accuracy) over the base model without the CREBM framework.
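A deliberately simplified model can illustrate the re-ranking idea behind Steps 2 and 3. The sketch below is not the CREBM architecture from [23]; it makes these assumptions:

- The energy is a linear function of hand-picked route features (hypothetical normalized cost and step count).
- The energy is trained with a pairwise logistic preference loss.
- Base-model candidates are re-ranked by log p_base minus energy, which corresponds to a distribution proportional to p_base(route) · exp(-E(route)).

All routes and numbers are toy values.

```python
import math
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass
class Route:
    base_logp: float        # log-probability from the base retrosynthesis model
    features: List[float]   # hypothetical criteria, e.g. [normalized cost, steps / 10]
    feasible: bool          # ground-truth label, used only for the Top-1 KPI


def energy(w: Sequence[float], route: Route) -> float:
    """Linear stand-in for the energy function: lower energy = preferred route."""
    return sum(wi * xi for wi, xi in zip(w, route.features))


def train_energy(pairs: List[Tuple[Route, Route]], n_features: int,
                 lr: float = 0.1, epochs: int = 200) -> List[float]:
    """Gradient descent on the preference loss -log sigmoid(E(worse) - E(better))."""
    w = [0.0] * n_features
    for _ in range(epochs):
        grad = [0.0] * n_features
        for better, worse in pairs:
            margin = energy(w, worse) - energy(w, better)
            coef = -1.0 / (1.0 + math.exp(margin))  # d(loss)/d(margin) = -(1 - sigmoid)
            for i in range(n_features):
                grad[i] += coef * (worse.features[i] - better.features[i])
        w = [wi - lr * gi / len(pairs) for wi, gi in zip(w, grad)]
    return w


def rank_base(routes: List[Route]) -> List[Route]:
    """Baseline: order candidates by base-model log-probability alone."""
    return sorted(routes, key=lambda r: r.base_logp, reverse=True)


def rank_residual(routes: List[Route], w: Sequence[float]) -> List[Route]:
    """Residual re-ranking by log p_base(route) - E(route)."""
    return sorted(routes, key=lambda r: r.base_logp - energy(w, r), reverse=True)


def top1_feasible(ranked_lists: List[List[Route]]) -> float:
    """Fraction of targets whose top-ranked route is feasible."""
    return sum(rs[0].feasible for rs in ranked_lists) / len(ranked_lists)


# Toy data: one preference pair for training, two targets for evaluation.
train_pairs = [(Route(0.0, [0.2, 0.3], True), Route(0.0, [0.8, 0.9], False))]
w = train_energy(train_pairs, n_features=2)

targets = [
    [Route(-0.5, [0.9, 0.8], False), Route(-0.7, [0.2, 0.3], True)],
    [Route(-0.2, [0.1, 0.2], True), Route(-1.0, [0.5, 0.5], False)],
]
base_top1 = top1_feasible([rank_base(rs) for rs in targets])
crebm_top1 = top1_feasible([rank_residual(rs, w) for rs in targets])
improvement = crebm_top1 - base_top1
```

In this toy case the base model ranks an infeasible route first for the first target, so `base_top1` is 0.5. After re-ranking, both targets have a feasible top route, giving `crebm_top1` of 1.0 and an improvement margin of 0.5. Keeping the base model fixed and only adding an energy term mirrors the plug-and-play design described in Step 3.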

Experimental Workflow Diagram

The following diagram illustrates the logical workflow of the CREBM benchmarking protocol.

The Scientist's Toolkit: Essential Research Reagents & Platforms

The implementation of AI in CASP relies on a synergistic ecosystem of computational tools, data sources, and physical laboratory systems.

Table 4: Key Research Reagents and Platforms in AI-Driven CASP

| Tool Category | Example Products/Platforms | Primary Function in AI-CASP Workflow |
|---|---|---|
| Software & AI Platforms | Schrödinger, BIOVIA (Dassault Systèmes), ChemPlanner (Elsevier), Chematica (Merck KGaA), PostEra, IKTOS, CREBM Framework [8] [23] | Provide the core AI algorithms for retrosynthetic analysis, reaction prediction, and route optimization. Act as the "brain" for synthesis planning. |
| Data & Libraries | Open-source reaction data (e.g., USPTO-derived datasets) and cheminformatics toolkits (e.g., RDKit), internal ELN data, commercial chemical libraries (e.g., Enamine) [16] [24] | Serve as the training data for AI models and the source for available starting materials. Data quality is paramount for model accuracy. |
| Computational Infrastructure | AWS, Google Cloud, Azure, High-Performance Computing (HPC) clusters [24] | Supply the processing power required to train and run complex AI models and conduct molecular simulations. |
| Automation & Robotics | Automated laboratories (e.g., Berkeley Lab's A-Lab), Onepot's POT-1 lab [21] [22] | Translate digital synthesis plans into physical experiments. Enable high-throughput experimentation and reproducible, data-rich execution. |
| Specialized AI Models | DeepChem, TensorFlow, PyTorch, AlphaFold, Generative AI models (e.g., Chemistry42) [8] [24] [21] | Offer specialized capabilities, from general-purpose ML to predicting protein structures or generating novel molecular structures. |

The market trajectory for AI in Computer-Aided Synthesis Planning is unequivocally steep and transformative for drug R&D. The convergence of massive datasets, sophisticated algorithms like CREBMs, and automated laboratory systems is creating a new paradigm where in-silico prediction and physical synthesis are tightly integrated. For researchers and drug development professionals, benchmarking these technologies requires a focus not only on predictive accuracy but also on the real-world feasibility, cost, and efficiency of the synthesized routes. As the technology matures, AI-driven CASP is set to evolve from a valuable tool to an indispensable cornerstone of modern chemical and pharmaceutical research, fundamentally expanding the realm of what is possible to synthesize and discover.

The Critical Role of Benchmarking in a Rapidly Evolving Field

In the dynamic field of AI-driven scientific discovery, robust benchmarking has emerged as the critical foundation for measuring progress, validating claims, and guiding future development. As artificial intelligence transforms domains from materials science to drug discovery, the community faces a pressing challenge: how to distinguish genuine capability from hype and ensure that these powerful tools deliver reproducible, real-world impact [25] [26]. This is particularly crucial in AI-driven synthesis planning, where algorithms promise to accelerate the design of novel molecules and materials but require rigorous evaluation to establish trust and utility within the scientific community.

The transition of AI from experimental curiosity to clinical utility has been remarkable. By 2025, over 75 AI-derived molecules have reached clinical stages, with platforms claiming to drastically shorten early-stage research and development timelines [25]. However, this rapid progress demands equally sophisticated benchmarking methodologies to answer the fundamental question: Is AI truly delivering better success, or just faster failures? [25] This article examines the current state of benchmarking for AI-driven synthesis planning through a comprehensive analysis of platforms, methodologies, and experimental protocols.

The Benchmarking Imperative in AI-Driven Science

The Challenge of Evaluating AI Performance

Benchmarking AI systems presents unique challenges that extend beyond traditional software evaluation. Computational metrics matter, but AI-driven scientific tools must above all be judged on whether they produce valid, reproducible scientific outcomes that advance research objectives [26]. AI capabilities scaled exponentially in 2025. Computational resources grew 4.4x yearly, training data tripled annually, and model parameters doubled yearly [27]. However, a profound disconnect exists between how AI is typically evaluated in academic benchmarks and how it is actually used in practical scientific workflows [27].

Analysis of real-world AI usage reveals that collaborative tasks like technical assistance, document review, and workflow optimization dominate practical applications, yet these are poorly captured by traditional abstract problem-solving benchmarks [27]. This discrepancy is particularly problematic for AI-driven synthesis planning, where success depends on seamless integration into complex research workflows spanning computational design and experimental validation.

The Consequences of Inadequate Benchmarking

Insufficient benchmarking methodologies carry significant risks for the field. Without standardized, rigorous evaluation frameworks, researchers struggle to:

- Compare different algorithmic approaches meaningfully

- Distinguish substantial advances from marginal, incremental improvements

- Translate computational predictions into laboratory success

- Allocate scarce research resources effectively

The drug discovery field exemplifies these challenges, where computational platforms promise to reduce failure rates and increase cost-effectiveness but require robust assessment to deliver on this potential [28]. Traditional benchmarking approaches often rely on static datasets with distributions that don't match real-world scenarios, potentially leading to overoptimistic performance estimates and disappointing real-world application [26].

Current Landscape of AI-Driven Synthesis Planning

Market Growth and Platform Diversity

The AI in Computer-Aided Synthesis Planning (CASP) market has experienced explosive growth, valued at $2.13 billion in 2024 and projected to reach approximately $68.06 billion by 2034, representing a remarkable 41.4% compound annual growth rate [16]. This surge reflects rapid integration of AI-driven algorithms that are transforming how chemists design, predict, and optimize synthetic routes for complex molecules.

North America dominates the market with a 42.6% share ($0.90 billion in 2024), driven by advanced digital infrastructure and active R&D ecosystems [16]. The United States alone accounted for $0.83 billion in 2024, expected to grow to $23.67 billion by 2034 at a 39.8% CAGR [16]. By application, drug discovery and medicinal chemistry represent 75.2% of the market, underscoring the pharmaceutical industry's leading role in adopting AI-CASP technologies [16].

Table: Global AI in Computer-Aided Synthesis Planning Market (2024)

| Category | Market Share/Value | Key Drivers |
|---|---|---|
| Global Market Value | $2.13 billion (2024) | AI-driven molecular design, reduced experimental timelines |
| Projected Value (2034) | $68.06 billion | 41.4% CAGR, industrial-scale automation |
| Regional Leadership | North America (42.6%) | Advanced R&D infrastructure, pharmaceutical investment |
| Leading Application | Drug Discovery & Medicinal Chemistry (75.2%) | Need for faster compound development, reduced R&D costs |
| Dominant Technology | Machine Learning/Deep Learning (80.3%) | Enhanced molecular design accuracy, route optimization |

Leading Platforms and Approaches

Multiple technological approaches have emerged in the AI-driven synthesis planning landscape, each with distinct strengths and benchmarking considerations:

Integrated Experimental Systems: Platforms like MIT's CRESt (Copilot for Real-world Experimental Scientists) combine multimodal AI with robotic equipment for high-throughput materials testing [29]. This system incorporates diverse information sources—scientific literature, chemical compositions, microstructural images—and uses robotic synthesis and characterization to create closed-loop optimization [29].

Generative Chemistry Platforms: Companies like Exscientia have pioneered generative AI for small-molecule design, claiming to compress design-make-test-learn cycles by approximately 70% and require 10x fewer synthesized compounds than industry norms [25]. Their approach integrates algorithmic creativity with human domain expertise in a "Centaur Chemist" model [25].

Physics-Enabled Design: Schrödinger's platform exemplifies physics-based approaches, with their TYK2 inhibitor, zasocitinib, advancing to Phase III clinical trials [25]. This demonstrates how physics-enabled design strategies can reach late-stage clinical testing.

Phenomics-First Systems: Companies like Recursion leverage phenotypic screening combined with AI analysis, creating extensive biological data resources for discovery [25]. The 2024 Recursion-Exscientia merger highlights the trend toward integrating phenotypic screening with automated precision chemistry [25].

Table: Leading AI-Driven Discovery Platforms and Their Clinical Progress

| Platform/Company | Core Approach | Key Clinical Developments | Benchmarking Considerations |
|---|---|---|---|
| Exscientia | Generative Chemistry + Automated Design | Multiple clinical compounds; DSP-1181 was the first AI-designed drug to enter Phase I (2020) | Compression of design cycles; reduction in synthesized compounds needed |
| Insilico Medicine | Generative AI Target-to-Drug | ISM001-055 (TNIK inhibitor) Phase IIa results in idiopathic pulmonary fibrosis | Target-to-preclinical-candidate timeline (~18 months for the IPF program) |
| Schrödinger | Physics-Enabled Molecular Simulation | TAK-279 (zasocitinib, TYK2 inhibitor) advanced to Phase III trials | Success in late-stage clinical development |
| Recursion | Phenomic Screening + AI Analysis | Integrated with Exscientia post-merger; multiple oncology programs | Scale of biological data generation; integration of phenomics with chemistry |
| BenevolentAI | Knowledge-Graph Driven Discovery | Multiple clinical-stage candidates across therapeutic areas | Target identification and validation capabilities |

Benchmarking Frameworks and Methodologies

Establishing Robust Benchmarking Protocols

Effective benchmarking of AI-driven synthesis planning requires carefully designed protocols that reflect real-world applications. The CARA (Compound Activity benchmark for Real-world Applications) framework exemplifies this approach through several key design principles [26]:

Assay Type Distinction: CARA carefully distinguishes between Virtual Screening (VS) and Lead Optimization (LO) assays, reflecting their different data distribution patterns and practical applications [26]. VS assays typically contain compounds with diffuse, widespread similarity patterns, while LO assays feature aggregated, highly similar congeneric compounds [26].

Appropriate Train-Test Splitting: The benchmark designs specific data splitting schemes for different task types to avoid overestimation of model performance and ensure realistic evaluation [26].

Relevant Evaluation Metrics: Beyond traditional metrics like AUC-ROC, CARA emphasizes interpretable metrics that align with practical decision-making needs in drug discovery [26].
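The VS/LO distinction rests on how similar the compounds within an assay are to one another. The sketch below is a rough illustration only, not CARA's actual assignment procedure. It labels an assay by its mean pairwise Tanimoto similarity, under two assumptions:

- Fingerprints are already available as sets of "on" bit indices. In practice they might be circular fingerprints computed with a toolkit such as RDKit.
- The cut-off is a hypothetical 0.5.

```python
from itertools import combinations
from typing import FrozenSet, List


def tanimoto(a: FrozenSet[int], b: FrozenSet[int]) -> float:
    """Tanimoto similarity of two fingerprints given as sets of 'on' bit indices."""
    union = len(a | b)
    return len(a & b) / union if union else 1.0


def mean_pairwise_similarity(fps: List[FrozenSet[int]]) -> float:
    """Average Tanimoto similarity over all compound pairs (needs >= 2 compounds)."""
    pairs = list(combinations(fps, 2))
    return sum(tanimoto(a, b) for a, b in pairs) / len(pairs)


def label_assay(fps: List[FrozenSet[int]], threshold: float = 0.5) -> str:
    """Heuristic: congeneric series (LO-like) if compounds are, on average, highly similar."""
    return "LO-like" if mean_pairwise_similarity(fps) >= threshold else "VS-like"


# Toy fingerprints: a congeneric series sharing a core vs. structurally unrelated hits.
congeneric = [frozenset({1, 2, 3, 4}), frozenset({1, 2, 3, 5}), frozenset({1, 2, 3, 4, 5})]
diverse = [frozenset({1, 2}), frozenset({3, 4}), frozenset({5, 6})]
```

Here the congeneric set has a mean similarity of about 0.73 and is labeled LO-like. The diverse set shares no bits and is labeled VS-like. Per-assay labels of this kind are what allow the benchmark to apply different splitting schemes and metrics to each task type.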

Similar principles apply to materials science platforms like CRESt, which uses multimodal feedback—literature knowledge, experimental data, human input—to refine its active learning approach and continuously improve experimental design [29].

Experimental Workflow for Benchmarking

The following diagram illustrates a comprehensive benchmarking workflow for AI-driven synthesis planning platforms, integrating computational and experimental validation:

AI Synthesis Planning Benchmark Workflow

Key Performance Metrics and Evaluation

Benchmarking AI-driven synthesis platforms requires multiple complementary metrics to capture different aspects of performance:

Computational Efficiency: Measures include time required for synthesis route prediction, computational resources consumed, and scalability to large compound libraries.

Prediction Accuracy: For virtual screening, metrics include enrichment factors, recall rates at different thresholds, and area under precision-recall curves [26]. For lead optimization, performance is measured by the ability to predict activity trends among similar compounds and identify activity cliffs [26].

Experimental Success: Ultimately, platforms must be evaluated by their ability to produce molecules that succeed in laboratory validation. Key metrics include synthesis success rate, compound purity and yield, and correlation between predicted and measured properties.

Practical Utility: This encompasses broader measures such as reduction in discovery timelines, cost savings, and success in advancing candidates to clinical development [25].

Table: Core Benchmarking Metrics for AI-Driven Synthesis Planning

| Metric Category | Specific Metrics | Interpretation & Significance |
|---|---|---|
| Computational Performance | Route Prediction Time, Resource Utilization, Throughput | Practical deployment scalability and efficiency |
| Virtual Screening Performance | Enrichment Factor (EF), Recall@1%, AUC-PR | Early recognition of active compounds from large libraries |
| Lead Optimization Performance | RMSE, Pearson Correlation, Activity Cliff Identification | Accuracy in predicting fine-grained activity differences |
| Experimental Validation | Synthesis Success Rate, Yield, Purity | Translation of computational designs to real compounds |
| Real-World Impact | Timeline Compression, Cost Reduction, Clinical Candidates | Ultimate practical value and return on investment |
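Several of the virtual screening metrics in the table can be computed directly from a ranked list. The sketch below assumes binary activity labels and model scores where a higher score means "more likely active". It makes two simplifying choices that differ between published implementations:

- The top-x% cut is rounded to the nearest whole compound.
- Score ties are broken by input order.

Average precision is used as the usual estimate of AUC-PR.

```python
from typing import List, Sequence, Tuple


def _rank(scores: Sequence[float], labels: Sequence[int]) -> List[Tuple[float, int]]:
    """Highest score first; ties keep input order (a simplifying assumption)."""
    return sorted(zip(scores, labels), key=lambda t: t[0], reverse=True)


def ef_and_recall(scores: Sequence[float], labels: Sequence[int],
                  fraction: float = 0.01) -> Tuple[float, float]:
    """Enrichment factor and recall within the top `fraction` of the ranked library."""
    n = len(scores)
    total_actives = sum(labels)
    if total_actives == 0:
        raise ValueError("need at least one active compound")
    n_top = max(1, round(fraction * n))
    hits = sum(label for _, label in _rank(scores, labels)[:n_top])
    ef = (hits / n_top) / (total_actives / n)   # hit rate in top x% vs. overall
    recall = hits / total_actives
    return ef, recall


def average_precision(scores: Sequence[float], labels: Sequence[int]) -> float:
    """Mean of the precision values at the rank of each active compound."""
    total_actives = sum(labels)
    hits, ap = 0, 0.0
    for rank, (_, label) in enumerate(_rank(scores, labels), start=1):
        if label:
            hits += 1
            ap += hits / rank
    return ap / total_actives


# Toy library: 200 compounds with strictly decreasing scores; actives at positions 0, 1, 50, 150.
scores = [1.0 - i / 200 for i in range(200)]
labels = [1 if i in (0, 1, 50, 150) else 0 for i in range(200)]
ef, recall = ef_and_recall(scores, labels, fraction=0.01)
ap = average_precision(scores, labels)
```

In this library, four actives sit at ranks 1, 2, 51 and 151 among 200 compounds. The top 1% (two compounds) captures two of them, giving EF1% = 50 and Recall@1% = 0.5.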

Experimental Protocols and Research Toolkit

Detailed Benchmarking Methodology

Based on the CARA benchmark framework [26], a comprehensive evaluation of AI-driven synthesis platforms should include:

Data Curation and Preparation:

- Source data from reliable, well-characterized databases (ChEMBL, BindingDB, PubChem)

- Carefully distinguish between assay types (VS vs. LO) based on compound similarity patterns

- Apply rigorous data cleaning and standardization procedures

- Implement temporal splitting where appropriate to simulate real-world prediction scenarios
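Temporal splitting can be sketched as a date cut-off plus a leakage check. The record layout (`smiles`, `date`, `value` keys) is hypothetical. The sketch also assumes that SMILES strings were canonicalized during the cleaning step, so that identical compounds compare equal.

```python
from datetime import date
from typing import Any, Dict, List, Tuple

Record = Dict[str, Any]   # hypothetical layout: {"smiles": str, "date": date, "value": float}


def temporal_split(records: List[Record], cutoff: date) -> Tuple[List[Record], List[Record]]:
    """Train on measurements before `cutoff`; test on those from `cutoff` onward.

    Test compounds whose (already canonicalized) SMILES also occur in the training
    period are dropped, so the test set only contains compounds new at the cutoff.
    """
    train = [r for r in records if r["date"] < cutoff]
    seen = {r["smiles"] for r in train}
    test = [r for r in records if r["date"] >= cutoff and r["smiles"] not in seen]
    return train, test


records = [
    {"smiles": "CCO", "date": date(2019, 5, 1), "value": 5.1},
    {"smiles": "CCN", "date": date(2020, 3, 1), "value": 6.0},
    {"smiles": "CCO", "date": date(2022, 1, 1), "value": 5.3},  # re-measured: excluded from test
    {"smiles": "CCC", "date": date(2023, 7, 1), "value": 4.2},
]
train, test = temporal_split(records, cutoff=date(2021, 1, 1))
```

Dropping re-measured compounds is one possible design choice. It keeps the test set closer to the real-world scenario of predicting compounds that did not yet exist when the model was built.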

Model Training and Evaluation:

- Employ appropriate cross-validation strategies aligned with application context

- Include both few-shot and zero-shot learning scenarios to assess generalization

- Evaluate on external test sets completely separated from training data

- Compare against established baseline methods and traditional approaches

Experimental Validation:

- Select top-ranked compounds for synthesis and testing

- Include appropriate controls and reference compounds

- Document synthesis routes, yields, and characterization data

- Measure relevant biological activities or physical properties

Essential Research Reagent Solutions

The experimental validation of AI-driven synthesis planning requires specific reagents, tools, and platforms:

Table: Essential Research Reagents and Platforms for Benchmarking Studies

| Reagent/Platform | Function | Application in Benchmarking |

|---|---|---|

| CRESt-like Platform [29] | Integrated AI + Robotic Synthesis | Closed-loop design-make-test-analyze cycles for materials |

| Liquid Handling Robots | Automated Compound Synthesis | High-throughput preparation of AI-designed molecules |

| CAR-T Cell Therapy Platforms [30] | Specialized Therapeutic Modality | Benchmarking for complex biological therapeutics |

| AI-CASP Software [16] | Synthesis Route Prediction | Core algorithmic capability evaluation |

| ChEMBL/BindingDB Databases [26] | Compound Activity Data | Training data and ground truth for benchmark development |

| Automated Electrochemical Workstations [29] | Materials Property Testing | High-throughput characterization of synthesized materials |

| Cloud AI Infrastructure (e.g., AWS) [25] | Scalable Computation | Deployment and scaling of AI synthesis platforms |

Emerging Trends and Future Directions

The Shift Toward Agentic AI and Autonomous Systems

The field is rapidly evolving from assistive tools toward autonomous AI systems capable of planning and executing complex experimental workflows. By 2025, agentic AI represents a fundamental shift—these systems can break down complex tasks into manageable steps and execute them independently, moving beyond tools that require constant human prompting [27]. This transition enables "experiential learning," where AI systems learn through environmental interaction rather than static datasets [27].

The CRESt platform exemplifies this direction, using multimodal feedback to continuously refine its experimental approaches and hypothesis generation [29]. Such systems can monitor experiments via cameras, detect issues, and suggest corrections, creating increasingly autonomous research environments [29].

Synthetic Data and Self-Improving Systems

With natural data supplies tightening, the use of synthetic data and synthetic training methods is expanding rapidly in 2025 [31]. AI model makers are increasingly using synthetically generated tokens and training methods to continue scaling intelligence without natural data sources [31]. Techniques like Google's self-improving models, which generate their own questions and answers to enhance performance, are reducing data collection costs while improving specialized domain performance [27].

This trend has profound implications for benchmarking, as evaluation frameworks must adapt to assess systems trained on increasingly synthetic data and ensure their real-world applicability is not compromised.

Explainable AI and Regulatory Alignment

As AI systems gain autonomy, explainability and regulatory compliance become increasingly crucial. The market is seeing rising adoption of explainable AI (XAI) techniques to meet regulatory demands and ensure transparency in automated synthesis recommendations [16]. Companies like IBM and Merck are advancing "explainable retrosynthesis" approaches to comply with strict regulatory requirements [16].

Effective governance must span risk management, audit controls, security, data governance, privacy, bias mitigation, and model performance monitoring [27]. However, implementation lags behind capability—a 2024 survey found only 11% of executives have fully implemented fundamental responsible AI capabilities [27].

Benchmarking remains the critical foundation for responsible advancement of AI-driven synthesis planning. As the field evolves at an unprecedented pace, robust, standardized evaluation methodologies are essential to distinguish genuine capability from hype, guide resource allocation, and ensure these powerful technologies deliver measurable scientific impact. The development of specialized benchmarks like CARA for compound activity prediction and integrated platforms like CRESt for materials research represents significant progress toward these goals [26] [29].

Looking forward, several challenges demand attention: improving cross-scale modeling, enhancing AI generalization in data-scarce domains, advancing AI-assisted hypothesis generation, and developing governance frameworks that keep pace with technological capability [32] [27]. The most successful implementations will be those that combine cutting-edge AI with human expertise, creating collaborative environments where each complements the other's strengths.

As AI continues to redefine the paradigm of scientific discovery, benchmarking will play an increasingly vital role in ensuring that these technologies deliver on their promise to accelerate innovation, reduce costs, and address complex challenges across materials science, drug discovery, and beyond. Through continued refinement of evaluation frameworks and collaborative development of standards, the research community can harness the full potential of AI-driven synthesis planning while maintaining scientific rigor and reproducibility.

Methodologies in Action: Building and Applying Effective Benchmarking Frameworks

The integration of Artificial Intelligence (AI) into Computer-Aided Synthesis Planning (CASP) is transforming computational chemistry and drug discovery. AI-driven CASP tools leverage machine learning (ML) and deep learning (DL) to analyze vast chemical datasets, predict reaction outcomes, and design efficient synthetic pathways [16]. This capability is crucial for accelerating drug discovery, reducing research and development costs, and prioritizing compounds that are not only biologically effective but also synthetically feasible [33]. The global AI in CASP market, valued at USD 2.13 billion in 2024, is projected to grow at a CAGR of 41.4% to approximately USD 68.06 billion by 2034, reflecting the technology's significant impact and adoption [16].

Benchmarking these AI algorithms requires a standardized set of Key Performance Indicators (KPIs) that objectively quantify their performance. This guide focuses on three critical KPIs (Success Rate, Inference Time, and Route Optimality) and provides a framework for comparing the performance of different AI-driven CASP platforms and methodologies.

Defining the Core KPIs for AI-Driven CASP

Success Rate

Success Rate measures the algorithm's ability to propose chemically valid and executable synthetic routes. It is a fundamental metric for assessing the practical utility and predictive accuracy of a CASP system [34].

- Synthetic Validity: The proposed route must adhere to the rules of chemistry. The structures of intermediates and the transformation steps must be chemically plausible.

- Experimental Validation: The ultimate measure of success is the laboratory confirmation that the proposed route yields the intended target molecule with acceptable purity and yield. AI models are often trained on published successful reactions, which can introduce a bias, as databases largely lack information on failed reactions [33].

Inference Time

Inference Time refers to the computational time required for the AI model to process a target molecule and generate one or more proposed synthetic pathways [35]. This KPI is vital for user experience and operational efficiency, especially when dealing with large virtual compound libraries.

- Model Latency: The time taken by the AI model to generate a retrosynthetic pathway for a single target molecule. This is critical for interactive design cycles where chemists need rapid feedback [35].

- Throughput: The volume of molecules a system can process per unit of time (e.g., molecules per hour). This is crucial for high-throughput screening in large-scale drug discovery projects [35].
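Latency and throughput are related, but they should be measured separately. When a platform runs several searches in parallel, throughput is not simply the inverse of mean latency. The following minimal sketch shows one way to summarize both from a benchmark log. It assumes that per-molecule latencies (in seconds) and the total wall-clock time of the run have already been recorded. The function names are hypothetical.

```python
import math
import statistics


def latency_summary(latencies_s: list[float]) -> dict[str, float]:
    """Summarize per-molecule model latency (seconds) for interactive use."""
    if not latencies_s:
        raise ValueError("no latencies recorded")
    ordered = sorted(latencies_s)
    # Nearest-rank 95th percentile: the latency that 95% of requests stay under.
    p95_index = math.ceil(0.95 * len(ordered)) - 1
    return {
        "mean_s": statistics.fmean(ordered),
        "median_s": statistics.median(ordered),
        "p95_s": ordered[p95_index],
    }


def throughput_per_hour(n_molecules: int, wall_clock_s: float) -> float:
    """Molecules processed per hour over the whole run, including any parallelism."""
    return n_molecules / wall_clock_s * 3600.0


latencies = [2.1, 3.4, 2.8, 15.0, 3.0]  # illustrative values, not measured data
print(latency_summary(latencies))
# e.g. 5 molecules finished in 6 s of wall-clock time using parallel workers
print(throughput_per_hour(5, 6.0))
```

Reporting the median and 95th percentile alongside the mean keeps a few very hard targets from dominating the latency figure.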

Route Optimality

Route Optimality evaluates the quality and practicality of a proposed synthesis route against multiple criteria that affect cost, safety, and sustainability. A high success rate is of limited value if the proposed routes are impractical to scale.

- Step Count: The number of steps in the longest linear sequence of the synthesis. Fewer steps generally correlate with higher overall yield (because the yields of sequential steps multiply) and lower cost.

- Convergence: Convergent synthetic pathways (where fragments are synthesized in parallel and combined late) are typically more efficient than linear ones.

- Green Chemistry Principles: This includes the use of safer solvents, less hazardous reagents, and atom-economical reactions to minimize environmental impact. The adoption of AI-driven green chemistry is a key trend in the field [8].

- Synthetic Accessibility (SA) Score: A computational metric that estimates the ease or difficulty of synthesizing a molecule, often on a scale from 1 (easy) to 10 (difficult) [33].
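Step count and convergence can both be read off a route represented as a tree. In this representation, each non-leaf molecule is made in one step from its precursors, and the leaves are purchasable starting materials. The sketch below assumes that representation (the `RouteNode` class and the molecule names are hypothetical). It reports total steps alongside the longest linear sequence (LLS). A route counts as convergent when it needs more total steps than its longest branch, because some branches are built in parallel.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class RouteNode:
    """A molecule in a synthesis tree; no precursors means a purchasable starting material."""
    name: str
    precursors: list[RouteNode] = field(default_factory=list)


def total_steps(node: RouteNode) -> int:
    """Count every reaction step in the route."""
    if not node.precursors:
        return 0
    return 1 + sum(total_steps(p) for p in node.precursors)


def longest_linear_sequence(node: RouteNode) -> int:
    """Count steps along the deepest branch (the longest linear sequence)."""
    if not node.precursors:
        return 0
    return 1 + max(longest_linear_sequence(p) for p in node.precursors)


def is_convergent(node: RouteNode) -> bool:
    """True when independent branches are built and joined, so total steps exceed the LLS."""
    return total_steps(node) > longest_linear_sequence(node)


def sm(name: str) -> RouteNode:
    """Shorthand for a purchasable starting material (a leaf)."""
    return RouteNode(name)


# Convergent route: fragments A and B are made separately, then coupled to give T.
frag_a = RouteNode("A", [RouteNode("A1", [sm("s1"), sm("s2")])])
frag_b = RouteNode("B", [sm("s3"), sm("s4")])
convergent = RouteNode("T", [frag_a, frag_b])

# Linear route of the same total length: T <- X <- Y <- Z <- s5.
linear = RouteNode("T", [RouteNode("X", [RouteNode("Y", [RouteNode("Z", [sm("s5")])])])])

for label, route in [("convergent", convergent), ("linear", linear)]:
    print(label, total_steps(route), longest_linear_sequence(route), is_convergent(route))
```

Both routes need four steps in total. The convergent route, however, has a longest linear sequence of three steps, while the linear route's is four.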

Comparative Performance of Leading CASP Platforms

The following tables synthesize available data on the performance of various AI-driven CASP tools. Direct, standardized comparisons are scarce because benchmarking studies often use different datasets and criteria. As a result, most entries in Table 1 are qualitative claims from the cited sources rather than numbers measured on a common test set.

Table 1: Comparative KPI Performance of Select CASP Platforms

| Platform / Model | Reported Success / Accuracy | Inference Time / Speed | Key Route Optimality Metrics |
|---|---|---|---|
| ASKCOS (MIT) | High accuracy in reaction outcome prediction [34] | Rapid route generation [34] | Recommends viable routes from available chemicals [33] |
| IBM RXN for Chemistry | High accuracy in reaction prediction [34] | Uses neural machine translation for efficient prediction [33] | N/A |
| Chematica/Synthia (Merck) | Expert-quality routes [34] | Reported unprecedented planning speed [34] | Optimizes for the most suitable and sustainable route [33] |
| Neural-Symbolic Models | High accuracy with interpretable mechanisms [34] | N/A | Generates expert-quality retrosynthetic routes [34] |
| Monte Carlo Tree Search (MCTS) | High planning success rate [34] | N/A | Finds optimal synthetic pathways [34] |
| Generative AI Models (e.g., IDOLpro) | Generated high-affinity ligands [33] | 100x faster candidate generation [33] | Designs molecules with 10-20% better binding affinity while integrating constraints [33] |

Table 2: Quantitative Benchmarks from AI-Discovered Drug Candidates

This table shows reported reductions in overall discovery timelines. These figures reflect AI's broader impact on drug discovery and relate only indirectly to CASP performance.

| Drug Name / Company | AI Application | Development Stage | Reported Timeline (AI vs. Conventional) |
|---|---|---|---|
| DSP-1181 (Exscientia) | AI-driven small-molecule design | Phase I completed, discontinued | 12 months vs. 4–6 years [8] |
| EXS-21546 (Exscientia) | AI-guided small-molecule optimization | Preclinical | ~24 months vs. 5+ years [8] |
| BEN-2293 (BenevolentAI) | AI target discovery | Phase I | ~30 months (faster development) [8] |
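The timelines in Table 2 can be expressed as relative reductions. The short sketch below treats "4–6 years" as 48–72 months and "5+ years" as a 60-month lower bound; both conversions are assumptions. BEN-2293 is omitted because no conventional baseline is given.

```python
def percent_reduction(ai_months: float, baseline_months: float) -> float:
    """Relative time saved by the AI-driven program versus a conventional baseline."""
    if baseline_months <= 0:
        raise ValueError("baseline must be positive")
    return 100.0 * (1.0 - ai_months / baseline_months)


# DSP-1181: 12 months vs. a 4-6 year (48-72 month) conventional baseline
dsp_low = percent_reduction(12, 48)
dsp_high = percent_reduction(12, 72)
# EXS-21546: ~24 months vs. 5+ years; 60 months gives a lower bound on the reduction
exs_min = percent_reduction(24, 60)

print(f"DSP-1181: {dsp_low:.0f}-{dsp_high:.0f}% shorter")
print(f"EXS-21546: at least {exs_min:.0f}% shorter")
```

This gives roughly a 75–83% reduction for DSP-1181 and at least 60% for EXS-21546.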

Experimental Protocols for KPI Benchmarking

To ensure fair and reproducible comparisons between CASP tools, researchers should adhere to standardized experimental protocols.

Benchmarking Dataset Curation

- Source: Utilize a standardized, diverse set of target molecules with known synthesis pathways, such as those from the USPTO (United States Patent and Trademark Office) database or other public chemical reaction datasets [36].

- Splitting: Divide the dataset into training/validation/test sets, ensuring that molecules in the test set are not present in the training data to evaluate the model's generalizability.

- Challenges: Be aware of inherent biases in public datasets, which often skew toward popular transformations and commercially accessible chemicals, limiting transferability to novel chemistries [33].
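One way to enforce the no-overlap rule is to assign reactions to splits by their product, so that every reaction producing a given molecule lands in the same split. The sketch below assumes reaction SMILES in `reactants>agents>products` form, with product strings already canonicalized (in practice with a toolkit such as RDKit). The function name and the hash-based assignment are illustrative choices, not a standard USPTO protocol. Stricter schemes, such as splitting by molecular scaffold, test generalization to more distant chemistry.

```python
import hashlib


def split_by_product(reaction_smiles: list[str], test_frac: float = 0.1,
                     valid_frac: float = 0.1) -> dict[str, list[str]]:
    """Assign reactions to train/valid/test so that no product appears in two splits."""
    splits: dict[str, list[str]] = {"train": [], "valid": [], "test": []}
    for rxn in reaction_smiles:
        product = rxn.split(">")[-1]  # last field of 'reactants>agents>products'
        # A stable hash of the product maps it to a fixed point in [0, 1).
        digest = hashlib.sha256(product.encode("utf-8")).hexdigest()
        bucket = int(digest, 16) % 10_000 / 10_000
        if bucket < test_frac:
            splits["test"].append(rxn)
        elif bucket < test_frac + valid_frac:
            splits["valid"].append(rxn)
        else:
            splits["train"].append(rxn)
    return splits


def products(rxns: list[str]) -> set[str]:
    """Collect the product field of each reaction SMILES."""
    return {r.split(">")[-1] for r in rxns}


reactions = [
    "CC(=O)O.OCC>>CCOC(C)=O",
    "CC(=O)Cl.OCC>>CCOC(C)=O",  # same product via a different route: must share a split
    "c1ccccc1Br.OB(O)c1ccccc1>>c1ccc(-c2ccccc2)cc1",
    "CCN.CC(=O)Cl>>CCNC(C)=O",
]
parts = split_by_product(reactions, test_frac=0.3, valid_frac=0.2)
# No product may appear in both the training and the test split.
assert products(parts["train"]).isdisjoint(products(parts["test"]))
print({name: len(rxns) for name, rxns in parts.items()})
```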

Protocol for Measuring Success Rate

- Input: Feed each target molecule from the benchmark test set into the CASP platform.

- Output Collection: Record the top-k (e.g., top-1, top-3, top-5) proposed synthetic routes for each molecule.

- Validation:

  - Computational Validation: Check routes for chemical validity (e.g., correct atom mapping, chemically feasible transformations).

  - Expert Evaluation: Have expert chemists score the routes based on chemical plausibility.

  - Experimental Validation (Gold Standard): Execute the top proposed routes in a laboratory to confirm the successful synthesis of the target molecule.
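Once one of the validation tiers above has labeled each proposed route valid or invalid, the top-k success rate is the fraction of targets with at least one valid route among the first k proposals. The minimal sketch below assumes the labels are stored per target in the platform's rank order. The data are illustrative.

```python
def top_k_success_rate(route_labels: dict[str, list[bool]], k: int) -> float:
    """Fraction of targets with at least one valid route among the top-k proposals.

    route_labels maps each target ID to validation outcomes in the platform's
    rank order; an empty list means the platform proposed no route.
    """
    if not route_labels:
        raise ValueError("no targets to score")
    if k < 1:
        raise ValueError("k must be at least 1")
    solved = sum(1 for labels in route_labels.values() if any(labels[:k]))
    return solved / len(route_labels)


labels = {
    "target-1": [False, True, False],
    "target-2": [True],
    "target-3": [False, False, False, False],
    "target-4": [],  # no route found counts as a failure
}
for k in (1, 3, 5):
    print(f"top-{k}: {top_k_success_rate(labels, k):.2f}")
```

Keeping targets with no proposed route in the denominator prevents a platform from inflating its score by abstaining on hard targets.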

Protocol for Measuring Inference Time

- Environment Setup: Run all CASP platforms on hardware with identical specifications (e.g., CPU/GPU type and memory) to ensure a fair comparison.

- Timing: For each target molecule, measure the time elapsed from the submission of the molecular structure to the receipt of the first proposed route (time-to-first) and the complete set of proposed routes (time-to-all).

- Averaging: Report the average inference time across the entire test set of molecules.
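Time-to-first and time-to-all can be captured in a single pass if the platform streams routes as it finds them. The sketch below assumes a hypothetical planner exposed as a Python generator (`plan(target)` yielding routes). Real platforms expose different interfaces, so each would need its own wrapper. Targets with no route are excluded from the time-to-first average but still counted in time-to-all.

```python
from __future__ import annotations

import math
import time
from collections.abc import Callable, Iterable, Iterator
from statistics import fmean


def time_one_target(plan: Callable[[str], Iterable[object]],
                    target: str) -> tuple[float | None, float, int]:
    """Return (time-to-first, time-to-all, number of routes) in seconds for one target."""
    start = time.perf_counter()
    time_to_first: float | None = None
    n_routes = 0
    for _route in plan(target):  # consume routes as the planner streams them
        if time_to_first is None:
            time_to_first = time.perf_counter() - start
        n_routes += 1
    return time_to_first, time.perf_counter() - start, n_routes


def benchmark_inference(plan: Callable[[str], Iterable[object]],
                        targets: list[str]) -> dict[str, float]:
    """Average time-to-first and time-to-all over a test set of targets."""
    firsts: list[float] = []
    alls: list[float] = []
    for target in targets:
        first, total, _ = time_one_target(plan, target)
        if first is not None:  # targets with no route have no time-to-first
            firsts.append(first)
        alls.append(total)
    return {
        "mean_time_to_first_s": fmean(firsts) if firsts else math.nan,
        "mean_time_to_all_s": fmean(alls),
    }


def toy_planner(target: str) -> Iterator[str]:
    """Hypothetical stand-in for a CASP platform: yields three fake routes with delays."""
    for i in range(3):
        time.sleep(0.01)
        yield f"{target}: route {i + 1}"


print(benchmark_inference(toy_planner, ["target-1", "target-2"]))
```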

Protocol for Evaluating Route Optimality

For each proposed route, calculate the following metrics:

- Step Count: The total number of synthetic steps.

- Convergence: Quantify synthetic convergence, for example, by the number of starting materials combined in the final step of the synthesis.

- Synthetic Accessibility (SA) Score: Calculate the SA Score for the target molecule and key intermediates using established algorithms [33].

- Green Metrics: Evaluate the route using metrics such as Process Mass Intensity (PMI) or a green solvent guide score, where reagent and solvent data are available.
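PMI is the total mass of all materials entering the process (reactants, reagents, solvents, and workup and purification materials) divided by the mass of isolated product. Lower values are better, and 1 is the theoretical minimum. Atom economy, one of the green chemistry principles listed under Route Optimality, is the molecular weight of the desired product as a percentage of the summed molecular weights of the reactants. The minimal sketch below uses illustrative inputs: the batch masses are made up for the example, and the molecular weights are those for the esterification of acetic acid with ethanol. For a full route, the inputs of every step would be summed against the final product mass.

```python
def process_mass_intensity(input_masses_kg: dict[str, float], product_mass_kg: float) -> float:
    """PMI = total mass of all inputs / mass of isolated product (dimensionless)."""
    if product_mass_kg <= 0:
        raise ValueError("product mass must be positive")
    return sum(input_masses_kg.values()) / product_mass_kg


def atom_economy(product_mw: float, reactant_mws: list[float]) -> float:
    """Percent of reactant molecular weight incorporated into the desired product."""
    return 100.0 * product_mw / sum(reactant_mws)


# Hypothetical batch record for one step; solvent and workup masses count toward PMI.
inputs = {"acetic acid": 60.0, "ethanol": 46.0, "toluene": 200.0, "aqueous workup": 100.0}
print(f"PMI: {process_mass_intensity(inputs, 70.0):.1f}")

# CH3COOH (60.05 g/mol) + C2H5OH (46.07 g/mol) -> CH3COOC2H5 (88.11 g/mol) + H2O
print(f"Atom economy: {atom_economy(88.11, [60.05, 46.07]):.1f}%")
```

In this example the solvent and workup dominate the PMI, even though the reaction itself has an atom economy of about 83%.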

The following workflow diagram illustrates the integrated process of benchmarking a CASP algorithm from dataset preparation to KPI evaluation.

The Scientist's Toolkit: Essential Research Reagents and Solutions

The following reagents, software, and data resources are fundamental for developing and benchmarking AI-driven CASP algorithms.

Table 3: Key Research Reagent Solutions for CASP Benchmarking

| Item Name | Type | Function in CASP Research |
|---|---|---|
| USPTO Database | Data | A large, public dataset of chemical reactions used for training and benchmarking reaction prediction and retrosynthesis algorithms [36]. |
| RDKit | Software | An open-source toolkit for cheminformatics and machine learning, used for manipulating molecules, calculating descriptors, and integrating with ML models [8]. |
| DeepChem | Software | An open-source platform that democratizes access to AI capabilities in drug discovery, providing libraries for molecular modeling and reaction prediction [8]. |
| SA Score Algorithm | Software/Metric | A computational method that estimates synthetic accessibility from molecular structure, used to filter or rank AI-generated molecules [33]. |
| GPU/TPU Accelerators | Hardware | Specialized processors crucial for accelerating the training of deep learning models and reducing inference latency in large-scale CASP applications [35]. |
| Reaction Template Libraries | Data | Encoded sets of chemical transformation rules used by template-based retrosynthetic analysis algorithms (e.g., in ASKCOS) to decompose target molecules [33]. |

Rigorous benchmarking of AI-driven CASP tools through well-defined KPIs (Success Rate, Inference Time, and Route Optimality) is paramount for advancing the field and for building trust in these systems among researchers and drug development professionals. Current platforms are reported to generate expert-quality routes at unprecedented speeds, but challenges remain. These include the need for more high-quality and diverse reaction data, especially on failed experiments, and the development of more robust models that can generalize to novel chemical spaces [33]. Future progress will hinge on the community's commitment to open benchmarking and standardized protocols, and on the development of multi-objective optimization algorithms that balance synthetic feasibility with biological activity and other drug-like properties [33]. As these technologies mature, AI-driven synthesis planning is poised to become an indispensable cornerstone of efficient and sustainable drug discovery.

The revolution in artificial intelligence (AI)-driven organic synthesis hinges on the quality and diversity of the chemical reaction data used to train these models. As machine learning algorithms increasingly power critical tasks in drug discovery and chemical research—including retrosynthesis planning, reaction outcome prediction, and synthesizability assessment—their performance is fundamentally constrained by the underlying datasets. High-quality, diverse reaction datasets enable models to accurately predict reaction outcomes, control chemical selectivity, simplify synthesis planning, and accelerate catalyst discovery [37]. The benchmarking of synthesis planning algorithms reveals that their effectiveness varies significantly based on the data they are trained on, with differences between algorithms becoming less pronounced when evaluated under strictly controlled conditions that account for data quality [38]. This comparison guide examines the landscape of chemical reaction datasets, their quantitative attributes, and their measurable impact on the performance of AI-driven synthesis planning tools, providing researchers with a framework for selecting appropriate data resources for specific applications in pharmaceutical and chemical development.

Comparative Analysis of Major Chemical Reaction Datasets

Dataset Specifications and Performance Metrics

The quality and composition of reaction datasets directly influence the performance of AI models in predicting viable synthetic routes. Below is a detailed comparison of key datasets used in training and benchmarking synthesis planning algorithms.

Table 1: Comparison of Major Chemical Reaction Datasets for AI Applications

| Dataset Name | Size (Reactions) | Key Characteristics | Data Quality Features | Primary Applications in Synthesis Planning |
|---|---|---|---|---|
| Science of Synthesis (SOS) | 470,000+ | Expert-curated, covers broad scope of organic chemistry | Manually abstracted, consistently structured, high reliability | Retrosynthesis prediction, forward-reaction prediction, analysis of chemical reactivity [39] |
| USPTO Derivatives (e.g., USPTO-50K) | 50,000-480,000 | Extracted from patent literature via text-mining | Automated extraction, requires significant cleanup, contains errors | Benchmarking reaction prediction models, training retrosynthesis algorithms [40] |
| mech-USPTO-31K | 31,000 | Subset of USPTO with validated arrow-pushing mechanisms | Curated mechanisms, hand-coded mechanistic templates | Mechanism-based reaction prediction, understanding electron movements [40] |
| Reaxys | Millions | Commercial database, comprehensive coverage | Mixed quality, contains inconsistencies requiring preprocessing | Broad chemical research, requires curation for optimal ML performance [41] |