From Manual Checks to AI Agents: The New Frontier of Reproducibility Assessment in Biomedical Research

Abstract

This article explores the critical evolution from traditional manual methods to emerging automated systems for assessing research reproducibility. For researchers, scientists, and drug development professionals, we provide a comprehensive analysis of foundational concepts, practical methodologies, common challenges, and validation frameworks. Drawing on current developments in AI-driven assessment, standardized data collection ecosystems, and domain-specific automation, this review synthesizes key insights to guide the selection and implementation of reproducibility strategies across diverse research contexts, from social sciences to automated chemistry and clinical studies.

The Reproducibility Crisis and Why Assessment Methodology Matters

In the rigorous world of scientific research and drug development, the ability to reproduce results is the cornerstone of validity and trust. Reproducibility means that experiments can be repeated using the same input data and methods to achieve results consistent with the original findings [1]. However, many fields face a "reproducibility crisis," with over 70% of researchers in one survey reporting they had failed to reproduce another scientist's experiments [1]. This challenge forms the critical context for evaluating manual verification versus automated assessment methodologies. As scientific processes grow more complex, the choice between these approaches significantly impacts not only efficiency but, more importantly, the reliability and credibility of research outcomes, particularly in high-stakes domains like pharmaceutical development where errors can have severe consequences [2].

Key Concepts and Definitions

Manual Verification: The Human Element

Manual verification relies on human operators to execute processes, conduct analyses, and interpret results without the intervention of programmed systems. In laboratory settings, this encompasses tasks ranging from traditional chemical synthesis—which remains highly dependent on trained chemists performing time-consuming molecular assembly—to manual proofreading of pharmaceutical documentation and visual inspection of experimental results [3] [2]. This approach leverages human intuition, adaptability, and experiential knowledge, allowing researchers to adjust their approach spontaneously as they uncover new issues or observe unexpected phenomena [4].

Automated Assessment: The Machine Precision

Automated assessment employs computer systems, robotics, and artificial intelligence to execute predefined procedures with minimal human intervention. In synthesis research, this spans from AI-driven synthesis planning software to robotic platforms that physically perform chemical reactions [3]. These systems operate based on carefully designed algorithms and protocols, offering consistent execution unaffected by human fatigue or variation. Automated assessment fundamentally transforms traditional workflows by introducing unprecedented levels of speed, consistency, and precision to repetitive and complex tasks [5].

Reproducibility vs. Repeatability

Within scientific methodology, a crucial distinction exists between reproducibility and repeatability:

- Repeatability refers to the likelihood of producing the exact same results when an experiment is repeated under identical conditions, using the same location, apparatus, and operator [1].

- Reproducibility measures whether consistent results can be achieved when using different research methods, potentially across different laboratories, equipment, and research teams [1].

Both concepts are essential for establishing scientific validity, with reproducibility representing a more robust standard that ensures findings are not artifacts of a specific experimental setup.

Quantitative Comparison: Manual vs. Automated Performance

The following tables summarize experimental data comparing the performance characteristics of manual and automated approaches across critical dimensions of scientific work.

Table 1: Accuracy and Throughput Comparison in Measurement and Verification Tasks

| Performance Metric | Manual Approach | Automated Approach | Experimental Context |
|---|---|---|---|
| Measurement Accuracy | Statistically significant differences between T1 & T2 measurements (p<0.05) [6] | Semi-automated AI produced highest tooth width values [6] | Tooth width measurement on plaster models [6] |
| Error Rate | Prone to human error, especially with repetitive tasks [7] | Accuracy >95% in medication identification [8] | Automated medication verification system [8] |
| Throughput | Time-consuming for large experiments [5] | Simultaneously tests multiple reaction conditions [5] | Chemical synthesis optimization [5] |
| Reliability Correlation | Pearson's r = 0.449-0.961 [6] | Fully automated AI: r = 0.873-0.996 [6] | Tooth width, Bolton ratios, space analysis [6] |
| Process Time | 1 hour per document proofreading [2] | Same task completed within minutes [2] | Pharmaceutical document inspection [2] |

Table 2: Reproducibility and Operational Characteristics

| Characteristic | Manual Verification | Automated Assessment |
|---|---|---|
| Reproducibility (ICC) | Excellent in tooth width (ICC: 0.966-0.983) [6] | Excellent in tooth width (ICC: 0.966-0.983) [6] |
| Result Consistency | Variable between operators and over time [1] | Highly consistent and objective [7] |
| Protocol Adherence | Subtle variations between researchers [1] | Precise execution of predefined protocols [5] |
| Scalability | Limited by human resources and fatigue [7] | Easy to scale for large-scale, routine tasks [7] |
| Initial Investment | Lower initial costs [4] | Higher setup and maintenance costs [7] |
| Operational Cost | Higher long-term costs for repetitive tasks [2] | Cost-efficient for high-volume repetitive tasks [7] |

Experimental Protocols and Methodologies

Protocol 1: AI-Based Measurement Validation Study

Objective: To evaluate the validity, reliability, and reproducibility of manual, fully automated AI, and semi-automated AI-based methods for measuring tooth widths, calculating Bolton ratios, and performing space analysis [6].

Materials and Methods:

- Sample Preparation: 102 plaster models and 102 corresponding occlusal photographs were prepared for analysis [6].

- Measurement Techniques: Each case was analyzed using three distinct methods:

  - Manual Method: Traditional measurement using digital calipers by trained examiners.

  - Fully Automated AI: Complete algorithmic measurement without human intervention.

  - Semi-Automated AI: Hybrid approach combining AI processing with human oversight.

- Parameters Measured: Mesiodistal tooth widths, anterior and overall Bolton ratios, required space, available space, and space discrepancy in both arches [6].

- Statistical Analysis: Validity was assessed using repeated measures ANOVA, reliability using Pearson's correlation coefficients, and reproducibility using intraclass correlation coefficients (ICC) [6].

Key Findings: While all methods demonstrated excellent reproducibility for direct tooth width measurements (ICC: 0.966-0.983), the manual method showed highest reproducibility in derived Bolton ratios. AI-based methods exhibited greater variability in complex derived measurements, highlighting the context-dependent performance of automated approaches [6].
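The reliability and reproducibility statistics named in the protocol above can be illustrated with a minimal sketch. The study does not state which ICC form it used, so the two-way random-effects, absolute-agreement, single-measurement form (often written ICC(2,1)) is an assumption here, and the tooth-width values are invented for illustration rather than taken from [6].

```python
from statistics import mean


def pearson_r(x, y):
    """Pearson correlation between two equally long lists of measurements."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sum((a - mx) ** 2 for a in x) ** 0.5
    sy = sum((b - my) ** 2 for b in y) ** 0.5
    return cov / (sx * sy)


def icc_2_1(ratings):
    """ICC(2,1): rows are subjects (e.g. teeth), columns are sessions or raters.

    Assumed form: two-way random effects, absolute agreement, single measurement.
    """
    n, k = len(ratings), len(ratings[0])
    grand = mean(v for row in ratings for v in row)
    row_means = [mean(row) for row in ratings]
    col_means = [mean(row[j] for row in ratings) for j in range(k)]
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((v - grand) ** 2 for row in ratings for v in row)
    ss_error = ss_total - ss_rows - ss_cols
    msr = ss_rows / (n - 1)                 # between-subject mean square
    msc = ss_cols / (k - 1)                 # between-session mean square
    mse = ss_error / ((n - 1) * (k - 1))    # residual mean square
    return (msr - mse) / (msr + (k - 1) * mse + k * (msc - mse) / n)


# Hypothetical mesiodistal widths in mm, measured at two sessions (T1, T2).
t1 = [8.5, 6.9, 7.8, 7.0, 6.7, 10.1]
t2 = [8.6, 6.8, 7.9, 7.1, 6.6, 10.0]

r = pearson_r(t1, t2)
icc = icc_2_1([[a, b] for a, b in zip(t1, t2)])
print(f"Pearson r = {r:.3f}, ICC(2,1) = {icc:.3f}")
```

Pearson's r only captures whether two series rise and fall together, while an absolute-agreement ICC also penalizes a systematic offset between sessions, which is one reason the two statistics are reported separately.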

Protocol 2: Automated Medication Verification System

Objective: To develop and validate an automated medication verification system (AMVS) capable of accurately classifying multiple medications within a single image to reduce medication errors in healthcare settings [8].

Materials and Methods:

- Hardware Configuration: A "Drug Verification Box" was constructed as a sealed, light-tight enclosure containing a Raspberry Pi 4B with 4 GB of RAM, a camera module, and controlled LED lighting. The medication tray was 3D-printed with dimensions of 100 × 100 mm and positioned 70 mm from the camera [8].

- Image Processing: The system employed edge detection algorithms (contours function in OpenCV) with thresholding to delineate object boundaries. Individual drugs were identified within images using segmentation analysis [8].

- AI Classification: A deep learning model using a pre-trained ResNet architecture was fine-tuned for medication classification, eliminating the need for manual annotation of medication regions [8].

- Dataset: 300 validation and training images (30 per drug across 10 categories) for edge detection validation, plus 50 randomly captured inference images containing multiple drugs [8].

Key Findings: The system achieved >95% accuracy in drug identification, with approximately 96% accuracy for drug sets containing fewer than ten types and 93% accuracy for sets with ten types. This demonstrates the potential of automated systems to enhance accuracy in complex identification tasks [8].
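The thresholding and segmentation step described in the methods above can be sketched without any imaging library. This is a pure-Python stand-in, not the study's code: the published system uses OpenCV's contour detection, whereas the sketch below uses simple connected-component labelling, and the tiny grayscale grid and the cutoff of 128 are illustrative assumptions.

```python
from collections import deque


def threshold(image, cutoff):
    """Binarize a grayscale grid: 1 for pixels brighter than cutoff, else 0."""
    return [[1 if px > cutoff else 0 for px in row] for row in image]


def label_regions(mask):
    """Return bounding boxes (top, left, bottom, right) of 4-connected regions."""
    h, w = len(mask), len(mask[0])
    seen = [[False] * w for _ in range(h)]
    boxes = []
    for r in range(h):
        for c in range(w):
            if not mask[r][c] or seen[r][c]:
                continue
            # Breadth-first flood fill from the first unseen foreground pixel.
            queue = deque([(r, c)])
            seen[r][c] = True
            top, left, bottom, right = r, c, r, c
            while queue:
                y, x = queue.popleft()
                top, bottom = min(top, y), max(bottom, y)
                left, right = min(left, x), max(right, x)
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if 0 <= ny < h and 0 <= nx < w and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            boxes.append((top, left, bottom, right))
    return boxes


# Hypothetical 5x7 image: dark tray background with two bright "tablets".
image = [
    [10, 10, 10, 10, 10, 10, 10],
    [10, 200, 210, 10, 10, 10, 10],
    [10, 205, 220, 10, 10, 180, 10],
    [10, 10, 10, 10, 10, 190, 10],
    [10, 10, 10, 10, 10, 10, 10],
]

boxes = label_regions(threshold(image, 128))
print(boxes)
```

Each bounding box would then be cropped and passed to the classifier, which is how segmentation removes the need to annotate medication regions by hand.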

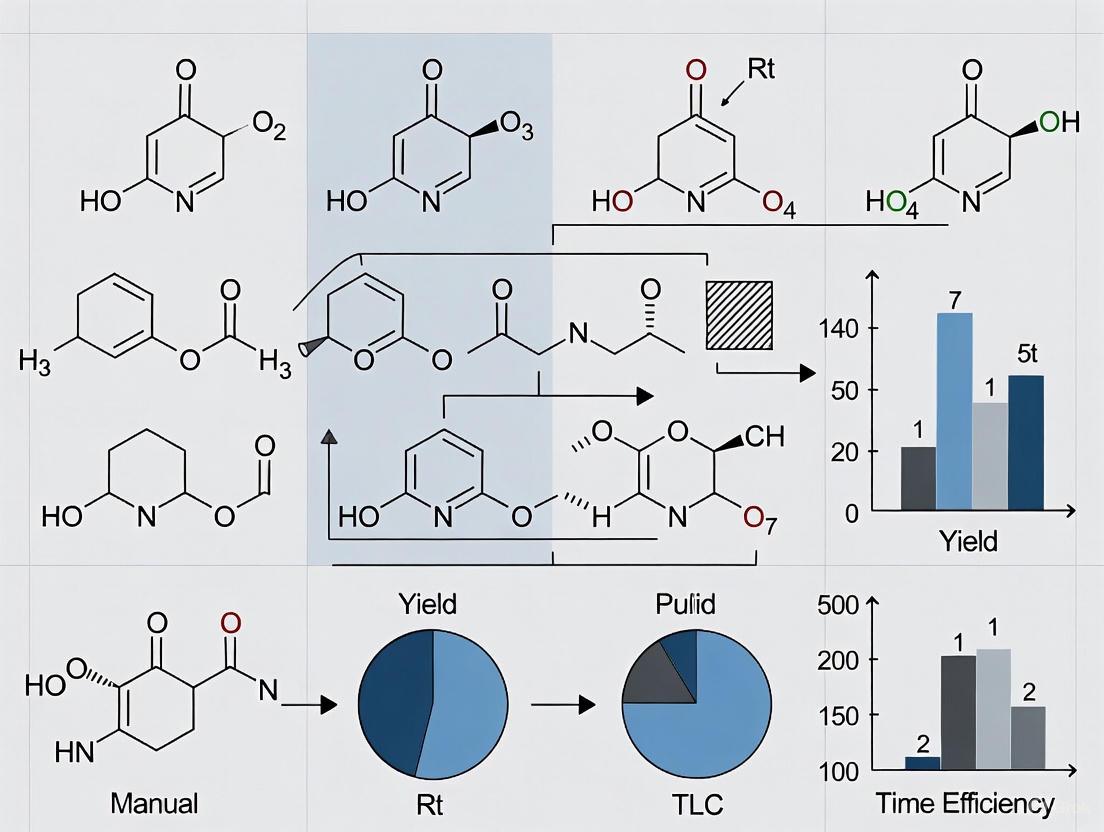

Workflow Visualization

Research Methodology Comparison: This diagram illustrates the fundamental differences in workflow between manual verification and automated assessment approaches, highlighting points where variability may be introduced or controlled.

The Scientist's Toolkit: Essential Research Reagents and Solutions

Table 3: Key Research Reagent Solutions for Reproducibility Studies

| Reagent/Platform | Function | Application Context |
|---|---|---|
| OpenCV with Contours Function | Accurate object boundary delineation in images | Automated medication verification systems [8] |
| Pre-trained ResNet Models | Rapid image classification without training from scratch | Drug recognition and classification [8] |
| TIDA (Tetramethyl N-methyliminodiacetic acid) | Supports C-Csp3 bond formation in automated synthesis | Small molecule synthesis machines [3] |
| Automated Reactor Systems | Enable real-time monitoring and control of reactions | Chemical synthesis optimization [5] |
| Radial Flow Synthesizers | Provide stable, reproducible multistep synthesis | Library generation for drug derivatives [3] |
| LINQ Cloud Laboratory Orchestrator | Connects activities in workflows with full traceability | Laboratory automation and reproducibility assessment [1] |

The comparison between manual verification and automated assessment reveals a nuanced landscape where neither approach dominates universally. Manual approaches bring irreplaceable human judgment, adaptability, and cost-effectiveness for small-scale or novel investigations [4]. Automated systems offer superior precision, scalability, and consistency for repetitive, high-volume tasks [7] [5]. The most effective research strategy leverages the strengths of both methodologies—employing automated systems for standardized, repetitive components of workflows while reserving human expertise for complex decision-making, exploratory research, and interpreting ambiguous results. This integrated approach maximizes reproducibility while maintaining scientific creativity and adaptability, ultimately advancing the reliability and efficiency of scientific research, particularly in critical fields like pharmaceutical development where both precision and innovation are essential.

The Credibility Crisis as a Driver for New Assessment Methodologies

The scientific community faces a pervasive reproducibility crisis, an alarming inability to independently replicate published findings that threatens the very foundation of scientific inquiry [9] [10]. In a 2016 survey by Nature, more than 70% of researchers reported failing to reproduce another scientist's experiments, and more than half had failed to reproduce their own work [11]. This credibility gap is particularly critical in fields like drug discovery, where the "Make" phase of the Design-Make-Test-Analyse (DMTA) cycle, the synthesis of novel compounds, is a significant bottleneck that relies on manual, time-consuming, and technique-sensitive processes [12] [3]. This article, framed within the broader discussion of reproducibility assessment, compares manual and automated synthesis research and shows how automated methodologies address this crisis by enhancing reproducibility, efficiency, and data integrity.

The Reproducibility Crisis and the Synthesis Bottleneck

The reproducibility crisis is fueled by a combination of factors, including publication bias favoring novel results, questionable research practices, inadequate statistical methods, and a "publish or perish" culture that sometimes prioritizes quantity over quality [9]. A critical, often-overlooked contributor is the reliance on manual research methods. In laboratory synthesis, manual operation leads to inconsistent reproducibility and inadequate efficiency, hindering the evolution of dependable, intelligent automation [3]. The inherent challenges are magnified when complex biological targets demand intricate chemical structures, necessitating multi-step synthetic routes that are labor-intensive and fraught with variables [12].

This manual paradigm is not limited to wet-lab chemistry. In research synthesis (the process of combining findings from multiple studies), 60.3% of practitioners cite time-consuming manual work as their biggest frustration, and 59% specifically identify "reading through data and responses" as the most time-intensive task [13]. This manual bottleneck consumes mental energy that could otherwise be directed toward strategic interpretation and innovation.

Comparative Analysis: Manual vs. Automated Synthesis

The following comparison evaluates manual and automated synthesis across key performance metrics critical to reproducibility and efficiency in a research and development environment.

Table 1: Performance Comparison of Manual vs. Automated Synthesis Methodologies

| Assessment Metric | Manual Synthesis | Automated/AI-Assisted Synthesis |
|---|---|---|
| Reproducibility & Consistency | Prone to variability due to differences in technician skill and technique [3]. | High; robotic execution provides standardized, consistent results [3]. |
| Throughput & Speed | Low; slow, labor-intensive process, a major bottleneck in the DMTA cycle [12]. | High; capable of running hundreds of reactions autonomously (e.g., 688 reactions in 8 days) [3]. |
| Data Integrity & FAIRness | Inconsistent; reliant on manual, often incomplete, lab notebook entries [12]. | High; inherent digital data capture enforces Findable, Accessible, Interoperable, Reusable (FAIR) principles [12]. |
| Synthesis Planning | Relies on chemist intuition and manual literature searches [12]. | AI-driven retrosynthetic analysis proposes diverse and innovative routes [12] [3]. |
| Reaction Optimization | Iterative, time-consuming, and often intuition-driven [12]. | Uses machine learning and closed-loop systems for efficient, data-driven optimization [3]. |
| Resource Utilization | High demand for skilled labor time on repetitive tasks [13]. | Liberates highly-trained chemists from routine tasks to focus on creative problem-solving [3]. |

Table 2: Experimental Outcomes from Documented Automated Synthesis Systems

| Automated System / Platform | Key Experimental Outcome | Implication for Reproducibility & Efficiency |
|---|---|---|
| Mobile Robotic Chemist [3] | Autonomously performed 688 reactions over 8 days to test variables. | Demonstrates unparalleled scalability and endurance for gathering experimental data. |
| Chemputer [3] | Assembled three pharmaceuticals with higher yields and purities than manual synthesis. | Standardizes complex multi-step procedures, ensuring superior and more reliable output. |
| AI-Chemist [3] | Full-cycle platform performing synthesis planning, execution, monitoring, and machine learning. | Creates an integrated, objective R&D workflow minimizing human-induced variability. |
| Closed-Loop Optimization [3] | Machine learning identified optimal conditions for Suzuki-Miyaura coupling reactions. | Systematically and efficiently pinpoints robust, general reaction conditions. |
| Radial Flow Synthesizer [14] | Automated multistep synthesizer with inline NMR and IR monitoring provided stable, reproducible processes. | Enables real-time analysis and feedback, ensuring consistent product quality across runs. |

Experimental Protocols in Automated Synthesis

Protocol: AI-Driven Retrosynthesis and Route Validation

This protocol leverages artificial intelligence to design and validate synthetic routes before physical execution.

- Input Target Molecule: The desired small-molecule structure is provided to a Computer-Assisted Synthesis Planning (CASP) platform in a standardized digital format (e.g., SMILES) [12].

- AI-Powered Retrosynthetic Analysis: The CASP platform, trained on millions of published reactions, performs a recursive disconnection of the target molecule into simpler, commercially available building blocks [12] [3]. Search algorithms like Monte Carlo Tree Search are used to evaluate potential multi-step routes [12].

- Route Feasibility Evaluation: The proposed routes are ranked based on predicted success, cost, and step-count. For difficult transformations, the system may propose screening plate layouts for High-Throughput Experimentation (HTE) to empirically validate route feasibility [12].

- Condition Prediction: Machine learning models (e.g., graph neural networks) predict optimal reaction conditions (solvent, catalyst, temperature) for each synthetic step [12].
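The recursive disconnection described in the steps above can be sketched with a toy planner. Everything in it is hypothetical: the molecule names stand in for SMILES strings, the `TEMPLATES` table stands in for a model trained on published reactions, and the exhaustive depth-first search is a simplified substitute for Monte Carlo Tree Search, which samples routes instead of enumerating them.

```python
# Hypothetical one-step disconnections: product -> alternative precursor sets.
TEMPLATES = {
    "target": [
        ("amide_intermediate", "aryl_boronic_acid"),
        ("late_stage_precursor",),
    ],
    "amide_intermediate": [("carboxylic_acid", "amine")],
    "late_stage_precursor": [("exotic_fragment",)],
}

# Hypothetical catalogue of commercially available building blocks.
BUILDING_BLOCKS = {"aryl_boronic_acid", "carboxylic_acid", "amine"}


def plan_route(molecule, max_depth=5):
    """Return the shortest list of (product, precursors) steps, or None.

    An empty list means the molecule is itself a purchasable building block.
    """
    if molecule in BUILDING_BLOCKS:
        return []
    if max_depth == 0:
        return None
    best = None
    for precursors in TEMPLATES.get(molecule, []):
        steps = [(molecule, precursors)]
        for precursor in precursors:
            sub_route = plan_route(precursor, max_depth - 1)
            if sub_route is None:      # dead end: this disconnection fails
                steps = None
                break
            steps.extend(sub_route)
        # Rank feasible routes by step count, as in the feasibility evaluation.
        if steps is not None and (best is None or len(steps) < len(best)):
            best = steps
    return best


route = plan_route("target")
for product, precursors in route:
    print(f"{product} <= {' + '.join(precursors)}")
```

The second disconnection of `target` is rejected because it ends in a fragment that is neither purchasable nor further disconnectable, which mirrors how a planner discards routes that do not terminate in available building blocks.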

Protocol: Autonomous Robotic Synthesis and Optimization

This protocol outlines a closed-loop workflow for the automated execution and optimization of chemical reactions.

- Digital Recipe Generation: A validated synthetic route is translated into a machine-readable code, specifying commands for the robotic platform [3].

- Automated Reaction Setup: A robotic system prepares reactions by dispensing precise volumes of reagents and solvents from designated storage modules into reaction vessels [3].

- Execution and Inline Monitoring: Reactions are carried out in continuous flow or batch reactors. Inline analytical tools (e.g., NMR, IR, TLC) monitor reaction progress in real-time [14] [3].

- Data Collection and Machine Learning: Outcomes (yield, purity) from each experiment are automatically recorded. An iterative machine learning system uses this data to prioritize and select the subsequent most informative reactions to run, creating a closed-loop optimization cycle [3].
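The closed-loop cycle in the steps above can be sketched as a loop that runs an experiment, records the outcome, and uses all results so far to choose the next conditions. The sketch is deliberately simplified: `simulated_yield` is an invented stand-in for a robotic run with inline analysis, and a plain hill-climbing rule replaces the machine learning model that a real platform would use to select the most informative reactions.

```python
def simulated_yield(temp_c, catalyst_mol_pct):
    """Invented response surface: yield peaks at 80 C and 5 mol% catalyst."""
    penalty = 0.02 * (temp_c - 80) ** 2 + 3.0 * (catalyst_mol_pct - 5) ** 2
    return max(0.0, 90.0 - penalty)


def closed_loop(start, run_experiment, temp_step=10, cat_step=1, budget=30):
    """Hill-climb over (temperature, catalyst loading) within a run budget.

    Returns (best_conditions, best_yield, number_of_experiments_run).
    """
    results = {start: run_experiment(*start)}   # the automatically kept record
    current = start
    while len(results) < budget:
        t, c = current
        neighbours = [
            (t + temp_step, c), (t - temp_step, c),
            (t, c + cat_step), (t, c - cat_step),
        ]
        untested = [cond for cond in neighbours if cond not in results]
        if not untested:
            break
        # Run only as many new experiments as the remaining budget allows.
        for cond in untested[: budget - len(results)]:
            results[cond] = run_experiment(*cond)
        best = max(results, key=results.get)
        if best == current:                      # no neighbour improved: stop
            break
        current = best
    best = max(results, key=results.get)
    return best, results[best], len(results)


best_conditions, best_yield, n_runs = closed_loop((40, 2), simulated_yield)
print(best_conditions, best_yield, n_runs)
```

Because every outcome lands in `results` as it is produced, the same record that drives the next decision also serves as the complete experimental log.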

Diagram 1: From Crisis to Automated Solutions

Diagram 2: Automated Synthesis Workflow

The Scientist's Toolkit: Essential Research Reagent Solutions

The shift to automated and data-driven methodologies relies on a new class of "reagent solutions"—both physical and digital.

Table 3: Key Research Reagent Solutions for Automated Synthesis

| Tool / Solution | Function |
|---|---|
| Computer-Assisted Synthesis Planning (CASP) | AI-powered software that proposes viable synthetic routes for a target molecule via retrosynthetic analysis [12]. |
| Pre-Weighed Building Blocks | Commercially available starting materials, pre-weighed and formatted for direct use, reducing labor and error in reaction setup [12]. |
| MAke-on-DEmand (MADE) Libraries | Vast virtual catalogues of synthesizable building blocks, dramatically expanding accessible chemical space beyond physical inventory [12]. |
| Automated Synthesis Platforms | Integrated robotic systems (e.g., Chemputer, radial synthesizer) that execute chemical synthesis from a digital recipe [3]. |
| Inline Analytical Modules | Instruments like NMR or IR spectrometers integrated into the synthesis platform for real-time reaction monitoring and analysis [14]. |
| Chemical Inventory Management System | Sophisticated software for real-time tracking, secure storage, and regulatory compliance of chemical inventories [12]. |

The credibility crisis in science is not an insurmountable challenge but a powerful driver for innovation. The comparative data and experimental protocols presented herein objectively demonstrate that automated synthesis methodologies outperform manual approaches across critical metrics: they deliver superior reproducibility, higher throughput, and robust data integrity. By adopting these new assessment methodologies and the associated toolkit, researchers and drug development professionals can transform a crisis of confidence into an era of more reliable, efficient, and accelerated scientific discovery.

Reproducibility, the ability to independently verify scientific findings using the original data and methods, serves as a cornerstone of scientific integrity across disciplines. In the social sciences, computational reproducibility is defined as the ability to reproduce results, tables, and figures using available data, code, and materials, a process essential for instilling trust and enabling cumulative knowledge production [15]. However, reproducibility rates remain alarmingly low. Audits in fields like economics suggest that less than half of articles published before 2019 in top journals were fully computationally reproducible [15]. Similar challenges plague preclinical research, where the cumulative prevalence of irreproducible studies exceeds 50%, costing approximately $28 billion annually in the United States alone due to wasted research expenditures [16]. This comparison guide objectively assesses the methodologies and tools for evaluating reproducibility, contrasting manual assessment practices prevalent in social sciences with automated synthesis technologies transforming chemical and drug development research. We provide experimental data and detailed protocols to illuminate the distinct challenges, solutions, and performance metrics characterizing these diverse scientific domains.

Comparative Analysis: Manual Assessment vs. Automated Synthesis

The approaches to ensuring and verifying reproducibility differ fundamentally between domains relying on human-centric manual processes and those utilizing automated systems. The table below summarizes the core characteristics of each paradigm.

Table 1: Core Characteristics of Reproducibility Assessment Approaches

| Feature | Manual Reproducibility Assessment (Social Sciences) | Automated Synthesis (Chemical/Bioimaging) |
|---|---|---|
| Primary Objective | Verify computational results using original data & code [15] | Ensure consistent, reliable synthesis of chemical compounds [17] |
| Typical Process | Crowdsourced attempts; structured, multi-stage review [18] | Integrated, software-controlled robotic workflow [17] |
| Key Tools | Social Science Reproduction Platform (SSRP), OSF preregistration [19] [18] | Robotic arms, liquid handlers, microwave reactors [17] |
| Success Metrics | Rate of successful replication, effect size comparison [19] | Synthesis yield, purity, time efficiency [17] |
| Reported Success Rate | ~62% (for high-profile social science studies) [19] | Near 100% consistency in compound re-synthesis [17] |
| Primary Challenge | Low rates of reproducibility; insufficient incentives [15] [19] | High initial capital cost and technical complexity [20] |
| Economic Impact | $28B/year on irreproducible preclinical research (US) [16] | Market for synthesis instruments growing to USD 486.4M by 2035 [20] |

Manual Reproducibility Assessment in Social Sciences

Experimental Protocols and Workflows

The standard methodology for assessing reproducibility in social sciences involves a structured, collaborative process. The Social Science Reproduction Platform (SSRP) exemplifies this with a four-stage process: Assess, Improve, Review & Collaborate, and Measure [18]. Key to rigorous assessment is the use of pre-registration, where researchers publicly declare their study design and analysis plan on platforms like the Open Science Framework (OSF) before beginning their research to prevent reporting bias [19]. Protocols also demand the use of original materials and the endorsement of replication protocols by the original authors whenever possible [19]. To ensure sufficient statistical power, replication studies often employ sample sizes much larger than the originals; one large-scale project used samples about five times larger than the original studies [19].

The following workflow diagram maps the pathway for a typical manual reproduction attempt.

Supporting Experimental Data

Large-scale replication projects provide robust data on the state of reproducibility in social sciences. One project attempting to replicate 21 high-powered social science experiments from Science and Nature found that only 13 (62%) showed significant evidence consistent with the original hypothesis [19]. Furthermore, the replication studies on average revealed effect sizes that were about 50% smaller than those reported in the original studies [19]. Prediction markets, where researchers bet on replication outcomes, have proven highly accurate, correctly forecasting the results of 18 out of 21 replications, suggesting the community possesses tacit knowledge about which findings are robust [19]. A systematic review of management studies placed their replication prevalence rate almost exactly between those of psychology and economics, though method and data transparency are often medium to low, rendering many replication attempts impossible [21].
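The headline figures above follow from simple proportions, and with only 21 studies they carry considerable uncertainty. The sketch below recomputes the replication rate and prediction-market accuracy from the counts in the text and adds a 95% Wilson score interval. The interval is an illustration added here and is not reported in [19].

```python
from math import sqrt


def wilson_interval(successes, n, z=1.96):
    """Wilson score interval for a binomial proportion (z=1.96 for ~95%)."""
    p = successes / n
    denom = 1 + z ** 2 / n
    centre = (p + z ** 2 / (2 * n)) / denom
    half_width = z * sqrt(p * (1 - p) / n + z ** 2 / (4 * n ** 2)) / denom
    return centre - half_width, centre + half_width


attempted = 21          # replication attempts reported in the text
replicated = 13         # replications consistent with the original hypothesis
forecast_correct = 18   # outcomes correctly forecast by prediction markets

replication_rate = replicated / attempted
forecast_accuracy = forecast_correct / attempted
low, high = wilson_interval(replicated, attempted)

print(f"Replication rate:  {replication_rate:.0%}")
print(f"Forecast accuracy: {forecast_accuracy:.0%}")
print(f"95% Wilson interval for the replication rate: {low:.0%} to {high:.0%}")
```

The width of the interval is a reminder that a rate estimated from 21 studies describes that sample far better than it describes the social science literature as a whole.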

Automated Synthesis in Chemical Research

Experimental Protocols and Workflows

In contrast to social sciences, reproducibility in chemical research for drug development is increasingly addressed through integrated automation. The core protocol involves an integrated solid-phase combinatorial chemistry system created using commercial and customized robots [17]. These systems are designed to optimize reaction parameters, including varying temperature, shaking, microwave irradiation, and handling different washing solvents for separation and purification [17]. A central computer software controls the entire system through RS-232 serial ports, executing a user-defined command sequence that coordinates all robotic components [17]. This includes a 360° Robot Arm (RA), a Capper–Decapper (CAP), a Split-Pool Bead Dispenser (SPBD), a Liquid Handler (LH) with a heating/cooling rack, and a Microwave Reactor (MWR) [17]. The functional reliability of the automated process is confirmed through systematic, repeated synthesis and comparison using techniques like molecular fingerprinting and Uniform Manifold Approximation and Projection (UMAP) [17].
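To give a sense of what a fingerprint-based comparison involves, the sketch below computes pairwise Tanimoto similarity between molecular fingerprints represented as sets of "on" bit positions. Tanimoto similarity is an assumption chosen because it is a common metric for fingerprints; the source does not name the metric used in [17]. The compound names and bit sets are invented, and in practice the fingerprints would come from a cheminformatics toolkit.

```python
from itertools import combinations


def tanimoto(fp_a, fp_b):
    """Tanimoto (Jaccard) similarity between two sets of fingerprint bits."""
    a, b = set(fp_a), set(fp_b)
    if not a and not b:
        return 1.0          # two empty fingerprints are treated as identical
    return len(a & b) / len(a | b)


# Hypothetical fingerprints: each set lists the bit positions that are set.
fingerprints = {
    "compound_a": {3, 17, 42, 77, 101, 256},
    "compound_b": {3, 17, 42, 77, 101, 256},
    "compound_c": {3, 17, 42, 77, 101, 300},
}

pairwise = {
    (x, y): tanimoto(fingerprints[x], fingerprints[y])
    for x, y in combinations(sorted(fingerprints), 2)
}

for (x, y), score in pairwise.items():
    print(f"{x} vs {y}: {score:.3f}")
```

A similarity matrix of this kind is the sort of input that a projection method such as UMAP reduces to two dimensions so that the structure of a compound library can be inspected visually.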

The automated synthesis process for creating a library of nerve-targeting agents is detailed below.

Supporting Experimental Data

Experimental data demonstrate the efficacy of automated synthesis for enhancing reproducibility. In one study, 20 nerve-specific contrast agents (BMB derivatives) were systematically synthesized three times using the automated robotic system [17]. The entire library was synthesized automatically within 72 hours, a 40% reduction from the 120 hours required for manual parallel synthesis at the same scale [17]. All 20 library members were obtained with an average overall yield of 29% and an average library purity of 51%, with greater than 70% purity for 7 compounds [17]. When scaled up, the automated large-batch synthesis (50 mg resins) of specific compounds such as BMB-1 was completed in just 46 hours with 92% purity and 55% yield, essentially matching the quality of manual synthesis (92% purity, 56% yield) with markedly improved speed and consistency [17]. The global market for automated peptide synthesis instruments is projected to grow from USD 229.5 million in 2025 to USD 486.4 million by 2035, reflecting accelerated adoption driven by the demand for reproducible, efficient peptide production [20].

Table 2: Experimental Results: Automated vs. Manual Synthesis of BMB-1 [17]

| Synthesis Method | Time | Reported Purity | Reported Yield |
|---|---|---|---|
| Automated Small Batch (10 mg resins) | 72 hours | 68% ± 11% | 36% |
| Manual Synthesis (10 mg resins) | 120 hours | 92% | 56% |
| Automated Large Batch (50 mg resins) | 46 hours | 92% | 55% |

The Scientist's Toolkit: Essential Research Reagents and Solutions

The following table details key resources and instruments central to reproducibility efforts in both social science and biomedical domains.

Table 3: Essential Research Reagent Solutions for Reproducibility

| Item Name | Field of Use | Function & Explanation |
|---|---|---|
| Social Science Reproduction Platform (SSRP) | Social Science | A platform that crowdsources and catalogs attempts to assess and improve the computational reproducibility of social science research [15] [18]. |
| Open Science Framework (OSF) | Social Science | A free, open-source platform for supporting research and enabling transparency. Used for preregistering studies and sharing data, materials, and code [19]. |
| Peptide Synthesizer | Chemical/Drug Development | An automated platform that coordinates solid-phase synthesis reactions, enabling parallel synthesis of multiple peptide sequences with high reproducibility [20]. |
| Liquid Handler (LH) | Chemical/Drug Development | A robotic system that automates the aspirating and dispensing of liquids and solvents with high precision, a key component of integrated chemistry systems [17]. |
| Microwave Reactor (MWR) | Chemical/Drug Development | A reactor that uses microwave irradiation to accelerate chemical reactions, providing precise control over reaction parameters like temperature and time [17]. |
| Purification Equipment (HPLC) | Chemical/Drug Development | High-Performance Liquid Chromatography systems are used to separate and purify synthesized compounds, which is critical for ensuring product quality and consistency [20]. |
| Prediction Markets | Social Science | A tool using market mechanisms to aggregate researchers' beliefs about the likelihood that published findings will replicate, helping prioritize replication efforts [19]. |

The pursuit of reproducibility follows distinctly different paths in the realms of social science and experimental biomedical research. Social science relies on manual, community-driven efforts centered on transparency, open data, and the replication of computational analyses, yet faces significant challenges in incentive structures and consistently low success rates. In contrast, chemical and drug development research is increasingly adopting fully automated, integrated robotic systems that bake reproducibility into the synthesis process itself, achieving high consistency at a significant capital cost. Both fields, however, are innovating to improve the reliability of scientific findings. Social sciences are turning to preregistration and platforms like the SSRP, while the life sciences are driving a robust market for automated synthesis instruments. Understanding these domain-specific challenges, protocols, and tools is the first step for researchers and drug development professionals in systematically addressing the critical issue of reproducibility.

The Critical Role of FAIR Principles in Modern Reproducibility

The modern scientific landscape faces a significant challenge known as the "reproducibility crisis," where findings from one study cannot be consistently replicated in subsequent research, leading to wasted resources and delayed scientific progress. In data-intensive fields like drug development, this problem is particularly acute due to the volume, complexity, and rapid creation speed of scientific data [22]. In response to these challenges, the FAIR Guiding Principles were formally published in 2016 as a concise and measurable set of guidelines to enhance the reuse of digital research objects [23]. FAIR stands for Findable, Accessible, Interoperable, and Reusable—four foundational principles that emphasize machine-actionability, recognizing that humans increasingly rely on computational support to manage complex data [22]. This framework provides a systematic approach for researchers, scientists, and drug development professionals to assess and improve their data management practices, creating a more robust foundation for reproducible science.

The FAIR Principles Demystified: A Framework for Assessment

The FAIR Principles provide a structured framework for evaluating data management practices. The following table breaks down each component and its significance for reproducibility.

| FAIR Principle | Core Requirement | Impact on Reproducibility |

|---|---|---|

| Findable | Data and metadata are assigned persistent identifiers, rich metadata is provided, and both are registered in searchable resources [22]. | Enables other researchers to locate the exact dataset used in original research, the first step to replicating an experiment. |

| Accessible | Data and metadata are retrievable using standardized, open protocols, with clear authentication and authorization procedures [22] [24]. | Ensures that once found, data can be reliably accessed now and in the future for re-analysis. |

| Interoperable | Data and metadata use formal, accessible, and broadly applicable languages and vocabularies [22] [24]. | Allows data from different sources to be integrated and compared, enabling meta-analyses and validation across studies. |

| Reusable | Data and metadata are richly described with multiple attributes, including clear licenses and detailed provenance [22]. | Provides the context needed for a researcher to understand and correctly reuse data in a new setting. |

A key differentiator of the FAIR principles is their specific emphasis on enhancing the ability of machines to automatically find and use data, in addition to supporting its reuse by individuals [23]. This machine-actionability is crucial for managing the scale of modern research data and for enabling automated workflows that are foundational to reproducible computational science.

Manual vs. Automated FAIR Implementation: A Comparative Assessment

The path to implementing FAIR principles can vary significantly, from manual, researcher-led processes to automated, infrastructure-supported workflows. The following comparison outlines the performance, scalability, and reproducibility outcomes of these different approaches, drawing on current evidence from the field.

Comparison of Manual and Automated FAIRification Approaches

| Assessment Criteria | Manual / Human-Driven Approach | Automated / Tool-Supported Approach |

|---|---|---|

| Typical Workflow | Researcher-led documentation, ad-hoc file organization, personal data management. | Use of structured templates, metadata standards, and repository-embedded curation tools. |

| Metadata Completeness | Highly Variable: Prone to incomplete or inconsistent annotation due to reliance on individual diligence [25]. | Superior: Enforced by system design; tools like the ISA framework and the CEDAR workbench ensure consistent (meta)data collection [25]. |

| Evidence from Case Studies | Evaluation of Gene Expression Omnibus (GEO) data found 34.5% of samples missing critical metadata (e.g., sex), severely restricting reuse [25]. | Frameworks based on the Investigation, Study, Assay (ISA) model support structured deposition, enhancing data completeness for downstream analysis [25]. |

| Scalability & Cost | Low Scalability, High Hidden Cost: Labor-intensive, does not scale with data volume, leading to significant time investment and increased risk of costly irreproducibility [25]. | High Scalability, Initial Investment Required: Requires development of tools and infrastructure, but maximizes long-term return on research investments by minimizing obstacles between data producers and data scientists [25]. |

| Interoperability | Limited: Custom terminology and formats create data silos, hindering integration with other datasets [25]. | High: Relies on community standards and controlled vocabularies (e.g., DSSTox identifiers), enabling reliable data integration [25]. |

Experimental Protocol for Assessing FAIRness

A growing body of research employs systematic methodologies to evaluate the current state of FAIR compliance and identify gaps. The protocol below is synthesized from recent commentaries and assessments in the environmental health sciences [25].

- Repository Selection and Data Retrieval: Identify a major public data repository relevant to the field (e.g., Gene Expression Omnibus for genomic data). Define a cohort of datasets based on specific criteria (e.g., in vivo studies on a class of chemicals).

- Metadata Audit Framework: Develop a checklist of critical metadata fields required for reuse. This framework is often based on existing reporting standards like the Tox Bio Checklist (TBC) or the Minimum Information about a Sequencing Experiment (MINSEQE) [25].

- Quantitative Gap Analysis: Systematically audit each dataset in the cohort against the checklist. Quantify the percentage of datasets missing each type of critical metadata.

- Impact Assessment on Reuse: Correlate the absence of specific metadata elements with the practical inability to perform common integrative analyses, such as a meta-analysis or validation of computational models.
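The quantitative gap analysis step can be sketched in a few lines of Python. This is a minimal illustration, not the procedure used in [25]: the field names in `REQUIRED_FIELDS`, the placeholder strings treated as missing, and the four example records are all hypothetical.

```python
from collections import Counter

# Hypothetical checklist of critical metadata fields (illustrative only).
REQUIRED_FIELDS = ("organism", "sex", "tissue", "dose", "exposure_duration")

# Assumption: these placeholder strings count as "missing" for the audit.
PLACEHOLDERS = {"", "na", "n/a", "not provided"}


def is_missing(value) -> bool:
    """Treat None, empty strings, and common placeholders as missing."""
    if value is None:
        return True
    return isinstance(value, str) and value.strip().lower() in PLACEHOLDERS


def metadata_gap_report(records, required_fields=REQUIRED_FIELDS):
    """Return the percentage of records missing each required field."""
    if not records:
        raise ValueError("records must not be empty")
    missing = Counter()
    for record in records:
        for name in required_fields:
            if is_missing(record.get(name)):
                missing[name] += 1
    return {name: 100.0 * missing[name] / len(records) for name in required_fields}


# Toy cohort of four sample records (invented for this example).
records = [
    {"organism": "Mus musculus", "sex": "female", "tissue": "liver",
     "dose": "10 mg/kg", "exposure_duration": "24 h"},
    {"organism": "Mus musculus", "sex": "", "tissue": "liver",
     "dose": "10 mg/kg", "exposure_duration": None},
    {"organism": "Rattus norvegicus", "sex": "N/A", "tissue": "kidney",
     "dose": "5 mg/kg", "exposure_duration": "48 h"},
    {"organism": "Rattus norvegicus", "sex": "male", "tissue": "kidney",
     "exposure_duration": "48 h"},
]

report = metadata_gap_report(records)
for name, pct in report.items():
    print(f"{name:<18} missing in {pct:5.1f}% of records")
```

In a real audit the checklist would come from the chosen reporting standard, and the records from the repository's metadata export rather than a hand-written list.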

A Researcher's Toolkit for Implementing FAIR Principles

Transitioning to FAIR-compliant data management requires a set of conceptual and practical tools. The following table details key solutions and resources that facilitate this process.

Research Reagent Solutions for FAIR Data

| Solution / Resource | Function in FAIRification Process | Relevance to Reproducibility |

|---|---|---|

| Persistent Identifiers (DOIs) | Provides a permanent, unique link to a specific dataset in a repository [24]. | Ensures the exact data used in a publication can be persistently identified and retrieved, a cornerstone of reproducibility. |

| Metadata Standards & Checklists (e.g., MIAME, MINSEQE) | Provide community-agreed frameworks for the minimum information required to interpret and reuse data [25]. | Prevent ambiguity and missing critical experimental context, allowing others to replicate the experimental conditions. |

| Structured Metadata Tools (e.g., ISA framework, CEDAR) | Software workbenches that help researchers create and manage metadata using standardized templates [25]. | Captures metadata in a consistent, machine-actionable format, overcoming the limitations of free-text README files. |

| Controlled Vocabularies & Ontologies | Standardized terminologies (e.g., ITIS for taxonomy, SI units) for describing data [24]. | Ensures that concepts are defined uniformly, enabling accurate data integration and comparison across different studies. |

| Trusted Data Repositories | Online archives that provide persistent identifiers, stable access, and often curation services [24]. | Preserves data long-term and provides the infrastructure for making it Findable and Accessible, as required by funders [25]. |

Visualizing the FAIR Assessment Workflow

The following diagram illustrates the logical process of evaluating a dataset's readiness for reuse, contrasting the outcomes of FAIR versus non-FAIR compliant data management practices.

The critical role of the FAIR Principles in modern reproducibility is undeniable. They provide a structured, measurable framework that shifts data management from an ancillary task to an integral component of the scientific method. As evidenced by ongoing research and funder policies, the scientific community is moving toward a future where machine-actionable data is the norm, not the exception [25] [23]. This transition is essential for overcoming the reproducibility crisis, particularly in high-stakes fields like drug development. The comparative analysis reveals that while manual data management is inherently fragile and prone to error, automated and tool-supported approaches based on the FAIR principles offer a scalable, robust path toward ensuring that our valuable research data can be found, understood, and reused to validate findings and accelerate discovery. For researchers and institutions, the adoption of FAIR is no longer just a best practice but a fundamental requirement for conducting credible, reproducible, and impactful science in the 21st century.

Understanding Analytical Multiplicity in Data Science

Analytical multiplicity represents a fundamental challenge to reproducibility across scientific disciplines, particularly in data science and pharmaceutical research. This phenomenon occurs when researchers have substantial flexibility in selecting among numerous defensible analytical pathways to address the same research question. When combined with selective reporting, this flexibility can systematically increase false-positive results, inflate effect sizes, and create overoptimistic measures of predictive performance [26].

The consequences are far-reaching: in preclinical research alone, approximately $28 billion is spent annually on findings that cannot be replicated [26]. This reproducibility crisis erodes trust in scientific evidence and poses particular challenges for drug development, where decisions based on non-replicable findings can lead to costly late-stage failures. Understanding and addressing analytical multiplicity is therefore essential for researchers, scientists, and drug development professionals seeking to produce robust, reliable findings.

What is Analytical Multiplicity? A Conceptual Framework

The multiple comparisons problem arises when many statistical tests are performed on the same dataset, with each test carrying its own chance of a Type I error (false positive) [27]. As the number of tests increases, so does the overall probability of making at least one false positive discovery. This probability is measured through the family-wise error rate (FWER) [27].

In technical terms, if we perform \(m\) independent comparisons, each at a per-comparison significance level \(\alpha_{\text{per comparison}}\), the family-wise error rate \(\bar{\alpha}\) is given by:

\[\bar{\alpha} = 1 - (1 - \alpha_{\text{per comparison}})^m\]

This means that for 100 tests conducted at \(\alpha_{\text{per comparison}} = 0.05\), the probability of at least one false positive rises to approximately 99.4%, far exceeding the nominal 5% error rate for a single test [27].
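The growth of the family-wise error rate with the number of tests is easy to check numerically. The short sketch below evaluates the formula for a few values of \(m\); the function name is an illustrative choice, and the calculation assumes independent tests, as the formula does.

```python
def family_wise_error_rate(alpha_per_comparison: float, m: int) -> float:
    """Probability of at least one false positive across m independent tests."""
    if not 0.0 <= alpha_per_comparison <= 1.0:
        raise ValueError("alpha_per_comparison must lie in [0, 1]")
    if m < 0:
        raise ValueError("m must be non-negative")
    return 1.0 - (1.0 - alpha_per_comparison) ** m


# Evaluate the formula for an increasing number of tests at alpha = 0.05.
for m in (1, 10, 20, 100):
    print(f"m = {m:>3}: FWER = {family_wise_error_rate(0.05, m):.3f}")
```

With 100 tests the result is about 0.994, matching the figure quoted above.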

Table 1: Outcomes When Testing Multiple Hypotheses

| | Null Hypothesis is True (H₀) | Alternative Hypothesis is True (Hₐ) | Total |

|---|---|---|---|

| Test Declared Significant | V (False Positives) | S (True Positives) | R |

| Test Declared Non-Significant | U (True Negatives) | T (False Negatives) | m-R |

| Total | m₀ | m-m₀ | m |
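Using the symbols from the table, the two error rates that multiple-testing procedures aim to control can be written compactly. These are the standard textbook definitions rather than formulas quoted from [27]; the \(\max(R, 1)\) term is a common convention that sets the ratio to zero when no test is declared significant.

```latex
\[
\text{FWER} = P(V \ge 1),
\qquad
\text{FDR} = E\!\left[\frac{V}{\max(R, 1)}\right]
\]
```

FWER control guards against even a single false positive, while FDR control tolerates some false positives as long as their expected share among the \(R\) significant results stays small.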

Experimental Comparison: Manual vs. Automated Synthesis in Radiopharmaceutical Development

Study Design and Methodologies

Recent research in gallium-68 radiopharmaceutical development provides a compelling case study for examining analytical multiplicity in practice. Studies have directly compared manual and automated synthesis methods for compounds like 68Ga-PSMA-11, DOTA-TOC, and NOTA-UBI [28] [29].

Manual Synthesis Protocol:

- Small-scale initial experiments repeated multiple times to assess impact of pH, incubation temperature and time, buffer type and volume

- Optimization of bifunctional chelators to determine optimal radiometal-chelator-ligand complex

- Evaluation of robustness and repeatability through up-scaling reagents and radioactivity

Automated Synthesis Protocol:

- Use of cassette modules (e.g., GAIA, Scintomics GRP) housed in hot cells

- Implementation of radical scavengers to reduce radiolysis

- Standardized processes compliant with Good Manufacturing Practice (GMP) guidelines [29]

Comparative Performance Data

Table 2: Comparison of Manual vs. Automated Synthesis Methods for 68Ga Radiopharmaceuticals

| Performance Metric | Manual Synthesis | Automated Synthesis | Significance |

|---|---|---|---|

| Process Reliability | Variable results between operators and batches | High degree of robustness and repeatability | Automated methods more robust [28] |

| Radiation Exposure | Increased operator exposure | Markedly reduced operator exposure | Important for workplace safety [29] |

| GMP Compliance | Challenging to standardize | Facilitates reliable compliance | Critical for clinical application [29] |

| Radiolysis Control | Less controlled | Requires radical scavengers but better controlled | Automated methods more consistent [29] |

| Inter-batch Variability | Higher variability | Reduced variability through standardization | Improved product quality [28] |

Research across disciplines reveals that analytical multiplicity arises from multiple decision points throughout the research process [26]. The framework below illustrates how these sources of uncertainty create a "garden of forking paths" in data analysis.

Addressing Multiplicity: Statistical Solutions and Methodological Approaches

Multiple Testing Corrections

To control the inflation of false positive rates, several statistical techniques have been developed:

- Bonferroni Correction: The simplest method, dividing the significance threshold \(\alpha\) by the number of tests \(m\), giving \(\alpha_{\text{per comparison}} = \alpha/m\) [27]

- Holm-Bonferroni Method: A sequentially rejective procedure that offers more power while controlling family-wise error rate

- False Discovery Rate (FDR) Control: Less stringent than FWER control, FDR methods limit the expected proportion of false discoveries among significant results [27]
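The three corrections can be compared on a small example. The sketch below is a plain-Python illustration with invented p-values. It uses the Benjamini-Hochberg step-up procedure as the FDR method, which is one common choice and an assumption here, since the text does not name a specific procedure.

```python
def bonferroni(p_values, alpha=0.05):
    """Reject H0 where p <= alpha / m."""
    m = len(p_values)
    return [p <= alpha / m for p in p_values]


def holm(p_values, alpha=0.05):
    """Holm step-down: compare the k-th smallest p with alpha / (m - k + 1)."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    reject = [False] * m
    for rank, i in enumerate(order):  # rank 0 is the smallest p-value
        if p_values[i] <= alpha / (m - rank):
            reject[i] = True
        else:
            break  # stop at the first non-rejection
    return reject


def benjamini_hochberg(p_values, alpha=0.05):
    """BH step-up: reject the k smallest p-values, where k is the largest
    rank satisfying p_(k) <= k * alpha / m."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    cutoff = 0
    for rank, i in enumerate(order, start=1):
        if p_values[i] <= rank * alpha / m:
            cutoff = rank
    reject = [False] * m
    for i in order[:cutoff]:
        reject[i] = True
    return reject


# Invented p-values from five hypothetical tests.
p_values = [0.001, 0.012, 0.014, 0.039, 0.2]

print("Bonferroni:", bonferroni(p_values))
print("Holm:      ", holm(p_values))
print("BH (FDR):  ", benjamini_hochberg(p_values))
```

On these values Bonferroni rejects one hypothesis, Holm three, and Benjamini-Hochberg four, which illustrates the ordering in stringency described in the list above. For production analyses an established statistics library is preferable to hand-written routines.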

Robustness Assessment Frameworks

Emerging approaches directly address analytical multiplicity by assessing robustness across multiple analytical pathways:

- Specification Curve Analysis: Testing all reasonable analytical choices and presenting the full distribution of results

- Multiverse Analysis: Systematically mapping and testing all possible analytical decisions in a "multiverse" of analyses

- Vibration of Effects: Examining how effect sizes vary across different model specifications in epidemiology [26]

- Sensitivity Analysis: Long-standing tradition in climatology and ecology assessing robustness to alternative model assumptions [26]
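The common idea behind these frameworks, running the same question through every defensible analysis and reporting the full spread of results, can be sketched as a small grid. Everything below is hypothetical: the two toy samples, the outlier rule, the transformations, and the estimators stand in for the much larger decision space of a real study.

```python
import itertools
import math
import statistics

# Toy measurements for two groups (invented for this example).
treated = [5.1, 6.3, 5.8, 7.2, 6.0, 14.5]
control = [4.9, 5.2, 5.5, 5.0, 6.1, 5.3]

# Each dictionary is one analytical decision with its defensible options.
OUTLIER_RULES = {
    "keep_all": lambda xs: xs,
    "drop_above_10": lambda xs: [x for x in xs if x <= 10],
}
TRANSFORMS = {"raw": lambda x: x, "log": math.log}
ESTIMATORS = {"mean_diff": statistics.mean, "median_diff": statistics.median}


def run_multiverse(treated, control):
    """Compute the group difference under every combination of choices."""
    results = []
    grid = itertools.product(
        OUTLIER_RULES.items(), TRANSFORMS.items(), ESTIMATORS.items()
    )
    for (o_name, o_fn), (t_name, t_fn), (e_name, e_fn) in grid:
        t = [t_fn(x) for x in o_fn(treated)]
        c = [t_fn(x) for x in o_fn(control)]
        results.append({
            "outliers": o_name,
            "transform": t_name,
            "estimator": e_name,
            "effect": e_fn(t) - e_fn(c),
        })
    # Sorting by effect size gives the ordering used in a specification curve.
    return sorted(results, key=lambda r: r["effect"])


results = run_multiverse(treated, control)
for r in results:
    print(f"{r['outliers']:<14} {r['transform']:<4} {r['estimator']:<12} "
          f"effect = {r['effect']:.3f}")
```

Reporting all eight estimates, rather than only the largest, makes visible how much the conclusion depends on choices such as the handling of a single extreme value.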

Essential Research Reagent Solutions for Robust Synthesis

Table 3: Key Materials and Reagents in Gallium-68 Radiopharmaceutical Synthesis

| Reagent/Material | Function | Application Notes |

|---|---|---|

| 68Ge/68Ga Generators | Source of gallium-68 radionuclide | Typically using 0.6 M HCl for elution [29] |

| NOTA Chelators | Bifunctional chelators for peptide binding | Forms stable complexes with gallium-68 [29] |

| Sodium Acetate Buffer | pH control during radiolabelling | Common buffer for 68Ga-labelling [29] |

| HEPES Buffer | Alternative buffering system | Used in specific automated synthesis protocols [29] |

| Radical Scavengers | Reduce radiolytic degradation | Essential for automated synthesis to control impurities [29] |

| UBI Peptide Fragments | Targeting vectors for infection imaging | Particularly fragments 29-41 and 31-38 [29] |

Experimental Workflow: From Manual Optimization to Automated Production

The development pathway for robust analytical methods typically progresses from manual optimization to automated production, as illustrated in the workflow below.

Analytical multiplicity presents both a challenge and an opportunity for data science and drug development research. While the flexibility in analytical approaches can lead to non-replicable findings if misused, consciously addressing this multiplicity through robust methodological practices enhances research credibility.

The comparison between manual and automated synthesis methods demonstrates how standardization reduces variability and improves reproducibility. Automated approaches provide higher robustness and repeatability while reducing operator radiation exposure [28] [29]. However, the initial manual optimization phase remains essential for understanding parameter sensitivities and establishing optimal conditions.

For researchers navigating this complex landscape, transparency about analytical choices, implementation of multiple testing corrections when appropriate, and systematic robustness assessments across reasonable analytical alternatives offer a path toward more reproducible and reliable scientific findings. By acknowledging and explicitly addressing analytical multiplicity, the scientific community can strengthen the evidentiary basis for critical decisions in drug development and beyond.

Implementing Manual and Automated Assessment Frameworks

Within the critical discourse on research reproducibility, manual assessment methodologies represent the established paradigm for evaluating scientific quality and credibility. These human-centric processes, primarily peer review and expert inspection, serve as a fundamental gatekeeper before research enters the scientific record. This guide objectively compares these two manual approaches, framing them within a broader thesis on reproducibility assessment. While automated synthesis technologies are emerging, manual assessment remains the cornerstone for validating scientific rigor, methodological soundness, and the overall contribution of research, particularly in fields like drug development where decisions directly impact health outcomes [30] [31]. The following sections provide a detailed comparison of peer review and expert inspection, supported by experimental data, protocols, and analytical workflows.

Core Principles and Methodologies

High-Quality Peer Review

Peer review is a formal process where field experts evaluate a manuscript before publication. Its effectiveness rests on foundational principles including the disclosure of conflicts of interest, the application of deep scientific expertise, and the provision of constructive feedback aimed at strengthening the manuscript [30].

A reviewer's responsibilities are systematic and thorough, encompassing several key areas [30]:

- Validation of Data and Conclusions: Meticulously examining data to ensure conclusions are well-supported and identifying overinterpretations.

- Evaluation of Key Dimensions: Assessing the manuscript's data integrity, novelty, potential impact, and methodological soundness.

- Identification of Improvement Areas: Providing specific, actionable suggestions to enhance clarity, robustness, and impact.

- Impartial Evaluation: Ensuring the assessment is based solely on scientific content, free from bias related to the author's institution or reputation.

The process follows a structured approach to ensure each part of the manuscript is rigorously evaluated [30]:

- Title and Abstract: Assessing accuracy and conciseness.

- Introduction: Evaluating the background, rationale, and clarity of the research purpose.

- Methods: Scrutinizing ethical soundness, appropriateness of methodology, statistical approach, and ensuring sufficient detail for reproducibility.

- Results: Checking for transparent, unambiguous, and non-redundant data presentation.

- Discussion: Verifying the accuracy of interpretations, acknowledgment of limitations, and whether conclusions are proportionate to the data.

- Figures and Tables: Ensuring visual elements are accurate, non-redundant, and understandable without referring to the main text.

Expert Inspection

Expert inspection is a broader, often more flexible, manual assessment technique where one or more specialists examine a research product, which can include protocols, data, code, or published manuscripts. Unlike the standardized peer review for journals, expert inspections are often tailored to a specific objective, such as auditing a laboratory's procedures, validating an analytical pipeline, or assessing the reproducibility of a specific claim. The methodology is typically less prescribed and more dependent on the inspector's proprietary expertise and the inspection's goal, which may focus on technical verification, fraud detection, or compliance with specific standards (e.g., Good Clinical Practice in drug development).

Comparative Analysis: Peer Review vs. Expert Inspection

The following tables synthesize the core characteristics, advantages, and disadvantages of peer review and expert inspection, providing a direct comparison for researchers.

Table 1: Core Characteristics and Methodological Comparison

| Feature | Peer Review | Expert Inspection |

|---|---|---|

| Primary Objective | Quality control and validation for publication in scientific literature [30]. | Targeted verification, audit, or validation for specific reproducibility concerns. |

| Typical Output | Publication decision (accept/reject/revise) and constructive feedback for authors [30]. | Inspection report, audit findings, or technical recommendation. |

| Formality & Structure | High; follows a structured, section-by-section process dictated by journal guidelines [30]. | Variable; can be highly structured or adaptive, based on the inspection's purpose. |

| Anonymity | Can be single-anonymized, double-anonymized, or transparent [32]. | Typically not anonymous; the inspector's identity is known. |

| Scope of Assessment | Comprehensive: title, abstract, introduction, methods, results, discussion, figures, and references [30]. | Can be comprehensive but is often narrowly focused on a specific component (e.g., data, code, a specific method). |

Table 2: Performance and Practical Comparison

| Aspect | Peer Review | Expert Inspection |

|---|---|---|

| Key Advantages | - Provides foundational credibility to published research [30]. - Offers authors constructive feedback, improving the final paper [30]. - Multiple review models (e.g., transparent, transferrable) can enhance the process [32]. | - Can be highly focused and in-depth on specific technical aspects. - Potentially faster turnaround for targeted issues. - Flexibility in methodology allows for customized assessment protocols. |

| Key Challenges | - Time-intensive for reviewers, leading to potential delays [30]. - Susceptible to conscious and unconscious biases [30] [32]. - Often lacks formal recognition or reward for reviewers [30]. | - Findings can be highly dependent on a single expert's opinion. - Lack of standardization can affect consistency and generalizability. - Potentially high cost for engaging top-tier specialists. |

| Impact on Reproducibility | Acts as a primary filter; focuses on methodological clarity and statistical soundness to ensure others can, in principle, replicate the work [30]. | Provides a secondary, deeper dive to actively verify reproducibility or diagnose failures in specific areas. |

Experimental Protocols for Manual Assessment

Protocol for a Standard Peer Review Experiment

To empirically compare the effectiveness of different peer review models, one could implement the following experimental protocol:

- Objective: To measure the effect of double-anonymized versus transparent peer review on the quality and constructiveness of reviewer comments.

- Materials: A set of pre-publication manuscripts (e.g., from a preprint server) with similar subject matter and methodological complexity.

- Procedure:

  - Random Allocation: Randomly assign each manuscript to one of two groups: double-anonymized review or transparent review (where identities of authors and reviewers are known to each other).

  - Reviewer Recruitment: Engage a pool of qualified reviewers, ensuring a mix of career stages and expertise.

  - Review Execution: Conduct the review process according to the assigned model. Reviewers in both groups use a standardized form to assess originality, methodological soundness, and clarity [32].

  - Data Collection: Collect the following metrics:

    - Time taken to complete the review.

    - Score on a standardized "constructiveness scale" for feedback.

    - Number of methodological flaws identified.

    - Post-review survey of reviewer and author satisfaction.

  - Data Synthesis: Perform a qualitative synthesis of feedback tone and a quantitative analysis of the collected metrics to compare the outcomes between the two groups [33].
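The random allocation step is worth making reproducible in its own right. A minimal sketch, assuming manuscripts are identified by simple string IDs and that a fixed seed is recorded alongside the study protocol (both assumptions of this example):

```python
import random


def allocate_manuscripts(manuscript_ids, seed=2024):
    """Shuffle manuscripts with a fixed seed and split them into two arms."""
    rng = random.Random(seed)  # local generator, so global state is untouched
    shuffled = list(manuscript_ids)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return {
        "double_anonymized": sorted(shuffled[:half]),
        "transparent": sorted(shuffled[half:]),
    }


# Ten hypothetical manuscript identifiers.
manuscript_ids = [f"MS-{i:03d}" for i in range(1, 11)]
groups = allocate_manuscripts(manuscript_ids)

for arm, members in groups.items():
    print(f"{arm}: {members}")
```

Because the generator is seeded, rerunning the script reproduces the same assignment, so the allocation itself can be audited later.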

Protocol for an Expert Inspection Experiment

To evaluate the efficacy of expert inspection in identifying data integrity issues, the following protocol can be used:

- Objective: To determine the accuracy and efficiency of expert inspection in detecting seeded errors in a dataset and its accompanying analysis code.

- Materials: A synthetic dataset with known, seeded errors (e.g., data entry duplicates, miscodings, misapplied statistical tests).

- Procedure:

  - Sample Preparation: Create multiple versions of the dataset and code, each with a different set and number of seeded errors.

  - Expert Recruitment: Engage a cohort of domain experts and methodologists as inspectors.

  - Inspection Task: Provide each expert with a dataset and code package. Their task is to identify and document all potential errors within a fixed time frame.

  - Data Collection: Record:

    - The number of true errors correctly identified (true positives).

    - The number of correct analyses flagged as errors (false positives).

    - The time taken to complete the inspection.

  - Data Synthesis: Calculate standard performance metrics for classification, such as Accuracy, F-measure, and Area Under the ROC Curve (AUC), to quantitatively compare the performance of different experts or inspection methodologies [34].
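Scoring one inspector against the seeded errors can be sketched as follows. The error labels are hypothetical, and the example covers only precision, recall, and the F-measure, which follow directly from the recorded true and false positives together with the known list of seeded errors.

```python
def inspection_metrics(seeded_errors: set, flagged: set) -> dict:
    """Compare an inspector's flagged issues with the known seeded errors."""
    tp = len(seeded_errors & flagged)   # seeded errors that were found
    fp = len(flagged - seeded_errors)   # correct analyses flagged as errors
    fn = len(seeded_errors - flagged)   # seeded errors that were missed
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f_measure = 2 * precision * recall / denom if denom else 0.0
    return {
        "true_positives": tp,
        "false_positives": fp,
        "false_negatives": fn,
        "precision": precision,
        "recall": recall,
        "f_measure": f_measure,
    }


# Hypothetical labels for four seeded errors and one inspector's report.
seeded = {"dup_row_17", "miscoded_sex_42", "wrong_test_anova", "unit_mismatch_dose"}
flagged = {"dup_row_17", "wrong_test_anova", "suspect_outlier_88"}

metrics = inspection_metrics(seeded, flagged)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}" if isinstance(value, float) else f"{name}: {value}")
```

Accuracy and AUC need more than the protocol records as written: accuracy requires a count of true negatives (a defined set of items that could have been flagged), and AUC requires a confidence score or ranking for each flagged item.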

The Scientist's Toolkit: Essential Reagents for Manual Assessment

The following table details key "research reagents" – in this context, methodological tools and resources – that are essential for conducting rigorous manual assessments.

Table 3: Key Research Reagent Solutions for Manual Assessment

| Item | Function in Manual Assessment |

|---|---|

| Structured Data Extraction Tables | Standardized forms or sheets used to systematically extract data from studies during systematic reviews or meta-analyses, ensuring consistency and reducing omission [35]. |

| Standardized Appraisal Checklists | Tools like the Jadad score for clinical trials or similar quality scales used to uniformly assess the methodological quality and risk of bias in individual studies [35]. |

| Statistical Software (R, Python) | Platforms used to perform complex statistical re-analyses, calculate pooled effect sizes in meta-analyses, and generate funnel plots to assess publication bias [35]. |

| Reference Management Software | Applications essential for managing and organizing citations, which is crucial during the literature retrieval and synthesis phases of a review or inspection [31]. |

| Digital Lab Notebooks & Code Repositories | Platforms that provide a transparent and version-controlled record of the research process, enabling inspectors and reviewers to verify analyses and methodological steps. |

Workflow and Pathway Diagrams

The following diagram illustrates the logical workflow of a typical peer review process, from submission to final decision.

This next diagram outlines a high-level workflow for planning and executing an expert inspection, highlighting its more flexible and targeted nature.

Inconsistent data collection practices across biomedical, clinical, behavioral, and social sciences present a fundamental challenge to research reproducibility [36]. These inconsistencies arise from multiple factors, including variability in assessment translations across languages, differences in how constructs are operationalized, selective inclusion of questionnaire components, and inconsistencies in versioning across research teams and time points [36]. Even minor modifications to survey instruments—such as alterations in branch logic, response scales, or scoring calculations—can significantly impact data integrity, particularly in longitudinal studies [36]. The consequences are profound: in clinical settings, slight deviations in assessment methods can lead to divergent patient outcomes, while in research, such inconsistencies undermine study integrity, bias conclusions, and pose significant challenges for meta-analyses and large-scale collaborative studies [36].

The reproducibility crisis extends across scientific disciplines. A review of urology publications from 2014-2018 found that only 4.09% provided access to raw data, 3.09% provided access to materials, and a mere 0.58% provided links to protocols [37]. None of the studied publications provided analysis scripts, highlighting the severe deficiencies in reproducible research practices [37]. This context underscores the critical need for standardized approaches to data collection that can ensure consistency across studies, research teams, and timepoints.

ReproSchema: A Schema-Driven Solution

Conceptual Framework and Architecture

ReproSchema is an innovative ecosystem designed to standardize survey-based data collection through a schema-centric framework, a library of reusable assessments, and computational tools for validation and conversion [36]. Unlike conventional survey platforms that primarily offer graphical user interface-based survey creation, ReproSchema provides a structured, modular approach for defining and managing survey components, enabling interoperability and adaptability across diverse research settings [36]. At its core, ReproSchema employs a hierarchical schema organization with three primary levels, each described by its own schema [38]:

- Protocol: The highest level that defines a set of assessments or questionnaires to be included in a given study

- Activity: Describes a given questionnaire or assessment, including all its items

- Item: Represents individual questions from an assessment, including question text, response format, and UI specifications

This structured approach ensures consistency across studies, supports version control, and enhances data comparability and integration [36]. The ReproSchema model was initially derived from the CEDAR Metadata Model but has evolved significantly to accommodate the needs of neuroimaging and other clinical and behavioral protocols [38]. Key innovations include alignment with schema.org and NIDM, support for structured nested elements, integration with Git/GitHub for persistent URIs, addition of computable elements, and user interface elements that guide data collection implementation [38].
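The three-level hierarchy can be pictured with a small data model. The sketch below is purely conceptual: the class and field names are invented for illustration and are not the ReproSchema JSON-LD vocabulary or the reproschema-py API, and the example questionnaire is made up.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass(frozen=True)
class Item:
    """A single question with its text and allowed responses."""
    id: str
    question: str
    response_options: Tuple[str, ...]


@dataclass
class Activity:
    """One questionnaire or assessment, made up of items."""
    id: str
    version: str
    items: List[Item] = field(default_factory=list)


@dataclass
class Protocol:
    """The set of activities included in a given study."""
    id: str
    version: str
    activities: List[Activity] = field(default_factory=list)

    def item_paths(self) -> List[str]:
        """List every item as 'activity/item' for a quick overview."""
        return [f"{a.id}/{i.id}" for a in self.activities for i in a.items]


# Hypothetical two-item mood questionnaire inside a hypothetical study protocol.
scale = ("Not at all", "Several days", "More than half the days", "Nearly every day")
mood_check = Activity(
    id="mood_check",
    version="1.0.0",
    items=[
        Item("q1", "Little interest or pleasure in doing things?", scale),
        Item("q2", "Feeling down or hopeless?", scale),
    ],
)
study = Protocol(id="demo_study", version="0.1.0", activities=[mood_check])

print(study.item_paths())
```

Keeping a version on both the activity and the protocol mirrors the point made above: a change to one question can be tracked without silently changing every study that reuses the assessment.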

Core Components and Workflow

The ReproSchema ecosystem integrates a foundational schema with six essential supporting components [36]:

- reproschema-library: A library of standardized, reusable assessments formatted in JSON-LD

- reproschema-py: A Python package that supports schema creation, validation, and conversion to formats compatible with existing data collection platforms

- reproschema-ui: A user interface designed for interactive survey deployment

- reproschema-backend: A back-end server for secure survey data submission

- reproschema-protocol-cookiecutter: A protocol template that enables researchers to create and customize research protocols

- reproschema-server: A Docker container that integrates the UI and back end

The typical ReproSchema workflow involves multiple stages that ensure standardization and reproducibility [36]. Researchers can begin with various input formats, including PDF/DOC questionnaires (convertible using LLMs), existing assessments from the ReproSchema library, or REDCap CSV exports. The reproschema-protocol-cookiecutter tool then provides a structured process for creating and publishing a protocol on GitHub with organized metadata and version control. Protocols are stored in GitHub repositories with version-controlled URIs ensuring persistent access. The reproschema-ui provides a browser-based interface for interactive survey deployment, while survey responses are stored in JSON-LD format with embedded URIs linking to their sources. Finally, reproschema-py tools facilitate output conversion into standardized formats including NIMH Common Data Elements, Brain Imaging Data Structure phenotype format, and REDCap CSV format.

Figure 1: ReproSchema Workflow for Standardized Data Collection

Comparative Evaluation: ReproSchema vs. Alternative Platforms

Methodology for Platform Assessment

To objectively assess ReproSchema's capabilities, researchers conducted a systematic comparison against 12 survey platforms [36] [39]. The evaluation employed two distinct frameworks:

- FAIR Principles Assessment: Each platform was evaluated against 14 criteria based on the Findability, Accessibility, Interoperability, and Reusability principles [36]

- Survey Functionality Assessment: Platforms were assessed for their support of 8 key survey functionalities essential for comprehensive data collection [36]

The compared platforms included: Center for Expanded Data Annotation and Retrieval (CEDAR), formr, KoboToolbox, Longitudinal Online Research and Imaging System (LORIS), MindLogger, OpenClinica, Pavlovia, PsyToolkit, Qualtrics, REDCap (Research Electronic Data Capture), SurveyCTO, and SurveyMonkey [36]. This diverse selection ensured representation of platforms used across academic, clinical, and commercial research contexts.

Experimental Results and Comparative Performance

ReproSchema demonstrated distinctive capabilities in the comparative analysis, meeting all 14 FAIR criteria, an achievement not matched by any other platform in the evaluation [36]. The results highlight ReproSchema's positioning as a framework designed specifically for standardized, reproducible data collection rather than merely a data collection tool.

Table 1: FAIR Principles Compliance Across Platforms

| Platform | Findability | Accessibility | Interoperability | Reusability | Total FAIR Criteria Met |
|---|---|---|---|---|---|
| ReproSchema | 4/4 | 4/4 | 3/3 | 3/3 | 14/14 |
| CEDAR | 3/4 | 3/4 | 3/3 | 2/3 | 11/14 |
| REDCap | 2/4 | 3/4 | 2/3 | 2/3 | 9/14 |
| Qualtrics | 2/4 | 2/4 | 2/3 | 2/3 | 8/14 |
| SurveyMonkey | 1/4 | 2/4 | 1/3 | 1/3 | 5/14 |
| PsyToolkit | 2/4 | 3/4 | 2/3 | 2/3 | 9/14 |
| OpenClinica | 3/4 | 3/4 | 2/3 | 2/3 | 10/14 |
| KoboToolbox | 2/4 | 3/4 | 2/3 | 2/3 | 9/14 |

In terms of functional capabilities, ReproSchema supported 6 of 8 key survey functionalities, with particular strengths in standardized assessments, multilingual support, and automated scoring [36]. While some commercial platforms supported a broader range of functionalities, ReproSchema's unique value lies in its structured, schema-driven approach that ensures consistency and reproducibility across implementations.

Table 2: Survey Functionality Support Across Platforms

| Functionality | ReproSchema | REDCap | Qualtrics | SurveyMonkey | OpenClinica | KoboToolbox |
|---|---|---|---|---|---|---|
| Standardized Assessments | Yes | Partial | Partial | No | Partial | No |
| Multilingual Support | Yes | Yes | Yes | Yes | Yes | Yes |
| Multimedia Integration | Yes | Yes | Yes | Yes | Partial | Yes |
| Data Validation | Yes | Yes | Yes | Limited | Yes | Yes |
| Advanced Branching | Yes | Yes | Yes | Limited | Yes | Limited |
| Automated Scoring | Yes | Limited | Limited | No | Limited | No |
| Real-time Collaboration | No | Yes | Yes | Yes | Yes | Limited |
| Mobile Offline Support | No | Yes | Limited | Limited | Yes | Yes |
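As a quick cross-check of the "6 of 8" figure, the short sketch below transcribes the cells of Table 2 and counts, per platform, how many functionalities are fully supported and how many are only partially supported. Treating "Partial" and "Limited" as one category is a simplifying assumption made here, not a rule taken from [36].

```python
FUNCTIONALITIES = [
    "Standardized Assessments", "Multilingual Support", "Multimedia Integration",
    "Data Validation", "Advanced Branching", "Automated Scoring",
    "Real-time Collaboration", "Mobile Offline Support",
]

# Cell values transcribed from Table 2, in the order of FUNCTIONALITIES.
SUPPORT = {
    "ReproSchema": ["Yes", "Yes", "Yes", "Yes", "Yes", "Yes", "No", "No"],
    "REDCap": ["Partial", "Yes", "Yes", "Yes", "Yes", "Limited", "Yes", "Yes"],
    "Qualtrics": ["Partial", "Yes", "Yes", "Yes", "Yes", "Limited", "Yes", "Limited"],
    "SurveyMonkey": ["No", "Yes", "Yes", "Limited", "Limited", "No", "Yes", "Limited"],
    "OpenClinica": ["Partial", "Yes", "Partial", "Yes", "Yes", "Limited", "Yes", "Yes"],
    "KoboToolbox": ["No", "Yes", "Yes", "Yes", "Limited", "No", "Limited", "Yes"],
}

# Count full support and partial support separately for each platform.
full = {p: levels.count("Yes") for p, levels in SUPPORT.items()}
partial = {p: sum(v in ("Partial", "Limited") for v in levels) for p, levels in SUPPORT.items()}

# Functionalities that ReproSchema does not support at all.
reproschema_gaps = [
    name for name, level in zip(FUNCTIONALITIES, SUPPORT["ReproSchema"]) if level == "No"
]

for platform in SUPPORT:
    print(f"{platform:<13} full={full[platform]} partial={partial[platform]}")
print("ReproSchema gaps:", reproschema_gaps)
```

Counting this way, ReproSchema and REDCap each fully support six functionalities, but REDCap's remaining two are partially covered while ReproSchema's are absent, which is consistent with the observation that some platforms cover a broader range.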

Experimental Protocols and Use Cases

Implementation Methodology

Implementing ReproSchema follows a structured protocol that leverages its core components [40]. The process begins with installing the ReproSchema Python package (pip install reproschema), then creating a new protocol using the cookiecutter template [40]. The schema development follows ReproSchema's hierarchical structure:

Item Creation Protocol:
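As an illustration of the item level of this hierarchy, the sketch below builds a single-choice item as a Python dictionary and serializes it to JSON. The context URL, the `reproschema:Item` type, and the key names (`prefLabel`, `question`, `ui`, `responseOptions`) are assumptions based on the general ReproSchema JSON-LD layout and should be confirmed against the release in use; the helper `make_item` is hypothetical and is not part of reproschema-py.

```python
import json

# Assumption: context URL and key names follow the general ReproSchema
# JSON-LD layout; confirm them against the release you target.
CONTEXT_URL = "https://raw.githubusercontent.com/ReproNim/reproschema/main/contexts/reproschema"


def make_item(item_id: str, question: str, labels: list[str]) -> dict:
    """Build a single-choice item whose options are scored 0..n-1 (hypothetical helper)."""
    return {
        "@context": CONTEXT_URL,
        "@type": "reproschema:Item",
        "@id": item_id,
        "prefLabel": {"en": item_id},
        "question": {"en": question},
        "ui": {"inputType": "radio"},
        "responseOptions": {
            "valueType": "xsd:integer",
            "choices": [
                {"name": {"en": label}, "value": value}
                for value, label in enumerate(labels)
            ],
        },
    }


item = make_item("sleep_quality", "How would you rate your sleep?", ["Poor", "Fair", "Good"])
print(json.dumps(item, indent=2))
```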

Validation Protocol:

Researchers validate schemas using the command-line interface: reproschema validate my_protocol.jsonld [40]. The validation process checks schema compliance, required fields, response option completeness, and URI persistence, ensuring all components meet ReproSchema specifications before deployment [40].
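To give a feel for two of these checks (required fields and response option completeness), here is a hand-rolled sketch that inspects an item dictionary and returns a list of problems. It is not the reproschema validator and does not check schema compliance or URI persistence; the list of required keys and the item layout are assumptions made for illustration.

```python
# Assumed set of required keys; the real validator works from the schema itself.
REQUIRED_KEYS = ("@context", "@type", "@id", "question")


def check_item(item: dict) -> list[str]:
    """Return human-readable problems found in an item (empty list means none)."""
    problems = [
        f"missing required field: {key}" for key in REQUIRED_KEYS if not item.get(key)
    ]
    choices = item.get("responseOptions", {}).get("choices", [])
    for index, choice in enumerate(choices):
        for key in ("name", "value"):
            # Use 'in' rather than truthiness so that a value of 0 is accepted.
            if key not in choice:
                problems.append(f"choice {index} is missing '{key}'")
    return problems


good_item = {
    "@context": "https://example.org/context",  # placeholder URL
    "@type": "reproschema:Item",
    "@id": "sleep_quality",
    "question": {"en": "How would you rate your sleep?"},
    "responseOptions": {"choices": [{"name": {"en": "Poor"}, "value": 0}]},
}
# Same item without a question, plus a second choice that lacks a value.
bad_item = {k: v for k, v in good_item.items() if k != "question"}
bad_item["responseOptions"] = {
    "choices": [{"name": {"en": "Poor"}, "value": 0}, {"name": {"en": "Fair"}}]
}

print(check_item(good_item))
print(check_item(bad_item))
```

Returning a list of problems rather than raising on the first one lets a researcher fix every issue in a protocol in a single pass.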

Applied Use Cases Demonstrating Versatility

Three research use cases illustrate ReproSchema's practical implementation and versatility [36]:

NIMH-Minimal Mental Health Assessments: ReproSchema standardized essential mental health survey Common Data Elements required by the National Institute of Mental Health, ensuring consistency across research implementations while maintaining flexibility for study-specific adaptations [36].

Longitudinal Studies (ABCD & HBCD): The framework systematically tracked changes in longitudinal data collection for the Adolescent Brain Cognitive Development (ABCD) and HEALthy Brain and Child Development (HBCD) studies, maintaining assessment comparability across multiple timepoints while transparently documenting protocol modifications [36].

Neuroimaging Best Practices Checklist: Researchers converted a 71-page neuroimaging best practices guide (the Committee on Best Practices in Data Analysis and Sharing Checklist) into an interactive checklist, enhancing usability while maintaining comprehensive documentation [36].

The Researcher's Toolkit: Essential Components for Implementation

Table 3: Research Reagent Solutions for ReproSchema Implementation

| Component | Type | Function | Access Method |
|---|---|---|---|
| reproschema-py | Software Tool | Python package for schema creation, validation, and format conversion | pip install reproschema [40] |
| reproschema-library | Data Resource | Library of >90 standardized, reusable assessments in JSON-LD format | GitHub repository [36] |
| reproschema-ui | Interface | User interface for interactive survey deployment | Docker container or Node.js application [36] |
| reproschema-protocol-cookiecutter | Template | Structured template for creating and customizing research protocols | Cookiecutter template [36] |
| JSON-LD | Data Format | Primary format combining JSON with Linked Data for semantic relationships | JSON-LD serialization [40] |
| LinkML | Modeling Language | Linked data modeling language for defining and validating schemas | YAML schema definitions [38] |
| SHACL | Validation | Shapes Constraint Language for validating data quality against schema | SHACL validation constraints [40] |
| GitHub | Infrastructure | Version control and persistent URI service for protocols and assessments | Git repository hosting [36] |

Comparative Strengths and Limitations

Advantages of Schema-Driven Standardization

ReproSchema's schema-driven approach offers several distinct advantages over conventional survey platforms. Meeting all 14 FAIR criteria in the comparison indicates strong findability, accessibility, interoperability, and reusability of both survey instruments and collected data [36]. Built-in version control through Git integration enables precise tracking of assessment modifications across study versions and research sites, addressing a critical limitation of traditional platforms [36]. Furthermore, ReproSchema's hierarchical organization, with persistent URIs for all elements, supports long-term data provenance and semantic interoperability, allowing researchers to trace data points back to the exact survey instruments that produced them [38].
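One way to picture how a version-controlled URI supports this kind of provenance is the sketch below, which builds a GitHub raw-content URL pinned to a tag and attaches it to a response record. The owner, repository, tag, and path are hypothetical, and the record's field names are illustrative rather than ReproSchema's actual response vocabulary.

```python
def pinned_uri(owner: str, repo: str, ref: str, path: str) -> str:
    """Build a raw GitHub URL for a file at a fixed tag or commit (ref)."""
    return f"https://raw.githubusercontent.com/{owner}/{repo}/{ref}/{path.lstrip('/')}"


# Hypothetical repository and tag, used only for illustration.
item_uri = pinned_uri(
    "example-lab", "sleep-study-protocol", "v1.2.0",
    "activities/sleep/items/sleep_quality",
)

# Illustrative response record: the answer carries the exact item version it came from.
response = {"isAbout": item_uri, "value": 2}
print(response)
```

Because the tag is part of the URI, a later edit to the item produces a different URI (for example under `v1.3.0`), so responses collected before and after the change remain distinguishable.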

The framework's compatibility with existing research workflows represents another significant advantage, with conversion tools supporting export to REDCap CSV, FHIR standards, NIMH Common Data Elements, and Brain Imaging Data Structure phenotype formats [36]. This interoperability reduces adoption barriers and facilitates integration with established research infrastructures. Additionally, the library of pre-validated assessments (>90 instruments) accelerates study setup while ensuring measurement consistency across research teams and disciplines [36].

Limitations and Implementation Considerations

Despite its strengths, ReproSchema presents certain limitations that researchers must consider. The platform currently lacks robust mobile offline support and real-time collaboration features available in some commercial alternatives [36]. The learning curve associated with JSON-LD and linked data concepts may present initial barriers for research teams accustomed to graphical survey interfaces, requiring investment in technical training [41]. Additionally, while ReproSchema provides superior standardization and reproducibility features, teams requiring rapid, simple survey deployment for non-longitudinal studies might find traditional platforms more immediately practical [36].

ReproSchema represents a paradigm shift in research data collection, moving from isolated, platform-specific surveys to structured, schema-driven instruments that prioritize reproducibility from inception. Its perfect adherence to FAIR principles and support for critical survey functionalities position it as a robust solution for addressing the reproducibility crisis in scientific research [36]. The framework's demonstrated success in standardizing mental health assessments, tracking longitudinal changes, and converting complex guidelines into interactive tools highlights its practical utility across diverse research contexts [36].

For the research community, adopting schema-driven approaches like ReproSchema promises significant long-term benefits: reduced data harmonization efforts, enhanced cross-study comparability, improved meta-analysis reliability, and ultimately, more efficient translation of research findings into clinical practice. As research increasingly emphasizes transparency and reproducibility, tools like ReproSchema that embed these principles into the data collection process itself will become essential components of the scientific toolkit.

In the rigorous fields of drug development and scientific research, the reproducibility of an analysis is as critical as its outcome. As large language models (LLMs) are increasingly used to automate data science tasks, a central challenge emerges: their stochastic and opaque nature can compromise the reliability of the generated analyses [42]. Unlike mathematical problems with a single correct answer, data science is inherently open-ended and often admits multiple defensible analytical paths, which makes transparency and reproducibility essential for trust and verification [42] [43]. To address this, the Analyst-Inspector framework provides a statistically grounded, automated method for evaluating and ensuring the reproducibility of LLM-generated data science workflows [42] [43]. This guide explores how this framework integrates with modern AI agent frameworks, offering researchers a robust model for assessing their utility in mission-critical domains.

The Reproducibility Challenge in AI-Driven Science

The ability to independently replicate results is a cornerstone of the scientific method. In synthetic chemistry, for instance, irreproducible methods waste time, money, and resources, often due to assumptions of knowledge or undocumented details in procedures [44]. Similarly, in AI-generated data analysis, the problem is twofold: the inherent variability of LLM outputs and the "garden of forking paths" in data science, where different, equally justifiable modeling strategies can lead to distinct conclusions [43].

Manual verification of LLM-generated code is labor-intensive and requires significant expertise, creating a scalability bottleneck [42] [43]. The analyst-inspector framework addresses this by automating the evaluation of the underlying workflow—the structured sequence of reasoning steps and analytical choices—rather than just the final code or output [43]. This shift is crucial for establishing the transparency required in fields like pharmaceutical research, where high-stakes decisions are based on analytical findings.

The Analyst-Inspector Framework: A Primer

Grounded in classical statistical principles of sufficiency and completeness, the analyst-inspector framework evaluates whether a workflow contains all necessary information (sufficiency) without extraneous details (completeness) for independent replication [43].
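For readers less familiar with the statistical terms, the classical definitions being borrowed can be stated as follows, for data $X$ with distribution $P_\theta$ and a statistic $T = T(X)$. Reading the workflow as playing the role of $T$ and the full analysis as $X$ is an interpretive gloss on the analogy, not a formal claim taken from [43].

$$
T \text{ is sufficient for } \theta \iff P_\theta\big(X = x \mid T(X) = t\big) \text{ does not depend on } \theta
$$

$$
T \text{ is complete} \iff \Big( \mathbb{E}_\theta\big[g(T)\big] = 0 \;\; \forall \theta \;\implies\; P_\theta\big(g(T) = 0\big) = 1 \;\; \forall \theta \Big) \text{ for every measurable } g
$$

Informally, sufficiency says that nothing relevant is lost by keeping only $T$, while completeness rules out non-trivial functions of $T$ that carry no information about $\theta$.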

Core Mechanism and Workflow

The framework operates through a structured interaction between two AI models:

- The Analyst LLM: Generates a complete data science solution, including both the executable code and a natural language workflow describing the analytical steps, rationale, and key decisions [43].

- The Inspector LLM: An independent model that uses only the workflow description (without the analyst's code) to attempt a reproduction of the analysis. The goal is to generate functionally equivalent code that arrives at the same conclusion [43].

A successful reproduction indicates that the original workflow was sufficiently detailed and clear, minimizing reliance on implicit assumptions or model-specific knowledge. The following diagram illustrates this process.
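As a rough illustration in code, the interaction for a single task might be sketched as below. The two models are stand-in functions rather than real LLM calls, the names (`Solution`, `is_reproduced`, the toy analyst and inspectors) are hypothetical, and passing the task statement to the inspector alongside the workflow is an assumption. The sketch also compares only final answers, whereas [43] additionally considers functional equivalence of the code.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Solution:
    workflow: str  # natural-language description of steps and decisions
    code: str      # analyst's executable code (never shown to the inspector)
    answer: str    # final answer obtained by running the analyst's code


def is_reproduced(
    task: str,
    analyst: Callable[[str], Solution],
    inspector: Callable[[str, str], str],
) -> bool:
    """Return True if the inspector reaches the analyst's answer from the workflow alone."""
    solution = analyst(task)
    # Only the task and the workflow cross over; solution.code is withheld.
    inspector_answer = inspector(task, solution.workflow)
    return inspector_answer.strip().lower() == solution.answer.strip().lower()


def toy_analyst(task: str) -> Solution:
    return Solution(
        workflow="Drop rows with missing x, then report the mean of x to 1 decimal.",
        code="print(round(df['x'].dropna().mean(), 1))",
        answer="4.0",
    )


def faithful_inspector(task: str, workflow: str) -> str:
    return "4.0"  # stands in for code regenerated from the workflow, then run


def divergent_inspector(task: str, workflow: str) -> str:
    return "3.5"  # e.g. a different handling of missing values


TASK = "What is the mean of column x?"
print(is_reproduced(TASK, toy_analyst, faithful_inspector))   # True
print(is_reproduced(TASK, toy_analyst, divergent_inspector))  # False
```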

Quantitative Evaluation in Practice

In a large-scale evaluation of this framework, researchers tested 15 different analyst-inspector LLM pairs across 1,032 data analysis tasks from three public benchmarks [43]. The study quantified reproducibility by measuring how often the inspector could produce functionally equivalent code and the same final answer as the analyst, based solely on the workflow.
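The aggregate measure described here can be sketched as a per-pair success rate: the share of tasks on which the inspector reproduced the analyst's result. The model names and outcomes below are invented toy data, not results from [43], and the helper name is hypothetical.

```python
from collections import defaultdict


def reproducibility_rates(records):
    """Map each (analyst, inspector) pair to its fraction of reproduced tasks.

    records: iterable of (analyst_name, inspector_name, reproduced) tuples,
    one per task, where reproduced is a bool.
    """
    counts = defaultdict(lambda: [0, 0])  # pair -> [reproduced, total]
    for analyst, inspector, reproduced in records:
        pair = (analyst, inspector)
        counts[pair][0] += int(reproduced)
        counts[pair][1] += 1
    return {pair: hits / total for pair, (hits, total) in counts.items()}


# Toy outcomes for two hypothetical analyst-inspector pairs.
RECORDS = [
    ("model-A", "model-B", True),
    ("model-A", "model-B", True),
    ("model-A", "model-B", False),
    ("model-A", "model-B", True),
    ("model-B", "model-A", True),
    ("model-B", "model-A", False),
]

rates = reproducibility_rates(RECORDS)
for (analyst, inspector), rate in rates.items():
    print(f"analyst={analyst} inspector={inspector} rate={rate:.2f}")
```

Keeping the rate per ordered pair, rather than pooling all tasks, makes it possible to see whether a given analyst's workflows are harder to reproduce regardless of which inspector reads them.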

The table below summarizes key findings on how different prompting strategies impacted the reproducibility and accuracy of analyses generated by various LLMs.

Table 1: Impact of Prompting Strategies on LLM Reproducibility and Accuracy (Adapted from [43])

| Prompting Strategy | Core Principle | Effect on Reproducibility | Effect on Accuracy |

|---|---|---|---|

| Standard Prompting | Baseline instruction to solve the task. | Served as a baseline for comparison. | Served as a baseline for comparison. |