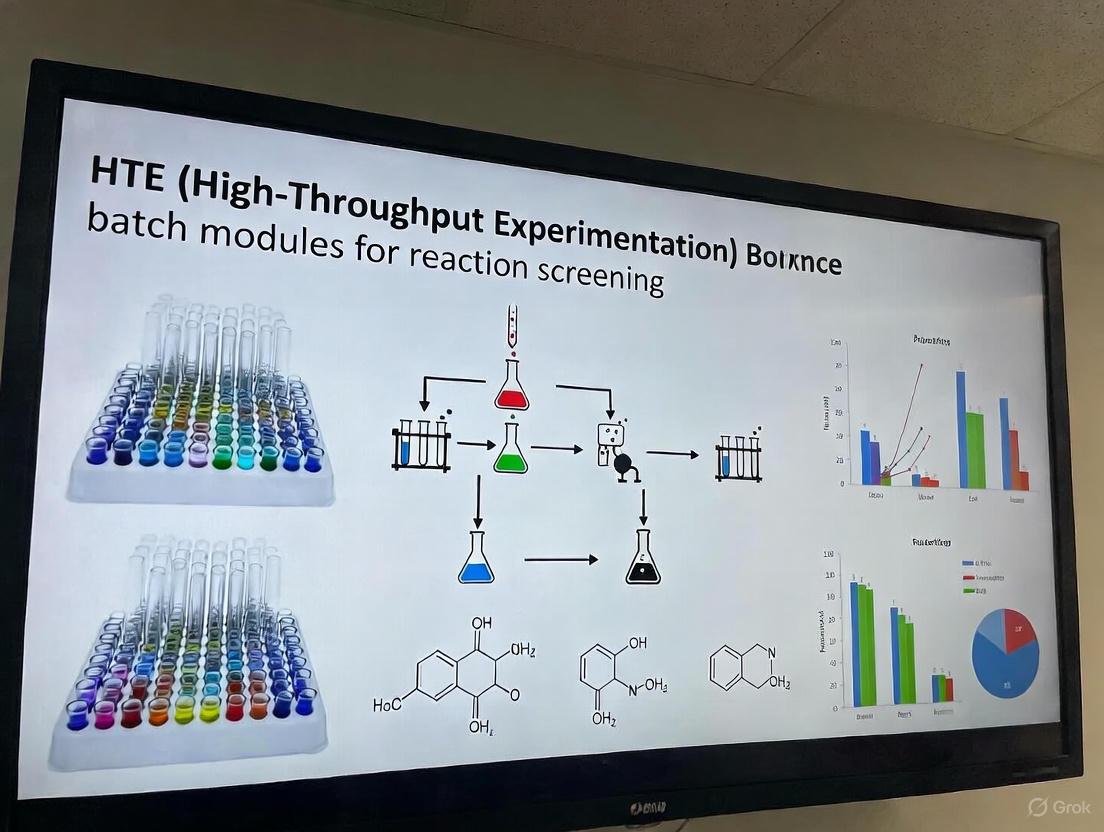

HTE Batch Modules for Reaction Screening: A Complete Guide for Accelerated Drug Discovery


Abstract

This article provides a comprehensive overview of High-Throughput Experimentation (HTE) batch modules for chemical reaction screening, tailored for researchers, scientists, and drug development professionals. It covers the foundational principles of HTE batch systems, explores their practical applications in optimizing diverse reactions like Buchwald-Hartwig amination and photoredox chemistry, details advanced troubleshooting and machine-learning-driven optimization strategies, and offers a critical validation against alternative continuous flow approaches. The scope is designed to equip scientists with the knowledge to implement and leverage HTE batch platforms for faster, more efficient discovery and process development in biomedical research.

What are HTE Batch Modules? Core Principles and System Components

Defining High-Throughput Experimentation (HTE) in Chemical Synthesis

High-Throughput Experimentation (HTE) is a technique that enables the parallel execution of large arrays of chemically diverse reactions while requiring significantly less effort and material per experiment compared to traditional methods [1]. In modern organic chemistry and drug discovery, HTE has become a core foundation for reaction discovery, optimization, and understanding reaction scope [2]. This approach allows researchers to systematically interrogate reactivity across diverse chemical spaces, accelerating the development of synthetic methodologies and the identification of optimal conditions for challenging transformations.

The power of HTE lies in its ability to pose comprehensive questions about chemical reactivity and obtain detailed answers through rationally designed experimental arrays. By examining permutations of reaction components including catalysts, ligands, solvents, and reagents, researchers can rapidly identify optimal conditions while simultaneously developing a deeper understanding of how each component influences reaction outcomes [1].

Key Applications and Significance in Drug Discovery

HTE has transformed modern synthetic chemistry, particularly in pharmaceutical research where it addresses critical challenges:

- Reaction Discovery and Optimization: HTE is equally powerful for optimizing individual steps in total synthesis and driving discovery of novel methodology [1]. It enables rapid identification of preferred catalysts, reagents, and solvents for given transformations.

- Material-Efficient Screening: The miniaturization inherent in HTE (typically 0.1-1.0 mg per reaction) allows evaluation of broad condition arrays with limited amounts of precious compounds [2] [1].

- Comprehensive Reaction Understanding: Large arrays explicitly examine combinations of experimental factors, revealing patterns and relationships that would remain hidden with traditional experimentation [1].

- Accelerated Medicinal Chemistry: HTE tools allow project teams to "fail fast," directing them to pursue productive synthetic routes before substantial time and resources are invested [2].

The significance of HTE is particularly evident in drug development, where it has become standard practice in leading pharmaceutical companies. Over 80% of the top 10 largest pharma companies utilize HTE approaches, demonstrating its critical role in modern drug discovery pipelines [3].

Experimental Design and Workflow

Rational Experimental Design

Effective HTE requires carefully constructed experimental arrays that maximize information gain while conserving resources. Unlike traditional experimentation that tests small numbers of conditions sequentially, HTE employs systematic approaches:

- Factor Selection: Key reaction parameters (catalyst, ligand, solvent, base, temperature) are identified based on literature analysis and mechanistic understanding [2] [1].

- Chemical Space Coverage: Solvents and reagents are selected to maximize breadth of chemical space examined, using numerical parameters like dielectric constant and dipole moment to ensure diversity [1].

- Control Inclusion: Arrays include negative controls and experimental replicates to validate results and assess reproducibility [1].

- Dimensional Balancing: When constraints exist, the most influential factors receive the largest array dimensions, while minor factors are assigned smaller dimensions [1].

End-User Plate Systems

A sophisticated HTE implementation involves "end-user plates" – pre-prepared arrays of catalysts and reagents stored under appropriate conditions. Domainex's platform exemplifies this approach [2]:

Table 1: End-User Plate Specifications and Applications

| Plate Type | Reaction Scale | Key Advantages | Ideal Use Cases |
|---|---|---|---|
| μL plate | 0.1-0.5 mg substrate | Minimal material consumption; High-density screening | Precious intermediates; Early discovery |
| mL plate | 1-5 mg substrate | Easier handling; Direct scalability | Route scouting; Process development |

These systems provide significant efficiency: a 24-well Suzuki-Miyaura coupling plate tests 6 palladium pre-catalysts with 2 bases and 2 solvents, delivering comprehensive condition screening with less than one hour of lab work [2].
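The 6 × 2 × 2 design on the Suzuki-Miyaura plate fills exactly 24 wells. The short Python sketch below generates such a full-factorial layout and assigns it row by row to a 4 × 6 grid (rows A to D, columns 1 to 6). The pre-catalyst labels Pd-1 to Pd-6 and the 4 × 6 geometry are illustrative assumptions, not Domainex's actual plate map.

```python
from itertools import product
from string import ascii_uppercase

# Hypothetical placeholders for the six Pd pre-catalysts on the plate.
PRECATALYSTS = [f"Pd-{i}" for i in range(1, 7)]
BASES = ["K3PO4", "Cs2CO3"]
SOLVENTS = ["t-AmOH/H2O", "1,4-dioxane/H2O"]


def full_factorial_layout(factors, n_rows=4, n_cols=6):
    """Map every combination of factor levels to a well name (A1, A2, ...).

    Wells are filled row by row; raises if the design does not fit the plate.
    """
    combos = list(product(*factors))
    if len(combos) > n_rows * n_cols:
        raise ValueError(f"{len(combos)} conditions exceed {n_rows * n_cols} wells")
    layout = {}
    for i, combo in enumerate(combos):
        row, col = divmod(i, n_cols)
        layout[f"{ascii_uppercase[row]}{col + 1}"] = combo
    return layout


plate = full_factorial_layout([PRECATALYSTS, BASES, SOLVENTS])
print(len(plate))   # 24
print(plate["A1"])  # ('Pd-1', 'K3PO4', 't-AmOH/H2O')
```

Because `itertools.product` varies the last factor fastest, neighbouring wells differ first in solvent, then in base; reordering the factor list changes which variable runs along each row.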

HTE Workflow Process

The complete HTE process involves multiple interconnected steps from experimental design to data analysis and decision-making [3]:

Figure: HTE Workflow Process

Case Study: Suzuki-Miyaura Cross-Coupling Optimization

Experimental Protocol

Objective: Identify optimal conditions for Suzuki-Miyaura cross-coupling of challenging aryl chlorides with boronic acids.

Plate Design: 24-well format with 6 palladium pre-catalysts, 2 bases (K₃PO₄ and Cs₂CO₃), and 2 solvents (t-AmOH/H₂O and 1,4-dioxane/H₂O) [2].

Procedure:

- Plate Preparation: Pre-catalyst solutions in THF are dispensed into glass vials and evaporated to deposit accurate catalyst quantities (performed under inert atmosphere for reproducibility).

- Reagent Addition: Aryl chloride and boronic acid substrates are added as stock solutions (10-50 mM concentration in DMSO or reaction solvent).

- Base/Solvent Addition: Base solutions (0.1 M) in solvent/water mixtures (4:1 ratio) are added via liquid handling.

- Reaction Execution: Plate is sealed and heated to 80-100°C for 12-18 hours with agitation.

- Analysis:

  - Quench with acetonitrile containing internal standard (N,N-dibenzylaniline)

  - UPLC-MS analysis with 2-minute gradient methods

  - Automated data processing using tools like PyParse for peak identification and integration [2]

Data Analysis and Results Interpretation

Quantitative Analysis: Conversion metrics are calculated using "corrP/STD" values: product peak area divided by internal standard peak area, normalized to maximum observed ratio [2]. This approach enables reliable cross-comparison between wells while mitigating UPLC-MS analytical artifacts.
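A minimal Python sketch of the corrP/STD calculation described above: the product/internal-standard area ratio is computed per well and then divided by the largest ratio on the plate. The peak areas are invented illustrative numbers, and the handling of a missing internal-standard peak (returning `None` rather than dividing by zero) is an added assumption.

```python
def corr_p_std(peaks):
    """Compute corrP/STD for each well.

    peaks maps well -> (product_area, internal_standard_area).
    Each well's product/IS area ratio is divided by the largest ratio on the
    plate, so the best well scores 1.0.
    """
    ratios = {}
    for well, (product_area, std_area) in peaks.items():
        if std_area <= 0:
            # No usable internal-standard peak: flag the well instead of dividing by zero.
            ratios[well] = None
        else:
            ratios[well] = product_area / std_area
    valid = [r for r in ratios.values() if r is not None]
    max_ratio = max(valid) if valid else 0.0
    return {
        well: (r / max_ratio if r is not None and max_ratio > 0 else r)
        for well, r in ratios.items()
    }


# Invented peak areas: (product, internal standard)
areas = {"A1": (5200.0, 2600.0), "A2": (1300.0, 2600.0), "A3": (0.0, 2500.0), "A4": (900.0, 0.0)}
print(corr_p_std(areas))
# {'A1': 1.0, 'A2': 0.25, 'A3': 0.0, 'A4': None}
```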

Table 2: Representative Suzuki-Miyaura HTE Results

| Pre-catalyst | Base | Solvent System | Conversion (corrP/STD) | Isolated Yield (%) |
|---|---|---|---|---|
| BrettPhos Pd G3 | K₃PO₄ | t-AmOH/H₂O | 1.00 | 88 |
| RuPhos Pd G3 | Cs₂CO₃ | 1,4-dioxane/H₂O | 0.95 | 82 |
| t-BuXPhos Pd G3 | K₃PO₄ | t-AmOH/H₂O | 0.15 | <5 |
| XPhos Pd G3 | Cs₂CO₃ | 1,4-dioxane/H₂O | 0.98 | 85 |

Key Findings:

- BrettPhos Pd G3 with K₃PO₄ in t-AmOH/H₂O delivered optimal performance (88% isolated yield)

- t-BuXPhos Pd G3 showed substrate-specific incompatibility, highlighting how HTE reveals condition-dependent catalyst performance

- Successful conditions were directly scalable from microgram to multimilligram scale without re-optimization [2]

The Scientist's Toolkit: Essential HTE Components

Research Reagent Solutions

Table 3: Essential HTE Reagents and Equipment

| Component | Function | Examples & Specifications |
|---|---|---|
| Palladium Pre-catalysts | Cross-coupling catalysis | BrettPhos Pd G3, RuPhos Pd G3, XPhos Pd G3; 0.5-2.0 mol% loading [2] |
| Phosphine Ligands | Stabilize active metal centers; Modulate reactivity | Biaryl phosphines (XPhos), alkylphosphines (PCy3), bis-phosphines (dppf) [2] |
| Solvent Systems | Reaction medium; Solubility control | t-AmOH/H₂O, 1,4-dioxane/H₂O, THF, toluene; 4:1 organic/water ratio [2] |
| Base Arrays | Promote transmetalation; Scavenge acids | K₃PO₄, Cs₂CO₃, KOAc; 1.5-3.0 equivalents [2] [1] |
| Analysis Internal Standards | Quantitative UPLC-MS calibration | N,N-dibenzylaniline; 2 µmol as 4 mM DMSO stock [2] |
| HTE Plates & Hardware | Reaction vessels; Heating/agitation | 24-well "end-user" plates (μL and mL scale); Glass vials with sealing mats [2] |
| Software Solutions | Experimental design; Data analysis | AS-Experiment Builder (plate design); AS-Professional (visualization); PyParse (automated UPLC-MS analysis) [2] [3] |
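The loadings in Table 3 translate into dispense volumes through simple arithmetic (amount = concentration × volume). A minimal sketch, assuming a hypothetical 10 µmol substrate scale per well and hypothetical 10 mM catalyst and 0.1 M base stocks; only the 2 µmol internal standard delivered from a 4 mM stock comes from the table, and the 2.0 mol% and 2.0 equiv values are simply picked from within the listed ranges.

```python
def volume_ul(amount_umol, stock_mm):
    """Volume of stock (µL) that delivers amount_umol from a stock_mm (mM) solution.

    1 mM = 1 µmol/mL = 0.001 µmol/µL, so volume (µL) = µmol / (mM * 0.001).
    """
    return amount_umol / (stock_mm * 1e-3)


SUBSTRATE_UMOL = 10.0                        # hypothetical per-well substrate scale
catalyst_umol = SUBSTRATE_UMOL * 2.0 / 100   # 2.0 mol% pre-catalyst (within 0.5-2.0 mol%)
base_umol = SUBSTRATE_UMOL * 2.0             # 2.0 equiv base (within 1.5-3.0 equiv)
internal_std_umol = 2.0                      # 2 µmol internal standard (Table 3)

print(f"catalyst, 10 mM stock: {volume_ul(catalyst_umol, 10.0):.1f} uL")       # 20.0 uL
print(f"base, 0.1 M stock: {volume_ul(base_umol, 100.0):.1f} uL")              # 200.0 uL
print(f"internal standard, 4 mM: {volume_ul(internal_std_umol, 4.0):.1f} uL")  # 500.0 uL
```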

Advanced Statistical Analysis in HTE

The HiTEA Framework

Advanced HTE data analysis employs sophisticated statistical frameworks like the High-Throughput Experimentation Analyzer (HiTEA), which combines three orthogonal approaches [4]:

Figure: HiTEA Statistical Framework

Key Statistical Approaches

- Random Forest Analysis: Identifies which reaction variables (catalyst, solvent, base) most significantly impact outcomes without assuming linear relationships [4].

- Z-Score ANOVA-Tukey: Normalizes yields across different substrate classes and identifies statistically significant best-in-class and worst-in-class reagents [4].

- Principal Component Analysis: Visualizes how high-performing and low-performing reagents populate chemical space, revealing clustering and selection biases [4].

This comprehensive statistical framework enables extraction of meaningful chemical insights from complex HTE datasets, moving beyond simple condition identification to fundamental reaction understanding.
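The z-score idea can be illustrated with a minimal Python sketch: yields are standardized within each substrate, so that intrinsically easy and hard substrates become comparable, and then averaged per reagent to rank best- and worst-in-class candidates. This is a simplified stand-in, not the HiTEA implementation; the ANOVA and Tukey post-hoc tests are omitted, and the substrate and ligand labels and yields are invented.

```python
from collections import defaultdict
from statistics import mean, pstdev

# (substrate, ligand, yield %) records; values are invented for illustration.
records = [
    ("S1", "L1", 80), ("S1", "L2", 40), ("S1", "L3", 60),
    ("S2", "L1", 30), ("S2", "L2", 10), ("S2", "L3", 20),
]


def zscore_by_substrate(rows):
    """Standardize yields within each substrate: z = (y - mean) / std."""
    by_sub = defaultdict(list)
    for sub, _, y in rows:
        by_sub[sub].append(y)
    stats = {s: (mean(ys), pstdev(ys)) for s, ys in by_sub.items()}
    out = []
    for sub, reagent, y in rows:
        mu, sd = stats[sub]
        z = (y - mu) / sd if sd > 0 else 0.0  # constant yields carry no ranking signal
        out.append((reagent, z))
    return out


def rank_reagents(rows):
    """Mean z-score per reagent, best first."""
    by_reagent = defaultdict(list)
    for reagent, z in zscore_by_substrate(rows):
        by_reagent[reagent].append(z)
    return sorted(((r, mean(zs)) for r, zs in by_reagent.items()),
                  key=lambda item: item[1], reverse=True)


for reagent, score in rank_reagents(records):
    print(reagent, round(score, 2))
```

Although every S2 yield is lower than every S1 yield, the per-substrate normalization gives L1 the same top z-score on both substrates, which is the cross-substrate comparability the z-score step is meant to provide.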

Critical Considerations for Successful HTE Implementation

Data Quality and Analysis Challenges

Quantitative high-throughput screening (qHTS), in which each compound is tested across a range of concentrations, presents specific statistical challenges that researchers must address:

- Parameter Estimation Variability: Nonlinear modeling with the Hill equation for concentration-response data produces highly variable parameter estimates when experimental designs fail to establish both asymptotes [5].

- False Positive/Negative Risks: Flat response curves from potent compounds may generate poor fits and be misclassified as inactive, while truly null compounds might spuriously appear active due to random variation [5].

- Measurement Error Impact: Random error significantly diminishes reproducibility of parameter estimates, necessitating experimental replicates and careful error assessment [5].
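To make the asymptote problem concrete, the Python sketch below uses one common four-parameter form of the Hill equation, R(C) = E0 + (Einf - E0) / (1 + (AC50 / C)^h). The two parameter sets are invented: they differ twofold in both maximal response and AC50, yet are nearly indistinguishable when only low concentrations are tested, which is why designs that never reach the upper asymptote yield unstable parameter estimates.

```python
def hill(c, e0, einf, ac50, h):
    """Four-parameter Hill response at concentration c (c > 0)."""
    return e0 + (einf - e0) / (1.0 + (ac50 / c) ** h)


# Two invented parameter sets: (e0, einf, ac50, h)
params_a = (0.0, 100.0, 10.0, 1.0)
params_b = (0.0, 200.0, 20.0, 1.0)

low_range = [0.1, 0.2, 0.5, 1.0]                 # stays far below both AC50 values
full_range = low_range + [10.0, 100.0, 1000.0]   # reaches the upper asymptote


def max_gap(concs):
    """Largest absolute difference between the two curves over concs."""
    return max(abs(hill(c, *params_a) - hill(c, *params_b)) for c in concs)


print(round(max_gap(low_range), 2))   # 0.43
print(round(max_gap(full_range), 2))  # 97.07
```

With measurement noise larger than about 0.4 response units, the low-range data cannot distinguish the two curves at all.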

Implementation Best Practices

- Embrace Comprehensive Data Inclusion: Removal of 0% yielding reactions leads to poorer understanding of reaction classes; both positive and negative data are essential for robust model building [4].

- Address Systematic Error Sources: Well location effects, compound degradation, signal bleaching, and compound carryover can introduce bias that challenges replication [5].

- Utilize Appropriate Software Tools: Vendor-neutral platforms like AS-Experiment Builder streamline plate design, experimental execution, and data visualization while enabling seamless metadata flow [3].

- Validate with Model Substrates: Before deploying project-specific screens, validate HTE designs with known model systems to verify catalyst reactivity in plate-based formats [2].
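One simple screen for the well-location effects mentioned above is to run a plate of identical replicate reactions and compare outer-ring wells with interior wells. A minimal Python sketch, assuming an 8 × 12 layout and invented yields in which edge wells read 5 points low; a real analysis would add a significance test and inspect row and column trends as well.

```python
from statistics import mean

N_ROWS, N_COLS = 8, 12  # 96-well layout


def is_edge(row, col):
    """True for wells in the outermost rows or columns (0-based indices)."""
    return row in (0, N_ROWS - 1) or col in (0, N_COLS - 1)


def edge_bias(values):
    """Mean(edge) - mean(interior) for a dict {(row, col): value}."""
    edge = [v for (r, c), v in values.items() if is_edge(r, c)]
    interior = [v for (r, c), v in values.items() if not is_edge(r, c)]
    return mean(edge) - mean(interior)


# Invented replicate yields: interior wells at 70%, edge wells 5 points lower.
replicates = {
    (r, c): (65.0 if is_edge(r, c) else 70.0)
    for r in range(N_ROWS) for c in range(N_COLS)
}
print(edge_bias(replicates))  # -5.0
```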

High-Throughput Experimentation represents a paradigm shift in chemical synthesis, enabling systematic exploration of reaction spaces that were previously inaccessible through traditional methods. By implementing rational experimental designs, leveraging miniaturized platforms, and applying sophisticated statistical analysis, researchers can accelerate reaction optimization and gain fundamental insights into chemical reactivity.

The integration of HTE approaches into drug discovery pipelines has demonstrated significant value in reducing development timelines, conserving precious materials, and building robust synthetic routes. As HTE methodologies continue to evolve and become more accessible, they promise to further transform synthetic chemistry practice across academic and industrial settings.

High-Throughput Experimentation (HTE) has revolutionized reaction screening and optimization in modern research and development, particularly within the pharmaceutical industry. The architecture of a contemporary HTE batch platform is an integrated system comprising three core technological subsystems: automated liquid handlers for precise reagent delivery, parallel batch reactors for controlled reaction execution, and advanced analytics platforms for data processing and insight generation. This integrated approach enables the exploration of vast chemical reaction spaces—encompassing variables such as reagents, solvents, catalysts, and temperatures—in a highly efficient and parallelized manner, moving beyond traditional, resource-intensive one-factor-at-a-time methods [6]. When synergistically combined with machine learning (ML) optimization frameworks like Minerva, these platforms demonstrate robust performance in handling large parallel batches, high-dimensional search spaces, and the experimental noise inherent in real-world laboratories [6]. This document details the architecture, protocols, and data handling of a modern HTE platform, framed within the context of accelerating reaction screening research for drug development professionals.

Core Architectural Subsystems

A modern HTE batch platform is a coordinated system where data and materials flow seamlessly between specialized modules. The architecture is structured to support highly parallel, automated experimentation from initial setup to final analysis.

Automated Liquid Handling Systems

Automated liquid handlers are the workhorses of sample and reagent preparation in HTE. They enable the highly precise and rapid dispensing of reagents into reaction vessels, which is a prerequisite for conducting large-scale screening campaigns with 24, 48, 96, or more parallel reactions. Their primary function is to transform a chemist's digital experimental design into a physical, ready-to-run assay plate. Key capabilities include:

- Miniaturization: Performing reactions at microliter scales to reduce reagent cost and waste.

- Precision and Accuracy: Ensuring reproducible reagent concentrations across all wells.

- Throughput: Rapidly preparing hundreds of reaction combinations per hour.

- Integration: Operating in concert with other robotic systems to transport plates to and from reactor blocks and analytics.

Parallel Batch Reactor Systems

Parallel batch reactors provide the controlled environment where chemical transformations occur. These systems consist of multiple reactors, ranging from well-plate formats to bench-scale autoclaves, that operate simultaneously under defined conditions. As exemplified by commercial systems, a typical setup may comprise four to eight parallel Hastelloy autoclaves, each with a reactor volume of 300 mL, capable of operating over a wide pressure and temperature range with excellent comparability between reactors [7]. These systems are designed to generate high-quality, scalable data, making them indispensable for evaluating catalysts, synthesizing battery materials, and running polymerizations [7]. Critical features include:

- Parallelization: Simultaneous execution of 4 to 96+ reactions.

- Environmental Control: Precise regulation of temperature, pressure, and stirring for each reactor.

- Material Compatibility: Construction from chemically resistant materials like Hastelloy to withstand diverse reaction conditions.

- Modularity: Configurations that can include reactors dedicated to different modes, such as separate reaction and catalyst activation lines [7].

Data Analytics and Machine Intelligence

The data generated by HTE campaigns is vast and complex, necessitating a sophisticated analytics layer to transform raw results into actionable insights. This layer relies on a modern data platform architecture that manages the entire lifecycle of data, from ingestion to analysis [8]. Machine learning frameworks, particularly those based on Bayesian optimization, are central to this layer. They guide experimental design by balancing the exploration of unknown reaction spaces with the exploitation of promising conditions identified from prior data [6]. The core functions of this subsystem are:

- Data Ingestion and Storage: Automatically collecting data from analytical instruments and storing it in scalable cloud data lakes or warehouses [8].

- Data Processing: Cleaning, formatting, and transforming raw data into a usable format for analysis.

- Machine Learning-Guided Optimization: Using algorithms like Gaussian Process regressors and acquisition functions (e.g., q-NParEgo, TS-HVI) to select the most informative next batch of experiments for multi-objective optimization (e.g., yield and selectivity) [6].

- Visualization and Reporting: Providing dashboards and tools for researchers to interact with the data, track campaign progress, and make decisions.

Table 1: Key Quantitative Performance Metrics from an ML-Driven HTE Optimization Campaign [6]

| Optimization Metric | Performance Data | Context and Significance |
|---|---|---|
| Batch Size | 96-well plates | Standard for solid-dispensing HTE workflows; enables high parallelism [6] |
| Search Space Complexity | 88,000 conditions; 530 dimensions | Demonstrates capability to navigate high-dimensional spaces [6] |
| Optimization Outcome (Ni-catalyzed Suzuki) | 76% AP yield, 92% selectivity | Outperformed chemist-designed HTE plates for a challenging transformation [6] |
| Pharmaceutical Process Optimization | >95% AP yield & selectivity for API syntheses | Identified high-performing, scalable conditions for Ni-Suzuki and Pd-Buchwald-Hartwig reactions [6] |
| Development Timeline Acceleration | 4 weeks vs. 6 months | ML framework drastically reduced process development time compared to a prior campaign [6] |

Integrated Experimental Workflow

The power of a modern HTE platform lies in the seamless integration of its subsystems into a coherent, iterative workflow. This workflow closes the loop between experimental design, execution, and data analysis.

Detailed Experimental Protocol: ML-Driven Reaction Optimization

This protocol outlines a specific application of the HTE platform for optimizing a chemical reaction using a machine learning-guided approach, as validated in recent literature [6].

Protocol Title: ML-Guided Optimization of a Nickel-Catalyzed Suzuki Reaction in a 96-Well HTE Format

4.1.1 Background and Objective

The objective is to identify optimal reaction conditions for a nickel-catalyzed Suzuki coupling, a challenging transformation relevant to pharmaceutical synthesis, by maximizing Area Percent (AP) yield and selectivity. The protocol leverages the Minerva ML framework to efficiently navigate a large search space of ~88,000 potential conditions [6].

4.1.2 Research Reagent Solutions and Materials

Table 2: Essential Research Reagents and Materials for Ni-Catalyzed Suzuki HTE Campaign

| Reagent/Material | Function/Purpose | Example/Note |
|---|---|---|
| Nickel Catalyst Precursors | Non-precious metal catalysis center | Earth-abundant alternative to Pd, aligning with cost and sustainability goals [6]. |
| Ligand Library | Modulates catalyst activity and selectivity | A diverse set of phosphine and nitrogen-based ligands is typically screened. |
| Aryl Halide Substrate | Electrophilic coupling partner | Varies based on specific reaction target. |
| Boronated Substrate | Nucleophilic coupling partner | e.g., Aryl boronic acid or ester. |
| Base Library | Facilitates transmetalation step | e.g., Carbonates, phosphates. |
| Solvent Library | Reaction medium | Selected from a range of common solvents (e.g., THF, 1,4-dioxane, DMF), adhering to pharmaceutical solvent guidelines where possible [6]. |
| 96-Well Reaction Plate | Miniaturized reaction vessel | Compatible with liquid handler and reactor block. |

4.1.3 Step-by-Step Procedure

Reaction Space Definition:

- Define the discrete combinatorial set of plausible reaction conditions. This includes selecting specific members for each categorical variable (catalyst, ligand, solvent, base) and a set of discrete levels for continuous variables such as concentration and temperature.

- Apply chemical knowledge and practical constraints (e.g., solvent boiling point, unsafe reagent combinations) to filter out impractical conditions automatically [6].
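A minimal Python sketch of defining a discrete reaction space and filtering it with one practical constraint. The catalyst, ligand, and base labels, the temperature levels, and the rule (reaction temperature at least 10 °C below the solvent's boiling point) are illustrative assumptions, not Minerva's actual filters; the boiling points are standard literature values (THF 66 °C, 1,4-dioxane 101 °C, DMF 153 °C).

```python
from itertools import product

# Illustrative factor levels (hypothetical labels).
CATALYSTS = ["Ni-A", "Ni-B"]
LIGANDS = ["L1", "L2", "L3"]
BASES = ["K3PO4", "Cs2CO3"]
SOLVENT_BP_C = {"THF": 66, "1,4-dioxane": 101, "DMF": 153}  # boiling points, °C
TEMPERATURES_C = [40, 60, 80, 100]  # discretized continuous variable
MARGIN_C = 10                       # assumed safety margin below the boiling point


def is_practical(condition):
    """Reject conditions that run too close to (or above) the solvent's boiling point."""
    _, _, _, solvent, temp = condition
    return temp <= SOLVENT_BP_C[solvent] - MARGIN_C


space = [
    cond
    for cond in product(CATALYSTS, LIGANDS, BASES, SOLVENT_BP_C, TEMPERATURES_C)
    if is_practical(cond)
]
print(len(space))  # 96 of the 144 raw combinations survive
```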

Initial Experimental Batch (Iteration 1):

- Utilize algorithmic quasi-random Sobol sampling to select an initial batch of 96 reaction conditions. This strategy maximizes the coverage of the reaction space, increasing the likelihood of discovering informative regions [6].

- The ML framework outputs a digital experimental design file listing the specific condition for each well in the 96-well plate.
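The protocol uses Sobol sampling. As a dependency-free illustration of the same idea (a low-discrepancy, space-filling first batch), the Python sketch below swaps in a Halton sequence, which is a different quasi-random sequence, and maps each point in [0, 1)^d onto a level index for each factor. The factor sizes are hypothetical; with SciPy available, `scipy.stats.qmc.Sobol` would be the closer match to the described method.

```python
PRIMES = [2, 3, 5, 7, 11, 13]  # one coprime base per factor (up to 6 factors here)


def radical_inverse(i, base):
    """Van der Corput radical inverse of integer i in the given base."""
    f, result = 1.0, 0.0
    while i > 0:
        f /= base
        result += f * (i % base)
        i //= base
    return result


def halton_batch(level_counts, batch_size=96, skip=1):
    """Return batch_size tuples of level indices, one index per factor.

    level_counts: number of levels for each factor, e.g. [8, 12, 6, 4].
    skip: leading points dropped (index 0 maps every factor to level 0).
    """
    if len(level_counts) > len(PRIMES):
        raise ValueError("add more prime bases for more factors")
    batch = []
    for i in range(skip, skip + batch_size):
        row = []
        for base, n_levels in zip(PRIMES, level_counts):
            u = radical_inverse(i, base)  # quasi-random value in [0, 1)
            row.append(min(int(u * n_levels), n_levels - 1))
        batch.append(tuple(row))
    return batch


# Hypothetical factor sizes: catalysts, ligands, solvents, bases
first_plate = halton_batch([8, 12, 6, 4])
print(len(first_plate), first_plate[:3])
```

Unlike uniform random sampling, the low-discrepancy sequence guarantees that every level of every factor appears in the first plate here, so the initial model sees the whole space.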

Reaction Plate Preparation (Liquid Handling):

- Program the automated liquid handler with the experimental design file.

- The robot precisely dispenses the appropriate stock solutions of substrates, catalyst, ligands, base, and solvent into each well of the 96-well plate according to the specified concentrations and volumes.

Reaction Execution (Batch Reactor):

- Transfer the sealed 96-well plate to a parallel batch reactor system capable of maintaining a uniform temperature across all wells.

- Initiate the reactions and allow them to proceed for the set reaction time.

Quenching and Analysis (Liquid Handler & Analytics):

- After the reaction time has elapsed, use the liquid handler to add a quenching agent to each well to stop the reaction.

- Prepare samples from each well for analysis, typically by UPLC or HPLC, using the liquid handler for dilution and transfer to analysis vials or plates.

- Analyze the samples. The analytical software will output data files containing metrics like Area Percent (AP) yield and selectivity for each reaction.

Data Processing and ML Model Update:

- Ingest the raw analytical results into the central data platform (e.g., using a tool like Airbyte for data integration) [9] [8].

- The ML framework (Minerva) automatically retrieves the structured data. A Gaussian Process (GP) regressor is trained on all accumulated experimental data to predict reaction outcomes and their uncertainties for all possible conditions in the predefined space [6].
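A minimal NumPy sketch of the Gaussian Process step: fit on the conditions measured so far and return a predicted mean and uncertainty for untested conditions. It assumes conditions are already encoded as numeric feature vectors (for example, one-hot categories plus a scaled temperature) and uses a fixed squared-exponential (RBF) kernel with hand-set length scale and noise. Hyperparameters are not fitted, and this is not Minerva's implementation.

```python
import numpy as np


def rbf_kernel(a, b, length_scale=1.0, variance=1.0):
    """Squared-exponential kernel between rows of a (n x d) and b (m x d)."""
    sq_dist = np.sum(a**2, axis=1)[:, None] + np.sum(b**2, axis=1)[None, :] - 2.0 * a @ b.T
    return variance * np.exp(-0.5 * np.maximum(sq_dist, 0.0) / length_scale**2)


def gp_predict(x_train, y_train, x_query, length_scale=1.0, variance=1.0, noise=1e-4):
    """Posterior mean and standard deviation of a GP at x_query.

    Yields are centred on their mean so a zero-mean GP prior can be used.
    """
    y_offset = y_train.mean()
    k_train = rbf_kernel(x_train, x_train, length_scale, variance) + noise * np.eye(len(x_train))
    k_cross = rbf_kernel(x_train, x_query, length_scale, variance)
    chol = np.linalg.cholesky(k_train)  # k_train = chol @ chol.T
    # alpha = k_train^-1 (y - offset), via two triangular systems
    alpha = np.linalg.solve(chol.T, np.linalg.solve(chol, y_train - y_offset))
    mean = y_offset + k_cross.T @ alpha
    v = np.linalg.solve(chol, k_cross)
    var = variance - np.sum(v**2, axis=0)  # diagonal of the posterior covariance
    return mean, np.sqrt(np.maximum(var, 0.0))


# Toy data: a 1-D feature and yields as fractions (invented values).
x_obs = np.array([[0.0], [1.0], [2.0]])
y_obs = np.array([0.2, 0.6, 0.4])
x_new = np.array([[1.0], [1.5], [10.0]])
mu, sd = gp_predict(x_obs, y_obs, x_new)
print(np.round(mu, 3), np.round(sd, 3))
```

Near measured conditions the uncertainty collapses; far from them (x = 10) the prediction reverts to the mean yield with the full prior uncertainty, which is the signal an acquisition function uses for exploration.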

Next-Batch Selection:

- A multi-objective acquisition function (e.g., q-NParEgo, TS-HVI) evaluates all possible conditions, balancing the exploration of uncertain regions with the exploitation of known high-performing conditions [6].

- The function selects the next batch of 96 conditions predicted to provide the most information for progressing towards the optimization objectives (high yield and selectivity).
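q-NParEgo and TS-HVI are multi-objective batch acquisition functions and are too involved for a short example. As a simpler, clearly different stand-in, the Python sketch below scalarizes the two objectives with fixed weights (an assumption), scores each candidate with an upper confidence bound (mean + beta × standard deviation), and takes the top k. It illustrates the exploration/exploitation trade-off but, unlike true batch acquisition, does not penalize redundant picks within a batch.

```python
def ucb_select(candidates, k, beta=2.0, weights=(0.5, 0.5)):
    """Pick the k candidates with the highest scalarized UCB score.

    candidates: dict name -> (yield_mean, yield_sd, sel_mean, sel_sd),
    e.g. from a surrogate model. beta > 0 rewards uncertainty (exploration).
    """
    w_yield, w_sel = weights

    def score(stats):
        y_mu, y_sd, s_mu, s_sd = stats
        return w_yield * (y_mu + beta * y_sd) + w_sel * (s_mu + beta * s_sd)

    ranked = sorted(candidates, key=lambda name: score(candidates[name]), reverse=True)
    return ranked[:k]


# Invented surrogate predictions (fractions): a confident good condition,
# an uncertain unexplored one, and a confident poor one.
preds = {
    "cond_1": (0.80, 0.02, 0.90, 0.02),
    "cond_2": (0.40, 0.30, 0.50, 0.30),
    "cond_3": (0.30, 0.02, 0.40, 0.02),
}
print(ucb_select(preds, k=1, beta=0.0))  # ['cond_1']  (pure exploitation)
print(ucb_select(preds, k=1, beta=2.0))  # ['cond_2']  (uncertainty rewarded)
```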

Iteration:

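- Repeat steps 3 through 7 (reaction plate preparation, reaction execution, quenching and analysis, data processing and model update, and next-batch selection) for the newly selected batch of experiments.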

- Continue this iterative process until convergence is achieved (i.e., no significant improvement is observed), the optimization objectives are met, or the experimental budget is exhausted.

4.1.4 Data Analysis and Interpretation

- The primary metric for evaluating optimization performance is the hypervolume metric. It measures the volume of objective space (e.g., yield vs. selectivity) dominated by the best (Pareto-optimal) conditions found by the algorithm, relative to a chosen reference point, and therefore captures both convergence towards the optimum and the diversity of solutions [6].

- Compare the final hypervolume achieved by the ML-driven campaign against a baseline (e.g., traditional chemist-designed grid search) or the theoretical maximum.
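For two objectives the hypervolume can be computed exactly by sorting the non-dominated points and summing rectangles. A minimal Python sketch, assuming both objectives are maximized, expressed in percent, and measured against a reference point of (0, 0); the yield/selectivity pairs for the "ML" and "baseline" campaigns are invented.

```python
def pareto_front(points):
    """Non-dominated points when maximizing both objectives."""
    front = []
    for p in points:
        dominated = any(q[0] >= p[0] and q[1] >= p[1] and q != p for q in points)
        if not dominated and p not in front:
            front.append(p)
    return front


def hypervolume_2d(points, ref=(0.0, 0.0)):
    """Area dominated by the Pareto front and bounded below by ref."""
    front = sorted(pareto_front(points), key=lambda p: p[0], reverse=True)
    area, prev_y = 0.0, ref[1]
    for x, y in front:  # x descending, so y ascends along the front
        area += (x - ref[0]) * (y - prev_y)
        prev_y = y
    return area


ml_campaign = [(80, 60), (60, 90), (50, 50), (70, 70)]  # (yield %, selectivity %)
baseline = [(50, 50), (60, 40)]
print(hypervolume_2d(ml_campaign))  # 6700.0
print(hypervolume_2d(baseline))     # 2900.0
```

The dominated point (50, 50) adds nothing to the ML campaign's hypervolume, which is why the metric rewards finding better trade-offs rather than simply running more experiments.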

Table 3: Example Quantitative Outcomes from an ML-Driven HTE Campaign for API Synthesis [6]

| Reaction Type | Key Optimization Objectives | Reported Outcome | Development Impact |
|---|---|---|---|
| Ni-catalyzed Suzuki Coupling | Maximize Yield and Selectivity | Multiple conditions with >95% AP yield and selectivity | Identified viable, low-cost metal catalysis route. |
| Pd-catalyzed Buchwald-Hartwig Amination | Maximize Yield and Selectivity | Multiple conditions with >95% AP yield and selectivity | Accelerated process development for an API. |
| Pharmaceutical Process (unspecified) | Identify scalable process conditions | Improved conditions at scale identified in 4 weeks | Reduced development time from a previous 6-month campaign [6]. |

The Scientist's Toolkit: Essential Software and Data Solutions

The effective operation of an HTE platform relies on a suite of software tools for data management, statistical analysis, and machine learning.

Table 4: Key Quantitative Analysis and Data Tools for HTE Research

| Tool / Solution | Primary Function in HTE | Key Features for HTE Workflows |
|---|---|---|
| Python (Custom ML Frameworks, e.g., Minerva) | Core ML-guided optimization | Bayesian optimization (Gaussian Processes), scalable acquisition functions (q-NParEgo, TS-HVI), handling of categorical variables [6]. |
| R / RStudio | Statistical analysis and data visualization | Advanced statistical testing, custom graphics (ggplot2), extensive packages for data analysis; ideal for in-depth exploration of HTE results [9]. |
| JMP | Interactive visual data exploration and DOE | Design of Experiments (DOE) capabilities, point-and-click interface for visual data exploration, dynamic modeling [9]. |
| Airbyte | Data ingestion and integration | Syncs data from hundreds of sources (e.g., analytical instruments, LIMS) into a central data platform, automating data pipeline creation [9]. |
| Modern Data Platform (Architecture) | Holistic data management | Manages data lifecycle: Ingestion, Storage, Processing, Access, Pipelines, and Governance, ensuring data is usable and trustworthy [8]. |
| MAXQDA / NVivo | Analysis of qualitative experimental notes | AI-assisted coding of researcher observations or free-text notes; supports mixed-methods analysis when combined with quantitative HTE data [9]. |

The architecture of a modern HTE batch platform represents a paradigm shift in chemical reaction screening. By integrating highly automated liquid handlers, parallelized batch reactors, and a sophisticated, ML-driven analytics layer, these platforms enable researchers to navigate complex chemical landscapes with unprecedented speed and intelligence. The detailed protocol and data presented herein demonstrate the practical application of this architecture, culminating in the rapid identification of high-performing reaction conditions for challenging transformations like nickel-catalyzed cross-couplings. This integrated, data-centric approach is indispensable for accelerating research timelines in drug development and beyond.

In modern reaction screening research, High-Throughput Experimentation (HTE) has become indispensable for accelerating discovery and optimization in fields ranging from pharmaceutical development to materials science. At the core of any HTE workflow are multiwell plates, which serve as standardized reaction vessels enabling the parallel execution of hundreds to thousands of microscale experiments. The migration from traditional single-run batch reactors to miniaturized, parallelized systems in 96-, 384-, and 1536-well formats represents a paradigm shift in chemical and biological research methodology. These platforms provide the foundational structure for automating reagent addition, mixing, temperature control, and analysis, thereby dramatically increasing experimental efficiency while reducing reagent consumption and waste generation. This application note details the practical implementation of multiwell plates as reaction vessels within HTE batch modules, providing researchers with standardized protocols and critical technical specifications to enable robust, reproducible screening campaigns.

Technical Specifications and Comparative Analysis

The physical and functional characteristics of multiwell plates directly determine their suitability for specific HTE applications. Adherence to international standards ensures compatibility with automated handling systems, liquid dispensers, and detection instrumentation across platforms from various manufacturers.

Standardized Microplate Dimensions

All standard microplates conform to the ANSI/SLAS standards, ensuring dimensional uniformity for automated compatibility. The standard footprint for microplates is 127.76 mm in length by 85.48 mm in width, with variations in height and internal well geometry defining the different well formats [10] [11]. This consistency allows the same plate to be used across different instruments and robotic platforms within an automated workflow. The following table summarizes the key dimensional parameters for common plate formats used in HTE.

Table 1: Physical Dimensions of Standard Multiwell Plate Formats

| Parameter | 96-Well Plate | 384-Well Plate | 1536-Well Plate |
|---|---|---|---|
| Total Wells | 96 | 384 | 1536 |
| Well Layout (Rows x Columns) | 8 x 12 | 16 x 24 | 32 x 48 |
| Standard Well-to-Well Spacing (mm) | 9.00 | 4.50 | 2.25 |
| Well Volume (µL) | ~350 [11] | ~105 [11] | ~12 [11] |
| Recommended Working Volume (µL) | 80-350 [11] | 24-90 [11] | 4-12 [11] |
| Common Well Bottom Types | F-, U-, V-, C-Bottom [10] | F-Bottom | F-Bottom |
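Because all three formats share one footprint and a pitch that halves with each step (9.00, 4.50, 2.25 mm), well names and centre-to-centre offsets can be generated programmatically, for example when writing liquid-handler worklists. A minimal Python sketch: offsets are given relative to well A1 only, since absolute positions also depend on the A1 offset from the plate edge, which is not listed here. Labeling rows beyond Z as AA, AB, ... is an assumption based on common practice for 1536-well plates.

```python
from string import ascii_uppercase

FORMATS = {  # wells: (rows, columns, pitch in mm), from Table 1
    96: (8, 12, 9.00),
    384: (16, 24, 4.50),
    1536: (32, 48, 2.25),
}


def row_label(index):
    """0 -> 'A', 25 -> 'Z', 26 -> 'AA', 31 -> 'AF' (valid up to 52 rows)."""
    if index < 26:
        return ascii_uppercase[index]
    return "A" + ascii_uppercase[index - 26]


def well_offsets(n_wells):
    """Map well name -> (x, y) offset in mm from the centre of well A1."""
    rows, cols, pitch = FORMATS[n_wells]
    return {
        f"{row_label(r)}{c + 1}": (c * pitch, r * pitch)
        for r in range(rows)
        for c in range(cols)
    }


plate_1536 = well_offsets(1536)
print(len(plate_1536), plate_1536["AF48"])  # 1536 (105.75, 69.75)
```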

Working Volume and Well Geometry

The choice of well format is primarily dictated by the required reaction scale and the available detection sensitivity. The progressive miniaturization from 96-well to 1536-well formats enables significant resource savings.

Table 2: Well Geometry and Volume Capacity

| Format | Well Diameter (mm) | Well Depth (mm) | Max Volume (µL) | Typical HTE Reaction Volume |
|---|---|---|---|---|
| 96-Well | ~7.15 [11] | ~10.80 [11] | ~400 [11] | 50-200 µL |
| 384-Well | ~3.65 [11] | ~10.40 [11] | ~105 [11] | 20-50 µL |
| 1536-Well | ~1.70 [11] | ~4.80 [11] | ~12 [11] | 5-10 µL |

The geometry of the well bottom (flat, round, or conical) is a critical consideration. Flat-bottom (F-bottom) wells are optimal for optical detection methods such as absorbance or fluorescence, while round-bottom (U-bottom) and conical (V-bottom) wells are better suited to efficient mixing of small liquid volumes and to minimizing dead volume during liquid handling [10].

Experimental Protocols for HTE Reaction Screening

The following protocols provide a framework for executing chemical reaction screens across different multiwell plate formats, with an emphasis on reproducibility and integration with automated systems.

Protocol: General Workflow for Reaction Setup in 96-Well Plates

This protocol is designed for screening reaction conditions where reagent consumption is not a primary constraint, offering a balance between ease of handling and throughput.

Research Reagent Solutions & Materials

- Multiwell Plate: 96-well, polypropylene, U-bottom (for mixing) or F-bottom (for optical analysis) [11].

- Plate Seal: Adhesive aluminum foil or heat-sealing film to prevent solvent evaporation and cross-contamination.

- Liquid Handler: Automated pipetting system or manual multi-channel pipette.

- Platform Shaker: Orbital shaker capable of accommodating microplates (e.g., Teleshake, 100-2000 rpm) [12].

- Heating/Cooling Incubator: For temperature-controlled reactions (e.g., thermal cycler, shaking incubator) [13].

Procedure

- Plate Layout Design: Map the reaction conditions on a plate template. Assign controls (positive, negative, solvent blanks) to specific wells, typically in the first and last columns.

- Reagent Dispensing:

- Use an automated liquid handler to dispense solvents and stock solutions of substrates into the designated wells. A typical final volume for a 96-well screen is 100-150 µL.

- If required, pre-dispense air- or moisture-sensitive reagents in an inert-atmosphere glovebox.

- Reaction Initiation: Using the liquid handler, rapidly add the catalyst or initiating reagent solution across all wells to start the reactions simultaneously.

- Sealing: Immediately apply a pierceable, adhesive seal to the plate to prevent evaporation.

- Mixing and Incubation:

- Place the sealed plate on an orbital microplate shaker. Mix at 500-1500 rpm for 1-2 minutes to ensure homogeneity [12].

- Transfer the plate to a pre-heated/cooled incubator or thermal cycler set to the desired reaction temperature (e.g., 25°C, 60°C). Incubate for the specified duration.

- Reaction Quenching: After the incubation period, add a quenching solution (e.g., acid, base, or a scavenger resin) to all wells via the liquid handler.

- Analysis: Centrifuge the plate briefly to collect condensation. Remove the seal and either proceed with in-plate analysis (e.g., UV-Vis, fluorescence) or transfer aliquots to analysis vials or plates for LC-MS, GC-MS, or other offline methods.
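The dispensing step above comes down to the dilution relation c_stock × V_stock = c_final × V_final. The sketch below turns it into a per-well worksheet. All stock and target concentrations are hypothetical illustration values, and the 120 µL final volume is simply a point inside the 100-150 µL range given above; `stock_volume_ul` and `dispense_plan` are hypothetical helper names.

```python
# Per-well dispensing worksheet for a 96-well screen.
# Assumption: every concentration used below is a hypothetical illustration value.


def stock_volume_ul(target_mM: float, stock_mM: float, final_ul: float) -> float:
    """Stock volume (µL) that brings a well to target_mM in final_ul.

    Uses the dilution relation c_stock * V_stock = c_final * V_final.
    """
    if target_mM > stock_mM:
        raise ValueError("target concentration exceeds stock concentration")
    return target_mM * final_ul / stock_mM


def dispense_plan(targets: dict, stocks: dict, final_ul: float) -> dict:
    """µL of each stock plus the solvent top-up needed to reach final_ul."""
    plan = {name: stock_volume_ul(targets[name], stocks[name], final_ul)
            for name in targets}
    solvent = final_ul - sum(plan.values())
    if solvent < 0:
        raise ValueError("stocks too dilute: combined volume exceeds final volume")
    plan["solvent"] = solvent
    return plan


if __name__ == "__main__":
    # Hypothetical: 0.1 M substrate, 0.12 M coupling partner, 5 mM catalyst.
    stocks_mM = {"substrate": 500.0, "partner": 600.0, "catalyst": 50.0}
    targets_mM = {"substrate": 100.0, "partner": 120.0, "catalyst": 5.0}
    for reagent, vol in dispense_plan(targets_mM, stocks_mM, final_ul=120.0).items():
        print(f"{reagent:>10}: {vol:6.1f} µL")
```

In this example each well receives 24 µL of substrate stock, 24 µL of partner stock, 12 µL of catalyst stock, and 60 µL of solvent. Checking that the solvent top-up never goes negative catches stocks that are too dilute before any plate is set up.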

Protocol: Miniaturized Screening in 384-Well and 1536-Well Formats

This protocol is for ultra-high-throughput applications where reagent conservation and maximum data point generation are critical.

Research Reagent Solutions & Materials

- Multiwell Plate: 384-well or 1536-well, polypropylene, F-bottom (optically clear for detection) [11].

- Evaporation-Resistant Seal: Optically clear adhesive seal.

- Nanoliter Liquid Handler: Acoustic dispenser or positive-displacement nanodispenser capable of accurately handling microliter to nanoliter volumes.

- High-Frequency Microplate Shaker: e.g., Teleshake 1536, capable of frequencies up to 8500 rpm for effective mixing of sub-microliter volumes [14].

- Microplate Centrifuge: With a rotor adapted for high-density plates.

- Microplate Reader: Compatible with 384/1536-well formats for high-throughput absorbance, fluorescence, or luminescence detection [13].

Procedure

- Plate Layout and Dispensing:

- Design a dense, randomized condition layout using specialized software.

- Use a nanoliter liquid handler to transfer substrates and reagents directly into the wells. Typical final volumes are 20-50 µL for 384-well and 5-10 µL for 1536-well plates [11].

- Initiation and Sealing: Initiate reactions by dispensing the smallest volume component (e.g., catalyst) across the plate. Seal immediately with an optically clear film.

- Mixing and Incubation:

- Mix the sealed plate on a high-frequency shaker (e.g., 4000-8500 rpm for 1-5 minutes) to overcome high surface tension in low-volume wells [14].

- Incubate at the target temperature in a thermally calibrated incubator. Due to the high surface-to-volume ratio, evaporation control is critical; ensure seals are properly applied.

- Quenching and Analysis:

- Quench reactions by adding a small volume of quenching solution.

- For colorimetric or fluorometric assays, analyze the plate directly in a microplate reader [13].

- For MS-based analysis, dilute reaction mixtures with a compatible solvent and use an automated system to inject from the plate.
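Prototyping the randomized layout called for above does not require specialized software. A minimal sketch using only Python's standard library, assuming standard plate geometries (8 × 12, 16 × 24, 32 × 48) and row labels that continue AA, AB, ... past Z on 1536-well plates; `randomized_layout` and its parameters are hypothetical names. A fixed seed keeps the layout reproducible, and scattering conditions at random can help decouple positional effects, such as evaporation at edge wells, from condition effects.

```python
import random
import string

# Standard plate geometries: number of wells -> (rows, columns).
PLATE_SHAPES = {96: (8, 12), 384: (16, 24), 1536: (32, 48)}


def row_label(index: int) -> str:
    """Row letters A-Z, then AA, AB, ... (1536-well plates run to AF)."""
    letters = string.ascii_uppercase
    return letters[index] if index < 26 else "A" + letters[index - 26]


def well_ids(n_wells: int) -> list:
    """All well IDs of a plate in row-major order, e.g. A1, A2, ..."""
    rows, cols = PLATE_SHAPES[n_wells]
    return [f"{row_label(r)}{c + 1}" for r in range(rows) for c in range(cols)]


def randomized_layout(conditions: list, n_wells: int, seed: int = 0) -> dict:
    """Assign each condition to a distinct, randomly chosen well (seeded)."""
    wells = well_ids(n_wells)
    if len(conditions) > len(wells):
        raise ValueError("more conditions than wells")
    rng = random.Random(seed)
    chosen = rng.sample(wells, k=len(conditions))
    return dict(zip(chosen, conditions))


if __name__ == "__main__":
    layout = randomized_layout([f"cond_{i}" for i in range(300)], 384, seed=42)
    print(len(layout), "conditions placed; first wells:", list(layout)[:3])
```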

The Scientist's Toolkit: Essential Research Reagent Solutions and Materials

Successful HTE relies on a suite of specialized tools and reagents designed for miniaturization and automation.

Table 3: Essential Materials for HTE with Multiwell Plates

| Item | Function/Description | Key Consideration for HTE |
|---|---|---|
| Polypropylene Plates | Chemically resistant workhorse for most synthetic chemistry applications [15]. | Withstands a wide temperature range and resists many organic solvents; ideal for storage and reaction setup. |
| Polystyrene Plates | Optically superior material for direct photometric assays [15]. | Check chemical resistance; not compatible with many organic solvents (e.g., acetone, DMSO, ethyl acetate) [16]. |
| Adhesive Seals | Seal the plate to prevent evaporation and contamination during incubation and shaking. | Choose pierceable seals for reagent addition or optically clear seals for in-plate detection. |
| Automated Liquid Handler | Dispenses reagents with high precision and reproducibility across all wells. | Capabilities range from µL handling for 96-well to nL handling for 1536-well formats. |
| Microplate Shaker | Ensures homogeneous mixing of reaction components. | Orbital motion is standard; higher frequencies (≥4000 rpm) are needed for 1536-well formats [14] [12]. |
| Microplate Reader | Provides high-throughput endpoint analysis via absorbance, fluorescence, or luminescence. | Must be compatible with the plate format and have the appropriate wavelength filters/detectors for the assay [13]. |

Workflow and Material Compatibility Visualization

Implementing a robust HTE workflow requires careful planning of the experimental sequence and a clear understanding of material compatibility. The following diagrams illustrate the logical flow of a screening campaign and the critical decision points for selecting appropriate reaction vessels.

HTE Screening Workflow

Diagram 1: HTE Screening Workflow. This diagram outlines the standard sequence of operations for a high-throughput reaction screen, highlighting the plate format selection as a critical initial decision that influences all subsequent steps.

Material Compatibility Selection

Diagram 2: Material Selection Guide. A decision tree to guide the selection between polypropylene and polystyrene plates based on the chemical and physical demands of the experiment, highlighting the need to verify chemical compatibility for polystyrene [16].
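The decision logic of Diagram 2 can be sketched as a small function. This is an illustration under stated assumptions: the incompatible-solvent set contains only the three solvents named in Table 3 and is far from exhaustive, so the vendor's chemical-compatibility chart should be consulted before committing to polystyrene. `choose_plate_material` is a hypothetical name.

```python
# Solvents that Table 3 flags as incompatible with polystyrene.
# Assumption: illustrative and far from exhaustive; check vendor compatibility charts.
PS_INCOMPATIBLE = {"acetone", "dmso", "ethyl acetate"}


def choose_plate_material(solvents, needs_optical_readout):
    """Return (material, polystyrene_conflicts) following the Diagram 2 logic.

    Polystyrene is chosen only when an in-plate optical readout is required and
    none of the solvents is known to attack it; otherwise polypropylene is used.
    """
    normalized = {s.strip().lower() for s in solvents}
    conflicts = sorted(normalized & PS_INCOMPATIBLE)
    if needs_optical_readout and not conflicts:
        return "polystyrene", conflicts
    return "polypropylene", conflicts


if __name__ == "__main__":
    print(choose_plate_material(["water"], needs_optical_readout=True))
    print(choose_plate_material(["DMSO"], needs_optical_readout=True))
```

In the DMSO case the function falls back to polypropylene and reports the conflict; an optical readout would then require transferring aliquots to a compatible read plate.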

High-Throughput Experimentation (HTE) using batch modules has become a cornerstone of modern research and development in chemistry and pharmacology. By allowing numerous reactions to be conducted simultaneously under varied conditions, HTE enables a rapid and systematic exploration of complex chemical spaces. This approach is particularly powerful when integrated with machine learning (ML) algorithms, which guide experimental design towards optimal outcomes. This document details the key advantages of HTE batch systems, focusing on their capacity for parallelization, their ability to facilitate the efficient exploration of categorical variables, and their inherent cost-effectiveness. Framed within the context of reaction screening research, the following sections provide quantitative data, detailed protocols, and visual workflows to illustrate these core benefits.

Key Advantages and Data Presentation

HTE batch modules fundamentally accelerate research by parallelizing experiments and efficiently navigating the high-dimensional parameter spaces common in chemical synthesis. The quantitative impact of these advantages is summarized in the tables below.

Table 1: Throughput and Efficiency of HTE Platforms. This table compares different HTE configurations, highlighting their experimental throughput and primary applications [6] [17] [18].

| HTE Platform / Configuration | Typical Parallel Batch Size | Key Efficiency Metrics | Primary Applications / Advantages |
|---|---|---|---|
| Standard HTE Batch Module | 16-48 reactors [19] | High comparability; high-quality, scalable data [19]. | Catalyst testing and optimization (gas phase, synthesis gas) [19]. |
| Microtiter Plates (MTP) | 96/384 wells [17] | 192 reactions in ~4 days [17]; screens per quarter increased from ~20-30 to ~50-85 after automation [18]. | Stereoselective Suzuki–Miyaura couplings, Buchwald–Hartwig aminations, photochemical reactions [17]. |
| UltraHTE (MTP) | 1536 wells [17] | Exploration of larger reaction-parameter spaces [17]. | Optimization of chemistry-related processes, using a format originally developed for biological assays [17]. |
| ML-Driven Workflow (e.g., Minerva) | 96 wells per batch [6] | Identified optimal conditions in 4 weeks vs. a 6-month traditional campaign [6]. | Multi-objective optimization (e.g., yield, selectivity) for challenging transformations such as Ni-catalysed Suzuki reactions [6]. |

Table 2: Machine Learning Performance in Navigating Categorical Variables. This table summarizes the performance of different ML acquisition functions used in HTE for optimizing reactions with multiple, competing objectives [6].

| Machine Learning Acquisition Function | Key Feature / Scalability | Performance in Multi-Objective Optimization |
|---|---|---|
| q-NParEgo | Scalable for large batch sizes [6]. | Effective in optimizing multiple competing objectives (e.g., yield and selectivity) [6]. |
| Thompson Sampling with HVI (TS-HVI) | Scalable for large batch sizes [6]. | Effective in optimizing multiple competing objectives (e.g., yield and selectivity) [6]. |
| q-Noisy Expected Hypervolume Improvement (q-NEHVI) | Scalable for large batch sizes [6]. | Effective in optimizing multiple competing objectives (e.g., yield and selectivity) [6]. |
| Sobol Sampling | Used for initial batch selection [6]. | Maximizes initial reaction space coverage to discover informative regions [6]. |
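TS-HVI and q-NEHVI both rank candidate conditions by hypervolume improvement: how much a candidate would enlarge the region of objective space dominated by the current Pareto front. For two maximized objectives such as yield and selectivity, the hypervolume is a staircase area that is easy to compute exactly. A minimal sketch, assuming both objectives are percentages and using a reference point of (0, 0) chosen for illustration. In the acquisition functions themselves, the candidate's objectives come from GP predictions or posterior samples rather than known values.

```python
def hypervolume_2d(points, ref=(0.0, 0.0)):
    """Area dominated by `points` above `ref`, with both objectives maximized."""
    pts = sorted((p for p in points if p[0] > ref[0] and p[1] > ref[1]),
                 reverse=True)  # largest first objective first
    area, best_y = 0.0, ref[1]
    for x, y in pts:
        if y > best_y:  # only points that raise the staircase add area
            area += (x - ref[0]) * (y - best_y)
            best_y = y
    return area


def hv_improvement(front, candidate, ref=(0.0, 0.0)):
    """Extra dominated area gained by adding `candidate` to `front`."""
    return hypervolume_2d(list(front) + [candidate], ref) - hypervolume_2d(front, ref)


if __name__ == "__main__":
    front = [(80, 50), (60, 90)]  # hypothetical (yield %, selectivity %) pairs
    print(hypervolume_2d(front))              # 6400.0
    print(hv_improvement(front, (70, 60)))    # 100.0: fills a gap in the front
    print(hv_improvement(front, (50, 40)))    # 0.0: dominated, adds nothing
```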

Experimental Protocols

Protocol 1: ML-Driven Multi-Objective Reaction Optimization in a 96-Well HTE Batch Plate

This protocol describes the application of a scalable machine learning framework for optimizing chemical reactions with multiple objectives using an automated HTE batch platform [6].

1. Reaction Setup and Initialization

- Reaction Selection: Select a target chemical transformation (e.g., nickel-catalysed Suzuki coupling [6]).

- Define Search Space: Define the combinatorial space of plausible reaction conditions. This includes categorical variables (e.g., ligands, solvents, additives) and continuous variables (e.g., temperature, concentration). The space should be constrained by practical chemistry knowledge (e.g., excluding unsafe reagent combinations) [6].

- Initial Sampling: Use an algorithmic quasi-random Sobol sampling method to select an initial batch of 96 diverse reaction conditions. This maximizes the initial coverage of the reaction space [6].
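The initial-sampling step spreads the first batch evenly across the factor levels. Python's standard library has no Sobol generator, so the sketch below substitutes a Halton sequence, a different low-discrepancy sequence built on the same idea; in practice, `scipy.stats.qmc.Sobol` provides true Sobol points. It assumes every factor is a list of discrete options, with continuous variables binned into levels beforehand, and `initial_batch` is a hypothetical name.

```python
# Assumption: a Halton sequence stands in for Sobol here because the Python
# standard library has no Sobol generator. Both are low-discrepancy sequences.
PRIMES = (2, 3, 5, 7, 11, 13, 17, 19, 23, 29)  # one base per factor (max 10)


def radical_inverse(index: int, base: int) -> float:
    """Van der Corput radical inverse: mirror the base-`base` digits of index."""
    result, scale = 0.0, 1.0 / base
    while index > 0:
        index, digit = divmod(index, base)
        result += digit * scale
        scale /= base
    return result


def initial_batch(space: dict, n: int) -> list:
    """Pick n conditions by mapping Halton points onto each factor's options."""
    factors = list(space)
    if len(factors) > len(PRIMES):
        raise ValueError("add more prime bases for additional factors")
    batch = []
    for i in range(1, n + 1):  # start at 1 to skip the all-zero point
        condition = {}
        for factor, base in zip(factors, PRIMES):
            options = space[factor]
            u = radical_inverse(i, base)
            condition[factor] = options[min(int(u * len(options)), len(options) - 1)]
        batch.append(condition)
    return batch


if __name__ == "__main__":
    # Hypothetical option lists; a real space comes from the search-space definition.
    space = {"ligand": ["L1", "L2", "L3", "L4"],
             "solvent": ["toluene", "THF", "DMF"],
             "temp_c": [40, 60, 80]}
    plate = initial_batch(space, 96)  # one 96-well plate
    print(plate[:3])
```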

2. Automated Reaction Execution

- Liquid Handling: Use an automated liquid handling system to dispense solvents and liquid reagents into a 96-well plate [18].

- Solid Dosing: Employ an automated powder-dosing robot (e.g., CHRONECT XPR) to accurately dispense solid reagents (e.g., catalysts, bases, starting materials) into the designated wells. This ensures precision, especially at sub-milligram scales, and eliminates human error [18].

- Reaction Conditions: Place the sealed 96-well plate on a heating/stirring station that can maintain the required temperature and mixing for all wells simultaneously [17].

3. Analysis and Data Processing

- Product Analysis: After the reaction time has elapsed, analyze the reaction mixtures using inline or offline analytical tools, typically UPLC/MS or GC/MS [17].

- Data Extraction: Quantify reaction outcomes for multiple objectives, such as Area Percent (AP) yield and selectivity [6].

- Data Mapping: Map the collected outcome data to the corresponding experimental conditions in a digital format [6].
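The quantities in step 3 can be made concrete. AP yield is the product's share of the total integrated chromatographic area. The selectivity definition used below (product area over product plus named byproduct areas) is an assumption, because the protocol does not define it. A minimal sketch, assuming chromatograms are already integrated with solvent and internal-standard peaks excluded; peak names, wells, and helper names are hypothetical.

```python
def area_percent(peak_areas: dict, product: str) -> float:
    """AP yield: product peak area as a percentage of total integrated area."""
    total = sum(peak_areas.values())
    if total <= 0:
        raise ValueError("no integrated peak area")
    return 100.0 * peak_areas[product] / total


def selectivity_percent(peak_areas: dict, product: str, byproducts: list) -> float:
    """Assumed definition: product area over product + listed byproduct areas."""
    denom = peak_areas[product] + sum(peak_areas.get(b, 0.0) for b in byproducts)
    return 100.0 * peak_areas[product] / denom if denom > 0 else 0.0


def map_results(layout: dict, chromatograms: dict, product: str,
                byproducts: list) -> list:
    """Join per-well conditions with per-well outcomes into flat records."""
    records = []
    for well, conditions in layout.items():
        areas = chromatograms.get(well)
        if areas is None:
            continue  # missing injection: skip rather than record a false zero
        records.append({"well": well, **conditions,
                        "ap_yield": area_percent(areas, product),
                        "selectivity": selectivity_percent(areas, product, byproducts)})
    return records


if __name__ == "__main__":
    layout = {"A1": {"ligand": "L1", "solvent": "THF"},
              "A2": {"ligand": "L2", "solvent": "DMF"}}
    chromatograms = {"A1": {"product": 60.0, "isomer": 20.0, "sm": 20.0}}
    print(map_results(layout, chromatograms, "product", ["isomer"]))
```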

4. Machine Learning and Next-Batch Selection

- Model Training: Train a Gaussian Process (GP) regressor on all data collected to date. This model predicts reaction outcomes and their uncertainties for all possible conditions in the defined search space [6].

- Condition Selection: Use a scalable multi-objective acquisition function (e.g., q-NParEgo, TS-HVI) to evaluate all possible conditions. The function balances exploration (trying uncertain conditions) and exploitation (improving on known good conditions) to select the next most informative batch of 96 experiments [6].

- Iteration: Repeat steps 2-4 for as many iterations as desired, typically until convergence or the experimental budget is exhausted [6].
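q-NParEgo builds on ParEGO's idea of collapsing several objectives into one using randomly weighted augmented Chebyshev scalarizations. The sketch below shows only that scalarization, combined with a simple optimistic score (mean + β·std) to mimic the exploration/exploitation balance described in step 4. It is not q-NParEgo or TS-HVI, which optimize expected improvement or hypervolume improvement over GP posterior samples; libraries such as BoTorch provide q-NEHVI and Chebyshev-scalarization utilities. The sketch assumes GP predictions scaled to [0, 1], and all function names are hypothetical.

```python
import random


def simplex_weights(k: int, rng: random.Random) -> list:
    """Uniform random weights on the probability simplex (normalized exponentials)."""
    draws = [rng.expovariate(1.0) for _ in range(k)]
    total = sum(draws)
    return [d / total for d in draws]


def augmented_chebyshev(values, weights, rho: float = 0.05) -> float:
    """ParEGO-style scalarization (maximization form, objectives in [0, 1])."""
    weighted = [w * v for w, v in zip(weights, values)]
    return min(weighted) + rho * sum(weighted)


def select_batch(predictions: dict, batch_size: int, beta: float = 1.0,
                 rho: float = 0.05, seed: int = 0) -> list:
    """Greedy batch selection over candidate conditions.

    predictions maps condition id -> list of (mean, std) per objective.
    Each objective is scored optimistically as mean + beta * std, so larger
    beta favors uncertain conditions (exploration). Each batch slot draws
    fresh random weights, spreading picks across the trade-off surface.
    """
    rng = random.Random(seed)
    remaining = dict(predictions)
    chosen = []
    while remaining and len(chosen) < batch_size:
        k = len(next(iter(remaining.values())))
        weights = simplex_weights(k, rng)
        best = max(remaining, key=lambda cid: augmented_chebyshev(
            [m + beta * s for m, s in remaining[cid]], weights, rho))
        chosen.append(best)
        del remaining[best]
    return chosen


if __name__ == "__main__":
    preds = {"good": [(0.9, 0.0), (0.9, 0.0)],
             "poor": [(0.1, 0.0), (0.1, 0.0)],
             "uncertain": [(0.3, 0.5), (0.3, 0.5)]}
    print(select_batch(preds, 1, beta=0.0))  # exploitation picks "good"
    print(select_batch(preds, 1, beta=2.0))  # exploration picks "uncertain"
```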

Protocol 2: High-Throughput Catalyst Screening for a Gas-Phase Reaction

This protocol outlines the steps for parallel screening of heterogeneous catalysts using a dedicated 16-reactor HTE batch system [19].

1. System and Catalyst Preparation

- Reactor Loading: Place each unique catalyst to be screened into an individual reactor within the high-throughput system [19].

- System Check: Ensure the modular system is configured for the target application (e.g., gas-phase chemistry, syngas conversion) and is leak-tight [19].

2. Parallelized Reaction and Monitoring

- Process Control: Initiate the flow of reactant gases (e.g., H₂, CO) through all 16 reactors in parallel. The system maintains identical process conditions (pressure, temperature, gas flow rate) across all reactors [19].

- In-line Analytics: Utilize integrated analytical tools to monitor reaction products continuously or at set intervals. This generates high-quality, comparable data for each catalyst [19].

3. Data Evaluation and Scaling

- Performance Analysis: Evaluate catalyst performance based on metrics such as conversion, selectivity, and turnover frequency.

- Scalability Assessment: Use the high-quality data generated by the HTE system to inform decisions on scaling up the most promising catalysts for commercial evaluation [19].
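The three performance metrics named in the data-evaluation step can be written out explicitly. A minimal sketch, assuming steady-state operation, molar flows (mol/s) obtained from the in-line analytics, CO hydrogenation (syngas conversion) as the example reaction with carbon-based selectivity, and a known number of active sites (for example from chemisorption). Function names and all numbers are hypothetical.

```python
def conversion(f_in: float, f_out: float) -> float:
    """Fractional conversion of a reactant from inlet/outlet molar flows (mol/s)."""
    return (f_in - f_out) / f_in


def carbon_selectivity(f_product: float, carbon_number: int,
                       f_co_in: float, f_co_out: float) -> float:
    """Carbon-based selectivity to one product in CO hydrogenation (fraction).

    A C_n product contains n carbons, each originating from one converted CO.
    """
    return carbon_number * f_product / (f_co_in - f_co_out)


def turnover_frequency(f_in: float, f_out: float, active_sites_mol: float) -> float:
    """TOF in 1/s: mol reactant converted per mol active sites per second."""
    return (f_in - f_out) / active_sites_mol


if __name__ == "__main__":
    # Hypothetical reactor: CO in 2.0 µmol/s, CO out 1.5 µmol/s, CH4 out 0.4 µmol/s.
    print(f"X(CO)   = {conversion(2.0e-6, 1.5e-6):.2f}")
    print(f"S(CH4)  = {carbon_selectivity(0.4e-6, 1, 2.0e-6, 1.5e-6):.2f}")
    print(f"TOF     = {turnover_frequency(2.0e-6, 1.5e-6, 1.0e-4):.1e} 1/s")
```

Because all reactors in the module run under identical conditions, these metrics can be compared directly across catalysts without further normalization, apart from the active-site count.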

Workflow and Signaling Pathway Visualization

Diagram 1: ML-Driven HTE Optimization Workflow. This diagram illustrates the closed-loop, iterative process of machine-learning-guided high-throughput experimentation.

Diagram 2: Efficient Encoding of Categorical Variables. This diagram shows the transformation of high-cardinality categorical data into a compact numerical format for machine learning.
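Diagram 2 is not reproduced here, but the idea it names can be sketched. One-hot encoding gives each categorical option its own column, so a factor with dozens of ligands adds dozens of dimensions. Replacing each option with a few numeric descriptors keeps the feature vector compact. The sketch below contrasts the two using hypothetical ligand names and made-up descriptor values; it does not claim to reproduce the encoding used in [6].

```python
def one_hot(value, levels):
    """One column per level: dimension grows with the number of options."""
    return [1.0 if value == level else 0.0 for level in levels]


def descriptor_encoder(table):
    """Encoder mapping each option to a short numeric descriptor vector."""
    def encode(value):
        return list(table[value])
    return encode


def encode_condition(condition, encoders):
    """Concatenate per-factor encodings into one feature vector."""
    vector = []
    for factor, encoder in encoders.items():
        vector.extend(encoder(condition[factor]))
    return vector


if __name__ == "__main__":
    # Hypothetical descriptor values for illustration only.
    ligand_desc = {"L1": (0.2, 0.7), "L2": (0.9, 0.1)}
    solvents = ["toluene", "THF", "DMF"]
    encoders = {"ligand": descriptor_encoder(ligand_desc),
                "solvent": lambda s: one_hot(s, solvents)}
    print(encode_condition({"ligand": "L2", "solvent": "THF"}, encoders))
    # [0.9, 0.1, 0.0, 1.0, 0.0]
```

With 30 ligands, one-hot encoding would contribute 30 columns, whereas a two-descriptor table contributes 2 regardless of library size.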

The Scientist's Toolkit: Essential Research Reagent Solutions

The successful implementation of HTE relies on specialized materials and equipment. The following table details key solutions used in the featured experiments.

Table 3: Key Research Reagent Solutions for HTE Batch Modules.

| Item / Solution | Function in HTE | Application Example |
|---|---|---|
| Automated Powder Dosing System | Precisely dispenses solid reagents (catalysts, starting materials) at milligram to gram scales into reaction vials, ensuring accuracy and reproducibility [18]. | Dosing transition metal complexes and organic starting materials for catalytic cross-coupling reactions in a 96-well plate [18]. |
| Catalyst Libraries | Pre-prepared collections of diverse catalytic complexes (e.g., Ni, Pd) enabling rapid screening for a given transformation [6] [18]. | Screening for optimal catalyst in a Suzuki coupling or Buchwald-Hartwig amination [6] [17]. |
| Diverse Ligand Sets | Collections of ligand structures that, when screened, modulate catalyst activity and selectivity, crucial for reaction optimization [6]. | Identifying the optimal ligand for a nickel-catalysed transformation to improve yield and selectivity [6]. |
| Solvent Kits | A standardized set of solvents covering a range of polarities and properties, allowing for efficient solvent screening [17]. | Included as a categorical variable in an HTE screen to determine the optimal reaction medium [6] [17]. |
| MTP-Compatible Reagent Stocks | Pre-dissolved solutions of common reagents at standardized concentrations, facilitating rapid liquid handling via automated pipetting [17] [18]. | Used in a 96-well plate screen for a photoredox fluorodecarboxylation reaction to test different bases and fluorinating agents [20]. |

Implementing HTE Batch Screening: Protocols and Real-World Applications

High-Throughput Experimentation (HTE) has revolutionized reaction screening in modern chemical and pharmaceutical research. By leveraging automation and miniaturized reaction scales, HTE enables the highly parallel execution of numerous reactions, making the exploration of vast chemical spaces more cost- and time-efficient than traditional one-factor-at-a-time approaches [6]. This application note details a standardized workflow for implementing HTE batch modules, from initial reagent setup to final analysis, framed within the context of accelerating reaction optimization and drug development timelines.

Key Research Reagent Solutions

The success of an HTE campaign hinges on the careful selection and management of reagents. The following table catalogues essential materials and their functions in a typical HTE reaction screening platform [6].

Table 1: Essential Research Reagent Solutions for HTE Screening

| Reagent Category | Example Items | Primary Function in HTE |
|---|---|---|
| Catalysts | Nickel catalysts (e.g., Ni(acac)₂), Palladium catalysts (e.g., Pd₂(dba)₃) | Facilitate key bond-forming reactions (e.g., Suzuki couplings, Buchwald-Hartwig aminations) at low catalyst loadings [6]. |
| Ligands | Diverse phosphine ligands, N-heterocyclic carbenes | Modulate catalyst activity, stability, and selectivity; a primary variable for optimizing challenging transformations [6]. |
| Solvents | Dimethylformamide (DMF), Tetrahydrofuran (THF), 1,4-Dioxane, Toluene | Dissolve reactants and influence reaction outcome, kinetics, and mechanism. Selection is guided by pharmaceutical industry guidelines [6]. |
| Additives | Salts (e.g., K₃PO₄), Bases (e.g., Cs₂CO₃), Acids | Adjust reaction environment (pH, ionic strength), facilitate catalytic cycles, or sequester impurities [6]. |
| Substrates | Aryl halides, Boronic acids, Amines | The core reactants undergoing the chemical transformation of interest; often varied to study substrate scope [6]. |

Standardized HTE Workflow Protocol

This protocol outlines a robust, end-to-end process for conducting reaction screening using HTE batch modules, incorporating machine learning for efficient optimization.

Phase 1: Pre-Experimental Planning & Reagent Setup

Objective: To define the reaction condition space and prepare reagent stocks for highly parallel experimentation.

Methodology:

- Define the Search Space: Compile a discrete combinatorial set of all plausible reaction conditions. This includes categorical variables (e.g., solvents, ligands, additives) and continuous variables (e.g., temperature, concentration) [6].

- Apply Chemical Filters: Programmatically filter out impractical or unsafe condition combinations (e.g., reaction temperatures exceeding solvent boiling points, incompatible reagent pairs like NaH and DMSO) [6].

- Reagent Stock Solution Preparation:

- Prepare concentrated stock solutions of all catalysts, ligands, and substrates in appropriate, degassed solvents.

- Utilize automated liquid handling systems to dispense these stocks into designated wells of a master reaction block (e.g., 96-well format) to ensure precision and reproducibility.

- Store the master block under an inert atmosphere if moisture- or oxygen-sensitive reagents are involved.
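The search-space definition and chemical filters in Phase 1 map naturally onto enumerate-then-filter code. A minimal sketch: the boiling points are approximate literature values added for illustration, DMSO is included only to exercise the NaH/DMSO exclusion named above, and `build_search_space` is a hypothetical name. Sealed vessels are sometimes run above a solvent's boiling point, so this rule mirrors the conservative filter described in the text rather than a universal limit.

```python
from itertools import product

# Approximate normal boiling points (°C), for illustration.
BOILING_POINT_C = {"DMF": 153, "THF": 66, "1,4-dioxane": 101, "toluene": 111, "DMSO": 189}

# Known-hazardous pairs to exclude (NaH/DMSO is the example given in the text).
INCOMPATIBLE_PAIRS = {frozenset({"NaH", "DMSO"})}


def build_search_space(solvents, bases, temps_c, margin_c=0):
    """Enumerate solvent x base x temperature, dropping impractical or unsafe entries."""
    space = []
    for solvent, base, temp in product(solvents, bases, temps_c):
        if temp > BOILING_POINT_C[solvent] - margin_c:
            continue  # temperature would exceed the solvent's boiling point
        if frozenset({base, solvent}) in INCOMPATIBLE_PAIRS:
            continue  # unsafe reagent pair
        space.append({"solvent": solvent, "base": base, "temp_c": temp})
    return space


if __name__ == "__main__":
    space = build_search_space(["THF", "toluene", "DMSO"], ["NaH", "K3PO4", "Cs2CO3"],
                               [25, 60, 80, 100])
    print(len(space), "of", 3 * 3 * 4, "combinations survive the filters")
```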

Phase 2: Reaction Execution & Parallel Workup

Objective: To initiate reactions in a highly parallel manner and process them for analysis.

Methodology:

- Initial Batch Selection: Initiate the campaign using algorithmic quasi-random Sobol sampling to select an initial batch of experiments (e.g., one 96-well plate). This ensures diverse coverage of the reaction condition space [6].

- Reaction Initiation: Use an automated robotic platform to dispense the final reactants or initiating agents (e.g., bases) into the master reaction block. Securely seal the plate to prevent evaporation.

- Environmental Control: Place the reaction block into a pre-heated/cooled agitator (e.g., an orbital shaker within an incubator) to maintain constant temperature and mixing for the specified reaction duration.

- Parallel Quenching & Workup: After the allotted time, automatically transfer the reaction mixtures to a workup block containing a quenching solution (e.g., aqueous buffer, silica slurry). Subsequent parallel operations may include liquid-liquid extraction using an automated plate washer or filtration.

Phase 3: Analysis & Data-Driven Optimization

Objective: To analyze reaction outcomes and use machine learning to guide subsequent experimental batches.

Methodology:

- High-Throughput Analysis: Analyze the worked-up reaction samples using parallel analytical techniques, typically UPLC-MS or HPLC-MS. Key quantitative outputs include Area Percent (AP) Yield and Selectivity [6].

- Machine Learning Model Training: Input the experimental conditions and their corresponding outcomes (yield, selectivity) into a Gaussian Process (GP) regressor. The GP model learns to predict reaction outcomes and their uncertainties for all possible condition combinations within the defined search space [6].

- Next-Batch Experiment Selection: Use a multi-objective acquisition function (e.g., q-NParEgo, Thompson Sampling with Hypervolume Improvement) to select the next batch of experiments. This function balances exploration of uncertain regions of the search space with exploitation of known high-performing regions [6].

- Iterative Optimization: Repeat the cycle of experiment execution, analysis, and model-informed batch selection until convergence is achieved, performance stagnates, or the experimental budget is exhausted.
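The stopping rule in the final step can be made explicit. A minimal sketch, assuming the campaign tracks the best objective value (e.g., AP yield in %) after each batch; the `patience` and `min_gain` thresholds are hypothetical defaults to be tuned per campaign, and a model-based criterion such as a plateau in hypervolume could replace the single-objective check.

```python
def should_stop(best_so_far, max_batches, patience=2, min_gain=1.0):
    """Decide whether to end an ML-guided HTE campaign.

    best_so_far: best objective value observed after each completed batch,
    in order. Stops when the batch budget is used up, or when the best value
    improved by less than min_gain over the last `patience` batches.
    """
    if len(best_so_far) >= max_batches:
        return True, "budget exhausted"
    if len(best_so_far) > patience:
        gain = best_so_far[-1] - best_so_far[-1 - patience]
        if gain < min_gain:
            return True, "stagnated"
    return False, "continue"


if __name__ == "__main__":
    history = [40.0, 60.0, 60.2, 60.5]  # hypothetical best AP yield per batch
    print(should_stop(history, max_batches=10))  # (True, 'stagnated')
```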

Workflow Visualization

The following diagram illustrates the integrated, iterative process of the standard HTE workflow.

Quantitative Data Presentation

The performance of the HTE workflow is quantified by its efficiency in navigating the reaction condition space. The table below summarizes key metrics and outcomes from a published optimization campaign [6].

Table 2: Performance Metrics from an HTE Optimization Campaign for a Nickel-Catalyzed Suzuki Reaction

| Parameter | Value / Outcome | Context & Significance |
|---|---|---|
| Search Space Size | ~88,000 conditions | Demonstrates the ability to navigate a vast combinatorial space [6]. |
| HTE Platform | 96-well plates | Standardized format for highly parallel experimentation [6]. |
| Key Outcomes (ML-guided) | 76% AP Yield, 92% Selectivity | Successfully identified high-performing conditions for a challenging transformation where traditional methods failed [6]. |
| Comparison (Chemist-designed) | No successful conditions found | Highlights the advantage of the ML-driven workflow over traditional intuition-based screening [6]. |
| Pharmaceutical Case Study Result | >95% AP Yield and Selectivity | Achieved for both a Ni-catalyzed Suzuki coupling and a Pd-catalyzed Buchwald-Hartwig reaction, validating robustness [6]. |
| Timeline Acceleration | 4 weeks vs. 6 months | ML-driven HTE identified improved process conditions at scale significantly faster than a prior development campaign [6]. |
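For scale, exhaustively testing the ~88,000-condition space on 96-well plates would require roughly the following number of plates (simple arithmetic on the figures in the table; the search-space size is itself approximate):

```latex
N_{\text{plates}} = \left\lceil \frac{88\,000}{96} \right\rceil = \lceil 916.7 \rceil = 917
```

At that scale, exhaustive screening is impractical, which is why the workflow relies on model-guided selection of a small, informative subset of conditions.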

High-throughput experimentation (HTE) has emerged as a powerful strategy for accelerating chemical synthesis and optimization, offering a systematic approach to navigating complex reaction parameter spaces efficiently [21]. This application note details a proven HTE protocol for the optimization of a Buchwald-Hartwig amination, a cornerstone reaction in pharmaceutical development for forming C–N bonds. The methodology is presented within the broader research context of employing HTE batch modules for reaction screening, enabling the rapid acquisition of rich datasets with minimal time and resource investment [21]. This approach is particularly valuable for drug development professionals and process chemists who require robust, scalable, and data-driven methods to expedite development timelines.

Research Reagent Solutions

The successful execution of an HTE campaign hinges on the preparation and organization of key reagents. The table below catalogues essential materials for a typical Buchwald-Hartwig amination screening.

Table 1: Essential Research Reagents and Materials for HTE Screening

| Reagent/Material | Function/Role in Screening |
|---|---|
| 96-Well Aluminum Reaction Plate | Machined aluminum plate serving as a modular batch reactor for parallel execution of 96 reactions with simultaneous heating and mixing [21]. |
| Solid Transfer Scoops | Enables rapid and parallel transfer of solid reagents, such as catalysts, bases, and ligands, crucial for maintaining high-throughput workflows without robotic automation [21]. |
| Palladium Catalysts | Transition metal catalyst facilitating the cross-coupling reaction. Multiple precursors (e.g., Pd₂(dba)₃, Pd(OAc)₂) are typically screened [21]. |
| Ligand Library | A diverse collection of phosphine-based ligands (e.g., BippyPhos, XPhos, BrettPhos) that modulate the catalyst's reactivity and selectivity [21] [6]. |
| Base Library | Inorganic and organic bases (e.g., K₃PO₄, Cs₂CO₃, NaOtBu) that facilitate the catalytic cycle by deprotonating the amine coupling partner [21]. |
| Solvent Library | A range of organic solvents (e.g., toluene, 1,4-dioxane, DMF) with varying polarity and coordinating ability to solvate reagents and influence reaction outcome [21]. |
| Plastic Filter Plate | Used for parallel post-reaction workup, such as quenching and filtration, to prepare samples for analysis [21]. |

Experimental Protocol & Workflow

This section provides a detailed, step-by-step methodology for conducting an HTE campaign for a Buchwald-Hartwig amination, from initial setup to data analysis.

Protocol for HTE Screening in Multiwell Plates

Objective: To identify optimal reaction conditions for a Buchwald-Hartwig amination by simultaneously screening 96 different combinations of catalyst, ligand, base, and solvent.

Materials and Equipment:

- 96-well aluminum reaction plate

- Magnetic stir bars (one per well)

- Magnetic stirrer

- Solid transfer scoops and pipettes

- Reagents: Aryl halide, amine, palladium catalyst library, ligand library, base library, solvent library

- Plastic filter plate for parallel workup

- Gas Chromatograph (GC) or GC-Mass Spectrometry (GC-MS) for analysis

Procedure:

- Plate Design & Layout: Define a screening array that systematically varies key parameters. A sample design is shown in Table 2.

- Reagent Dispensing:

- Using pipettes, distribute stock solutions of the aryl halide and amine to all 96 wells according to the predetermined layout.

- Using solid transfer scoops, add the appropriate solid catalysts, ligands, and bases to each well [21].

- Finally, add the designated solvents to each well using pipettes.

- Reaction Execution: Seal the reaction plate and place it on a pre-heated magnetic stirrer/hotplate. Stir the reactions simultaneously for the designated time (e.g., 18 hours) at a constant temperature (e.g., 80-100 °C) [21].

- Parallel Workup and Quenching: After the reaction time, remove the plate from heat and simultaneously quench all reactions by adding a standard quenching solution (e.g., a mixture of water and an organic solvent) across the plate. A filter plate can be used to remove particulates.

- Sample Analysis: Dilute an aliquot from each well and analyze by GC or GC-MS to determine reaction yield and conversion [21].

- Data Analysis: Compile the yields from all 96 experiments to create a comprehensive data matrix. The best-performing condition can be selected for immediate scale-up, or the dataset can be used to inform subsequent, more focused optimization rounds or machine learning-driven campaigns [21] [6].
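The data-analysis step can be prototyped in a few lines. A minimal sketch using the four illustrative rows of Table 2 as input: it picks the best well and computes the mean yield for each level of one factor, a naive main-effects view. Field names and helper names are hypothetical, and in non-factorial designs such averages are confounded with the other factors, so they should be treated as hypotheses.

```python
from collections import defaultdict
from statistics import mean


def best_condition(results):
    """Highest-yielding well in the screen."""
    return max(results, key=lambda r: r["yield"])


def main_effects(results, factor):
    """Mean yield for each level of one factor (a naive first look)."""
    groups = defaultdict(list)
    for r in results:
        groups[r[factor]].append(r["yield"])
    return {level: mean(ys) for level, ys in groups.items()}


if __name__ == "__main__":
    # The four illustrative rows listed in Table 2.
    results = [
        {"well": "A1", "ligand": "XPhos", "base": "K3PO4", "solvent": "Toluene", "yield": 85},
        {"well": "A2", "ligand": "BrettPhos", "base": "Cs2CO3", "solvent": "1,4-Dioxane", "yield": 92},
        {"well": "A3", "ligand": "BippyPhos", "base": "NaOtBu", "solvent": "DMF", "yield": 45},
        {"well": "H12", "ligand": "JohnPhos", "base": "K3PO4", "solvent": "Toluene", "yield": 78},
    ]
    print(best_condition(results)["well"])     # A2
    print(main_effects(results, "solvent"))    # Toluene averages 81.5
```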

Quantitative Data from HTE Campaigns

The power of HTE lies in generating large, comparable datasets. The following table summarizes hypothetical quantitative outcomes from a 96-well screening, illustrating how optimal conditions are identified.

Table 2: Exemplary HTE Screening Results for Buchwald-Hartwig Amination (Illustrative GC Yields, %)

| Well | Catalyst | Ligand | Base | Solvent | Yield (%) |
|---|---|---|---|---|---|
| A1 | Pd₂(dba)₃ | XPhos | K₃PO₄ | Toluene | 85 |
| A2 | Pd₂(dba)₃ | BrettPhos | Cs₂CO₃ | 1,4-Dioxane | 92 |
| A3 | Pd(OAc)₂ | BippyPhos | NaOtBu | DMF | 45 |
| ... | ... | ... | ... | ... | ... |
| H12 | Pd(OAc)₂ | JohnPhos | K₃PO₄ | Toluene | 78 |

Recent studies highlight the synergy between HTE and machine learning (ML). For instance, a scalable ML framework (Minerva) was applied to optimize a Pd-catalysed Buchwald-Hartwig reaction for an Active Pharmaceutical Ingredient (API), identifying multiple conditions achieving >95% yield and selectivity, thereby significantly accelerating process development [6]. Furthermore, data-driven analysis of large historical HTE datasets using statistical methods like z-scores can reveal optimal conditions that differ from traditional literature-based guidelines, providing higher-quality starting points for optimization campaigns [22].
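The z-score method of [22] is not detailed here. One plausible reading, sketched below as an assumption, is to standardize yields within each reaction, so that intrinsically easy and hard substrates become comparable, and then average the z-scores for each condition level across reactions. Record fields and function names are hypothetical.

```python
from collections import defaultdict
from statistics import mean, pstdev


def zscores_within_reaction(records):
    """Attach z = (yield - reaction mean) / reaction std to each record."""
    by_reaction = defaultdict(list)
    for r in records:
        by_reaction[r["reaction"]].append(r)
    scored = []
    for rows in by_reaction.values():
        ys = [r["yield"] for r in rows]
        mu, sd = mean(ys), pstdev(ys)
        for r in rows:
            z = 0.0 if sd == 0 else (r["yield"] - mu) / sd
            scored.append({**r, "z": z})
    return scored


def rank_levels(records, factor):
    """Levels of `factor` sorted by mean within-reaction z-score, best first."""
    groups = defaultdict(list)
    for r in zscores_within_reaction(records):
        groups[r[factor]].append(r["z"])
    return sorted(((mean(zs), level) for level, zs in groups.items()), reverse=True)


if __name__ == "__main__":
    # Hypothetical history: ligand B beats A on both a low- and a high-yield reaction.
    history = [
        {"reaction": "R1", "ligand": "A", "yield": 10},
        {"reaction": "R1", "ligand": "B", "yield": 30},
        {"reaction": "R2", "ligand": "A", "yield": 60},
        {"reaction": "R2", "ligand": "B", "yield": 80},
    ]
    print(rank_levels(history, "ligand"))  # [(1.0, 'B'), (-1.0, 'A')]
```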

Workflow and Data Analysis Visualization

The overall process of an ML-enhanced HTE campaign, from initial setup to the identification of optimized conditions, can be visualized as a logical workflow. The following diagram delineates the key stages and decision points.

The physical organization of reagents within a 96-well plate is a critical aspect of HTE campaign design. The following diagram illustrates a simplified plate map for a two-dimensional screen.
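The plate map itself is not reproduced here. A minimal sketch of the same idea, assuming bases vary down rows A-H and ligands across columns 1-12 of a 96-well plate (an arbitrary assignment); the base and ligand names are taken from the tables above, and `plate_map_2d` is a hypothetical name.

```python
import string


def plate_map_2d(row_levels, col_levels):
    """Map each used well of a 96-well plate to a (row factor, column factor) pair."""
    if len(row_levels) > 8 or len(col_levels) > 12:
        raise ValueError("a 96-well plate has 8 rows and 12 columns")
    return {f"{string.ascii_uppercase[r]}{c + 1}": (row_value, col_value)
            for r, row_value in enumerate(row_levels)
            for c, col_value in enumerate(col_levels)}


if __name__ == "__main__":
    bases = ["K3PO4", "Cs2CO3", "NaOtBu"]
    ligands = ["XPhos", "BrettPhos", "BippyPhos", "JohnPhos"]
    plate = plate_map_2d(bases, ligands)
    for well in ("A1", "B2", "C4"):
        print(well, plate[well])
```

Keeping one factor per axis makes row- or column-wise trends (for example, one base failing across all ligands) visible at a glance in a plate heat map.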

The precise formation of carbon-carbon (C–C) bonds is a cornerstone of organic synthesis, pivotal for constructing complex molecules in pharmaceuticals, materials science, and agrochemicals [23]. Among the most powerful methods for achieving this are cross-coupling reactions, which have been recognized as significant milestones in organic and organometallic chemistry [23]. The Suzuki-Miyaura cross-coupling (SMC) reaction, in particular, has become an indispensable tool due to its exceptional versatility, mild reaction conditions, and broad functional group tolerance [23] [24]. Its impact was underscored by the 2010 Nobel Prize in Chemistry, awarded to Richard F. Heck, Ei-ichi Negishi, and Akira Suzuki for palladium-catalyzed cross-couplings in organic synthesis.

More recently, photoredox catalysis has emerged as a complementary and sustainable strategy for activating small molecules [25]. This approach utilizes light to generate highly reactive species from photocatalysts, enabling unique transformations that are often challenging via traditional thermal pathways. The fusion of photoredox chemistry with established methods like SMC is expanding the synthetic toolbox, allowing chemists to tackle increasingly complex synthetic challenges.

This article details practical applications and protocols for these reactions, framed within the context of High-Throughput Experimentation (HTE) batch modules for reaction screening. The provided guidelines are designed for researchers and development scientists seeking to implement these powerful methods in drug discovery and development programs.

Application Notes & Experimental Protocols

Suzuki-Miyaura Cross-Coupling (SMC)

- Reaction Setup: Conduct all manipulations under an inert atmosphere (N₂ or Ar) using standard Schlenk techniques or in a glovebox.

- Reagents:

- Aryl halide (e.g., 4-bromotoluene) (1.0 equiv, 0.5 mmol)

- Arylboronic acid (e.g., phenylboronic acid) (1.2 equiv, 0.6 mmol)

- Palladium catalyst (e.g., Pd(PPh₃)₄ or Pd(dppf)Cl₂) (2 mol%)

- Base (e.g., K₂CO₃ or Cs₂CO₃) (2.0 equiv, 1.0 mmol)

- Solvent: 5 mL of a 4:1 mixture of toluene/ethanol or dioxane/water.

- Procedure:

- Charge a dried HTE vial with a magnetic stir bar.

- Weigh in the palladium catalyst, followed by the aryl halide, boronic acid, and base.

- Add the degassed solvent mixture via syringe.

- Seal the vial and heat the reaction mixture to 80-90 °C with stirring for 12-16 hours.

- Monitor reaction progress by TLC or LC/MS.

- Upon completion, cool the reaction to room temperature.

- Quench by adding 10 mL of saturated aqueous NH₄Cl solution and extract with ethyl acetate (3 × 15 mL).

- Dry the combined organic layers over anhydrous MgSO₄, filter, and concentrate under reduced pressure.

- Purify the crude product by flash column chromatography on silica gel.
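Converting the equivalents in the reagent list into weighed amounts is routine arithmetic that is easy to script across many vials. A minimal sketch, assuming Pd(PPh₃)₄ as the catalyst and K₂CO₃ as the base; the molar masses are standard rounded values added here (not from the source) and should be checked against the supplier's certificate, particularly for hydrates or solvates. `charge_table` is a hypothetical name.

```python
# Molar masses (g/mol), standard rounded values; verify against supplier data.
MOLAR_MASS = {
    "4-bromotoluene": 171.04,
    "phenylboronic acid": 121.93,
    "K2CO3": 138.21,
    "Pd(PPh3)4": 1155.56,
}


def charge_table(limiting_mmol: float, equivalents: dict) -> dict:
    """mmol and mg of each reagent; equivalents are relative to the limiting reagent.

    Catalyst loading in mol% is entered as equivalents (2 mol% -> 0.02).
    mmol x g/mol gives mg directly.
    """
    return {name: (limiting_mmol * eq, limiting_mmol * eq * MOLAR_MASS[name])
            for name, eq in equivalents.items()}


if __name__ == "__main__":
    plan = charge_table(0.5, {"4-bromotoluene": 1.0, "phenylboronic acid": 1.2,
                              "K2CO3": 2.0, "Pd(PPh3)4": 0.02})
    for name, (mmol, mg) in plan.items():
        print(f"{name:>20}: {mmol:.3f} mmol, {mg:.1f} mg")
```

With 5 mL of solvent, 0.5 mmol of aryl halide corresponds to a 0.1 M reaction concentration.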

HTE Considerations for SMC Optimization

The performance of SMC reactions is highly sensitive to several parameters, making them ideal for HTE screening [24]. Key variables to screen in a batch module include:

- Ligands: Test a library of phosphine (monodentate vs. bidentate) and N-heterocyclic carbene (NHC) ligands. Electron-deficient monophosphines often accelerate the transmetalation step [24].

- Boron Source: Evaluate different organoboron reagents (boronic acids, esters, trifluoroborates) to balance reactivity and stability. Neopentyl glycol esters offer a good compromise [24].

- Base and Solvent: Screen bases (e.g., K₃PO₄, KOtBu, TMSOK) and solvent systems (aqueous/organic mixtures, 2-MeTHF) to mitigate issues such as halide inhibition and poor boronate solubility [24].
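Because these variables are discrete, a first plate is often laid out as a full-factorial array. As a minimal sketch, assuming four ligands, three bases, and two solvent systems picked from the options above and in Table 2 (the specific levels and the `plate_layout` helper are hypothetical), 4 × 3 × 2 = 24 combinations fill a 24-well plate exactly:

```python
from itertools import product

# Hypothetical levels drawn from the variables discussed above and in Table 2.
LIGANDS = ["SPhos", "XPhos", "dppf", "P(t-Bu)3"]
BASES = ["K3PO4", "KOtBu", "TMSOK"]
SOLVENTS = ["2-MeTHF/H2O", "dioxane/H2O"]


def plate_layout(rows="ABCD", n_cols=6):
    """Map every ligand x base x solvent combination onto a 24-well plate (A1-D6)."""
    wells = [f"{r}{c}" for r in rows for c in range(1, n_cols + 1)]
    combos = list(product(LIGANDS, BASES, SOLVENTS))
    if len(combos) > len(wells):
        raise ValueError(f"{len(combos)} combinations do not fit in {len(wells)} wells")
    return dict(zip(wells, combos))


layout = plate_layout()
for well, (ligand, base, solvent) in layout.items():
    print(well, ligand, base, solvent)
```

Because `itertools.product` varies the last factor fastest, each plate row holds a single ligand, which makes row-wise ligand effects easy to spot on a heat map of yields.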

Photoredox Catalysis

- Reaction Setup: Perform in a dried HTE vial equipped with a magnetic stir bar. Use a transparent vial or reactor suitable for irradiation with visible light.

- Reagents:
  - Substrate (e.g., tetrahydrofuran) (1.0 equiv, 0.2 mmol)
  - Electrophile (e.g., bromomalonate) (2.0 equiv, 0.4 mmol)
  - Photocatalyst (e.g., Eosin Y) (2 mol%)
  - Hydrogen atom transfer (HAT) catalyst (e.g., thiol) (optional, 20 mol%)

- Procedure:
  - Add the substrate and electrophile to the HTE vial.
  - Add the photocatalyst and any additives (e.g., the HAT catalyst).
  - Add degassed acetonitrile (2 mL) as the solvent.
  - Seal the vial and place it in the HTE photoreactor module.
  - Irradiate with blue LEDs (~450-465 nm) while stirring vigorously for 6-24 hours. Calibrate the light source so that photon flux is consistent across all wells.
  - Monitor the reaction by LC/MS.
  - Upon completion, remove the vial from the reactor and concentrate the mixture directly under reduced pressure.
  - Purify the residue by flash chromatography.

HTE Considerations for Photoredox Screening

- Light Source: Uniform irradiation across all wells in a batch module is critical. LED arrays provide consistent intensity and wavelength control.

- Photocatalyst Library: Screen a range of organic dyes (Eosin Y, methylene blue, Rose Bengal) and transition-metal complexes ([Ir(ppy)₃], [Ru(bpy)₃]²⁺) to match redox potentials with substrate requirements [25].

- Oxygen Sensitivity: Although some organic dyes are relatively robust, most photoredox cycles proceed through excited states and radical intermediates that O₂ can quench or trap. Thorough degassing of solvents and reagents is therefore essential for reproducible HTE results.
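Photon-flux calibration and well-to-well uniformity can be checked with a short calculation. Assuming a power-meter reading in milliwatts at each well position and treating the LED as monochromatic (real LEDs emit a band tens of nanometers wide), the flux follows from the photon energy E = hc/λ. The readings and function names below are hypothetical; chemical actinometry (for example, potassium ferrioxalate) is a common independent check.

```python
import statistics

H = 6.62607015e-34    # Planck constant, J*s
C = 2.99792458e8      # speed of light, m/s
N_A = 6.02214076e23   # Avogadro constant, 1/mol


def photon_flux_umol_s(power_mw, wavelength_nm):
    """Photon flux (umol photons/s) for optical power at a single wavelength."""
    joules_per_photon = H * C / (wavelength_nm * 1e-9)
    photons_per_s = (power_mw * 1e-3) / joules_per_photon
    return photons_per_s / N_A * 1e6


def uniformity_cv(values):
    """Coefficient of variation (%) across wells; lower means more uniform."""
    return 100 * statistics.stdev(values) / statistics.mean(values)


# Hypothetical power-meter readings (mW) at four well positions under a 455 nm array.
readings = [98.0, 101.5, 99.2, 102.3]
fluxes = [photon_flux_umol_s(p, 455) for p in readings]
print([round(f, 3) for f in fluxes], f"CV = {uniformity_cv(fluxes):.2f}%")
```

Because flux scales linearly with power, the coefficient of variation is the same whether computed from power or from flux; what CV is acceptable for a given plate is a lab-specific decision.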

Table 1: Comparison of Common Photoredox Catalysts [25]

| Catalyst | λmax (nm) | Excited State | E*red, excited-state reduction potential (V vs SCE) | E*ox, excited-state oxidation potential (V vs SCE) |
|---|---|---|---|---|
| Eosin Y | 520 | Triplet | +0.83 | -1.15 |
| Methylene Blue | 650 | Triplet | +1.14 | -0.33 |
| Rose Bengal | 549 | Triplet | +0.81 | -0.96 |
| Mes-Acr⁺ | 425 | Singlet | +2.32 | - |
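Matching redox potentials to a substrate amounts to a sign check on the free energy of single-electron transfer. The sketch below uses the Table 1 values with a simplified estimate that ignores Coulombic work terms and kinetics: for oxidation of the substrate by the excited catalyst, ΔG (eV) ≈ E_ox(substrate) − E*red; for reduction of the substrate, ΔG (eV) ≈ E*ox − E_red(substrate). Negative ΔG means thermodynamically favorable. The substrate potentials in the example are hypothetical.

```python
# Excited-state potentials (V vs SCE) copied from Table 1; None = not listed.
CATALYSTS = {
    "Eosin Y": (0.83, -1.15),
    "Methylene Blue": (1.14, -0.33),
    "Rose Bengal": (0.81, -0.96),
    "Mes-Acr+": (2.32, None),
}


def dG_oxidize_substrate(e_star_red, e_ox_substrate):
    """Excited catalyst takes an electron from the substrate: dG (eV) = E_ox(S) - E*red."""
    return e_ox_substrate - e_star_red


def dG_reduce_substrate(e_star_ox, e_red_substrate):
    """Excited catalyst gives an electron to the substrate: dG (eV) = E*ox - E_red(S)."""
    return e_star_ox - e_red_substrate


def oxidizing_candidates(e_ox_substrate):
    """Catalysts whose excited state can oxidize the substrate exergonically."""
    return [name for name, (e_red, _) in CATALYSTS.items()
            if dG_oxidize_substrate(e_red, e_ox_substrate) < 0]


def reducing_candidates(e_red_substrate):
    """Catalysts whose excited state can reduce the substrate exergonically."""
    return [name for name, (_, e_ox) in CATALYSTS.items()
            if e_ox is not None and dG_reduce_substrate(e_ox, e_red_substrate) < 0]


# Hypothetical substrate potentials (V vs SCE), for illustration only.
print(oxidizing_candidates(1.00))   # ['Methylene Blue', 'Mes-Acr+']
print(reducing_candidates(-0.90))   # ['Eosin Y', 'Rose Bengal']
```

A favorable ΔG is necessary but not sufficient: excited-state lifetime, light absorption at the LED wavelength, and competing quenching pathways still decide whether a catalyst works in practice.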

Table 2: Key Reaction Parameters for Suzuki-Miyaura Coupling Optimization [23] [24]

| Parameter | Options for HTE Screening | Impact / Consideration |
|---|---|---|
| Electrophile (R-X) | Aryl/Benzyl Chlorides, Bromides, Iodides, Triflates | I > OTf > Br >> Cl (reactivity); Cost & availability |
| Boron Source | Boronic Acids, Pinacol Esters, Neopentyl Glycol Esters, Trifluoroborates | Trade-off between reactivity and stability (protodeboronation) |
| Ligand | P(t-Bu)₃, SPhos, XPhos, dppf, NHC ligands | Dictates stability of Pd(0) and rate of oxidative addition/transmetalation |
| Base | K₂CO₃, Cs₂CO₃, K₃PO₄, KOtBu, TMSOK | Activates boron reagent; Affects solubility of boronate complex |
| Solvent | Toluene/Water, Dioxane/Water, DMF, 2-MeTHF/Water | Polarity affects boronate formation and halide salt inhibition |

Table 3: The Scientist's Toolkit: Key Research Reagent Solutions

| Reagent / Material | Function / Explanation |
|---|---|
| Palladium Precursors (e.g., Pd(OAc)₂, Pd₂(dba)₃) | Sources of the active Pd(0) species for the catalytic cycle; Pd(II) salts such as Pd(OAc)₂ are reduced in situ [23]. |
| Buchwald-type Ligands (e.g., SPhos, XPhos) | Bulky, electron-rich phosphines that facilitate oxidative addition of aryl chlorides and stabilize the Pd center [23] [24]. |
| Organoboron Reagents (e.g., Aryl Boronic Esters) | Generally more air- and moisture-stable and less prone to protodeboronation than boronic acids, which makes them well suited to HTE stock solutions [24]. |
| Organic Photoredox Catalysts (e.g., Eosin Y, Mes-Acr⁺) | Metal-free catalysts, avoiding transition-metal residues, that absorb visible light to generate potent excited-state oxidants or reductants [25]. |
| Inorganic Bases (e.g., Cs₂CO₃, K₃PO₄) | Weak bases that activate the organoboron reagent for transmetalation while minimizing base-sensitive side reactions [23]. |
| Degassed Solvents (anhydrous where required) | Essential for both Pd-catalyzed reactions (O₂ oxidizes the Pd(0) catalyst and phosphine ligands) and photoredox reactions (O₂ quenches excited states and traps radical intermediates). Aqueous co-solvents used in SMC must be degassed as well. |

Workflow and Mechanism Visualization

Diagram 1: HTE Batch Module Screening Workflow

Diagram 2: Suzuki-Miyaura Catalytic Cycle

Diagram 3: General Photoredox Catalytic Cycle

Application Note: Automated Reaction Screening and Optimization at Eli Lilly

Eli Lilly has deployed custom High-Throughput Experimentation (HTE) platforms at industrial scale to accelerate drug discovery and development. The company's approach integrates laboratory automation with machine learning algorithms that optimize multiple reaction variables simultaneously, enabling exploration of high-dimensional parameter spaces that would be impractical to investigate manually [26]. Replacing one-variable-at-a-time optimization with simultaneous multivariate optimization can substantially reduce experimentation time while minimizing human intervention.

Lilly's HTE infrastructure encompasses multiple specialized platforms, including a remote-controlled robotic cloud lab built in collaboration with Strateos, Inc., and the recently launched Lilly TuneLab AI platform [27] [28]. These systems physically and virtually integrate the stages of the drug discovery process (design, synthesis, purification, analysis, sample management, and hypothesis testing) into fully automated workflows. The 11,500-square-foot Strateos-powered lab is operated through a web-based interface that lets research scientists control experiments remotely with high reproducibility [27].

Quantitative Platform Specifications and Performance Metrics

Table 1: Performance Metrics of Lilly's HTE and AI Platforms

| Platform Component | Key Specifications | Scale | Automation Level | Data Integration |
|---|---|---|---|---|
| Strateos Cloud Lab | 11,500 sq ft facility; integrated synthesis, purification, and analysis | Not specified | Full remote operation via web interface | Real-time experiment refinement |
| Lilly TuneLab AI | Models trained on data from $1B+ of research investment; federated learning architecture | Training data from hundreds of thousands of unique molecules | Predictive model deployment | Privacy-preserving data contribution |
| NVIDIA Supercomputer | 1,000+ Blackwell Ultra GPUs; unified high-speed network; scheduled to become operational in January 2026 [29] | Model training on millions of experiments | AI model training at scale | Not specified |

Experimental Results and Validation

Lilly's integrated HTE approach targets reaction optimization efficiency: its machine learning algorithms are designed to identify optimal reaction conditions within complex multivariate parameter spaces that traditionally required extensive manual experimentation [26]. The AI models in Lilly TuneLab are trained on drug disposition, safety, and preclinical datasets representing experimental data from hundreds of thousands of unique molecules [28]. Because these are molecular-property data rather than reaction data, such models are better suited to prioritizing which compounds to make and screen than to predicting reaction outcomes directly.

The supercomputer being built in partnership with NVIDIA, scheduled to become operational in January 2026, is described by Lilly as the pharmaceutical industry's most powerful computational resource dedicated to drug discovery [29]. This "AI factory" is intended to let scientists train AI models on millions of experiments to test potential medicines, expanding the scope of reaction screening and optimization. Eli Lilly anticipates that these computational capabilities will deliver their full benefits by 2030, potentially including new molecules that could not be identified through human effort alone [29].

Protocol: Implementation of Automated Reaction Screening

Experimental Workflow for HTE Reaction Optimization

The following diagram illustrates the integrated workflow combining robotic automation with AI-driven experimental design and optimization.

Step-by-Step Experimental Procedure

Pre-Experimental Setup Phase

- Reaction Objective Definition: Clearly define the chemical transformation target, desired yield thresholds, and critical quality attributes for the reaction products.

- Parameter Space Identification: Identify all variables to be optimized, including catalyst loading, temperature, solvent systems, concentration, and reaction time.

- Experimental Design Configuration: Using the Lilly TuneLab AI platform, configure the design of experiments (DoE) to maximize information gain while minimizing the number of required experiments [28].
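The source does not describe how TuneLab constructs a design of experiments. As a generic stand-in, a Latin hypercube design is one common space-filling way to cover continuous variables with few runs: each variable's range is cut into as many equal strata as there are runs, and each stratum is used exactly once. The sketch below implements that technique, not Lilly's method; the parameter names and ranges are hypothetical.

```python
import random


def latin_hypercube(bounds, n_runs, seed=0):
    """Latin hypercube design: each variable's range is split into n_runs equal
    strata and every stratum is sampled exactly once for that variable."""
    rng = random.Random(seed)
    columns = {}
    for name, (low, high) in bounds.items():
        width = (high - low) / n_runs
        strata = list(range(n_runs))
        rng.shuffle(strata)  # random pairing of strata across variables
        columns[name] = [low + (s + rng.random()) * width for s in strata]
    return [{name: columns[name][i] for name in bounds} for i in range(n_runs)]


# Hypothetical continuous parameter ranges for an initial screen.
BOUNDS = {
    "temperature_C": (40.0, 100.0),
    "catalyst_mol_pct": (0.5, 5.0),
    "concentration_M": (0.05, 0.5),
}

design = latin_hypercube(BOUNDS, n_runs=8)
for run in design:
    print({name: round(value, 2) for name, value in run.items()})
```

Compared with a full factorial grid, this covers every range evenly with only eight runs, at the cost of not resolving individual interactions cleanly.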

Automated Execution Phase

- Reagent Preparation: Utilize the Strateos robotic platform for automated liquid handling, weighing, and dissolution of reaction components in the designated solvent systems [27].

- Reaction Assembly: Implement automated distribution of reaction mixtures into appropriate reaction vessels under controlled atmosphere conditions when necessary.

- Reaction Initiation and Monitoring: Start programmed temperature control and stirring across all reaction vessels simultaneously, with real-time monitoring of reaction progress where applicable.
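Automated reagent preparation usually means dosing each well from stock solutions. A minimal sketch of the volume arithmetic, assuming a hypothetical 10 µmol scale per well and the stock concentrations shown (neither comes from the source), relies on the identity 1 M = 1 µmol/µL:

```python
def dispense_volume_uL(scale_umol, equiv, stock_conc_M):
    """Stock volume (uL) that delivers scale_umol * equiv micromoles.
    Because 1 M = 1 mol/L = 1 umol/uL, volume in uL = umol / M."""
    if stock_conc_M <= 0:
        raise ValueError("stock concentration must be positive")
    return scale_umol * equiv / stock_conc_M


# Hypothetical 10 umol-per-well reaction; (equivalents, stock concentration in M).
SCALE_UMOL = 10.0
STOCKS = {
    "aryl halide": (1.0, 0.2),
    "boronic acid": (1.2, 0.2),
    "catalyst": (0.02, 0.01),
}

volumes = {name: dispense_volume_uL(SCALE_UMOL, eq, conc) for name, (eq, conc) in STOCKS.items()}
for name, vol in volumes.items():
    print(f"{name:12s} {vol:6.1f} uL")
```

Keeping the catalyst stock more dilute than the substrate stocks keeps its dispense volume well above the minimum volume a liquid handler can deliver reliably.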

Workup and Analysis Phase

- Automated Quenching and Workup: Robotic execution of standardized workup procedures including extraction, washing, and phase separation.

- Sample Purification: Integration with automated purification systems including flash chromatography, preparative HPLC, or crystallization platforms.

- Analytical Characterization: High-throughput analysis using integrated UPLC/MS, NMR, or other relevant analytical techniques with automated sample injection.
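Turning UPLC/MS peak areas into yields is commonly done with an internal standard (IS) calibrated against authentic product. The sketch below assumes that approach; the peak areas, amounts, and function names are hypothetical. The calculation is n_product = (A_product / A_IS) × n_IS / RRF, where RRF is the relative response factor measured on a calibration sample of known composition.

```python
def response_factor(area_product, area_is, mol_product, mol_is):
    """Relative response factor from a calibration sample of known composition."""
    return (area_product / area_is) / (mol_product / mol_is)


def assay_yield_pct(area_product, area_is, mol_is, rrf, mol_limiting):
    """Assay yield (%) for one well using the internal-standard method."""
    mol_product = (area_product / area_is) * mol_is / rrf
    return 100 * mol_product / mol_limiting


# Hypothetical calibration: 5 umol product + 5 umol IS gave areas 1200 and 800.
rrf = response_factor(1200, 800, 5.0, 5.0)
# Hypothetical well: 10 umol limiting reagent, 5 umol IS added before analysis.
y = assay_yield_pct(area_product=1500, area_is=900, mol_is=5.0, rrf=rrf, mol_limiting=10.0)
print(round(y, 1))  # 55.6
```

Any consistent amount unit works, since the units cancel in both ratios.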

Data Processing and Machine Learning Optimization

- Feature Extraction: Automated extraction of relevant reaction features including conversion, yield, selectivity, and impurity profiles from analytical data.

- Model Training: Application of machine learning algorithms (including those available through Lilly TuneLab) to identify complex relationships between reaction parameters and outcomes [26] [28].

- Predictive Optimization: Use trained models to predict optimal reaction conditions, including untested combinations; confirm experimentally any prediction that extrapolates beyond the initially tested parameter space.

- Iterative Refinement: Continuous improvement of models through federated learning approaches as additional data becomes available from ongoing experiments [28].
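The source does not specify the algorithms behind these steps. To make the train-predict-refine loop concrete, the sketch below uses a deliberately simple additive (main-effects) surrogate: each factor level is scored by the mean yield of the wells that used it, and untested combinations are ranked by grand mean plus level deviations. It ignores interactions; production workflows more commonly use models such as random forests or Gaussian-process Bayesian optimization. The factors, levels, and yields are hypothetical.

```python
from collections import defaultdict
from itertools import product
from statistics import mean


def main_effects(results):
    """Mean yield for every (factor, level) pair across the tested wells."""
    buckets = defaultdict(list)
    for conditions, yield_pct in results:
        for factor, level in conditions.items():
            buckets[(factor, level)].append(yield_pct)
    return {key: mean(values) for key, values in buckets.items()}


def suggest_next(results, levels):
    """Rank untested combinations by additive predicted yield, highest first."""
    effects = main_effects(results)
    grand = mean(y for _, y in results)
    tested = {tuple(sorted(c.items())) for c, _ in results}
    names = list(levels)
    ranked = []
    for combo in product(*(levels[n] for n in names)):
        cond = dict(zip(names, combo))
        if tuple(sorted(cond.items())) in tested:
            continue
        # Grand mean plus each level's deviation; untested levels contribute 0.
        pred = grand + sum(effects.get((f, lvl), grand) - grand for f, lvl in cond.items())
        ranked.append((pred, cond))
    return sorted(ranked, key=lambda item: item[0], reverse=True)


LEVELS = {"ligand": ["SPhos", "XPhos"], "base": ["K3PO4", "Cs2CO3"], "temp_C": [60, 80]}
# Hypothetical half-fraction of the 2 x 2 x 2 grid (each level tested twice).
RESULTS = [
    ({"ligand": "SPhos", "base": "K3PO4", "temp_C": 60}, 40.0),
    ({"ligand": "SPhos", "base": "Cs2CO3", "temp_C": 80}, 70.0),
    ({"ligand": "XPhos", "base": "K3PO4", "temp_C": 80}, 60.0),
    ({"ligand": "XPhos", "base": "Cs2CO3", "temp_C": 60}, 50.0),
]
ranking = suggest_next(RESULTS, LEVELS)
best_pred, best_cond = ranking[0]
print(best_cond, best_pred)
```

The top-ranked condition would be run next, its result appended to `RESULTS`, and the ranking recomputed, which is the iterative-refinement loop in miniature.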

The Scientist's Toolkit: Essential Research Reagent Solutions

Table 2: Key Research Reagents and Platform Components in Lilly's HTE Ecosystem

| Component Category | Specific Examples | Function in HTE | Implementation Notes |
|---|---|---|---|
| Automation Hardware | Strateos Robotic Platforms; Liquid handlers; Automated purifiers | Enables unattended execution of complex experimental workflows | Remote operation via web interface; 24/7 operational capability |
| Computational Infrastructure | NVIDIA Blackwell Ultra GPUs; High-speed networking | Powers AI/ML model training and complex simulation | 1,000+ GPUs; Enables training on millions of experiments |
| AI/ML Platforms | Lilly TuneLab; Custom optimization algorithms | Predicts optimal reaction conditions; Identifies complex parameter interactions | Federated learning preserves data privacy; Trained on $1B+ research data |
| Data Management Systems | Centralized data repositories; JUMP Cell Painting database | Stores and organizes experimental results for machine learning | Enables batch correction methods like Harmony and Seurat RPCA |
| Analytical Integration | UPLC/MS systems; Automated NMR; HPLC | Provides high-throughput characterization of reaction outcomes | Direct integration with robotic platforms for automated analysis |

Batch Correction and Data Integration Methods

The following diagram illustrates the data processing workflow for managing batch effects in high-throughput screening data, a critical consideration when integrating results across multiple experimental runs.

Implementation of Batch Correction Protocols

Eli Lilly's HTE platforms implement batch correction methods to address technical variation across experimental runs, laboratories, and equipment configurations. In a benchmarking study on the JUMP Cell Painting dataset, which pools data generated at twelve laboratories, Harmony and Seurat RPCA emerged as top-performing methods for reducing batch effects while preserving biological signal [30]. Such methods are particularly valuable when integrating data collected across a distributed research network such as Lilly's.
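Harmony and Seurat RPCA operate on multivariate embeddings and are too involved for a short example. The sketch below instead shows the simplest baseline they improve on: z-scoring each measurement within its own batch (plate or run) to remove per-batch location and scale shifts. It is not either of those methods. It assumes every batch contains a comparable mix of samples; otherwise it also erases genuine between-batch differences. The batch labels and values are hypothetical.

```python
from collections import defaultdict
from statistics import mean, pstdev


def standardize_by_batch(records):
    """Z-score each value within its own batch (e.g., plate or run).

    records: list of (batch_id, value); returns corrected values in input order.
    """
    by_batch = defaultdict(list)
    for batch, value in records:
        by_batch[batch].append(value)
    stats = {}
    for batch, values in by_batch.items():
        sd = pstdev(values)
        stats[batch] = (mean(values), sd if sd > 0 else 1.0)  # avoid dividing by zero
    return [(value - stats[batch][0]) / stats[batch][1] for batch, value in records]


# Hypothetical readouts: plate P2 carries a +15 offset relative to plate P1.
records = [("P1", 50.0), ("P1", 60.0), ("P1", 70.0),
           ("P2", 65.0), ("P2", 75.0), ("P2", 85.0)]
corrected = standardize_by_batch(records)
print([round(v, 3) for v in corrected])
```

After correction the two plates give identical profiles, which is the intended outcome here only because the offset was purely technical.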