Implementing AI-Driven Computer-Aided Synthesis Planning (CASP): A Strategic Guide for Accelerating Drug Discovery

Abstract

This article provides a comprehensive guide for researchers and drug development professionals on implementing Computer-Aided Synthesis Planning (CASP). It explores the foundational shift from rule-based systems to modern AI and machine learning models that are transforming synthetic chemistry. The content details practical methodologies for retrosynthesis and reaction condition prediction, addresses key challenges in data quality and platform integration, and validates CASP's impact through case studies and market growth data. By synthesizing insights from current literature and emerging trends, this guide aims to equip scientific teams with the knowledge to effectively integrate CASP, thereby shortening discovery timelines, reducing R&D costs, and fostering innovative synthesis strategies.

The CASP Revolution: From Manual Retrosynthesis to AI-Powered Planning

Defining CASP and its Core Role in the Design-Make-Test-Analyse (DMTA) Cycle

Computer-Aided Synthesis Planning (CASP) represents a transformative technological advancement in chemical and pharmaceutical research, leveraging artificial intelligence and computational power to streamline the design of synthetic routes for target molecules. CASP systems are specifically engineered to assist chemists in the decision-making process by suggesting synthetic pathways that optimize for critical parameters including yield, cost, and safety [1]. The core methodology employs retrosynthetic analysis, a logical framework formalized by E. J. Corey, which involves the recursive deconstruction of a target molecule into progressively simpler, commercially available precursors [2] [3].

The field has undergone significant evolution, transitioning from early rule-based expert systems reliant on limited, manually curated reaction sets to modern data-driven machine learning models capable of analyzing vast chemical reaction databases [2] [1]. This progression has substantially enhanced the scope and novelty of proposed synthetic routes. Modern CASP methodologies integrate both single-step retrosynthesis prediction and multi-step synthesis planning, utilizing search algorithms such as Monte Carlo Tree Search to chain individual steps into complete viable routes [2]. The integration of CASP into the drug discovery pipeline addresses a fundamental bottleneck, accelerating the transition from digital design to physical compound.
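The recursive logic described above (expand a target into precursors, then expand each precursor until everything is purchasable) can be sketched as a depth-limited AND-OR search. This is a minimal illustration, not any particular platform's algorithm. It uses plain depth-first search with backtracking rather than Monte Carlo Tree Search, and the `expand` table is a hypothetical stand-in for a single-step retrosynthesis model, keyed by exact SMILES strings.

```python
from typing import Dict, FrozenSet, List, Optional, Tuple

# Hypothetical single-step "model": product SMILES -> list of
# (precursor SMILES tuple, score). A real CASP tool would use reaction
# templates or a neural network here; this toy table only illustrates the search.
Expansion = Dict[str, List[Tuple[Tuple[str, ...], float]]]
Step = Tuple[str, Tuple[str, ...]]  # (product, precursors)


def find_route(target: str, expand: Expansion, stock: FrozenSet[str],
               max_depth: int = 5) -> Optional[List[Step]]:
    """Depth-first AND-OR search that reduces `target` to in-stock molecules.

    Returns the list of retrosynthetic steps, or None if no route exists
    within `max_depth` steps. The depth limit also stops cyclic expansions.
    """
    if target in stock:
        return []  # purchasable building block: nothing left to do
    if max_depth == 0:
        return None
    # OR node: try candidate disconnections, highest score first.
    for precursors, _score in sorted(expand.get(target, []), key=lambda c: -c[1]):
        steps: List[Step] = [(target, precursors)]
        # AND node: every precursor must itself be solvable.
        for precursor in precursors:
            sub_route = find_route(precursor, expand, stock, max_depth - 1)
            if sub_route is None:
                break  # this disconnection fails; backtrack to the next one
            steps.extend(sub_route)
        else:
            return steps
    return None


# Toy example: paracetamol via acetylation of 4-aminophenol, which in turn
# comes from reduction of 4-nitrophenol (reagents omitted for brevity).
expand: Expansion = {
    "CC(=O)Nc1ccc(O)cc1": [
        (("CC(=O)Cl", "Nc1ccc(O)cc1"), 0.9),       # acetyl chloride: not in stock
        (("CC(=O)OC(C)=O", "Nc1ccc(O)cc1"), 0.8),  # acetic anhydride
    ],
    "Nc1ccc(O)cc1": [(("O=[N+]([O-])c1ccc(O)cc1",), 0.95)],
}
stock = frozenset({"CC(=O)OC(C)=O", "O=[N+]([O-])c1ccc(O)cc1"})
route = find_route("CC(=O)Nc1ccc(O)cc1", expand, stock)
```

Here the higher-scored acetyl chloride disconnection fails because that reagent is neither in stock nor expandable, so the search backtracks to the acetic anhydride route.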

The Evolving DMTA Cycle in Drug Discovery

The Design-Make-Test-Analyse (DMTA) cycle forms the core iterative engine of modern drug discovery and development. This process relies on the rapid and reliable synthesis of compound series for biological evaluation, where each phase is deeply interconnected and dependent on the outputs of the others [4]. The efficiency of this cycle is paramount for the discovery and optimization of novel small-molecule drug candidates [2].

Within this framework, the "Make" phase has traditionally represented the most costly and time-consuming segment, often involving labor-intensive, multi-step synthetic procedures with numerous variables requiring optimization [2] [4]. Inefficiencies or failures in this process inevitably waste substantial resources and delay project timelines. Consequently, the acceleration of drug discovery projects hinges on a smooth and rapid flow of high-quality ideas through a fully integrated and effective DMTA cycle [4]. It is within this critical "Make" phase that CASP has emerged as a disruptive technology, offering a pathway to drastic reductions in cycle times and a significant improvement in overall success rates.

The Core Role of CASP in the "Make" Phase

CASP's primary role within the DMTA cycle is to de-bottleneck the "Make" process, which encompasses synthesis planning, sourcing of materials, reaction setup, monitoring, purification, and characterization [2]. By digitalizing and automating the planning component, CASP introduces unprecedented efficiency and strategic insight.

Strategic Route Scouting and Selection

A key application of CASP is in the strategic scouting and selection of synthetic routes. CASP tools enable a holistic planning approach, integrating sophisticated tools and knowledge to plan specific reaction conditions with a high probability of success from the outset [2]. This contrasts with historical approaches where an overarching plan was established using standard conditions, often necessitating lengthy optimization for each step. AI-powered platforms can generate diverse and innovative ideas for synthetic route design, proving particularly powerful for complex, multi-step routes for key intermediates or first-in-class target molecules [2]. This capability allows research teams to identify the most promising synthetic route strategically before any laboratory work begins.

Ensuring Synthesizability in Molecular Design

CASP is increasingly used to assess the synthesizability of proposed compounds during the "Design" phase, creating a tighter coupling between design and synthetic feasibility. This application ensures that the value of an elegant molecular design can be realized through practical synthesis [5]. CASP-based synthesizability scores can be integrated into de novo drug design workflows as an optimization objective, steering generation toward structures that are not only predicted to be active but also readily synthesizable [5]. This proactive assessment of synthetic accessibility is crucial for improving the overall effectiveness of the DMTA cycle, as it helps avoid designing molecules that are impractical or prohibitively expensive to produce.
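As an illustration of how a synthesizability score can act as one objective among several, the sketch below combines per-molecule scores with a weighted geometric mean, so a near-zero synthesizability score pulls a candidate down regardless of its predicted activity. The aggregation scheme, weights, and molecule names are assumptions for illustration; the cited work does not necessarily use this formula.

```python
import math
from typing import Dict


def aggregate_score(scores: Dict[str, float], weights: Dict[str, float]) -> float:
    """Weighted geometric mean of component scores, each clipped to [0, 1]."""
    total_weight = sum(weights.values())
    log_sum = 0.0
    for name, weight in weights.items():
        s = min(max(scores[name], 0.0), 1.0)
        if s == 0.0:
            return 0.0  # a zero in any objective vetoes the molecule
        log_sum += weight * math.log(s)
    return math.exp(log_sum / total_weight)


# Hypothetical outputs of a QSAR model ("activity") and an in-house
# synthesizability classifier ("synthesizability") for two generated molecules.
weights = {"activity": 1.0, "synthesizability": 1.0}
candidates = {
    "mol_A": {"activity": 0.9, "synthesizability": 0.1},
    "mol_B": {"activity": 0.7, "synthesizability": 0.8},
}
ranked = sorted(candidates, key=lambda m: aggregate_score(candidates[m], weights),
                reverse=True)
```

mol_A has the higher predicted activity but scores 0.3 overall, while mol_B scores about 0.75, so a generator optimizing this score would be steered toward mol_B.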

Integration with Laboratory Automation

A transformative aspect of modern CASP is its synergy with laboratory automation. The coupling of AI algorithms with robotic platforms creates a powerful combination where CASP automates the logic of synthesis, and robotic platforms automate the hands-on lab work [1]. This integration allows for the rapid, reproducible, and high-throughput synthesis of compounds based on computationally predicted routes [1]. Feedback loop mechanisms can further enhance this process, where an AI algorithm monitors the synthetic process and provides real-time feedback on reaction conditions, leading to continuous optimization [1]. This merger of digital and physical automation significantly accelerates the drug discovery process.
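The feedback loop can be pictured as propose, run, measure, update. The sketch below uses a simple stochastic hill climber and a made-up yield function standing in for the robotic platform plus analytics; both are assumptions for illustration, and practical platforms often use more sample-efficient strategies such as Bayesian optimization.

```python
import random
from typing import Callable, Dict, Tuple

Conditions = Dict[str, float]


def closed_loop_optimize(run_experiment: Callable[[Conditions], float],
                         start: Conditions,
                         bounds: Dict[str, Tuple[float, float]],
                         n_rounds: int = 50,
                         step_frac: float = 0.1,
                         seed: int = 0) -> Tuple[Conditions, float]:
    """Greedy closed loop: perturb the best conditions, keep any improvement."""
    rng = random.Random(seed)
    best, best_yield = dict(start), run_experiment(start)
    for _ in range(n_rounds):
        # Propose: random step for every parameter, clipped to its bounds.
        trial = {}
        for name, (lo, hi) in bounds.items():
            delta = rng.uniform(-step_frac, step_frac) * (hi - lo)
            trial[name] = min(max(best[name] + delta, lo), hi)
        # Run and measure (in practice: the robot executes, analytics report yield).
        measured = run_experiment(trial)
        # Feedback: adopt the new conditions only if the yield improved.
        if measured > best_yield:
            best, best_yield = trial, measured
    return best, best_yield


def toy_yield(c: Conditions) -> float:
    """Hypothetical response surface with its optimum at 80 degC and 1.5 equiv."""
    return max(0.0, 0.9 - ((c["temp_C"] - 80.0) / 60.0) ** 2
               - ((c["equiv"] - 1.5) / 1.5) ** 2)


start = {"temp_C": 50.0, "equiv": 1.0}
bounds = {"temp_C": (0.0, 120.0), "equiv": (0.5, 3.0)}
best, best_yield = closed_loop_optimize(toy_yield, start, bounds, n_rounds=200)
```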

Quantitative Performance of CASP in DMTA

The impact of CASP on the DMTA cycle is demonstrated by quantifiable improvements in efficiency and success rates. The following tables summarize key performance data from recent implementations.

Table 1: Impact of CASP on Synthesis Planning Efficiency

| Metric | Traditional Approach | CASP-Enhanced Approach | Improvement/Notes |
|---|---|---|---|
| Route Identification | Manual literature searches & intuition | AI-powered retrosynthetic analysis | Generates diverse, innovative routes in minutes [2] |
| Solvability Rate (General) | N/A | ~70% | With 17.4 million commercial building blocks [5] |
| Solvability Rate (In-House) | N/A | ~60% | With only ~6,000 in-house building blocks; only ~10 percentage points below the commercial-stock result [5] |
| Avg. Synthesis Route Length | N/A | Shorter routes | More building blocks enable shorter pathways [5] |

Table 2: Experimental Validation of CASP-Generated Molecules

| Study Focus | Methodology | Key Experimental Result |
|---|---|---|
| In-House De Novo Design | CASP-driven design of MGLL inhibitors; synthesis based on AI-suggested routes using in-house building blocks [5] | Successful identification of one candidate with evident biochemical activity, validating the workflow [5] |
| Synthesizability Score | Use of a retrainable in-house synthesizability score in a multi-objective de novo design workflow [5] | Generation of thousands of potentially active and easily in-house synthesizable molecules [5] |

Protocols for Implementing CASP in the DMTA Cycle

Protocol: Integrating CASP for In-House Synthesizability Assessment

This protocol outlines the procedure for generating and experimentally validating de novo molecules designed with in-house synthesizability as a core constraint, as demonstrated in recent research [5].

1. Reagent Solutions

Table 3: Essential Research Reagents and Tools for CASP Implementation

| Item/Tool | Function/Description | Example/Note |
|---|---|---|
| CASP Software | Core platform for retrosynthetic analysis and route planning. | AiZynthFinder [5], other commercial or proprietary platforms. |
| Building Block Inventory | Curated list of readily available chemical starting materials. | Can be a large commercial database (e.g., ZINC, ~17.4M BBs) or a limited in-house collection (e.g., ~6,000 BBs) [5]. |
| Synthesizability Scoring Model | A machine learning model trained to predict the likelihood of a successful synthesis. | Can be trained on CASP outcomes; requires ~10,000 molecules for effective retraining [5]. |
| De Novo Design Software | Generative AI platform for proposing novel molecular structures. | Used with synthesizability and activity (e.g., QSAR) as multi-objectives [5]. |
| Laboratory Automation | Robotic platforms for high-throughput, precise synthesis. | Executes CASP-suggested routes; improves reproducibility and efficiency [1]. |

2. Procedure

- Step 1: Define Building Block Set. Compile a structured inventory of all readily available building blocks within the organization. This list forms the foundational chemical space for all subsequent synthesis planning.

- Step 2: Configure and Validate CASP. Implement the chosen CASP tool (e.g., AiZynthFinder) and configure it to use the defined in-house building block set. Validate performance by running the tool against a benchmark set of known molecules.

- Step 3: Develop In-House Synthesizability Score.

  - Use the configured CASP to perform synthesis planning on a diverse dataset of molecules (e.g., ~10,000 molecules from sources like ChEMBL).

  - Label each molecule as "solved" or "not solved" based on CASP's ability to find a route using in-house building blocks.

  - Train a machine learning model (e.g., a graph neural network or a fingerprint-based classifier) on this dataset to predict the probability that a novel molecule is synthesizable in-house.

- Step 4: Multi-Objective De Novo Generation.

  - Integrate the trained synthesizability score with other relevant objective functions, such as a QSAR model for the biological target of interest.

  - Run the generative molecular design algorithm to produce a library of candidate molecules optimized for both activity and in-house synthesizability.

- Step 5: Candidate Selection and Route Execution.

  - Select top-ranking candidates from the generated library.

  - For each candidate, run a full CASP process to obtain a detailed, step-by-step synthetic route.

  - Execute the suggested synthesis in the laboratory, preferably using automated platforms where available.

- Step 6: Testing and Analysis.

  - Purify and characterize the synthesized compounds.

  - Submit the compounds for biological testing (e.g., potency, selectivity) to validate the design hypotheses.

  - Analyze the results and feed the data back into the DMTA cycle for the next iteration of design.
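Step 3 amounts to turning CASP outcomes into a supervised dataset. A minimal sketch, assuming each molecule has already been converted into a binary feature vector (in practice a fingerprint computed with a cheminformatics toolkit) and labelled 1 if the CASP run found an in-house route. It trains a plain logistic-regression classifier by gradient descent rather than a graph neural network, and the feature vectors and labels are invented for illustration.

```python
import math
from typing import List, Sequence, Tuple


def solvability_rate(labels: Sequence[int]) -> float:
    """Fraction of molecules for which CASP found an in-house route."""
    return sum(labels) / len(labels)


def _sigmoid(z: float) -> float:
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_logistic(X: List[List[float]], y: List[int], lr: float = 0.5,
                   epochs: int = 500) -> Tuple[List[float], float]:
    """Batch gradient descent on the mean log-loss."""
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * d, 0.0
        for xi, yi in zip(X, y):
            # Gradient of the log-loss w.r.t. the logit is (p - y).
            err = _sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) - yi
            for j in range(d):
                grad_w[j] += err * xi[j]
            grad_b += err
        w = [wj - lr * gj / n for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


def predict_proba(w: List[float], b: float, x: List[float]) -> float:
    """Predicted probability that a molecule is synthesizable in-house."""
    return _sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)


# Hypothetical 3-bit "fingerprints" and CASP labels (1 = route found in-house).
X = [[1, 0, 0], [1, 1, 0], [1, 0, 1], [0, 1, 1], [0, 0, 1], [0, 1, 0]]
y = [1, 1, 1, 0, 0, 0]
w, b = train_logistic(X, y)
```

The same `solvability_rate` calculation over a benchmark set gives the kind of figure reported in Table 1 (~70% versus ~60%).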

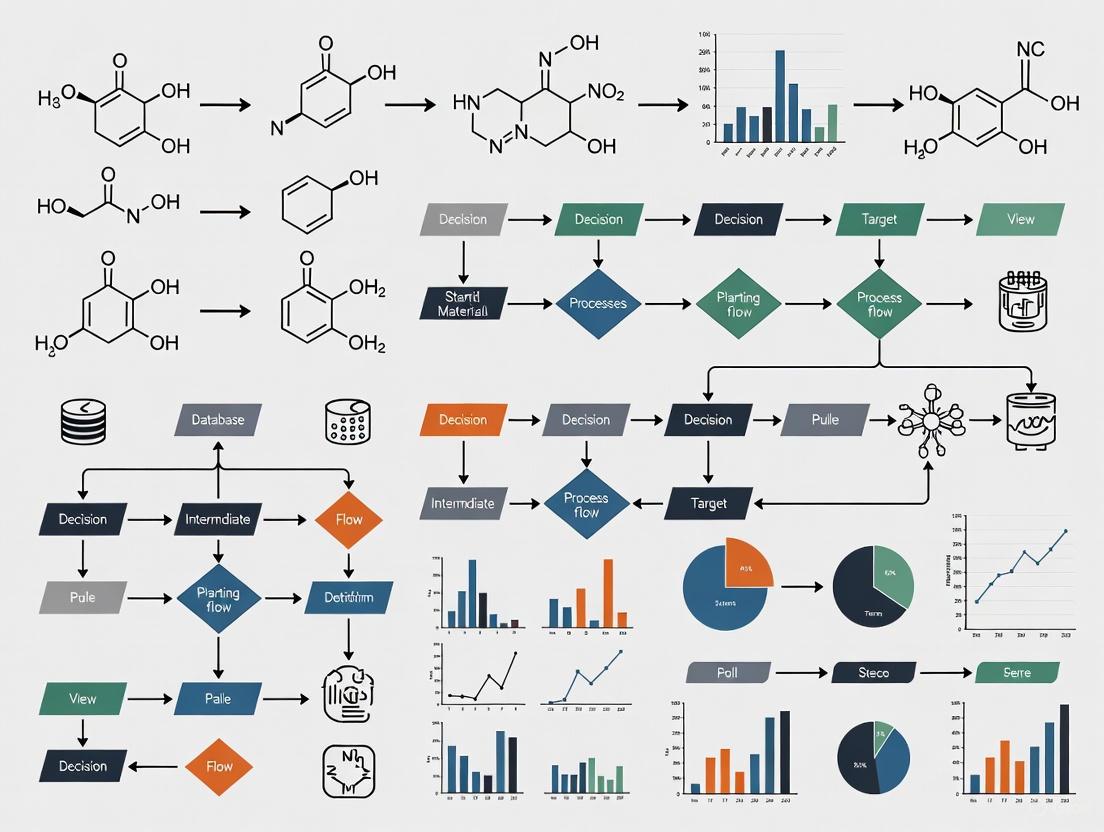

Workflow Diagram: CASP-Integrated DMTA Cycle

The following diagram visualizes the enhanced DMTA cycle, highlighting the integrated role of CASP and the feedback mechanisms that drive iterative learning.

Computer-Aided Synthesis Planning is no longer a speculative technology but a core component of a modern, efficient DMTA cycle in drug discovery. Its role in transforming the "Make" phase from a bottleneck into a strategic advantage is demonstrated by its ability to generate viable synthetic routes rapidly, assess synthesizability during molecular design, and integrate with laboratory automation. The experimental validation of CASP-designed molecules and synthesis routes underscores its practical utility. As CASP tools continue to evolve, fueled by larger and better-curated datasets adhering to FAIR principles, their predictive power and reliability are expected to increase, further accelerating the discovery of new therapeutics.

The Evolution from Expert Rule-Based Systems to Data-Driven Machine Learning

The field of Computer-Aided Synthesis Planning (CASP) is undergoing a fundamental transformation, driven by the evolution from traditional expert rule-based systems to modern data-driven machine learning approaches. This shift addresses a core challenge in pharmaceutical research: the immense time and cost of drug development. The traditional process often takes 10–15 years and can cost over $2 billion per approved drug, with extremely high attrition: only about one in 20,000–30,000 initially promising compounds reaches approval [6]. Rule-based expert systems, classified as symbolic AI, operate on manually curated "if-then" rules derived from chemical knowledge [7] [8]. In contrast, data-driven machine learning approaches are subsymbolic systems that learn synthesis patterns directly from large reaction datasets [7] [9]. This evolution represents a critical pathway toward more efficient and effective drug discovery, bridging human expertise with data-driven predictive power.

Comparative Analysis: System Architectures and Performance

Core Architectural Differences

Table 1: Fundamental Characteristics of Rule-Based and Machine Learning Systems in CASP

| Feature | Rule-Based Expert Systems | Data-Driven Machine Learning |
|---|---|---|
| Core Logic | Predefined "IF-THEN" rules from human experts [8] [10] | Patterns learned autonomously from large datasets [11] [9] |
| Knowledge Source | Manual curation by chemists/domain experts [7] [8] | Historical reaction data (e.g., USPTO, Reaxys) [7] [6] |
| Transparency | High; decisions are easily interpretable and traceable [8] [9] | Low; "black box" models are difficult to interpret [7] [9] |
| Adaptability | Low; requires manual updates to rules [11] [10] | High; improves as models are retrained on new data [11] [10] |
| Scalability | Poor; complex to maintain as rules grow [8] [9] | Excellent; handles complexity through model scaling [7] [9] |
| Data Dependency | Low; works with limited data using expert knowledge [9] | High; requires large, high-quality datasets [7] [12] |

Performance and Application Metrics

Table 2: Performance Comparison in Practical Applications

| Metric | Rule-Based Systems | Machine Learning Systems |
|---|---|---|
| Handling Novelty | Struggles with unknown chemical spaces [9] | Generates novel retrosynthetic pathways [7] [6] |
| Development Speed | Slow, knowledge-intensive setup [8] | Rapid hypothesis generation (e.g., novel drug candidate for idiopathic pulmonary fibrosis designed in 18 months [12]) |
| Handling Ambiguity | Rigid; struggles with incomplete information [8] [11] | Robust with probabilistic predictions [10] [6] |
| Best-Suited Tasks | Well-defined problems with clear rules (e.g., early expert systems like MYCIN [8]) | Complex, multi-variable prediction (e.g., molecular property prediction, reaction outcome forecasting [12] [13]) |

Experimental Protocols and Application Notes

Protocol 1: Implementing a Traditional Rule-Based Expert System for Retrosynthetic Analysis

Principle: This protocol uses manually encoded chemical transformation rules to break down a target molecule into simpler precursors [7] [8].

Procedure:

- Knowledge Base Construction: Curate a set of reaction rules derived from established chemical principles and expert knowledge (e.g., BNICE reaction rules). Each rule must define specific bond-making/breaking patterns and necessary functional group compatibility [7].

- Target Molecule Input: Represent the target molecule in a machine-readable format (e.g., SMILES, InChI) and load it into the system's working memory [8].

- Rule Matching: The inference engine scans the knowledge base to identify all rules whose conditions (IF) match substructures within the target molecule [8].

- Rule Application & Conflict Resolution: Apply matched rules to generate precursor molecules. If multiple rules fire, use a conflict resolution strategy (e.g., prioritization by rule specificity, recency, or expert-assigned confidence) [8].

- Iterative Decomposition: Recursively apply the Rule Matching and Rule Application steps to the generated precursors until readily available starting materials are identified [7].

- Pathway Scoring & Output: Rank the complete retrosynthetic pathways based on predefined criteria (e.g., rule confidence, synthetic step count, precursor complexity) and output the highest-scoring routes [7].
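Two of the steps above, conflict resolution and pathway scoring, reduce to small ranking functions. The sketch below assumes each fired rule carries a specificity (e.g., the number of atoms in its IF-pattern) and an expert-assigned confidence; the rule names, values, and the per-step penalty are hypothetical. Substructure matching itself would be delegated to a cheminformatics toolkit (for example, RDKit's `HasSubstructMatch`) and is omitted here, as is the precursor-complexity criterion.

```python
from dataclasses import dataclass
from math import prod
from typing import List


@dataclass(frozen=True)
class Rule:
    name: str
    specificity: int   # e.g. number of atoms in the rule's IF-pattern
    confidence: float  # expert-assigned confidence in (0, 1]


def resolve_conflicts(fired: List[Rule]) -> List[Rule]:
    """Order fired rules: most specific first, ties broken by confidence."""
    return sorted(fired, key=lambda r: (r.specificity, r.confidence), reverse=True)


def pathway_score(steps: List[Rule], step_penalty: float = 0.9) -> float:
    """Product of rule confidences, discounted by a penalty for each step."""
    return prod(r.confidence for r in steps) * step_penalty ** len(steps)


# Hypothetical rules that all fired on the same target.
amide = Rule("amide_disconnection", specificity=4, confidence=0.9)
ester = Rule("ester_disconnection", specificity=3, confidence=0.8)
acyl = Rule("generic_acylation", specificity=2, confidence=0.95)

order = [r.name for r in resolve_conflicts([acyl, amide, ester])]
routes = {"two_step": [amide, ester], "one_step": [acyl]}
best_route = max(routes, key=lambda k: pathway_score(routes[k]))
```

Specificity-first ordering mirrors the classic expert-system heuristic of preferring the most specific matching rule; the shorter, higher-confidence pathway wins the final ranking.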

Protocol 2: Data-Driven Retrosynthetic Planning with Transformer Models

Principle: This protocol employs a deep learning model (e.g., a Transformer) trained on extensive reaction datasets to predict likely retrosynthetic steps in a single-step or multi-step fashion [7] [6].

Procedure:

- Data Curation & Preprocessing: Assemble a large dataset of validated chemical reactions (e.g., from USPTO, Reaxys). Preprocess data by standardizing reaction mappings, converting SMILES to tokenized sequences, and splitting into training/validation/test sets [7] [14].

- Model Architecture Selection: Implement a Transformer-based encoder-decoder architecture. The encoder processes the target molecule representation, and the decoder generates the precursor SMILES string [7].

- Model Training: Train the model using a sequence-to-sequence objective with teacher forcing. Utilize an optimizer (e.g., Adam) and a loss function (e.g., cross-entropy) to minimize the difference between predicted and actual precursor sequences [7].

- Attention Analysis for Rule Inference (Optional): Extract attention weights from the trained model to identify which parts of the input molecule the model "focuses on" when making a prediction. These weights can be converted into implicit, data-driven reaction rules [7].

- Single-Step Prediction: Input the tokenized representation of the target molecule into the trained model. The model outputs a probability distribution over possible precursor sequences.

- Beam Search Decoding: Use beam search to generate the top-k most likely single-step retrosynthetic proposals, rather than just the single most likely one.

- Multi-Step Planning: Recursively apply the single-step model to the generated precursors, building a synthetic tree. Use a scoring function (e.g., based on model probability, estimated feasibility, or starting material cost) to guide the search and identify the most promising multi-step pathway [7] [6].
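Two mechanical pieces of this protocol, SMILES tokenization and beam-search decoding, can be sketched without a trained model. The regex below follows the atom-level tokenization pattern popularized by the Molecular Transformer work (treat it as an assumption and check it against your own data), and `toy_step` is a hypothetical stand-in for the decoder's next-token distribution.

```python
import heapq
import math
import re
from typing import Callable, Dict, List, Tuple

SMILES_REGEX = re.compile(
    r"(\[[^\]]+]|Br?|Cl?|N|O|S|P|F|I|b|c|n|o|s|p|\(|\)|\.|=|#|-|\+|\\|\/|:|~|@|\?|>|\*|\$|\%[0-9]{2}|[0-9])"
)


def tokenize(smiles: str) -> List[str]:
    """Split a SMILES string into atom-level tokens."""
    tokens = SMILES_REGEX.findall(smiles)
    if "".join(tokens) != smiles:
        raise ValueError(f"untokenizable characters in {smiles!r}")
    return tokens


Hypothesis = Tuple[Tuple[str, ...], float]  # (tokens, cumulative log-probability)


def beam_search(step_fn: Callable[[Tuple[str, ...]], Dict[str, float]],
                beam_width: int = 3, max_len: int = 50,
                eos: str = "</s>") -> List[Hypothesis]:
    """Keep the `beam_width` best partial sequences at every decoding step."""
    beams: List[Hypothesis] = [((), 0.0)]
    finished: List[Hypothesis] = []
    for _ in range(max_len):
        expanded = [
            (tokens + (tok,), logp + math.log(p))
            for tokens, logp in beams
            for tok, p in step_fn(tokens).items()
            if p > 0.0
        ]
        beams = []
        for hyp in heapq.nlargest(beam_width, expanded, key=lambda h: h[1]):
            # Completed sequences leave the beam; the rest keep growing.
            (finished if hyp[0][-1] == eos else beams).append(hyp)
        if not beams:
            break
    return sorted(finished, key=lambda h: h[1], reverse=True)[:beam_width]


def toy_step(prefix: Tuple[str, ...]) -> Dict[str, float]:
    """Hypothetical next-token distributions standing in for a trained decoder."""
    table = {
        (): {"C": 0.6, "O": 0.4},
        ("C",): {"C": 0.7, "</s>": 0.3},
        ("O",): {"</s>": 1.0},
    }
    return table.get(prefix, {"</s>": 1.0})


results = beam_search(toy_step, beam_width=2)
```

With `beam_width=2`, the hypothesis "C" followed by end-of-sequence (probability 0.18) is pruned, and the two returned sequences have probabilities 0.42 and 0.4. In a retrosynthesis model, each finished sequence would be joined back into a candidate precursor SMILES.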

Protocol 3: A Hybrid Approach for Enhanced CASP

Principle: This protocol bridges the gap between the two paradigms by using machine learning to infer generalized, human-understandable reaction rules from data, which are then deployed within a transparent, rule-based reasoning framework [7] [9].

Procedure:

- Data-Driven Rule Inference: Train a transformer model on a large corpus of atom-mapped reactions. For each reaction, convert the model's attention weights into a specific atom-mapping pattern, effectively creating a data-driven reaction rule [7].

- Rule Generalization & Consensus: Cluster the exhaustive set of inferred rules based on chemical similarity. From each cluster, derive a single, generalized consensus rule that captures the essential transformation while filtering out noise [7].

- Hybrid Knowledge Base Curation: Populate a new knowledge base with these machine-learned consensus rules. This base can be supplemented or validated with high-confidence rules from expert systems [7].

- Pathway Planning with Hybrid Inference: Execute retrosynthetic analysis using the standard rule-based protocol (Protocol 1), but utilizing the hybrid knowledge base. The system now operates with the transparency and control of a rule-based system but is powered by rules that are more comprehensive and data-driven [7].
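The rule generalization step can be illustrated with set-based rule signatures. In this sketch each inferred rule is reduced to a hypothetical set of bond-change descriptors, rules are grouped by simple leader clustering on Jaccard similarity, and the consensus rule keeps only descriptors present in a majority of a cluster's members. Real implementations would compare atom-mapped templates; the descriptors, threshold, and clustering method here are assumptions.

```python
from collections import Counter
from typing import FrozenSet, List

RuleSig = FrozenSet[str]  # hypothetical bond-change descriptors of one inferred rule


def jaccard(a: RuleSig, b: RuleSig) -> float:
    union = a | b
    return len(a & b) / len(union) if union else 1.0


def leader_cluster(rules: List[RuleSig], threshold: float = 0.5) -> List[List[RuleSig]]:
    """Assign each rule to the first cluster whose leader is similar enough."""
    clusters: List[List[RuleSig]] = []
    for rule in rules:
        for cluster in clusters:
            if jaccard(rule, cluster[0]) >= threshold:
                cluster.append(rule)
                break
        else:
            clusters.append([rule])  # no similar cluster: start a new one
    return clusters


def consensus(cluster: List[RuleSig], min_frac: float = 0.5) -> RuleSig:
    """Keep descriptors seen in more than `min_frac` of the cluster's rules."""
    counts = Counter(d for rule in cluster for d in rule)
    return frozenset(d for d, n in counts.items() if n > min_frac * len(cluster))


inferred = [
    frozenset({"C(=O)-N:form", "C-O:break", "N-H:break"}),
    frozenset({"C(=O)-N:form", "C-O:break", "N-H:break", "O-H:form"}),
    frozenset({"C(=O)-N:form", "C-O:break"}),
    frozenset({"C-C:form", "C-Br:break", "C-B:break"}),
    frozenset({"C-C:form", "C-Br:break", "C-B:break", "B-O:form"}),
]
clusters = leader_cluster(inferred)
consensus_rules = [consensus(c) for c in clusters]
```

The noisy extra descriptors (`O-H:form`, `B-O:form`) each appear in only one member of their cluster and are dropped from the consensus rules.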

The Scientist's Toolkit: Essential Research Reagents and Materials

Table 3: Key Resources for CASP Implementation Research

| Resource Category | Specific Examples & Functions |
|---|---|
| Chemical Reaction Datasets | USPTO: Contains patent-derived reactions for training ML models [6]. Reaxys: A comprehensive database of curated chemical reactions and substance data for validation [7]. |
| Rule-Based System Components | BNICE Reaction Rules: A manually curated set of biochemical transformation rules [7]. Inference Engine Software: Frameworks (e.g., CLIPS, Drools) that apply logical rules to facts [8]. |
| Machine Learning Frameworks | PyTorch/TensorFlow: Open-source libraries for building and training deep learning models like Transformers [12] [6]. Hugging Face Transformers: Provides pre-trained models and architectures for sequence-to-sequence tasks [7]. |
| Cheminformatics Libraries | RDKit: Open-source toolkit for cheminformatics used for molecule manipulation, descriptor calculation, and substructure matching [7] [6]. Open Babel: A chemical toolbox designed to speak the many languages of chemical data, crucial for file format conversion [6]. |
| Specialized CASP Platforms | IBM RXN for Chemistry: A cloud-based platform that uses AI models to predict chemical reaction outcomes and retrosynthetic paths [7]. Atomwise: Uses convolutional neural networks for molecular property prediction and virtual screening [12]. |

The field of computer-aided synthesis planning (CASP) is undergoing a paradigm shift, propelled from an academic niche to a central industrial strategy by artificial intelligence (AI). This transformation is driven by a multi-billion dollar market push, where the convergence of economic pressure, technological breakthroughs, and urgent global demands is accelerating the adoption of AI-driven tools for molecular design and synthesis. Within the broader context of CASP implementation research, understanding these drivers is essential for developing robust, scalable, and impactful experimental protocols that transition from theoretical models to laboratory-scale and industrial production.

The Economic Landscape: Quantifying the Market Surge

The integration of AI in chemicals, particularly for synthesis and discovery, represents one of the fastest-growing segments in the industry. The financial commitment underscores its perceived strategic value.

Table 1: Global AI in Chemicals Market Size & Forecast

| Metric | Value | Notes & Source |
|---|---|---|
| Market Size (2025) | USD 2.29 - 2.83 Billion | Slight variance between sources [15] [16]. |
| Projected Market Size (2034) | USD 28.00 - 28.74 Billion | Consistent high-growth projection [15] [16]. |
| Compound Annual Growth Rate (CAGR, 2025-2034) | 29.36% - 32.05% | Reflects aggressive expansion [15] [16]. |
| Largest Regional Market (2024) | North America (39.4% - 42.61% share) | Driven by advanced tech infrastructure and major chemical firms [15] [16]. |
| Fastest-Growing Region | Asia-Pacific | Due to established chemical industry and government digitalization policies [16]. |
| Dominant Application Segment | Production Optimization (~30% share) | AI for real-time process control and yield improvement [15] [16]. |
| Key End-Use Segment | Base Chemicals & Petrochemicals | AI accelerates product/process development and predictive maintenance [15]. |
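The growth rates in the table can be cross-checked from its endpoints with the standard compound-annual-growth-rate formula, CAGR = (end / start)^(1/years) - 1. Pairing the lower 2025 figure with the lower 2034 figure (and the higher with the higher) is an assumption, since the table does not say which values come from the same source.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values `years` apart."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


years = 2034 - 2025  # nine compounding periods
growth_a = cagr(2.29, 28.00, years)  # lower 2025 and 2034 estimates (USD bn)
growth_b = cagr(2.83, 28.74, years)  # higher 2025 and 2034 estimates (USD bn)
print(f"{growth_a:.1%}, {growth_b:.1%}")
```

This gives roughly 32.1% and 29.4%, in line with the quoted 29.36% to 32.05% range; the small residual differences are consistent with rounding of the published figures.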

Core Market Drivers Fueling AI Adoption in Synthesis

The market growth quantified in Table 1 is not serendipitous but is fueled by concrete, interconnected drivers that align directly with CASP research objectives.

Driver 1: Escalating Economic & Operational Pressures

The chemical and pharmaceutical industries face immense pressure to reduce costs and accelerate time-to-market. Traditional discovery and process development are time-consuming and expensive. AI addresses this directly:

- Accelerated R&D Timelines: AI can compress years of laboratory work into weeks or days by rapidly predicting viable synthetic routes and optimizing reactions, directly addressing the structural "slowness" of the chemical industry [17] [18]. For instance, machine learning strategies are reported to carry out R&D at a "higher and faster speed" by identifying appropriate molecules and generating correct formulas [15].

- Cost Reduction: AI optimizes resource use, minimizes waste (e.g., <5% ethylene glycol waste in PET production), and enables predictive maintenance to lower downtime [18]. These savings help offset the high cost of adopting new technology by delivering a clear return on investment [16].

Driver 2: Technological Convergence and Capability Leap

Advancements in algorithms, computing, and data availability have made complex CASP feasible.

- Generative AI and Advanced ML: Beyond analytical AI, generative AI can design novel molecules with desired properties and propose hybrid synthetic pathways (e.g., organic-enzymatic), acting as a "game-changer" for discovering greener alternatives [15] [17] [19].

- Democratization of Synthesis Planning: Open-source and commercial CASP platforms (e.g., AiZynthFinder, IBM RXN, ChemEnzyRetroPlanner) provide researchers with powerful, accessible tools, lowering the barrier to entry [19] [20].

- Integration with Robotics and Automation: AI-driven robotics automate tasks in chemical plants and labs, enabling 24/7 experimentation and creating self-optimizing feedback loops [15].

Driver 3: Demand for Sustainable and Innovative Products

Global sustainability mandates and the need for novel materials for energy, healthcare, and technology are powerful market shapers.

- Green Chemistry Mandate: AI is critical for designing environmentally benign processes, minimizing byproducts, and discovering biodegradable materials. Market analyses claim it can make organizations "approximately 63% more environmentally friendly" [15] [16].

- Materials for the Energy Transition: The search for new materials to support energy transition innovations is a key force pushing chemical companies toward AI [17].

- Precision Medicine and Drug Discovery: The sector's role in precision medicine demands tailored therapies. AI accelerates drug discovery by powering virtual screening, predicting drug-target interactions, and designing new drug modalities like antibody-drug conjugates (ADCs) and PROTACs [21] [18].

Application Notes & Experimental Protocols: Implementing AI-Driven Hybrid Synthesis

This protocol details the implementation of an AI-powered platform for planning hybrid organic-enzymatic syntheses, a cutting-edge application within CASP that addresses drivers of sustainability and efficiency.

Protocol Title: AI-Assisted Retrosynthetic Planning for Hybrid Organic-Enzymatic Routes

4.1 Objective: To utilize the ChemEnzyRetroPlanner platform for the fully automated design, evaluation, and in silico validation of hybrid synthesis routes for a target organic molecule or natural product [19].

4.2 Principle: The platform integrates a retrosynthetic planning algorithm (RetroRollout*) with enzyme recommendation systems and large language models (LLM) like Llama3.1. It employs a chain-of-thought strategy to autonomously decide when to incorporate biocatalytic steps, aiming for more sustainable and selective syntheses [19].

4.3 Research Reagent & Software Toolkit

Table 2: Essential Digital Tools & Data for AI-Driven Synthesis Planning

| Item | Function in Protocol | Source/Example |
|---|---|---|
| Target Molecule (SMILES) | The digital representation of the compound to be synthesized. Input for the planning algorithm. | User-defined. |
| CASP Platform (ChemEnzyRetroPlanner) | Core software executing hybrid retrosynthesis, condition prediction, and enzyme recommendation. | Open-source platform [19]. |
| Reaction Database | Provides known chemical transformations for the algorithm to exploit. | e.g., USPTO, Reaxys [19]. |
| Enzymatic Reaction Database | Provides known biocatalytic transformations for hybrid route planning. | e.g., Rhea, BRENDA [19]. |
| Large Language Model (LLM) | Enhances decision-making and strategy activation within the planning workflow. | e.g., Llama3.1 integrated within platform [19]. |
| Commercial CASP Software | Alternative or benchmarking tools for route comparison. | e.g., Synthia, IBM RXN, Spaya [20]. |
| Quantum Chemistry Data | For in silico validation of enzyme active site compatibility or molecular properties. | Used in predictive models [18]. |

4.4 Detailed Methodology

- Target Input & Parameter Setting:

  - Access the ChemEnzyRetroPlanner web interface or API [19].

  - Input the target molecular structure in SMILES or SDF format.

  - Set search parameters: maximum route depth, preference for enzymatic steps, allowed reaction types, and cost/green chemistry metrics.

- AI-Driven Retrosynthetic Analysis:

  - Initiate the RetroRollout* search algorithm. The system will decompose the target molecule recursively.

  - At each disconnection step, the AI evaluates both traditional organic transformations and potential enzymatic steps from its databases.

  - The LLM component guides the "chain-of-thought," deciding on strategy (e.g., "use an enzymatic step for this stereocenter resolution").

- Route Evaluation & Scoring:

  - The platform generates multiple candidate routes presented in a tree graph.

  - Each route is scored based on predefined criteria: estimated overall yield, number of steps, cost of starting materials, environmental impact (E-factor), and the plausibility of predicted reaction conditions.

- Enzyme Recommendation & In Silico Validation:

  - For routes containing enzymatic steps, the platform recommends specific enzymes or enzyme classes (e.g., ketoreductase for asymmetric reduction).

  - Advanced modules may perform in silico docking or active site modeling to validate the feasibility of the substrate-enzyme interaction [19].

- Experimental Translation & Validation:

  - Select the top-ranked 1-2 synthetic routes for laboratory validation.

  - Note: This protocol covers planning. Wet-lab execution requires standard organic and biochemical synthesis techniques, utilizing the predicted conditions (solvent, temperature, catalyst) as a starting point.
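The scoring criteria in the route-evaluation step can be made concrete with two standard quantities: overall yield, which is the product of the step yields, and the E-factor, which is the mass of waste per mass of product. How ChemEnzyRetroPlanner actually weights its criteria is not described here, so the composite score, its weights, and the route data below are hypothetical.

```python
from dataclasses import dataclass
from math import prod
from typing import List


@dataclass
class CandidateRoute:
    name: str
    step_yields: List[float]  # predicted yield of each step, 0-1
    material_cost: float      # starting-material cost per batch (hypothetical units)
    input_mass_kg: float      # total mass of all materials entering the route
    product_mass_kg: float


def overall_yield(route: CandidateRoute) -> float:
    return prod(route.step_yields)


def e_factor(route: CandidateRoute) -> float:
    """kg of waste per kg of product; here everything that is not product is waste."""
    return (route.input_mass_kg - route.product_mass_kg) / route.product_mass_kg


def route_score(route: CandidateRoute, w_steps: float = 0.05,
                w_e: float = 0.01, w_cost: float = 0.001) -> float:
    """Hypothetical composite: reward yield, penalize steps, waste and cost."""
    return (overall_yield(route)
            - w_steps * len(route.step_yields)
            - w_e * e_factor(route)
            - w_cost * route.material_cost)


chemical = CandidateRoute("all-chemical", [0.90, 0.80, 0.85], 200.0, 50.0, 5.0)
hybrid = CandidateRoute("hybrid (enzymatic step 2)", [0.95, 0.90], 300.0, 30.0, 5.0)
ranking = sorted([chemical, hybrid], key=route_score, reverse=True)
```

In this toy comparison the hybrid route wins despite its higher material cost, because its shorter sequence raises overall yield (0.855 versus 0.612) and lowers the E-factor (5 versus 9).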

4.5 Data Analysis:

- Compare AI-proposed routes against literature-known syntheses for the same target in terms of step count and predicted green metrics.

- Evaluate the success rate of AI-predicted reaction conditions in initial laboratory attempts.

Visualizing the AI-Driven Synthesis Workflow

Diagram 1: Value Creation Path of AI in Chemical Synthesis

Diagram 2: Experimental Workflow for AI-Planned Hybrid Synthesis

The multi-billion dollar push for AI in chemical synthesis is a direct response to a triad of powerful market drivers: the unrelenting need for speed and cost-efficiency, the transformative potential of new technologies, and the global imperative for sustainable innovation. For the CASP researcher, this translates into a mandate to develop not just theoretically sound algorithms, but robust, experimentally validated protocols that integrate hybrid synthesis strategies, leverage open-source platforms, and ultimately deliver the tangible efficiencies and breakthroughs the market demands. The future of chemical synthesis is a collaborative one, between human expertise and AI-powered planning, driving toward a more innovative, efficient, and sustainable industry.

Computer-Aided Synthesis Planning (CASP) represents a transformative advancement in chemical research, leveraging artificial intelligence (AI) to redesign how chemists plan and execute molecular synthesis. By integrating machine learning (ML), deep learning (DL), and predictive analytics, CASP systems enable scientists to design and optimize synthetic pathways with unprecedented speed and accuracy, moving beyond traditional reliance on manual expertise and trial-and-error experimentation [22]. The core CASP workflow is embedded within the iterative Design-Make-Test-Analyse (DMTA) cycle in drug discovery, where the "Make" phase—encompassing synthesis planning, sourcing, reaction setup, monitoring, purification, and characterization—has traditionally been a significant bottleneck [2]. This document details the protocols and application notes for the three fundamental components of a CASP workflow: AI-driven synthesis planning, streamlined sourcing of starting materials, and the execution of automated synthesis, providing a framework for its implementation in research and development.

Synthesis Planning: AI-Driven Retrosynthetic Analysis

Synthesis planning is the foundational step in the CASP workflow, involving the deconstruction of a target molecule into simpler, commercially available precursors via retrosynthetic analysis. Modern CASP tools have evolved from early rule-based systems to data-driven machine learning models that propose viable multi-step synthetic routes [2].

Protocol for AI-Powered Retrosynthesis Planning

Objective: To generate a feasible multi-step synthetic route for a target molecule using a state-of-the-art AI model.

Materials: Target molecule (SMILES or structure file), access to a CASP platform (e.g., RSGPT, ChemPlanner, RetroExplainer), standard computing hardware.

Methodology:

- Input Preparation: Represent the target molecule in a standardized format, such as a SMILES string or a molecular structure file (e.g., .mol or .sdf).

- Model Application: Input the target structure into the CASP platform. Advanced models such as RSGPT use a transformer architecture pre-trained on billions of generated reaction data points [23].

- Route Generation: The single-step model proposes candidate disconnections, and a search algorithm (e.g., Monte Carlo Tree Search) expands them recursively into a set of potential multi-step synthetic routes [2].

- Route Evaluation and Ranking: The proposed routes are ranked based on feasibility, step count, predicted yield, and cost. The model may employ Reinforcement Learning from AI Feedback (RLAIF) to validate the rationality of generated reactants and templates [23].

- Output: The final output is a list of potential multi-step synthesis plans, with the top-ranked route selected for further evaluation.
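The route-generation and ranking steps can be illustrated with a toy search. The sketch below assumes a hypothetical single-step model, represented as a lookup table `ONE_STEP` mapping each molecule to scored precursor sets, and a hypothetical stock set `PURCHASABLE`; molecule names are placeholders rather than SMILES. It runs an exhaustive depth-limited AND-OR search (every precursor of a disconnection must itself be solved), a simplified stand-in for the Monte Carlo Tree Search used by real planners, and ranks complete routes by step count and then by the product of step scores.

```python
from typing import Dict, List, Tuple

# Hypothetical single-step model output: product -> [(precursors, score)].
# In a real CASP system these would come from a trained retrosynthesis model.
ONE_STEP: Dict[str, List[Tuple[Tuple[str, ...], float]]] = {
    "T": [(("A", "B"), 0.9), (("C",), 0.6), (("G", "B"), 0.5)],
    "A": [(("D", "E"), 0.8)],
    "C": [(("F",), 0.7)],
}
# Hypothetical stock of commercially available building blocks.
PURCHASABLE = {"B", "D", "E", "F", "G"}

Route = Tuple[int, float, Tuple[str, ...]]  # (steps, score, reactions)


def find_routes(mol: str, max_depth: int) -> List[Route]:
    """Return every route that reduces `mol` to purchasable materials."""
    if mol in PURCHASABLE:
        return [(0, 1.0, ())]  # already a building block: no steps needed
    if max_depth == 0:
        return []  # depth budget exhausted without reaching stock
    routes: List[Route] = []
    for precursors, score in ONE_STEP.get(mol, []):
        step = ".".join(precursors) + ">>" + mol
        partial: List[Route] = [(1, score, (step,))]
        # AND node: every precursor must be solved; combine their sub-routes.
        for pre in precursors:
            sub = find_routes(pre, max_depth - 1)
            partial = [
                (n1 + n2, s1 * s2, r1 + r2)
                for n1, s1, r1 in partial
                for n2, s2, r2 in sub
            ]
        routes.extend(partial)
    return routes


def rank_routes(routes: List[Route]) -> List[Route]:
    """Fewer steps first; ties broken by higher cumulative score."""
    return sorted(routes, key=lambda r: (r[0], -r[1]))


if __name__ == "__main__":
    for steps, score, reactions in rank_routes(find_routes("T", max_depth=3)):
        print(steps, round(score, 2), reactions)
```

Here the one-step route `G.B>>T` ranks first despite its lower score, because step count is used as the primary criterion; a production system would typically blend step count, predicted yield, and cost into a single objective.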

Table 1: Performance Metrics of AI Retrosynthesis Models on Benchmark Datasets

| Model Name | Model Type | USPTO-50k Top-1 Accuracy | Key Feature |
|---|---|---|---|
| RSGPT [23] | Template-free Transformer | 63.4% | Pre-trained on 10 billion synthetic data points; uses RLAIF |
| RetroComposer [23] | Template-based | ~55% (est.) | Composes templates from basic building blocks |
| SemiRetro [23] | Semi-template-based | ~55% (est.) | Predicts reactants via synthons and intermediates |
| Graph2Edits [23] | Semi-template-based | ~55% (est.) | End-to-end model integrating two-stage procedures |
| NAG2G [23] | Template-free | ~55% (est.) | Combines 2D molecular graphs and 3D conformations |

Sourcing: Management of Building Blocks and Starting Materials

The efficiency of compound synthesis is critically dependent on rapid access to a diverse array of building blocks (BBs). A sophisticated Chemical Inventory Management System is vital for real-time tracking, secure storage, and regulatory compliance [2].

Protocol for Strategic Sourcing of Building Blocks

Objective: To identify and procure the required building blocks for a planned synthesis route efficiently.

Materials: List of required building blocks from the synthesis plan, access to an in-house sourcing interface or vendor catalogues (e.g., Enamine, eMolecules, Sigma-Aldrich).

Methodology:

- Inventory Check: Query the internal Chemical Inventory Management System using the compound list. The system should provide metadata such as stock quantity, location, and supplier information [2].

- Vendor Search: For building blocks not in stock, use an integrated sourcing interface that searches updated punch-out catalogues from major global BB providers. This allows for structure-based filtering and comparison of lead times and pricing [2].

- Virtual Catalogue Exploration: To access a broader chemical space, search virtual catalogues like the Enamine MADE (MAke-on-DEmand) collection. These contain billions of synthesizable compounds not held in physical stock but available for synthesis upon request with a high success rate, typically delivered within weeks [2].

- Procurement: For physically available stock, select vendors offering pre-weighed BB support to minimize in-house labor and errors. For virtual compounds, place a custom synthesis order directly through the platform interface.
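The tiered lookup described in these steps (internal stock first, then physical vendor stock, then make-on-demand) can be sketched as follows. The `Offer` record, the building-block IDs, the supplier names, and the "shortest lead time, then lowest price" rule are all hypothetical illustrations, not the schema of any real inventory system or vendor API.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class Offer:
    source: str          # "in-house", "vendor" or "make-on-demand"
    supplier: str
    lead_time_days: int
    price_usd: float


def choose_offer(
    bb_id: str,
    inventory: Dict[str, float],             # bb_id -> grams currently in stock
    vendor_offers: Dict[str, List[Offer]],   # physical catalogue offers
    mod_offers: Dict[str, List[Offer]],      # make-on-demand (virtual) offers
    required_g: float = 0.1,
) -> Optional[Offer]:
    """Tiered lookup: in-house stock, then vendor stock, then make-on-demand."""
    if inventory.get(bb_id, 0.0) >= required_g:
        return Offer("in-house", "internal", 0, 0.0)
    for tier in (vendor_offers, mod_offers):
        offers = tier.get(bb_id, [])
        if offers:
            # Prefer the shortest lead time, then the lowest price.
            return min(offers, key=lambda o: (o.lead_time_days, o.price_usd))
    return None  # not sourceable: flag the route for re-planning
```

Returning `None` rather than raising keeps the function usable inside a planning loop, where an unsourceable building block is a signal to re-rank or re-plan the route.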

Table 2: Key Sourcing Platforms and Reagent Solutions for CASP Workflows

| Resource Name | Type | Function & Application |
|---|---|---|
| Enamine MADE [2] | Virtual Building Block Catalogue | Provides access to over a billion synthesizable compounds on-demand, vastly expanding accessible chemical space. |
| In-House Sourcing Interface [2] | Inventory Management Tool | Aggregates catalogues from multiple vendors (e.g., Enamine, eMolecules, Chemspace) with metadata and structure-based search. |
| Chemical Inventory Management System [2] | Internal Database | Tracks real-time availability, storage conditions, and regulatory status (e.g., narcotics) of internal chemical stocks. |
| Pre-weighed BB Support [2] | Vendor Service | Reduces overhead by providing cherry-picked, pre-weighed building blocks, eliminating in-house dissolution and reformatting. |

Execution: From Digital Plan to Chemical Reality

The execution phase translates the in-silico synthesis plan into a physical compound. This involves reaction setup, monitoring, work-up, purification, and characterization, with automation and data capture being critical for efficiency and continuous model improvement [2].

Protocol for Automated Reaction Execution and Analysis

Objective: To execute the planned synthetic route using automated laboratory equipment and document the outcomes using FAIR (Findable, Accessible, Interoperable, Reusable) data principles.

Materials: Planned synthesis route, sourced starting materials, automated synthesis equipment (e.g., robotic liquid handlers, reactor stations), analytical instruments (e.g., UPLC/HPLC, MS, NMR).

Methodology:

- Reaction Setup: Utilize automated liquid handlers and reactor stations for precise and reproducible reaction assembly. This minimizes human error and variability, especially in High-Throughput Experimentation (HTE) campaigns [2].

- Reaction Monitoring: Employ in-line or at-line analytical techniques (e.g., UPLC/HPLC) to track reaction progress in real time. This data can be fed back to the CASP system for dynamic adjustment or future model refinement [2].

- Work-up and Purification: Leverage automated purification systems, such as flash chromatography or preparative HPLC, to isolate the desired compound.

- Characterization: Analyze the purified compound using standard techniques (e.g., MS, NMR) to confirm identity and purity.

- Documentation: Systematically record all experimental parameters and outcomes (both successes and failures) in an electronic lab notebook (ELN) following FAIR data principles. This structured data is crucial for retraining and improving the predictive accuracy of CASP models [2].
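Structured capture is what makes both successes and failures reusable for retraining. The following is a minimal sketch, assuming a hypothetical `ReactionRecord` schema serialized to JSON; the field names and the content-hash identifier are illustrative choices, not a published ELN standard or any vendor's API.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field
from typing import Dict, List, Optional


@dataclass
class ReactionRecord:
    reaction_smiles: str                 # reactants>>product
    conditions: Dict[str, object]        # solvent, temperature, time, ...
    outcome: str                         # "success" or "failure"
    isolated_yield_pct: Optional[float]  # None when nothing was isolated
    analytics: List[str] = field(default_factory=list)  # e.g. LC-MS file references

    def to_json(self) -> str:
        """Serialize with a content-derived identifier (hypothetical scheme)."""
        body = asdict(self)
        canonical = json.dumps(body, sort_keys=True)
        # Same content always yields the same ID, which aids findability and de-duplication.
        body["record_id"] = hashlib.sha256(canonical.encode()).hexdigest()[:16]
        return json.dumps(body, sort_keys=True, indent=2)
```

A failed amide coupling, for example, is stored with `outcome="failure"` and `isolated_yield_pct=None` rather than being omitted, so negative examples remain available to the model.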

Integrated CASP Workflow Visualization

The following diagram illustrates the logical flow and interdependencies of the core components within a Computer-Aided Synthesis Planning workflow, from target input to compound output.

The implementation of a robust CASP workflow, integrating advanced AI synthesis planning, strategic sourcing from physical and virtual inventories, and automated execution, is pivotal for accelerating discovery in pharmaceuticals and materials science. Adherence to the detailed protocols for each component ensures efficiency, reproducibility, and continuous improvement through data-driven learning. As CASP technologies mature, their integration with laboratory automation and data management systems will further solidify their role as a cornerstone of next-generation chemical research.

AI in Action: Core Algorithms and Practical Workflows for Retrosynthesis

Retrosynthetic analysis, formalized by Corey, is a cornerstone strategy in organic synthesis that involves systematically deconstructing a target molecule into progressively simpler precursors to identify feasible synthetic routes [24]. This process is particularly crucial in competitive fields such as pharmaceutical development and materials science, where it accelerates innovation by streamlining the synthesis of complex natural products and novel compounds [24] [25]. Computer-Aided Synthesis Planning (CASP) has emerged as a transformative approach to retrosynthesis, leveraging artificial intelligence to navigate the vast complexity of chemical space and overcome limitations of human expertise [26] [27].

The evolution of CASP has progressed from early rule-based systems relying on manually encoded expert knowledge to modern data-driven approaches powered by deep learning [26] [25]. This shift has given rise to three dominant computational paradigms for retrosynthesis prediction: template-based, semi-template-based, and template-free methods [28] [29]. More recently, a novel fourth category termed template-generative models has emerged, further expanding the capabilities of automated retrosynthesis planning [26] [30]. Each paradigm offers distinct advantages and limitations in terms of prediction accuracy, interpretability, generalization capability, and computational requirements, making them suitable for different applications and contexts within drug development and materials science.

Template-Based Methods

Template-based approaches formulate retrosynthesis prediction as a template retrieval and ranking problem [28] [29]. These methods rely on pre-defined reaction templates—encoded patterns representing chemical transformations—which are matched against target molecules to identify applicable reactions [25]. The matched templates are then ranked based on various criteria to select the most promising transformations.

Key Characteristics:

- Interpretability: Template-based methods provide clear chemical rationale for predictions by explicitly identifying the reaction type being applied [24] [27].

- Validity Guarantees: Reactants generated through template application are typically chemically valid because the predefined structural rules constrain the transformation [26].

- Coverage Limitations: Performance is constrained by the completeness of the template database, potentially missing novel or uncommon transformations [28] [29].

- Scalability Challenges: Template databases can become computationally expensive to maintain and search as they grow [24].

Notable implementations include LocalRetro, which evaluates local atom/bond templates at predicted reaction centers while incorporating non-local effects through global reactivity attention [29], and GLN (Graph Logic Network), which employs a conditional graphical model to learn rules for applying reaction templates [24].

Semi-Template-Based Methods

Semi-template approaches strike a balance between template-based and template-free methods by dividing retrosynthesis into two sequential stages: reaction center identification and synthon completion [28] [25]. These methods first identify potential reaction centers in the target molecule, break bonds at these locations to generate synthons (reactive intermediates), then complete these synthons into valid reactants.

Key Characteristics:

- Chemical Intuition: The two-stage process aligns well with chemical reasoning, first locating where reactions occur, then determining how to complete the molecules [28].

- Reduced Template Dependency: While some implementations use templates for synthon completion, others employ generative approaches, reducing reliance on comprehensive template libraries [29].

- Error Propagation: Incorrect reaction center identification directly impacts the subsequent completion stage, potentially leading to invalid predictions [28].

- Structural Awareness: These methods explicitly consider molecular graph topology, making them particularly effective for reactions with significant structural changes [31].

Representative examples include RetroXpert, which uses an edge-enhanced graph attention network to identify reaction centers before generating reactants [29], and G2Gs, which employs a variational graph translation framework to complete synthons into reactant graphs [29].
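The first stage of this two-stage scheme (choose a reaction center, break it, and read off the synthons) can be sketched on a plain molecular graph. The bond scores below are hypothetical stand-ins for the output of a reaction-center predictor such as RetroXpert's graph attention network; atoms are indexed by hand for N-methylacetamide, CC(=O)NC. The second stage, synthon completion (for example, capping the acyl synthon with OH to give acetic acid), is left to a learned model and not shown.

```python
from collections import deque
from typing import Dict, List, Set, Tuple

Bond = Tuple[int, int]


def components(n_atoms: int, bonds: List[Bond]) -> List[Set[int]]:
    """Connected components of a molecular graph via breadth-first search."""
    adj: Dict[int, List[int]] = {i: [] for i in range(n_atoms)}
    for a, b in bonds:
        adj[a].append(b)
        adj[b].append(a)
    seen: Set[int] = set()
    parts: List[Set[int]] = []
    for start in range(n_atoms):
        if start in seen:
            continue
        part: Set[int] = set()
        queue = deque([start])
        seen.add(start)
        while queue:
            atom = queue.popleft()
            part.add(atom)
            for nb in adj[atom]:
                if nb not in seen:
                    seen.add(nb)
                    queue.append(nb)
        parts.append(part)
    return parts


def disconnect(n_atoms: int, bonds: List[Bond], bond_scores: Dict[Bond, float]):
    """Stage 1: take the highest-scoring bond as the reaction center and break it."""
    center = max(bonds, key=lambda b: bond_scores[b])
    remaining = [b for b in bonds if b != center]
    return center, components(n_atoms, remaining)


# Atoms of CC(=O)NC: 0 C, 1 C(=O), 2 O, 3 N, 4 C; scores are hypothetical.
BONDS = [(0, 1), (1, 2), (1, 3), (3, 4)]
SCORES = {(0, 1): 0.05, (1, 2): 0.02, (1, 3): 0.91, (3, 4): 0.10}
```

With these scores, `disconnect(5, BONDS, SCORES)` selects the amide C-N bond (1, 3) and yields an acyl synthon {0, 1, 2} and an amine synthon {3, 4}. An error at this step propagates directly into the completion stage, which is the error-propagation risk noted above.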

Template-Free Methods

Template-free methods approach retrosynthesis as a sequence-to-sequence translation problem, directly generating reactant SMILES strings from product SMILES without explicit reaction rules or templates [32] [29]. These methods typically employ advanced neural architectures such as Transformers to learn transformation patterns directly from data.

Key Characteristics:

- Generalization Capability: By learning implicit transformation patterns, template-free methods can potentially predict novel reactions not covered by existing templates [28] [32].

- End-to-End Learning: The direct mapping from products to reactants simplifies the prediction pipeline into a single model [29].

- Validity Challenges: Generated SMILES strings may sometimes violate chemical validity rules, requiring additional correction mechanisms [28] [29].

- Interpretability Limitations: The "black box" nature of deep learning models can make it difficult to understand the chemical rationale behind predictions [24].

Notable implementations include the Augmented Transformer, which employs SMILES augmentation to enhance model robustness [31], and EditRetro, which reframes retrosynthesis as a molecular string editing task using iterative refinement operations [29]. Recent advancements have incorporated 3D conformational information, such as the conformer-enhanced transformer that uses Atom-align Fusion and Distance-weighted Attention mechanisms to better capture spatial relationships [31].
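Template-free models consume SMILES as token sequences, so tokenization is the first concrete preprocessing step. The sketch below uses the regular expression popularized by Molecular Transformer-style pipelines, which keeps bracket atoms such as [NH4+] and two-letter halogens as single tokens. The round-trip check is an added safeguard, not part of any specific model's published code.

```python
import re
from typing import List

# SMILES tokenization regex widely used for sequence-to-sequence reaction models.
SMILES_TOKEN_RE = re.compile(
    r"(\[[^\]]+]|Br?|Cl?|N|O|S|P|F|I|b|c|n|o|s|p|\(|\)|\.|=|#|-|\+|\\|\/|:|~|@|\?|>|\*|\$|\%[0-9]{2}|[0-9])"
)


def tokenize_smiles(smiles: str) -> List[str]:
    """Split a (reaction) SMILES string into model tokens."""
    tokens = SMILES_TOKEN_RE.findall(smiles)
    # Guard against characters the regex silently skips.
    if "".join(tokens) != smiles:
        raise ValueError(f"untokenizable characters in {smiles!r}")
    return tokens
```

For example, `tokenize_smiles("ClCBr")` gives `["Cl", "C", "Br"]`. Augmented SMILES (different atom orderings of the same molecule) pass through the same function and yield different token sequences for one target.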

Emerging Approach: Template-Generative Models

Template-generative models represent a novel paradigm that combines the interpretability of template-based methods with the flexibility of template-free approaches [26] [30]. Rather than retrieving templates from a fixed database, these models generate novel reaction templates conditioned on specific target molecules and optionally user-specified reaction sites.

Key Characteristics:

- Novelty: The ability to generate previously unrecorded templates expands the accessible chemical reaction space beyond known transformations [26].

- Controllability: User specification of reaction sites enables human-guided synthesis planning, combining computational power with chemical expertise [26] [30].

- Validity Assurance: Generated templates produce grammatically coherent reactants through precise atom mapping and functional group matching [26].

- Similarity Assessment: Some implementations employ latent space representations to measure similarity between generated and known reactions, providing chemical viability insights [26] [30].

The Site-Specific Template (SST) approach exemplifies this paradigm, generating concise templates focused specifically on reaction centers without broader structural context, and employing center-labeled products (CLP) to avoid application ambiguity [26].

Quantitative Performance Comparison

Table 1: Comparative Performance of Retrosynthesis Paradigms on Benchmark Datasets

| Method | Paradigm | Top-1 Accuracy (%) | Top-5 Accuracy (%) | Validity (%) | Dataset |
|---|---|---|---|---|---|
| RetroDFM-R [24] | Template-Free (LLM) | 65.0 | - | - | USPTO-50K |
| EditRetro [29] | Template-Free | 60.8 | - | - | USPTO-50K |
| Conformer-enhanced [31] | Template-Free | 55.5 (67.2 with reaction class) | - | - | USPTO-50K |
| Site-Specific Template [26] | Template-Generative | ~58* | ~78* | - | USPTO-FULL |
| LocalRetro [29] | Template-Based | - | - | - | - |
| G2Gs [29] | Semi-Template | - | - | - | - |

Note: *Approximate values read from performance graphs in the source material [26]; "-" indicates that no value is listed.

Research Reagent Solutions: Essential Tools for Retrosynthesis Implementation

Table 2: Key Research Tools and Databases for Retrosynthesis Implementation

| Tool/Database | Type | Primary Function | Application Context |
|---|---|---|---|
| RDChiral [26] | Software Library | Template extraction and application | Template-based and template-generative methods |
| RDKit [28] | Cheminformatics Toolkit | Molecule manipulation and SMILES processing | All paradigms, particularly semi-template methods |
| USPTO-50K/ FULL [24] [26] | Reaction Dataset | Benchmark training and evaluation | Model development and comparative validation |
| SMILES [24] [29] | Molecular Representation | String-based molecule encoding | Template-free and sequence-based approaches |
| Extended-Connectivity Fingerprints (ECFP) [25] | Molecular Descriptor | Structure similarity assessment | Template retrieval and similarity-based methods |
| AiZynthFinder [33] | Retrosynthesis Platform | Route planning and validation | Synthesis planning and model evaluation |

Experimental Protocols and Implementation Guidelines

Protocol 1: Implementing Template-Based Retrosynthesis Prediction

Objective: Predict reactants for a target molecule using a template-based approach following the LocalRetro methodology [29].

Materials and Reagents:

- Target product molecule (SMILES representation)

- Reaction template database (e.g., extracted from USPTO using RDChiral)

- RDKit cheminformatics toolkit

- LocalRetro model implementation

Procedure:

Molecular Representation:

- Convert target SMILES to molecular graph representation using RDKit

- Compute atom and bond features including atom type, hybridization, and ring membership

Template Matching:

- Identify all templates in database with product patterns matching substructures in target molecule

- For each matched template, extract corresponding reaction centers

Template Ranking:

- Apply graph neural network to encode molecular structure

- Compute compatibility scores between molecular contexts and candidate templates

- Incorporate global reactivity attention to capture non-local effects

- Rank templates by descending compatibility scores

Reactant Generation:

- Apply top-ranked template to target molecule using RDKit RunReactants function

- Validate generated reactants for chemical correctness

Validation:

- Confirm atom mapping consistency between products and generated reactants

- Verify reaction center alignment with known chemical mechanisms

Troubleshooting Tips:

- Low template match rates may indicate need for expanded template database

- Chemically implausible predictions may suggest inadequate template specificity

- Computational efficiency can be improved through template pre-screening
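The matching and ranking steps of this protocol can be sketched as filter-then-score. This is a simplified illustration, not LocalRetro's architecture: a dot product between hypothetical molecule and template embeddings stands in for the graph neural network and global reactivity attention, and substructure matching is reduced to set membership over hypothetical pattern labels (in practice, an RDKit substructure match of each template's product-side SMARTS).

```python
import math
from typing import Dict, List, Sequence, Set, Tuple


def softmax(xs: Sequence[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def rank_templates(
    mol_embedding: Sequence[float],
    mol_substructures: Set[str],
    templates: Dict[str, Tuple[str, Sequence[float]]],
) -> List[Tuple[str, float]]:
    """Keep templates whose product pattern matches, then rank by compatibility.

    templates maps template id -> (required product pattern, template embedding).
    """
    matched = [(tid, emb) for tid, (pattern, emb) in templates.items()
               if pattern in mol_substructures]
    if not matched:
        return []  # low match rate: consider an expanded template database
    scores = [sum(a * b for a, b in zip(mol_embedding, emb)) for _, emb in matched]
    probs = softmax(scores)
    return sorted(zip((tid for tid, _ in matched), probs),
                  key=lambda item: item[1], reverse=True)
```

Filtering before scoring mirrors the troubleshooting advice above: pre-screening templates by substructure keeps the more expensive scoring step small.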

Protocol 2: Implementing Template-Free Retrosynthesis with Edit-Based Models

Objective: Predict reactants using a template-free approach following the EditRetro iterative string-editing methodology [29].

Materials and Reagents:

- Target product molecule (canonical SMILES)

- EditRetro model architecture (an encoder with separate reposition, placeholder, and token decoders)

- Chemical token vocabulary

- SMILES augmentation utilities

Procedure:

Data Preparation:

- Convert target molecule to canonical SMILES representation

- Apply SMILES augmentation through random atom ordering to create multiple equivalent representations

Model Initialization:

- Initialize encoder with product SMILES tokens

- Generate initial hidden states through self-attention mechanism

Iterative Editing Process:

- Reposition Step: Predict token indices for reordering or deletion operations

- Placeholder Insertion: Predict number and positions of placeholder tokens

- Token Insertion: Replace placeholders with specific chemical tokens from vocabulary

- Repeat editing steps until termination condition met

Diversity Enhancement:

- Apply reposition sampling to explore alternative reaction pathways

- Utilize multiple augmented SMILES inputs to generate varied predictions

Validation:

- Check SMILES grammar validity of generated reactants

- Verify chemical consistency through round-trip validation (forward synthesis check)

Troubleshooting Tips:

- Invalid SMILES may require attention mechanism adjustment or training data expansion

- Limited diversity can be addressed through increased sampling temperature

- Poor accuracy on specific reaction types may indicate need for targeted data augmentation
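The three edit operations in the iterative editing step can be made concrete on a token list. In EditRetro each operation is predicted by its own decoder; in this toy sketch the edits are hand-specified so that the product CC(=O)NC is turned into the reactant string CC(=O)O.NC in one pass. The function names and the `<plh>` placeholder symbol are hypothetical.

```python
from typing import List, Sequence

PLH = "<plh>"  # placeholder token (hypothetical symbol)


def reposition(tokens: Sequence[str], index_seq: Sequence[int]) -> List[str]:
    """Reorder/delete: output[i] = tokens[index_seq[i]]; omitted indices are deleted."""
    return [tokens[i] for i in index_seq]


def insert_placeholders(tokens: Sequence[str], counts: Sequence[int]) -> List[str]:
    """Insert counts[i] placeholders into slot i (slot i sits before tokens[i])."""
    if len(counts) != len(tokens) + 1:
        raise ValueError("need one count per slot (len(tokens) + 1)")
    out: List[str] = []
    for i, n in enumerate(counts):
        out.extend([PLH] * n)
        if i < len(tokens):
            out.append(tokens[i])
    return out


def fill_placeholders(tokens: Sequence[str], fills: Sequence[str]) -> List[str]:
    """Replace placeholders left to right with predicted chemical tokens."""
    fill_iter = iter(fills)
    return [next(fill_iter) if t == PLH else t for t in tokens]


# Hand-specified edits: keep all tokens, open two slots before "N", fill "O" and ".".
product = ["C", "C", "(", "=", "O", ")", "N", "C"]
edited = fill_placeholders(
    insert_placeholders(reposition(product, range(len(product))),
                        [0, 0, 0, 0, 0, 0, 2, 0, 0]),
    ["O", "."],
)
```

Joining `edited` gives CC(=O)O.NC, acetic acid plus methylamine. Because most product tokens survive unchanged, an edit-based model only has to predict the small difference, which is one motivation for this framing over generating reactants from scratch.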

Protocol 3: Implementing Template-Generative Retrosynthesis

Objective: Generate novel reaction templates for a target molecule using site-specific template methodology [26].

Materials and Reagents:

- Target product molecule with optional reaction center specifications

- Conditional Kernel-elastic Autoencoder (CKAE) architecture

- RDChiral for template application

- USPTO training data for model conditioning

Procedure:

Input Preparation:

- Convert target molecule to graph representation

- Optionally label specific reaction centers with "*" symbols for site-specific generation

- If no centers specified, model will identify potential reaction sites

Template Generation:

- For deterministic generation: Use encoder-decoder architecture to translate product to SST (Site-Specific Template)

- For sampling-based generation: Employ CKAE to sample templates from latent space conditioned on product

- Generate templates focusing exclusively on reaction centers without broader structural context

Template Application:

- Apply generated templates to target molecule using RDChiral pattern matching

- Execute RunReactants function to produce precursor candidates

Similarity Assessment:

- Compute latent space distance between generated templates and known reactions

- Filter templates based on chemical viability thresholds

Validation:

- Verify template specificity through exact atom mapping

- Assess novelty by comparison with existing template databases

- Confirm synthetic feasibility through expert evaluation or literature validation

Troubleshooting Tips:

- Overly generic templates may require stricter conditioning on molecular context

- Low viability rates may indicate need for latent space regularization

- Application failures may suggest mismatches in reaction center handling
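The similarity-assessment step can be sketched as a nearest-neighbour check in latent space. The Gaussian (RBF) kernel, the `gamma` and `threshold` values, and the latent vectors are assumptions for illustration; the published model's kernel-based metric may be defined differently, and real latent vectors would come from the trained encoder.

```python
import math
from typing import Dict, List, Sequence, Tuple


def rbf_similarity(a: Sequence[float], b: Sequence[float], gamma: float = 1.0) -> float:
    """Gaussian (RBF) kernel: 1.0 for identical vectors, decaying with distance."""
    sq_dist = sum((x - y) ** 2 for x, y in zip(a, b))
    return math.exp(-gamma * sq_dist)


def filter_by_known_similarity(
    generated: Dict[str, Sequence[float]],  # template id -> latent vector
    known: List[Sequence[float]],           # latent vectors of known reactions
    threshold: float = 0.5,
    gamma: float = 1.0,
) -> List[Tuple[str, float]]:
    """Keep generated templates whose nearest known reaction is similar enough."""
    kept = []
    for tid, z in generated.items():
        best = max(rbf_similarity(z, k, gamma) for k in known)
        if best >= threshold:
            kept.append((tid, best))
    return sorted(kept, key=lambda item: item[1], reverse=True)
```

A stricter threshold favors templates close to precedent; a looser one admits more novel templates at higher risk, which is the trade-off behind the "low viability rates" tip above.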

Protocol 4: Privacy-Aware Retrosynthesis Learning

Objective: Train retrosynthesis models across multiple institutions without sharing proprietary reaction data, using the Chemical Knowledge-Informed Framework (CKIF) [25].

Materials and Reagents:

- Local reaction datasets from multiple participants

- Base retrosynthesis model architecture (e.g., sequence-to-sequence Transformer)

- Molecular fingerprint calculator (ECFP or MACCS)

- Federated learning infrastructure

Procedure:

Client Initialization:

- Each participant initializes local model with common architecture

- Preprocess proprietary reaction data to product-reactant pairs

Local Training Phase:

- Each client trains model independently on local data

- Compute model updates based on local gradients

Chemical Knowledge-Informed Aggregation:

- Clients exchange model parameters (not raw data) with central server

- Receiving client computes molecular fingerprint similarities between predicted reactants and ground truth

- Generate adaptive aggregation weights based on chemical similarity metrics

Model Personalization:

- Combine local model with aggregated external models using chemistry-aware weights

- Fine-tune personalized model on local data distribution

Iterative Refinement:

- Repeat communication rounds for multiple cycles

- Monitor performance on local validation sets

- Adjust aggregation strategy based on client-specific objectives

Troubleshooting Tips:

- Performance disparities may require client-specific weighting adjustments

- Privacy concerns can be addressed through differential privacy or homomorphic encryption

- Data heterogeneity issues may benefit from personalized aggregation strategies
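The chemistry-aware aggregation step can be sketched as follows: the receiving client scores each model by the mean Tanimoto similarity between predicted and ground-truth reactant fingerprints on its local data, converts those scores to weights, and averages parameter vectors. The temperature-scaled softmax is an assumption; CKIF's exact weighting formula may differ. Fingerprints are given as sets of on-bits, which could come from ECFP or MACCS keys computed elsewhere.

```python
import math
from typing import Dict, List, Sequence, Set


def tanimoto(a: Set[int], b: Set[int]) -> float:
    """Tanimoto similarity of two fingerprints given as sets of on-bits."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def chemistry_aware_weights(
    predictions: Dict[str, List[Set[int]]],  # model id -> predicted reactant fingerprints
    ground_truth: List[Set[int]],            # local ground-truth reactant fingerprints
    temperature: float = 0.1,
) -> Dict[str, float]:
    """Softmax over each model's mean Tanimoto similarity on local data (assumed form)."""
    mean_sim = {
        mid: sum(tanimoto(p, t) for p, t in zip(preds, ground_truth)) / len(ground_truth)
        for mid, preds in predictions.items()
    }
    top = max(mean_sim.values())
    exps = {mid: math.exp((s - top) / temperature) for mid, s in mean_sim.items()}
    total = sum(exps.values())
    return {mid: e / total for mid, e in exps.items()}


def aggregate(params: Dict[str, Sequence[float]], weights: Dict[str, float]) -> List[float]:
    """Weighted average of flattened parameter vectors; no raw reaction data is exchanged."""
    size = len(next(iter(params.values())))
    return [sum(weights[mid] * params[mid][i] for mid in params) for i in range(size)]
```

Lowering `temperature` concentrates weight on the best-matching model (more personalization); raising it moves the result toward a uniform average.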

Workflow Visualization

Future Perspectives and Research Directions

The field of computer-aided retrosynthesis continues to evolve rapidly, with several emerging trends shaping its future trajectory. The integration of large language models (LLMs) represents a significant advancement, as demonstrated by RetroDFM-R, which combines chemical domain knowledge with chain-of-thought reasoning through reinforcement learning [24]. This approach bridges the gap between general-purpose LLMs and specialized chemical reasoning, enabling more transparent and explainable predictions.

Multi-step retrosynthetic planning is another critical frontier, where single-step predictions are composed into complete synthetic routes [27]. Recent neurosymbolic approaches inspired by DreamCoder alternate between extending reaction template libraries and refining neural network guidance, mimicking human learning processes [27]. These systems can abstract common multi-step patterns such as cascade and complementary reactions, significantly improving planning efficiency for groups of similar molecules [27].

Privacy-preserving collaborative learning addresses the significant challenge of data sensitivity in chemical research [25]. Frameworks like CKIF (Chemical Knowledge-Informed Framework) enable distributed training across organizations without sharing proprietary reaction data, using chemical knowledge-informed aggregation of model parameters instead of raw data exchange [25]. This approach facilitates collaboration while protecting competitive advantages.

The incorporation of 3D conformational information represents another important direction, as spatial relationships critically influence reaction outcomes [31]. Methods that integrate molecular conformer data with sequence-based approaches can better capture stereochemistry and spatial constraints, particularly for complex polycyclic and heteroaromatic compounds [31].

Finally, the tight integration of retrosynthesis prediction with generative molecular design creates promising opportunities for directly optimizing synthesizability during molecular generation [33]. By treating retrosynthesis models as oracles in optimization loops, researchers can focus generative exploration on chemically accessible regions of molecular space, particularly for challenging domains like functional materials where traditional synthesizability heuristics may fail [33].

As these advancements mature, we anticipate increasingly sophisticated CASP systems that seamlessly integrate chemical knowledge, reasoning capabilities, and practical constraints to transform synthetic planning across pharmaceutical development, materials science, and chemical discovery.

The field of Computer-Aided Synthesis Planning (CASP) is undergoing a significant transformation driven by advanced machine learning methods. Traditional CASP approaches, which relied on predefined reaction rules and expert systems, are often limited in their coverage of chemical space and generally unable to propose novel transformations. Generative artificial intelligence offers a paradigm shift by enabling the exploration of previously uncharted synthetic pathways [26]. In this context, combining Site-Specific Templates (SST) with Conditional Kernel-elastic Autoencoders (CKAE) is a cutting-edge architecture designed to cope with the vast complexity of chemical space and the limitations of experimental datasets. Rather than selecting templates from a fixed library, the framework generates them, allowing the discovery of novel, chemically viable reaction templates. This matters in drug discovery, where rapid and efficient synthesis planning for complex small molecules can shorten development timelines that traditionally take 10 to 15 years and reduce costs that often exceed USD 2.6 billion per drug [34]. This document provides detailed application notes and protocols for implementing this generative framework within a CASP research environment.

Core Concepts and Definitions

Site-Specific Templates (SST)

A Site-Specific Template (SST) is a concise reaction representation that captures only the atoms and bonds directly involved in a chemical transformation. Unlike broader reaction templates that may include neighboring atomic context or specific functional groups, SSTs focus exclusively on the reaction centers. This is a deliberate design choice to enhance generalization and applicability across diverse molecular structures.

- Protocol for SST Generation: SSTs are prepared by setting the radius parameter in template extraction tools like RDChiral to 0, which ensures that no atoms beyond the immediate reaction centers are captured [26]. Special functional groups and specifications for explicit degrees or numbers of hydrogens are also removed from the template definition. The prerequisite for using an SST is a Center-Labeled Product (CLP), where the specific reaction centers within the target molecule are marked with a special symbol (e.g., "*") to avoid ambiguity in template application. This combination ensures that the generated templates are both general and applied precisely to the intended molecular site [26].

Conditional Kernel-elastic Autoencoder (CKAE)

The Conditional Kernel-elastic Autoencoder (CKAE) is a generative machine learning model that forms the core of the proposed architecture. It is a type of conditional variational autoencoder that incorporates a latent space with kernel-based metrics for measuring similarity.

- Architectural Principle: The CKAE is trained to learn a structured latent representation of reaction templates conditioned on the input product molecule. During training, the model conditions on the corresponding products, allowing it to learn the relationship between a molecule and the possible transformations it can undergo [26]. Its key innovation lies in its latent space, which enables interpolation, extrapolation, and distance measurement between different reaction templates. This allows for the generation of new templates and provides a quantitative measure of similarity to known, validated reactions, offering insights into the chemical viability of proposed pathways [26] [30].
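For orientation, the standard conditional VAE objective that such models build on is shown below, with x the center-labeled product, t the site-specific template, and z the latent vector. This is the generic lower bound optimized by stochastic gradient variational Bayes; the kernel-based terms that distinguish the CKAE are not reproduced here.

```latex
\log p_\theta(t \mid x) \;\ge\;
  \mathbb{E}_{q_\phi(z \mid x, t)}\big[\log p_\theta(t \mid x, z)\big]
  \;-\; D_{\mathrm{KL}}\big(q_\phi(z \mid x, t) \,\|\, p(z \mid x)\big)
```

The first term rewards reconstructing the template from its latent code. The KL term keeps the encoder's posterior close to the conditional prior, which is what makes sampling z from that prior at generation time produce meaningful templates.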

Experimental Protocols and Workflows

Protocol 1: Data Preparation and Template Extraction

This protocol details the process of preparing a dataset for training a generative template model.

- Data Sourcing: Obtain a large-scale dataset of chemical reactions with atom-mapping information, such as the USPTO-FULL dataset.

- Center-Labeled Product (CLP) Generation:

- Use the RDChiral library or similar tools to identify and label the changed atoms in the product molecule [26].

- The output is the product structure with its reaction centers explicitly marked (e.g., using an asterisk '*').

- Site-Specific Template (SST) Extraction:

- Using the atom-mapping information, employ RDChiral with the radius parameter set to 0.

- Extract the SMARTS string that defines only the atoms and bonds that change during the reaction, excluding neighboring context and explicit hydrogen counts.

- Store the resulting SSTs paired with their corresponding CLPs.
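The core of the CLP labeling step is deciding which mapped atoms changed. The sketch below is a simplified illustration, assuming hand-written atom-map numbers and bonds for acetic acid + methylamine giving N-methylacetamide: it flags product atoms whose bonded neighbours or bond orders differ between the two sides, which is the radius-0 scope of an SST. RDChiral's own change detection additionally tracks hydrogen counts, charges, aromaticity, and chirality, which this sketch ignores.

```python
from typing import Dict, Iterable, List, Set, Tuple

MappedBond = Tuple[int, int, int]  # (atom-map number a, atom-map number b, bond order)


def bond_environment(bonds: Iterable[MappedBond]) -> Dict[int, Dict[int, int]]:
    """Map each atom-map number to {neighbor map number: bond order}."""
    env: Dict[int, Dict[int, int]] = {}
    for a, b, order in bonds:
        env.setdefault(a, {})[b] = order
        env.setdefault(b, {})[a] = order
    return env


def reaction_centers(
    reactant_bonds: Iterable[MappedBond],
    product_bonds: Iterable[MappedBond],
    product_atoms: Set[int],
) -> List[int]:
    """Product atoms whose bonded environment changes (radius-0 template scope)."""
    r_env = bond_environment(reactant_bonds)
    p_env = bond_environment(product_bonds)
    return sorted(m for m in product_atoms if r_env.get(m, {}) != p_env.get(m, {}))


# Map numbers: 1 CH3, 2 C(=O), 3 =O, 4 OH (leaves as water), 5 N, 6 N-CH3.
REACTANT_BONDS = [(1, 2, 1), (2, 3, 2), (2, 4, 1), (5, 6, 1)]
PRODUCT_BONDS = [(1, 2, 1), (2, 3, 2), (2, 5, 1), (5, 6, 1)]
PRODUCT_ATOMS = {1, 2, 3, 5, 6}
```

Here only the carbonyl carbon (2) and the nitrogen (5) change, so those are the atoms that would be marked with "*" in the center-labeled product.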

Protocol 2: Model Training and Template Generation

This protocol outlines the steps for training the CKAE model and generating novel reaction templates.

- Model Architecture Setup:

- Implement a Sequence-to-Sequence (S2S) architecture where the encoder processes the Center-Labeled Product (CLP) and the decoder generates the Site-Specific Template (SST).

- Incorporate the conditional kernel-elastic objective to shape the latent space, allowing for smooth interpolation [26].

- Model Training:

- Input: The model takes the CLP as its primary input.

- Conditioning (Optional): For site-specific generation, the model can also accept user-specified reaction centers as a conditioning input, directing the generative process.

- Output: The model is trained to output the correct SST.

- Training Loop: Train the model to maximize the conditional marginal log-likelihood of the templates given the products; in practice this is done by maximizing a variational lower bound with a framework such as stochastic gradient variational Bayes.

- Template Generation and Sampling:

- Deterministic Generation: For a given target molecule, the trained model can directly translate it into one or more candidate SSTs.

- Stochastic Sampling: Leverage the learned latent space of the CKAE. Sample a latent vector z and, conditioned on the target molecule, decode it into a novel reaction template. This allows for the exploration of diverse and non-obvious retrosynthetic disconnections.
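For orientation, the standard stochastic gradient variational Bayes bound for a conditional latent-variable model is shown below. Here x is the template (SST), c is the conditioning input (the CLP plus any user-specified reaction centers), and z is the latent vector. This is the generic conditional-VAE form and is given as an assumption. The CKAE's kernel-elastic term, which further shapes the latent space, is not reproduced here. Training maximizes the right-hand side using the reparameterized sample on the second line. At generation time, z is drawn from the prior p(z | c), often simplified to a standard normal, and decoded together with c.

```latex
\begin{aligned}
\log p_\theta(x \mid c) &\ge \mathbb{E}_{q_\phi(z \mid x, c)}\big[\log p_\theta(x \mid z, c)\big] - \mathrm{KL}\big(q_\phi(z \mid x, c) \,\|\, p(z \mid c)\big),\\
z &= \mu_\phi(x, c) + \sigma_\phi(x, c) \odot \epsilon, \qquad \epsilon \sim \mathcal{N}(0, I).
\end{aligned}
```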

Protocol 3: Template Application and Route Validation

This protocol describes how to use the generated templates for retrosynthetic planning and validate the proposed pathways.

- Reactant Generation:

- For each generated SST, apply it to the target product molecule using the "RunReactants" function from the RDKit library.

- This function performs a substructure match and applies the transformation to generate precursor molecules.

- Route Exploration and Selection:

- Rank the generated precursor sets using the model's beam score or the similarity of the template's latent vector to known reactions in the dataset.

- Proceed recursively with the generated precursors as new target molecules to build multi-step synthetic trees.

- Experimental Validation:

- Select the most promising synthetic route based on step count, feasibility, and similarity to known reactions.

- Execute the synthesis in the laboratory to confirm the viability of the pathway, as demonstrated in the referenced work where a 3-step route was successfully validated for a molecule that previously required 5-9 steps [26] [30].
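The recursive expansion in Protocol 3 can be sketched as a depth-limited search. In this sketch, a hypothetical `expand` dictionary stands in for "generate SSTs and apply them with RunReactants". It returns the alternative precursor sets for each molecule. A `purchasable` set stands in for a building-block catalogue. Molecule names are placeholders, and routes are ranked only by step count, which is one of the selection criteria named above.

```python
from typing import Dict, List, Set, Tuple

# Hypothetical stand-in for "generate SSTs, then apply them with RunReactants":
# each molecule maps to alternative precursor sets (one entry per applicable template).
Expansions = Dict[str, List[Tuple[str, ...]]]
Route = List[Tuple[str, Tuple[str, ...]]]   # ordered (product, precursors) steps


def find_routes(target: str, expand: Expansions, purchasable: Set[str],
                max_depth: int = 5) -> List[Route]:
    """Enumerate routes whose leaves are all purchasable, with at most max_depth levels."""
    if target in purchasable:
        return [[]]                      # nothing left to make
    if max_depth == 0:
        return []                        # depth budget exhausted: dead end
    routes: List[Route] = []
    for precursors in expand.get(target, []):
        partial: List[Route] = [[(target, precursors)]]
        for mol in precursors:
            sub_routes = find_routes(mol, expand, purchasable, max_depth - 1)
            if not sub_routes:           # one unsolvable precursor kills this disconnection
                partial = []
                break
            # Combine every partial route with every way of making this precursor.
            partial = [p + s for p in partial for s in sub_routes]
        routes.extend(partial)
    return routes


expand = {"T": [("A", "B"), ("C",)], "A": [("A1", "A2")], "C": [("C1",)], "C1": [("C2",)]}
purchasable = {"B", "A1", "A2", "C2"}
routes = sorted(find_routes("T", expand, purchasable), key=len)   # fewest steps first
for route in routes:
    print(len(route), route)
```

Exhaustive enumeration grows combinatorially. A practical planner would prune this search using the model's beam scores or latent-space similarity rather than expanding every candidate.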

Data Presentation and Performance

The performance of the SST and CKAE approach has been benchmarked on standard datasets, demonstrating its competitiveness with state-of-the-art methods. The key metric is Top-K accuracy, which measures the percentage of test reactions for which the ground-truth reactants appear within the top K proposals.
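As a concrete reading of the metric, the sketch below computes Top-K accuracy. It assumes that each test reaction has a ranked list of predicted reactant sets and a single ground-truth set. Both are written as canonical, '.'-joined SMILES strings, and canonicalization is assumed to have been done upstream.

```python
from typing import Dict, Sequence


def top_k_accuracy(ranked_predictions: Sequence[Sequence[str]],
                   ground_truth: Sequence[str],
                   ks: Sequence[int] = (1, 5, 10)) -> Dict[int, float]:
    """Percentage of test reactions whose ground truth appears among the top-k proposals."""
    if not ground_truth or len(ranked_predictions) != len(ground_truth):
        raise ValueError("need at least one test reaction and one ranked list per reaction")
    n = len(ground_truth)
    result: Dict[int, float] = {}
    for k in ks:
        hits = sum(truth in list(preds)[:k] for preds, truth in zip(ranked_predictions, ground_truth))
        result[k] = 100.0 * hits / n
    return result


preds = [["CCO.CC(=O)Cl", "CCO"], ["CC(=O)O", "CC=O"], ["c1ccccc1", "C1CCCCC1"], ["CN", "CNC"]]
truth = ["CCO.CC(=O)Cl", "CC=O", "CCN", "CNC"]
print(top_k_accuracy(preds, truth, ks=(1, 2)))  # {1: 25.0, 2: 75.0}
```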

Table 1: Benchmarking Performance of SST Generative Models on USPTO-FULL Dataset [26]

| Model Type | Model Name | Top-1 Accuracy | Top-5 Accuracy | Top-10 Accuracy | Key Feature |
|---|---|---|---|---|---|
| Deterministic | Model A (Product to SST/CLP) | See Fig. 3 of [26] | See Fig. 3 of [26] | See Fig. 3 of [26] | Translates product to templates |
| Deterministic | Model B (Product & Site to SST) | See Fig. 3 of [26] | See Fig. 3 of [26] | See Fig. 3 of [26] | Accepts user-specified reaction sites |
| Generative (CKAE) | Sampling Model | See Fig. 3 of [26] | See Fig. 3 of [26] | See Fig. 3 of [26] | Latent space for novel template generation |

Table 2: Key Research Reagents and Computational Tools

| Item Name | Type | Function in Protocol | Source/Reference |
|---|---|---|---|
| USPTO-FULL Dataset | Dataset | Provides hundreds of thousands of atom-mapped reactions for training and benchmarking generative models. | [26] |
| RDChiral | Software Library | Used for the extraction of reaction templates (SSTs) from atom-mapped reaction data and for applying templates to molecules. | [26] |
| RDKit | Software Library | Open-source cheminformatics toolkit; its "RunReactants" function is critical for applying generated templates to target molecules to produce precursor structures. | [26] |
| Conditional Kernel-elastic Autoencoder (CKAE) | Model Architecture | The core generative model that learns a latent space of reaction templates conditioned on molecular input, enabling the generation of novel templates. | [26] |
| PyTorch / PyTorch Lightning | Framework | A flexible deep-learning framework suitable for implementing and training complex models like variational autoencoders. | [35] [36] |

Workflow Visualization

Figure: SST and CKAE retrosynthetic workflow.

Figure: CKAE model architecture.

Predicting Reaction Conditions with Graph Neural Networks and Clustering Frameworks

Within the framework of computer-aided synthesis planning (CASP), the accurate prediction of reaction conditions is a critical step for translating planned synthetic routes into practical laboratory execution. While significant progress has been made in predicting reaction outcomes and products, achieving diverse suggestions while ensuring the reasonableness of predictions remains a substantial challenge [37]. This application note details the implementation and protocol for "Reacon," a novel template- and cluster-based framework that leverages Graph Neural Networks (GNNs) to provide reliable and diverse reaction condition predictions, thereby enhancing the practical utility of CASP systems [38].

Core Methodology and Performance

The Reacon framework integrates graph neural networks, reaction templates, and a bespoke clustering algorithm to forecast reaction conditions—comprising catalysts, solvents, and reagents—as a cohesive system [37].

The methodology operates through a sequential workflow:

- Template Extraction and Matching: For a given reactant-product pair, Reacon extracts and matches reaction templates at three levels of specificity (r1, r0, and r0*) from a pre-built template-condition library. This step narrows the search space for plausible conditions [37].

- Condition Prediction with GNNs: A Directed Message Passing Neural Network (D-MPNN) processes the molecular graphs of the reactants and the differences between reactants and products to generate initial condition predictions [37].

- Condition Clustering: A label-based clustering algorithm groups conditions with similar functional groups, elemental compositions, and chemical functionalities, ensuring diversity and coherence in the final recommendations [37].
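The directed message passing at the core of a D-MPNN can be illustrated in a few lines. This is a minimal, untrained sketch, not Reacon's implementation. Hidden states live on directed bonds. Each bond v->w repeatedly aggregates the states of bonds entering v, excluding its own reverse bond w->v. Atom vectors are then read out by summing incoming bond states. The initial bond states, weight matrix, and depth are toy assumptions. The readout is simplified to a plain sum without the learned output layer. The sketch also omits Reacon's reactant-product difference input.

```python
from typing import Dict, List, Tuple

Vec = List[float]
Edge = Tuple[int, int]  # directed bond (source atom v, target atom w)


def relu(v: Vec) -> Vec:
    return [max(0.0, x) for x in v]


def add(a: Vec, b: Vec) -> Vec:
    return [x + y for x, y in zip(a, b)]


def matvec(w: List[Vec], v: Vec) -> Vec:
    return [sum(wi * vi for wi, vi in zip(row, v)) for row in w]


def dmpnn_atom_vectors(h0: Dict[Edge, Vec], w_msg: List[Vec], depth: int = 3) -> Dict[int, Vec]:
    """Toy D-MPNN forward pass.

    h0 holds the initial hidden state of every directed bond (both directions of each
    chemical bond must be present); in a real model it is computed from atom and bond features.
    """
    dim = len(next(iter(h0.values())))
    h = dict(h0)
    for _ in range(depth - 1):
        new_h: Dict[Edge, Vec] = {}
        for (v, w) in h:
            # Message for v->w: sum over bonds k->v entering v, excluding the reverse bond w->v.
            m = [0.0] * dim
            for (k, target), h_kv in h.items():
                if target == v and k != w:
                    m = add(m, h_kv)
            new_h[(v, w)] = relu(add(h0[(v, w)], matvec(w_msg, m)))
        h = new_h
    # Readout: each atom sums the hidden states of its incoming directed bonds.
    atoms: Dict[int, Vec] = {}
    for (v, w), h_vw in h.items():
        atoms[w] = add(atoms.get(w, [0.0] * dim), h_vw)
    return atoms


# Three-atom chain 0-1-2, each bond stored in both directions, 2-dimensional toy features.
h0 = {(0, 1): [1.0, 0.5], (1, 0): [1.0, 0.5], (1, 2): [1.0, 0.5], (2, 1): [1.0, 0.5]}
identity = [[1.0, 0.0], [0.0, 1.0]]
print(dmpnn_atom_vectors(h0, identity, depth=2))  # every atom ends up with [2.0, 1.0]
```

Using bond-centred rather than atom-centred states means that a message never flows straight back along the bond it came from. This is the property that distinguishes a D-MPNN from a plain atom-level message passing network.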

Quantitative Performance

The model was developed on a refined USPTO dataset of 690,872 reactions, split 0.8:0.1:0.1 into training, validation, and test sets. It was evaluated on its ability to recall recorded conditions. The table below summarizes its key performance metrics.

Table 1: Performance Metrics of the Reacon Framework on the USPTO Test Set

| Metric | Top-1 Accuracy | Top-3 Accuracy |
|---|---|---|
| Overall Condition Recall | Not Specified | 63.48% [37] |
| Cluster-Level Recall | Not Specified | 85.65% [37] |
| External Validation (Recent Syntheses) | Not Specified | 85.00% [38] |

The high cluster-level accuracy demonstrates the framework's capability to suggest closely related and functionally similar conditions even when the exact recorded condition is not top-ranked, providing chemists with a curated set of viable alternatives [38] [37].
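The difference between exact and cluster-level recall can be made concrete as follows. The sketch is one plausible reading of the two metrics, not the authors' evaluation code. It treats each condition as an opaque identifier and assumes a hypothetical `cluster_of` mapping from conditions to clusters, as built in Protocol B. A hit counts for exact recall when the recorded condition appears among the top-k predictions. It counts for cluster-level recall when any top-k prediction shares the recorded condition's cluster.

```python
from typing import Dict, Sequence, Tuple


def condition_recall(predictions: Sequence[Sequence[str]], recorded: Sequence[str],
                     cluster_of: Dict[str, int], k: int = 3) -> Tuple[float, float]:
    """Return (exact recall, cluster-level recall) in percent over the top-k predictions."""
    n = len(recorded)
    if n == 0:
        raise ValueError("no recorded conditions to evaluate")
    exact_hits = cluster_hits = 0
    for preds, truth in zip(predictions, recorded):
        top = list(preds)[:k]
        exact_hits += truth in top
        truth_cluster = cluster_of.get(truth)
        if truth_cluster is not None and any(cluster_of.get(p) == truth_cluster for p in top):
            cluster_hits += 1
    return 100.0 * exact_hits / n, 100.0 * cluster_hits / n


cluster_of = {"cond_a": 0, "cond_b": 0, "cond_c": 1, "cond_d": 2}
predictions = [["cond_a", "cond_c", "cond_d"], ["cond_b", "cond_d", "cond_c"]]
recorded = ["cond_a", "cond_a"]
print(condition_recall(predictions, recorded, cluster_of))  # (50.0, 100.0)
```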

Experimental Protocols

Protocol A: Data Preprocessing and Template-Condition Library Construction

This protocol outlines the steps for curating the training data from the USPTO dataset and constructing the essential template-condition library.

- Objective: To prepare a high-quality, curated dataset of reactions and their associated templates and conditions for model training.

- Materials: USPTO patent dataset, RDKit, RDChiral.

- Procedure:

- Data Cleaning: Parse all reaction SMILES using RDKit. Remove any reactions that cannot be parsed [37].

- Template Extraction: Use RDChiral to extract three template types (r1, r0, and r0*) for each reaction. Discard reactions for which no template can be extracted or whose template occurs fewer than 5 times in the dataset [37].

- Condition Processing: Identify catalysts, solvents, and reagents. Molecules with atom mapping are defined as reactants; those without are considered condition components. Remove reactions containing any condition component that appears fewer than 5 times [37].

- Final Filtering: Discard reactions with conditions that occurred only once, or that involve more than one catalyst, two solvents, or three reagents [37].

- Library Construction: Using the cleaned training data, construct a template-condition library that maps each unique template (r1, r0, and r0*) to its associated set of recorded reaction conditions [37].

- Data Splitting: Randomly split the final dataset of 690,872 reactions into training, validation, and test sets with a 0.8:0.1:0.1 ratio [37].
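The frequency filters and the random split in Protocol A can be sketched as below. The record format and function names are assumptions: each reaction carries a template string and tuples of catalysts, solvents, and reagents. The thresholds follow the protocol. Templates and condition components seen fewer than 5 times are dropped, as are reactions with more than one catalyst, two solvents, or three reagents. Counts are computed in a single pass, and the single-occurrence condition filter is omitted for brevity.

```python
import random
from collections import Counter
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Reaction:
    template: str                 # extracted template (placeholder strings in the example)
    catalysts: Tuple[str, ...]
    solvents: Tuple[str, ...]
    reagents: Tuple[str, ...]

    def components(self) -> Tuple[str, ...]:
        return self.catalysts + self.solvents + self.reagents


def filter_reactions(rxns: List[Reaction], min_count: int = 5) -> List[Reaction]:
    """Single-pass frequency and role-count filters in the spirit of Protocol A."""
    tpl_counts = Counter(r.template for r in rxns)
    comp_counts = Counter(c for r in rxns for c in r.components())
    return [
        r for r in rxns
        if tpl_counts[r.template] >= min_count
        and all(comp_counts[c] >= min_count for c in r.components())
        and len(r.catalysts) <= 1 and len(r.solvents) <= 2 and len(r.reagents) <= 3
    ]


def split_811(rxns: List[Reaction], seed: int = 0) -> Tuple[List[Reaction], List[Reaction], List[Reaction]]:
    """Shuffle with a fixed seed, then cut into 80% train, 10% validation, 10% test."""
    shuffled = list(rxns)
    random.Random(seed).shuffle(shuffled)
    n_train = len(shuffled) * 8 // 10     # integer arithmetic avoids float rounding surprises
    n_val = len(shuffled) // 10
    return shuffled[:n_train], shuffled[n_train:n_train + n_val], shuffled[n_train + n_val:]


data = [Reaction("tpl_1", (), ("THF",), ("NaH",))] * 6 + [Reaction("tpl_2", (), ("DMF",), ("rare_reagent",))]
kept = filter_reactions(data)
print(len(kept))  # 6: tpl_2 and its components occur only once
train, val, test = split_811(kept * 10)
print(len(train), len(val), len(test))  # 48 6 6
```

Removing reactions can push other templates or components below the threshold. Repeating the filter until the counts stop changing is a stricter alternative.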

Protocol B: Condition Labeling and Clustering Algorithm

This protocol defines the process of featurizing reaction components and clustering similar conditions to enhance prediction diversity.

- Objective: To group chemically similar reaction conditions, facilitating diverse and reasonable suggestions.

- Materials: Processed dataset from Protocol A, label criteria (Table 2).

- Procedure:

- Feature Extraction: For each catalyst, solvent, and reagent, assign a set of non-exclusive labels from 31 possible categories. These encompass [37]:

- Functional Groups (21 labels): e.g., alkene, alcohol, halide, aromatic, amine.

- Elemental Composition (3 labels): Transition metal, reducing metal, main group metal.

- Chemical Function (5 labels): oxidizer, reductant, acid, base, Lewis acid.

- Other (2 labels): Ionic, other.

- Cluster Assignment: Two conditions are grouped into the same cluster if they meet the following criteria [37]:

- They share an identical catalyst label (or both have no catalyst).

- The combined solvent and reagent labels have at least two overlapping labels if the total number of labels exceeds 2; otherwise, the labels must be entirely identical.

- Cluster Management: For a new reaction, assign it to an existing cluster if it meets the criteria. If no match is found, create a new cluster. In case of multiple matching clusters, assign to the cluster with the most label intersections [37].

Table 2: Label Categories for Reaction Condition Clustering

| Feature Type | Number of Labels | Example Labels |
|---|---|---|
| Functional Group | 21 | Alkene, alkyne, alcohol, ether, aldehyde, ketone, carboxylic acid, ester, amide, nitro, amine, halide, aromatic [37] |
| Element | 3 | Transition metal, reducing metal, main group metal [37] |
| Function | 5 | Oxidizer, reductant, acid, Lewis acid, base [37] |
| Else | 2 | Ionic, other [37] |
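The cluster-assignment rules in Protocol B translate into a short greedy procedure. In this sketch, each condition is represented by its catalyst label set and by the union of its solvent and reagent labels, and the label names are taken from the table above. The phrase "total number of labels exceeds 2" is read as the size of the union of the two conditions' solvent/reagent label sets. That is an interpretation rather than a detail confirmed by the source. Each cluster is represented by its first member.

```python
from dataclasses import dataclass
from typing import FrozenSet, List


@dataclass(frozen=True)
class Condition:
    catalyst_labels: FrozenSet[str]   # empty set means "no catalyst"
    other_labels: FrozenSet[str]      # union of solvent and reagent labels


def same_cluster(a: Condition, b: Condition) -> bool:
    """Pairwise criterion from Protocol B (the '> 2 labels' reading is an assumption)."""
    if a.catalyst_labels != b.catalyst_labels:
        return False
    overlap = len(a.other_labels & b.other_labels)
    if len(a.other_labels | b.other_labels) > 2:
        return overlap >= 2
    return a.other_labels == b.other_labels


def assign_clusters(conditions: List[Condition]) -> List[int]:
    """Greedy assignment; each cluster is represented by its first member."""
    reps: List[Condition] = []
    assignment: List[int] = []
    for cond in conditions:
        matches = [i for i, rep in enumerate(reps) if same_cluster(cond, rep)]
        if matches:
            # Several matching clusters: pick the one sharing the most labels.
            best = max(matches, key=lambda i: len(cond.other_labels & reps[i].other_labels))
            assignment.append(best)
        else:
            reps.append(cond)
            assignment.append(len(reps) - 1)
    return assignment


tm = frozenset({"transition metal"})
no_cat: FrozenSet[str] = frozenset()
conditions = [
    Condition(tm, frozenset({"ether", "base", "ionic"})),
    Condition(tm, frozenset({"aromatic", "base", "ionic"})),
    Condition(no_cat, frozenset({"reductant", "alcohol"})),
    Condition(no_cat, frozenset({"reductant", "alcohol"})),
]
print(assign_clusters(conditions))  # [0, 0, 1, 1]
```

Comparing each new condition only against a cluster's first member keeps the procedure simple but makes it order-dependent. Comparing against every member of a cluster would be a stricter alternative.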

Protocol C: Model Training and Prediction Inference

This protocol covers the training of the GNN model and its application for predicting conditions for a novel reaction.

- Objective: To train the prediction model and use it to infer reaction conditions for a new reactant-product pair.

- Materials: Preprocessed and split dataset (from Protocol A), PyTorch or Deep Graph Library (DGL), D-MPNN or GAT model architecture.

- Procedure - Model Training:

- Input Representation: For each reaction, the model input consists of the molecular graph of the reactant(s) and the graph difference between the reactant and product [37].

- Model Architecture: Employ a D-MPNN to learn a molecular representation. The network uses atom features (atom type, bond count, formal charge) and bond features (bond order, stereochemistry) [37].

- Training: Train the model to predict the most likely reaction conditions (catalyst, solvent, reagent) from the template-condition library. The model is trained using standard backpropagation and a cross-entropy or multi-label loss function, validated on the separate validation set [37].

- Procedure - Prediction Inference:

- Template Matching: For a new reaction, extract its templates and query the template-condition library to retrieve a candidate set of plausible conditions [37].

- Model Prediction: The trained GNN processes the reactant and product graphs to score and rank the candidate conditions.

- Result Clustering: The top-ranked conditions are then organized according to the pre-defined clusters (Protocol B), presenting the user with a diverse shortlist of condition options [37].
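The inference steps above can be tied together as follows. This is a minimal sketch under several assumptions. The template-condition library is a plain dictionary keyed by template string. Candidates are pooled across all of the query's matched templates (r1, r0, and r0*). The `score` function is a hypothetical stand-in for the trained GNN, and `cluster_of` is a precomputed mapping such as the one produced in Protocol B. Keeping only the best-scoring condition per cluster is one simple way to produce a diverse shortlist.

```python
from typing import Callable, Dict, List, Sequence, Set


def predict_conditions(query_templates: Sequence[str],
                       library: Dict[str, Set[str]],
                       score: Callable[[str], float],
                       cluster_of: Dict[str, int],
                       top_n: int = 3) -> List[str]:
    """Pool candidates from all matched templates, score them, keep the best per cluster."""
    candidates: Set[str] = set()
    for tpl in query_templates:                 # e.g. the query's r1, r0 and r0* templates
        candidates |= library.get(tpl, set())   # unmatched templates contribute nothing
    best_per_cluster: Dict[int, str] = {}
    for cond in sorted(candidates, key=score, reverse=True):
        best_per_cluster.setdefault(cluster_of[cond], cond)   # first seen = highest score
    return sorted(best_per_cluster.values(), key=score, reverse=True)[:top_n]


library = {"tplA_r1": {"cond_1", "cond_2"}, "tplA_r0": {"cond_2", "cond_3", "cond_4"}}
gnn_scores = {"cond_1": 0.9, "cond_2": 0.8, "cond_3": 0.6, "cond_4": 0.3}   # hypothetical model output
cluster_of = {"cond_1": 0, "cond_2": 0, "cond_3": 1, "cond_4": 2}
print(predict_conditions(["tplA_r1", "tplA_r0", "tplA_r0star"], library,
                         lambda c: gnn_scores[c], cluster_of))
# ['cond_1', 'cond_3', 'cond_4']
```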

The Scientist's Toolkit: Essential Research Reagents and Materials

The following table details key computational and data resources required to implement the Reacon framework.

Table 3: Key Research Reagents and Resources for Implementation

| Item Name | Function/Description | Specific Example/Source |
|---|---|---|
| USPTO Dataset | A large, open-access dataset of organic reactions extracted from U.S. patents, serving as the primary source of training data. | USPTO (1976-2016) |
| RDKit | An open-source cheminformatics toolkit used for molecule manipulation, descriptor calculation, and reaction processing. | RDKit (www.rdkit.org) |
| RDChiral | A specialized tool based on RDKit for applying and extracting reaction templates with stereochemical awareness. | RDChiral |
| D-MPNN | A graph neural network architecture designed for molecular property prediction, used here to learn from reactant and product graphs. | Directed Message Passing Neural Network [37] |