Transforming Organic Synthesis: A Comprehensive Guide to High-Throughput Experimentation Workflows

Abstract

High-Throughput Experimentation (HTE) has emerged as a transformative force in organic synthesis, drastically accelerating reaction discovery and optimization for researchers and drug development professionals. This article provides a comprehensive analysis of modern HTE workflows, from foundational principles of miniaturization and parallelization to advanced integration with flow chemistry and artificial intelligence. It explores practical applications across pharmaceutical and materials chemistry, addresses key challenges in reproducibility and data management, and validates the approach through comparative case studies. By synthesizing the latest methodologies and future-facing trends, this guide serves as an essential resource for scientists seeking to implement robust, data-driven HTE platforms to shorten development cycles and drive innovation in biomedical research.

HTE Foundations: Core Principles and Evolving Landscape in Modern Organic Chemistry

High-Throughput Experimentation (HTE) represents a paradigm shift in research methodology, fundamentally restructuring traditional scientific approaches through the integrated application of miniaturization, parallelization, and automation. This framework enables the rapid execution of hundreds to thousands of parallel experiments, dramatically accelerating the pace of discovery and optimization in fields ranging from organic synthesis to drug development [1] [2]. By generating vast, rich datasets, HTE provides the empirical foundation necessary for advanced data analysis and machine learning applications, creating a powerful, iterative cycle of experimentation and learning. This whitepaper examines the core technical principles of HTE and their role in constructing efficient workflows for modern scientific research.

High-Throughput Experimentation (HTE) is a sophisticated process of scientific exploration that leverages lab automation, effective experimental design, and rapid parallel or serial experiments to accelerate research and development [2]. In the specific context of organic synthesis and methodology development, HTE serves as a valuable tool for generating diverse compound libraries, optimizing reaction conditions, and collecting high-quality data for machine learning applications [1]. The power of HTE lies in its synergistic combination of three fundamental principles: miniaturization, parallelization, and automation. When effectively implemented, this approach produces a wealth of experimental data that forms the foundation for robust technical decisions, ultimately leading to more efficient and innovative scientific outcomes.

Core Fundamental Principles

Miniaturization

Miniaturization involves the systematic reduction in the physical scale at which experiments are performed. This is not merely a matter of convenience but a critical strategy for enhancing research efficiency and scope.

Miniaturization technologies are categorized by scale, each offering distinct advantages [3]:

- Mini-scale: Several millimeters or microliters (μL).

- Micro-scale: A few millimeters down to 50 micrometers, processing samples from a few μL to 10 nanoliters (nL).

- Nano-scale: Below 50 micrometers, with sample sizes below 10 nL, even reaching picoliter (pL) or femtoliter (fL) levels.

The primary benefit of miniaturization is the drastic reduction in the consumption of often costly and precious biological and chemical reagents and samples [3]. This reduction, in turn, enables higher throughput assays through massive parallelization and multiplex detection modes. Furthermore, miniaturization promotes the development of novel procedures with simpler handling protocols. The most common miniaturized platforms include microplates, microarrays, nanoarrays, and microfluidic (lab-on-a-chip) devices [3].

Table 1: Miniaturization Platforms in HTE

| Platform | Scale/Format | Key Characteristics | Common Applications |
|---|---|---|---|
| Microplates [3] | 96, 384, 1536 wells | Standardized format, amenable to automation, well-established instrumentation. | Primary screening, enzymatic assays, cell-based assays. |
| Microarrays [3] | Spots at densities of 1000s/cm² | High feature density, minimal reagent use. | Multiplexed screening of biomolecular interactions. |
| Nanoarrays [3] | Features at densities 10⁴ to 10⁵ times higher than microarrays | Ultra-high throughput, extremely low reagent consumption. | High-density screening, fundamental studies at single-molecule level. |
| Microfluidics (Lab-on-a-Chip) [3] | Microchannels (nanometers to hundreds of micrometers) | High performance, integration of multiple process steps, precise fluid control, low reagent consumption. | Complex assay automation, continuous-flow analysis, diagnostics. |
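
As a small illustration of how the microplate formats in Table 1 are addressed in software, the sketch below maps a running well index to a well label. It assumes the usual row-by-column layouts (8 × 12, 16 × 24, and 32 × 48) and a common labeling convention in which rows beyond Z continue as AA, AB, and so on. The function names are hypothetical and not taken from any vendor's software.

```python
import string

# Standard row x column layouts for common microplate formats.
PLATE_LAYOUTS = {96: (8, 12), 384: (16, 24), 1536: (32, 48)}


def row_label(row: int) -> str:
    """Convert a zero-based row index to a letter label (A..Z, then AA, AB, ...)."""
    letters = string.ascii_uppercase
    if row < 26:
        return letters[row]
    return letters[row // 26 - 1] + letters[row % 26]


def well_name(index: int, plate_size: int = 96) -> str:
    """Map a zero-based, row-major well index to a label such as 'A1' or 'H12'."""
    rows, cols = PLATE_LAYOUTS[plate_size]
    if not 0 <= index < rows * cols:
        raise ValueError(f"index {index} is outside a {plate_size}-well plate")
    row, col = divmod(index, cols)
    return f"{row_label(row)}{col + 1}"


print(well_name(0), well_name(95), well_name(383, 384), well_name(1535, 1536))
```

Keeping the layout in a single lookup table means the same dispensing or analysis script can be pointed at a denser plate without other changes.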

Parallelization

Parallelization is the simultaneous execution of multiple experimental reactions or assays. This principle is the direct engine of high throughput, allowing data acquisition to scale with the number of reactions run side by side rather than being limited to the pace of traditional sequential (one-at-a-time) experimentation.

In practice, parallelization is achieved through hardware designed to handle many reactions at once, including robotics, rigs, and reactors with multiple parallel vessels. In materials science and catalysis, for instance, HTE often relies on equipment with limited reactor parallelization (e.g., 4 to 16 reactors) that uses conditions allowing for easier scale-up, striking a balance between throughput and practical relevance [2]. The combinatorial approach, while powerful, can become intractable for some materials science applications, necessitating more selective experimental designs informed by techniques like active learning [2].

The core advantage of parallelization is the ability to test multiple hypotheses in parallel, which has produced an exponential increase in data generation [2]. This allows researchers to explore a broader experimental space—such as varying catalysts, ligands, solvents, and temperatures—in a single, coordinated campaign rather than over an extended period.

Automation

Automation replaces manual, repetitive tasks with electromechanical systems and sophisticated software, ensuring consistency, reproducibility, and operational efficiency throughout the HTE workflow. It is the critical enabling technology that makes large-scale, miniaturized, and parallelized experimentation feasible.

Automation in HTE encompasses both hardware and software components [2]:

- Hardware: Robotics, liquid handlers, solid dispensers, multichannel pipettors, and automated analytical instruments. Vendors such as Tecan, Hamilton, and Molecular Devices provide transformational hardware for biological and chemical HTE [2].

- Software: This includes computational methods for Design of Experiments (DOE), and informatics infrastructure for data capture and management. Effective automation requires an appropriate IT and informatics infrastructure to fully capture all data in a FAIR (Findable, Accessible, Interoperable, Reusable)-compliant fashion [2].

Automation enhances reproducibility by minimizing human error and variation. It also fuels efficiency, allowing laboratories to "accomplish more with less, and do it faster" [2]. Perhaps most importantly, by automating routine tasks, scientists are freed to focus on higher-value activities such as ideation, experimental design, and data interpretation.

The Integrated HTE Workflow for Organic Synthesis

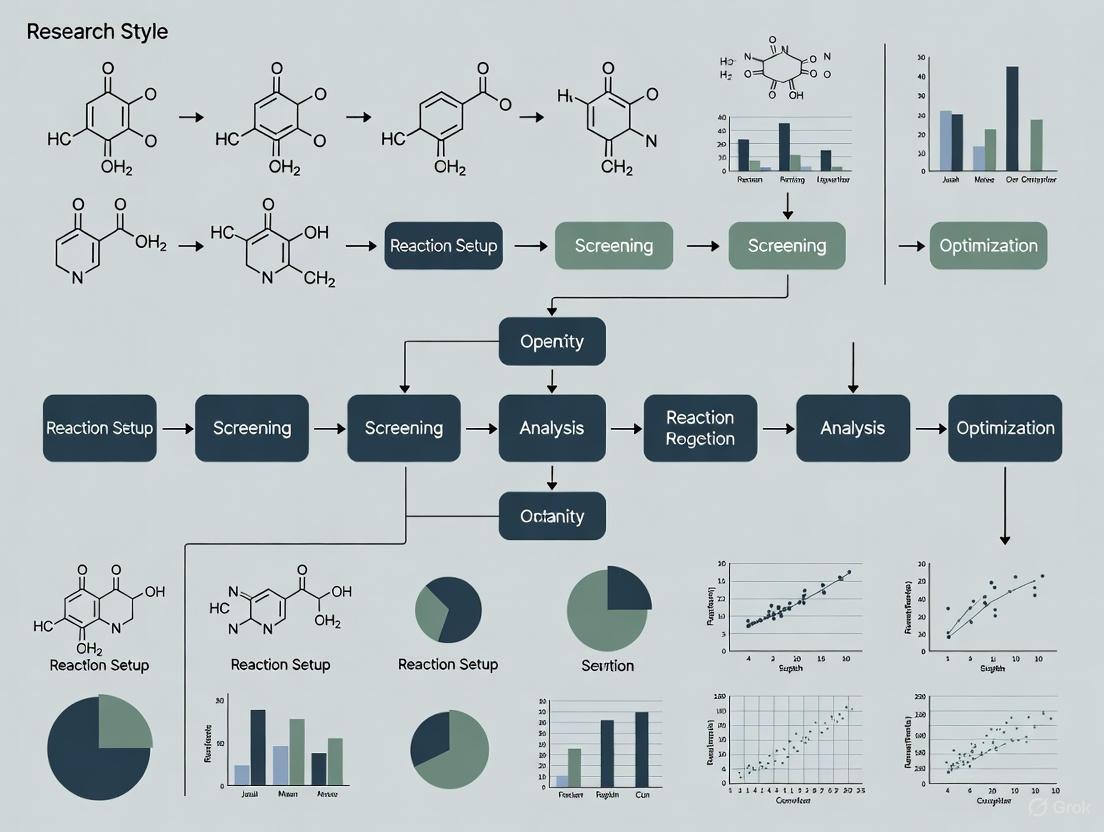

The fundamental principles of HTE converge into a cohesive, iterative workflow that transforms the research cycle in organic synthesis. The diagram below illustrates this integrated process.

Experimental Protocol: A Generalized HTE Workflow

This protocol provides a template for executing an HTE campaign, adaptable to specific research goals.

1. Hypothesis and Experimental Design (DOE):

- Define the scientific question and objective (e.g., optimizing reaction yield, discovering new catalysts).

- Employ Design of Experiments (DOE) principles to systematically select the set of conditions to be tested. This replaces inefficient one-variable-at-a-time (OVAT) approaches and allows for the efficient exploration of complex variable interactions [2].

- Identify the factors (e.g., temperature, concentration, solvent, catalyst) and their respective ranges.

2. Reaction Setup and Library Design:

- Prepare stock solutions of reagents, catalysts, and solvents.

- Utilize automated liquid handlers or dispensers to accurately transfer nanoliter to microliter volumes into reaction vessels (e.g., wells in a 384-well microplate) according to the DOE plan [3].

- This stage is critical for ensuring the accuracy and reproducibility of the entire experiment.

3. Miniaturized and Parallelized Execution:

- Reactions are run simultaneously in a parallelized format (e.g., in a microplate block or multiple microreactors) [2] [3].

- Conditions such as temperature and stirring are controlled uniformly across the platform.

4. Automated Reaction and Analysis:

- Upon completion, reaction mixtures are automatically quenched if necessary.

- Analysis is performed using high-throughput analytical methods, such as:

  - High-Throughput LC-MS/GC-MS: Configured with automated sample injection from microplates.

  - Plate-based Spectrophotometry: For colorimetric or fluorometric assays (common in enzymatic activity screening) [3].

- The output is raw analytical data (e.g., chromatograms, spectra) for each reaction vessel.

5. Data Capture and Management:

- All experimental data, including reaction parameters (inputs) and analytical results (outputs), are automatically captured and stored in a FAIR-compliant data environment [2].

- This typically involves the use of Electronic Lab Notebooks (ELNs) and Laboratory Information Management Systems (LIMS) to create a structured, queryable database [2]. Proper data management at this stage is essential for future analysis and machine learning.

6. Data Analysis, Visualization, and Insight Generation:

- Raw data is processed to calculate key performance metrics (e.g., conversion, yield, enantiomeric excess).

- Data visualization techniques are employed to identify patterns, trends, and outliers [4] [5] [6]. Effective visualization is crucial for interpreting the high-dimensional data generated by HTE.

- Results are analyzed to validate or refute the initial hypothesis, leading to new insights and the refinement of predictive models, thus informing the next cycle of experimentation.
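
The design and setup steps of this protocol can be sketched in a few lines of code. The example below enumerates a full-factorial design, which is only one of several DOE strategies (fractional or optimal designs reduce the run count further), and assigns each run to a well of a 12-column plate. The factor names and levels are hypothetical placeholders chosen for illustration.

```python
from itertools import product

# Hypothetical factors and levels, chosen only for illustration.
factors = {
    "catalyst": ["Pd-A", "Pd-B", "Pd-C"],
    "solvent": ["DMF", "THF", "MeCN", "toluene"],
    "temperature_C": [40, 60],
}


def full_factorial(factors):
    """Return one dict of conditions per combination of factor levels."""
    names = list(factors)
    return [dict(zip(names, levels)) for levels in product(*factors.values())]


def assign_wells(runs, n_cols=12):
    """Pair each run with a row-major well label on a plate with n_cols columns."""
    plan = []
    for i, run in enumerate(runs):
        row, col = divmod(i, n_cols)
        plan.append({"well": f"{chr(ord('A') + row)}{col + 1}", **run})
    return plan


runs = full_factorial(factors)
plan = assign_wells(runs)
print(len(plan), plan[0], plan[-1])
```

A plan in this form could serve as the worklist handed to a liquid handler and, later, as the input half of each data record.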

Data Management and Visualization in HTE

The massive datasets generated by HTE present both an opportunity and a challenge. Effective data management and visualization are not final steps but integral components of the HTE workflow.

The FAIR Data Principle

Managing HTE data requires an informatics infrastructure designed for volume and complexity. The core modern standard is the FAIR principle, which dictates that data must be Findable, Accessible, Interoperable, and Reusable [2]. Establishing a FAIR-compliant data environment dramatically reduces the effort spent on data wrangling and allows scientists to focus on ideation and design, leading to better experiments and outcomes. This often involves integrating Electronic Lab Notebooks (ELNs) and Laboratory Information Management Systems (LIMS) to manage the request, sample, experiment, test, analysis, and reporting workflows [2].
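
To make the idea of a structured, reusable record more concrete, the following sketch stores one well's inputs and outputs and serializes them to JSON. It is a minimal illustration only: genuine FAIR compliance also involves persistent identifiers, rich metadata, and access protocols that are beyond this example, and every field name here is an assumption rather than an established schema.

```python
import json
from dataclasses import dataclass, field, asdict


@dataclass
class ReactionRecord:
    """One well's inputs and outputs; field names are illustrative only."""
    experiment_id: str           # unique, stable identifier (Findable)
    well: str
    inputs: dict                 # reaction parameters, units in the key names
    outputs: dict = field(default_factory=dict)  # analytical results
    schema_version: str = "0.1"  # lets later readers interpret the record

    def to_json(self) -> str:
        # Plain JSON with sorted keys keeps records tool-agnostic (Interoperable).
        return json.dumps(asdict(self), sort_keys=True)


record = ReactionRecord(
    experiment_id="HTE-0001-A1",
    well="A1",
    inputs={"catalyst": "Pd-A", "solvent": "DMF", "temperature_C": 60},
    outputs={"yield_percent": 72.5},
)

# Round trip: the stored text is enough to rebuild the original record.
restored = ReactionRecord(**json.loads(record.to_json()))
print(restored == record)
```

Storing inputs and outputs together in one record is what later allows the dataset to be queried or used for model training without manual reassembly.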

Visualizing High-Dimensional HTE Data

Data visualization transforms complex numerical data into accessible visual narratives, which is essential for interpreting HTE results and communicating findings [4] [6]. The human brain processes visual information far more efficiently than raw numbers, making visualization key to identifying trends, correlations, and outliers [5] [6].

Best Practices for HTE Data Visualization:

- Know Your Audience and Message: Tailor the complexity and technical depth of the visualization to the expertise of the researchers or stakeholders [5] [7].

- Select the Right Chart Type: Choose visual encodings that match the data's structure and the insight you wish to convey [4] [5].

  - Bar Charts: Ideal for comparing quantitative results across different categorical conditions (e.g., yield across different catalysts) [4] [6].

  - Line Charts: Effective for displaying trends over a continuous variable like time or temperature [4] [6].

  - Scatter Plots: Essential for exploring correlations between two continuous variables (e.g., yield vs. catalyst loading) [4].

  - Heatmaps: Powerful for visualizing large matrices of data, such as reaction outcomes across two variables (e.g., solvent vs. ligand), using color intensity to represent values [4] [6].

- Use Color Strategically: Utilize color palettes that are appropriate for the data type (qualitative, sequential, or diverging) and ensure sufficient contrast for readability [5]. Avoid using color in a way that may mislead the viewer.

- Maintain Accuracy and Simplicity: Avoid "chartjunk"—unnecessary graphical elements that clutter the visualization. Ensure that scales are not distorted, which can misrepresent the underlying data [5] [6] [7].
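
The heatmap case mentioned above depends on first arranging results into a two-dimensional grid. The sketch below shows that pivot step using only the standard library. The solvent, ligand, and yield values are invented for illustration, and the resulting grid could then be passed to a plotting routine such as matplotlib's `imshow`.

```python
# Hypothetical (solvent, ligand, yield %) results from one plate.
results = [
    ("DMF", "L1", 12.0), ("DMF", "L2", 55.0), ("DMF", "L3", 31.0),
    ("THF", "L1", 8.0), ("THF", "L2", 78.0), ("THF", "L3", 40.0),
]


def pivot(results):
    """Arrange long-format results into a row-by-column grid for a heatmap."""
    rows = sorted({r for r, _, _ in results})
    cols = sorted({c for _, c, _ in results})
    lookup = {(r, c): v for r, c, v in results}
    # Missing combinations become None so that gaps stay visible.
    grid = [[lookup.get((r, c)) for c in cols] for r in rows]
    return rows, cols, grid


rows, cols, grid = pivot(results)
for name, values in zip(rows, grid):
    print(name, values)
```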

The entire data lifecycle within an HTE context, from capture to actionable insight, can be visualized as follows:

Implementing a successful HTE program requires a suite of specialized tools and reagents. The following table details key components of the HTE research toolkit.

Table 2: Essential Research Reagent Solutions and Materials for HTE

| Category | Item/Solution | Function in HTE Workflow |
|---|---|---|
| Automation Hardware | Liquid Handling Robots | Precisely dispenses nanoliter to microliter volumes of reagents and solvents into microplates or microreactors, enabling miniaturization and parallelization [2]. |
| Automation Hardware | Solid Dispensers | Accurately weighs and dispenses small, precise amounts of solid reagents (catalysts, bases, etc.) into reaction vessels [2]. |
| Automation Hardware | Microplate Readers | Performs high-speed spectrophotometric measurements (absorbance, fluorescence) for plate-based assays, often used in enzymatic activity screening [3]. |
| Reaction Platforms | Microplates (96 to 1536-well) | Standardized platforms for performing thousands of parallel chemical or biological reactions in a miniaturized format [3]. |
| Reaction Platforms | Microreactors / Lab-on-a-Chip | Devices with microchannels that allow for controlled fluidics, reaction execution, and sometimes integrated analysis, offering high integration and low reagent use [3]. |
| Informatics & Software | Electronic Lab Notebook (ELN) | Digitally captures experimental procedures, parameters, and observations, ensuring data is accessible and structured [2]. |
| Informatics & Software | Laboratory Information Management System (LIMS) | Tracks samples and manages workflow data, integrating with instrumentation to create a FAIR-compliant data environment [2]. |
| Informatics & Software | Design of Experiments (DOE) Software | Assists in designing efficient and effective experimental arrays to maximize information gain while minimizing the number of experiments [2]. |
| Analytical Tools | High-Throughput LC-MS/GC-MS | Provides rapid, automated chemical analysis for reaction mixture composition, yield, and purity determination across a large number of samples [2]. |

High-Throughput Experimentation is a transformative approach, fundamentally built upon the interdependent pillars of miniaturization, parallelization, and automation. This methodology enables an unprecedented rate of empirical data generation, which is critical for accelerating innovation in organic synthesis and drug discovery. The full potential of HTE is realized only when this data is managed within a FAIR-compliant informatics infrastructure and analyzed through effective visualization and data analysis techniques. As the field evolves, the integration of artificial intelligence and machine learning with HTE workflows promises to further refine experimental design, uncover deeper insights, and ultimately close the loop toward fully autonomous discovery, empowering researchers to solve increasingly complex scientific challenges [1] [2].

The Evolution from Traditional One-Variable-at-a-Time to Parallel Screening Approaches

The discovery and optimization of chemical reactions represent a fundamental challenge in organic synthesis, historically addressed through labor-intensive, time-consuming experimentation. Traditional approaches have been dominated by the one-variable-at-a-time (OVAT) methodology, where reaction variables are modified individually while keeping all other parameters constant to find optimal conditions for a specific reaction outcome [8]. This linear methodology, while straightforward, possesses significant limitations as it disregards the intricate interactions among competing variables within complex synthesis processes [8]. In recent years, a profound paradigm change in chemical reaction optimization has been enabled by concurrent advances in laboratory automation, artificial intelligence, and the strategic implementation of parallel screening approaches [8].

This evolution from sequential to parallel experimentation represents more than merely a technical improvement—it constitutes a fundamental restructuring of the scientific method as applied to chemical synthesis. The emerging methodology allows researchers to navigate high-dimensional parametric spaces efficiently, uncovering optimal conditions that balance multiple competing objectives such as yield, selectivity, purity, cost, and environmental impact [8]. Within the context of high-throughput experimentation (HTE) workflows for organic synthesis research, this transition has been particularly transformative, enabling the rapid exploration of chemical space that would be practically inaccessible through traditional OVAT approaches. The implementation of parallel screening has become especially crucial in drug discovery pipelines, where the efficient identification and optimization of lead compounds can significantly accelerate the development of new therapeutic agents [9].

Limitations of Traditional One-Variable-at-a-Time Approaches

The one-variable-at-a-time approach to reaction optimization, while methodologically straightforward, suffers from several critical limitations that become particularly pronounced when addressing complex chemical systems. The most significant drawback lies in its inability to detect interactions between variables. By examining factors in isolation, OVAT methodologies inherently miss synergistic or antagonistic effects between parameters such as temperature, concentration, catalyst loading, and solvent composition [8]. This limitation can lead researchers to identify local optima that fall far short of the global optimum for a given reaction system.

Furthermore, the OVAT approach is exceptionally resource-intensive, requiring substantial time, material, and human resources to systematically explore even moderately complex parameter spaces [8]. For a reaction with just five variables, each examined at only five levels, the OVAT methodology would require 25 experiments if no interactions are present—and far more if proper replication is included. This experimental burden creates practical constraints on how thoroughly chemical space can be explored, potentially causing researchers to miss superior reaction conditions that lie outside the narrowly defined search paths.
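
The 25-run figure follows from scanning five factors over five levels each, one factor at a time. For comparison, testing every combination of those levels would take 5^5 = 3,125 runs, so a single OVAT pass samples under 1% of the grid. The short calculation below makes this explicit. The function names are illustrative.

```python
def ovat_runs(n_factors: int, n_levels: int) -> int:
    """Runs when each factor is scanned over all its levels, one factor at a time."""
    return n_factors * n_levels


def full_factorial_runs(n_factors: int, n_levels: int) -> int:
    """Runs needed to test every combination of levels."""
    return n_levels ** n_factors


n_factors, n_levels = 5, 5
ovat = ovat_runs(n_factors, n_levels)
full_grid = full_factorial_runs(n_factors, n_levels)
coverage = ovat / full_grid

print(f"OVAT: {ovat} runs, full grid: {full_grid} runs, coverage: {coverage:.1%}")
```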

The sequential nature of OVAT experimentation also significantly prolongs optimization timelines, creating bottlenecks in research and development pipelines [8] [10]. Each experimental cycle requires completion, analysis, and planning before the subsequent variable can be addressed, resulting in protracted development cycles that delay process implementation and scale-up. This sequential constraint is particularly problematic in drug discovery, where accelerated timelines can have substantial implications for therapeutic development and patient access to new treatments [9].

The Rise of Parallel Screening Methodologies

Fundamental Principles and Definitions

Parallel screening represents a fundamentally different approach to experimental design and execution, characterized by the simultaneous evaluation of multiple experimental conditions rather than their sequential examination. In the context of chemical synthesis, HTE is defined as a technique that "leverages a combination of automation, parallelization of experiments, advanced analytics, and data processing methods to streamline repetitive experimental tasks, reduce manual intervention, and increase the rate of experimental execution in comparison to traditional manual experimentation" [8]. This methodology enables researchers to efficiently explore multidimensional parameter spaces by executing numerous experiments in parallel, dramatically reducing optimization timelines while providing a comprehensive view of variable interactions and their effects on reaction outcomes [8].

The conceptual framework for parallel screening extends beyond mere parallel execution of experiments to incorporate adaptive experimentation strategies, where results from initial experimental arrays inform the selection of subsequent conditions [11]. This creates an iterative, data-driven feedback loop that progressively refines understanding of the chemical system and converges more efficiently toward optimal conditions. The integration of machine learning algorithms further enhances this approach by predicting promising regions of chemical space to explore, effectively guiding the parallel screening process toward the most informative experiments [8].

Implementation Platforms and Technologies

The practical implementation of parallel screening methodologies has been enabled by specialized hardware and software systems designed specifically for high-throughput chemical experimentation. HTE platforms typically include liquid handling systems for reagent distribution, modular reactor systems capable of maintaining diverse reaction conditions, and integrated analytical tools for rapid product characterization [8]. These systems may operate in batch mode, where multiple discrete reactions proceed simultaneously in separate vessels, or in flow systems, where continuous processing enables additional dimensions of control and analysis [8].

Microtiter plates (MTP) have become foundational tools in parallel screening, with formats including 96, 384, 1536, or even 6144 individual wells enabling massive experimental parallelization [8] [9]. These platforms are particularly valuable for screening discrete compounds or catalysts across diverse reaction conditions, facilitating the rapid identification of "hits" that merit more detailed investigation [9]. Commercial HTE platforms such as the Chemspeed SWING robotic system exemplify this approach, offering integrated robotic systems with multiple dispense heads for precise delivery of reagents—including challenging materials like slurries—across dozens or hundreds of parallel reactions [8].

For even greater throughput, combinatorial approaches such as the "split and pool" methodology enable the synthesis and screening of extremely large compound libraries [9]. In this technique, solid supports or solutions are divided, subjected to different chemical transformations, then recombined in successive cycles, generating exponential diversity from linear experimental steps. When combined with DNA-encoding strategies—where library components are tagged with identifiable oligonucleotide sequences—combinatorial approaches enable the screening of libraries containing billions of distinct compounds, far beyond the practical limits of discrete parallel screening [9].
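
The way split-and-pool produces exponential diversity from a linear number of coupling reactions can be seen in a toy simulation. The sketch below is an idealized model, assuming perfectly even splitting and complete couplings, and tracks sequences rather than physical beads. The function name and building-block labels are hypothetical.

```python
def split_and_pool(building_blocks, cycles):
    """Simulate split-and-pool synthesis; return the library and coupling count."""
    pool = {()}  # start with a single empty sequence on the support
    couplings = 0
    for _ in range(cycles):
        next_pool = set()
        # Split: one portion per building block, each portion holding a
        # sample of every sequence made so far (idealized, even splitting).
        for block in building_blocks:
            couplings += 1  # one coupling reaction per portion
            next_pool.update(seq + (block,) for seq in pool)
        pool = next_pool  # Pool: recombine all portions
    return pool, couplings


library, couplings = split_and_pool(["A", "B", "C"], cycles=3)
print(len(library), "compounds from", couplings, "coupling reactions")
```

With three building blocks and three cycles, nine coupling reactions yield 27 distinct sequences. The library grows as a power of the cycle count while the work grows only linearly.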

Quantitative Comparison: Traditional vs. Parallel Approaches

The efficiency advantages of parallel screening become quantitatively apparent when examining the experimental requirements for exploring complex parameter spaces or synthesizing diverse compound libraries. The table below compares the requirements for synthesizing a library of one billion compounds using parallel versus combinatorial approaches:

Table 1: Comparison of Synthesis Methods for a One-Billion Compound Library

| Parameter | Parallel Synthesis | Combinatorial Synthesis |
|---|---|---|
| Coupling Steps Required | 3 billion | 3,000 |
| Time Requirement | ~2,000 years (with 2 runs/day) | Reasonable time frame |
| Cost Estimate | $0.4-2 million (for 1 million compounds) | ~$200,000 |
| Library Encoding | Not required | DNA encoding beneficial |

The extraordinary difference in experimental requirements (3 billion coupling steps for parallel synthesis versus only 3,000 for combinatorial synthesis) highlights why parallel and combinatorial approaches have become indispensable in modern chemical research, particularly in drug discovery [9]. Similar efficiency advantages extend to the screening process itself, where parallel high-throughput screening (HTS) methods utilizing microtiter plates with 96 to 6144 wells enable the evaluation of thousands to hundreds of thousands of compounds per day, compared to the impractical timelines that would be required for individual assessment of each compound in a billion-member library [9].
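
The coupling-step figures in the table can be reproduced with simple arithmetic. The source does not state how the library is built up, so the worked example below assumes c = 3 coupling cycles with b = 1,000 building blocks per cycle, one decomposition that is consistent with both numbers.

```latex
\begin{aligned}
N_{\text{library}} &= b^{c} = 1000^{3} = 10^{9} \\
N_{\text{parallel}} &= c \cdot b^{c} = 3 \times 10^{9} \\
N_{\text{combinatorial}} &= c \cdot b = 3 \times 1000 = 3000
\end{aligned}
```

Under this assumption, parallel synthesis performs all three couplings separately for every compound, whereas split-and-pool performs each building-block coupling once per cycle on a pooled portion.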

Practical Implementation: Workflows and Experimental Design

Integrated Parallel Screening Workflow

The implementation of an effective parallel screening strategy follows a systematic workflow that integrates experimental design, execution, analysis, and iterative optimization. This process creates a closed-loop system where data from each experimental cycle informs subsequent design decisions, progressively refining understanding of the chemical system and converging toward optimal conditions.

Diagram 1: Parallel Screening Workflow

This workflow begins with careful design of experiments (DOE) to define the parameter space to be explored, followed by parallel reaction execution using HTE platforms [8]. Data collection through in-line or offline analytical tools enables reaction characterization, with subsequent mapping of collected data points against target objectives. Machine learning algorithms then process this information to predict promising regions for further exploration, selecting the next set of reaction conditions to evaluate [8]. This iterative cycle continues until optimal conditions are identified, achieving the desired balance between multiple competing objectives such as yield, selectivity, and cost.
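
The iterative cycle described here can be sketched as a simple loop. In the example below, a made-up response surface stands in for real plate runs, and a crude "sample near the current best, then narrow the search" heuristic stands in for the machine learning model. Neither reflects the methods of the cited work, and all names, ranges, and constants are assumptions for illustration.

```python
import random


def simulated_yield(temp_c, loading_mol_pct):
    """Stand-in for a real plate run: a made-up smooth response surface."""
    return max(0.0, 90 - 0.02 * (temp_c - 80) ** 2 - 4 * (loading_mol_pct - 5) ** 2)


def propose_batch(center, radius, size, rng):
    """Pick the next batch near the current best (a crude stand-in for an ML model)."""
    t0, l0 = center
    return [
        (min(120.0, max(20.0, t0 + rng.uniform(-radius, radius) * 50)),
         min(10.0, max(0.5, l0 + rng.uniform(-radius, radius) * 5)))
        for _ in range(size)
    ]


def closed_loop(rounds=5, batch_size=24, seed=0):
    rng = random.Random(seed)
    best_point, best_yield = (70.0, 5.25), float("-inf")  # arbitrary starting center
    history = []
    radius = 1.0
    for _ in range(rounds):
        batch = propose_batch(best_point, radius, batch_size, rng)  # design
        results = [(simulated_yield(*p), p) for p in batch]         # execute and analyze
        top_yield, top_point = max(results)
        if top_yield > best_yield:                                  # learn
            best_yield, best_point = top_yield, top_point
        history.append(best_yield)
        radius *= 0.6                                               # narrow the search
    return best_point, best_yield, history


best_point, best_yield, history = closed_loop()
print(best_point, round(best_yield, 1), [round(h, 1) for h in history])
```

Each round evaluates a whole batch at once, mirroring a plate of parallel reactions, and only the selection step between rounds is sequential.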

Two-Tiered Screening and Optimization Strategy

For comprehensive reaction development, a two-tiered strategy implementing "breadth-first" screening followed by "depth-second" optimization has proven highly effective [11]. This approach first identifies promising candidate compounds or conditions through broad parallel screening, then subjects these hits to more focused optimization using adaptive experimentation methods.

Table 2: Two-Tiered Screening and Optimization Methodology

| Stage | Objective | Methods | Key Features |
|---|---|---|---|
| Tier 1: Broad Screening | Identify initial "hit" compounds/catalysts | Parallel screening of discrete candidates | Examines each candidate over concentration range; Implements early termination for hits |
| Tier 2: Focused Optimization | Refine conditions for each hit | Multidirectional Search (MDS) algorithms | Adaptive experimentation; Defines continuous search space; Navigates to local optimum |

In the first tier, the Parascreen module examines discrete candidates in parallel over a range of concentrations, implementing decision-making at two levels: (1) local evaluation, where yield-versus-time data are examined using pattern-matching to determine whether monitoring should continue or terminate, and (2) global evaluation, where candidates reaching user-defined thresholds are flagged as hits, with subsequent experiments at higher concentrations of the same candidate being deleted to conserve resources [11]. This approach efficiently prunes unproductive experimental pathways while focusing resources on the most promising candidates.
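
The two decision levels can be illustrated with a short sketch. This is not the Parascreen implementation. It substitutes a simple plateau test for the pattern-matching step and a made-up linear response for real experiments, and all names and thresholds are hypothetical.

```python
def should_stop_monitoring(yields, window=3, tolerance=1.0):
    """Local check: stop once the last `window` readings have plateaued."""
    if len(yields) < window:
        return False
    recent = yields[-window:]
    return max(recent) - min(recent) <= tolerance


def screen(candidates, concentrations, run_experiment, hit_threshold):
    """Global check: flag hits and skip higher concentrations of a hit."""
    hits, executed = {}, []
    for conc in sorted(concentrations):
        for cand in candidates:
            if cand in hits:
                continue  # remaining experiments for this candidate are deleted
            final_yield = run_experiment(cand, conc)
            executed.append((cand, conc))
            if final_yield >= hit_threshold:
                hits[cand] = conc
    return hits, executed


# Hypothetical response: yield rises with concentration, scaled per candidate.
activity = {"cat-1": 0.9, "cat-2": 0.2, "cat-3": 0.5}


def fake_run(cand, conc):
    return min(100.0, activity[cand] * conc * 10)


hits, executed = screen(list(activity), [1, 5, 10], fake_run, hit_threshold=40.0)
print(hits, len(executed))
```

In this toy run, eight of the nine planned experiments are executed, because the highest concentration of the first hit is never attempted.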

In the second tier, each hit compound undergoes refined condition optimization using multidirectional search (MDS) algorithms, which perform parallel adaptive experimentation within a defined continuous search space [11]. This methodology efficiently navigates complex parameter landscapes to identify local optima for each promising candidate identified in the initial screening phase.
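
For readers unfamiliar with multidirectional search, the sketch below gives a generic textbook-style version of the method. It is not the implementation used in [11]. Each iteration reflects all non-best vertices of a simplex through the best vertex, then either expands or contracts, and every trial point within a step is independent, which is what makes the method suited to parallel experiments. The quadratic objective stands in for a measured yield and is an assumption.

```python
def mds_maximize(f, simplex, iterations=50, expand=2.0, contract=0.5):
    """Multidirectional search on a simplex of n+1 points (lists of floats)."""
    simplex = sorted(simplex, key=f, reverse=True)  # best vertex first
    for _ in range(iterations):
        best = simplex[0]
        best_val = f(best)

        def move(scale):
            # New vertices best + scale * (v - best) for each non-best vertex v.
            return [[b + scale * (x - b) for b, x in zip(best, v)]
                    for v in simplex[1:]]

        reflected = move(-1.0)  # mirror the simplex through the best vertex
        if max(map(f, reflected)) > best_val:
            expanded = move(-expand)  # try a larger step in the same directions
            if max(map(f, expanded)) > max(map(f, reflected)):
                trial = expanded
            else:
                trial = reflected
        else:
            trial = move(contract)  # shrink toward the best vertex
        simplex = sorted([best] + trial, key=f, reverse=True)
    return simplex[0], f(simplex[0])


def toy_objective(p):
    """Made-up smooth response with its maximum at (3, -1)."""
    x, y = p
    return -(x - 3) ** 2 - (y + 1) ** 2


best, value = mds_maximize(toy_objective, [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0]])
print(best, value)
```

In a laboratory setting, each call to the objective would correspond to one reaction, and the reflected, expanded, or contracted vertices of a step would be run together as a batch.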

The Scientist's Toolkit: Essential Research Reagent Solutions

Successful implementation of parallel screening methodologies requires specialized materials and equipment designed specifically for high-throughput experimentation. The table below details key research reagent solutions and their functions within HTE workflows:

Table 3: Essential Research Reagent Solutions for Parallel Screening

| Tool/Reagent | Function | Application Notes |
|---|---|---|
| Microtiter Plates | Parallel reaction vessels | 96, 384, 1536 wells; Compatible with automated liquid handling |
| Multipin Apparatus | Parallel synthesis on solid support | Peptide/epitope synthesis; Screening without cleavage from pins |
| DNA-Encoding Oligomers | Library component identification | Tags building blocks for sequence-based decoding |
| Automated Synthesizers | High-throughput compound production | Systems like Vantage (96-384 compounds in parallel) |
| Liquid Handling Robots | Precise reagent distribution | Nanoliter to microliter volumes; 96/384-channel heads |

These specialized tools enable the practical execution of parallel screening campaigns, with each component addressing specific challenges associated with miniaturization, parallelization, or analysis throughput. The selection of appropriate tools depends on specific research objectives, with considerations including reaction scale, analytical requirements, and the degree of automation integration desired [8] [9].

Case Studies and Applications in Organic Synthesis

Reaction Optimization in Pharmaceutical Development

Parallel screening methodologies have demonstrated particular utility in optimizing key transformations relevant to pharmaceutical development, including Suzuki–Miyaura couplings, Buchwald–Hartwig aminations, and other transition-metal-catalyzed processes that are highly sensitive to multiple reaction parameters [8]. In one implementation, a Chemspeed SWING robotic system equipped with two fluoropolymer-sealed 96-well metal blocks enabled the exploration of stereoselective Suzuki–Miyaura couplings, providing precise control over both categorical and continuous variables [8]. The integrated robotic system with a four-needle dispense head facilitated low-volume reagent delivery, including challenging slurry formulations, while parallelization of experimental workflows enabled completion of 192 reactions within 24 hours—a throughput rate practically unattainable through traditional OVAT approaches.

Similar parallel screening approaches have been successfully applied to diverse reaction classes including N-alkylations, hydroxylations, and photochemical reactions, demonstrating the broad applicability of HTE methodologies across diverse synthetic challenges [8]. In each case, the ability to simultaneously vary multiple parameters—including catalyst identity and loading, ligand structure, base strength, solvent composition, and temperature—enabled rapid identification of optimal conditions that might have been missed through sequential optimization approaches.

Catalyst Screening and Discovery

The search for novel catalysts represents another area where parallel screening methodologies have demonstrated significant advantages. In one approach, a mobile robot equipped with sample-handling arms was developed to execute photocatalytic reactions for water cleavage to produce hydrogen [8]. This system functioned as a direct replacement for human experimenters, executing tasks and linking eight separate experimental stations including solid and liquid dispensing, sonication, characterization equipment, and sample storage. Through a comprehensive ten-dimensional parameter search spanning eight days, the robotic system identified conditions achieving an impressive hydrogen evolution rate of approximately 21.05 µmol·h⁻¹ [8]. This systematic exploration of a complex parameter space would have been prohibitively time-consuming using traditional OVAT approaches, demonstrating how parallel screening can accelerate discovery in catalyst development.

The evolution from traditional one-variable-at-a-time to parallel screening approaches represents a fundamental transformation in how chemical research is conducted, enabling efficient exploration of complex parameter spaces that were previously inaccessible in practice. This paradigm shift has been driven by advances in automation technologies, machine learning algorithms, and data analysis methods that collectively support the design, execution, and interpretation of parallel experimentation campaigns [8]. The resulting acceleration in reaction optimization and compound screening has been particularly transformative in drug discovery, where parallel and combinatorial methods have become essential for generating the diverse compound libraries needed to identify novel therapeutic agents [9].

Future developments in parallel screening will likely focus on increasing integration and adaptability across experimental platforms, enhancing the sophistication of decision-making algorithms, and improving data management practices for greater accessibility and shareability [8] [12]. The ongoing development of "self-driving" laboratories capable of autonomous experimentation with minimal human intervention represents the logical extension of current parallel screening methodologies, potentially further accelerating the pace of discovery in organic synthesis and related fields [8] [11].

As these technologies continue to evolve, parallel screening approaches will become increasingly central to chemical research, enabling more efficient exploration of chemical space while managing the complexity of multi-parameter optimization. This methodological evolution from sequential to parallel experimentation represents not merely a technical improvement, but a fundamental advancement in how we approach scientific discovery in chemistry, with implications for fields ranging from pharmaceutical development to materials science and beyond.

High-Throughput Experimentation (HTE) has emerged as a transformative approach in organic synthesis, radically accelerating reaction discovery, optimization, and the generation of robust chemical data. This technical guide details the core components of HTE workflows, framed within the context of modern organic synthesis research for drug development and materials science. By integrating advanced automation, data-rich experimentation, and intelligent analysis, HTE provides a structured framework to navigate complex chemical spaces efficiently.

Reaction Design and Workflow Planning

The foundation of a successful HTE campaign lies in meticulous reaction design and strategic workflow planning. This initial phase moves beyond traditional one-variable-at-a-time (OVAT) approaches to enable the concurrent exploration of a high-dimensional parameter space.

Strategic Experiment Design

Reaction design in HTE involves defining the objectives and rationally selecting which variables to screen. Key considerations include:

- Objective Definition: Clearly define the campaign's goal, whether it is reaction discovery, substrate scope exploration, or optimization of reaction conditions for yield, selectivity, or robustness [13].

- Variable Selection: A typical screen investigates multiple categorical variables simultaneously. For example, a catalytic reaction might screen arrays of catalysts, ligands, bases, and solvents to find optimal combinations [1] [13].

- Bias Mitigation and Serendipity: By testing a wide range of conditions, including those that might be counter-intuitive, HTE helps reduce human bias and promotes serendipitous discoveries [1].

- Inclusion of Negative Data: Deliberately incorporating potentially negative reactions based on expert rules (e.g., known steric hindrance effects) is crucial for generating datasets that are valuable for machine learning and understanding reaction boundaries [14].

Miniaturization and Parallelization

A core principle of HTE is the miniaturization and parallelization of reactions.

- Reaction Scale: HTE is conducted at dramatically reduced scales, typically in the microliter (96- or 384-well plates) to nanoliter (1536-well plates) range [13]. This enables significant reduction in material consumption, cost, and waste.

- Platforms: Reactions are performed in parallel arrays, most commonly in 96-well plates [13] or other multi-well formats. An emerging, ultra-high-throughput alternative uses DNA-encoded libraries, allowing for hundreds of thousands of reactions to be performed and analyzed simultaneously [15].

- Complementary Technologies: Flow chemistry serves as a powerful complementary tool to batch-based HTE, especially for reactions involving hazardous reagents, requiring pressurized conditions, or benefiting from superior heat and mass transfer [16].

The designed workflow must be carefully planned, including the layout of reaction plates and the integration of subsequent execution and analysis steps [13].
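As a rough illustration of the plate-layout step, the sketch below enumerates every combination of a few categorical variables and assigns each one to a well of an 8x12 plate. The function name `plate_layout`, the factor names, and the placeholder reagent labels are all hypothetical; a real campaign would typically use dedicated design software and may not use a full-factorial layout.

```python
from itertools import product
from string import ascii_uppercase


def plate_layout(factors, rows=8, cols=12):
    """Map every combination of categorical factors to a well label (A1..H12).

    `factors` is a dict of factor name -> list of levels. Combinations are
    filled row by row; this ordering is an illustrative choice only.
    """
    names = list(factors)
    combos = list(product(*(factors[name] for name in names)))
    if len(combos) > rows * cols:
        raise ValueError(f"{len(combos)} conditions exceed {rows * cols} wells")
    layout = {}
    for index, combo in enumerate(combos):
        row, col = divmod(index, cols)
        well = f"{ascii_uppercase[row]}{col + 1}"
        layout[well] = dict(zip(names, combo))
    return layout


# Hypothetical screen: 4 catalysts x 4 ligands x 3 bases x 2 solvents = 96 wells.
screen = {
    "catalyst": ["cat1", "cat2", "cat3", "cat4"],
    "ligand": ["L1", "L2", "L3", "L4"],
    "base": ["B1", "B2", "B3"],
    "solvent": ["S1", "S2"],
}
layout = plate_layout(screen)
```

Keeping the layout as a plain well-to-conditions mapping makes it easy to hand the same record to both the dispensing step and the later analysis step.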

Reaction Execution and Automation Systems

The physical execution of HTE campaigns relies on integrated systems that ensure precision, reproducibility, and efficiency. Automation is a key enabler, handling tasks that are repetitive, prone to human error, or require operation in controlled environments.

Core Hardware Components

A modern HTE platform combines several automated modules:

- Solid Dosing Systems: Automated powder dispensing is critical for accuracy and efficiency. Systems like the CHRONECT XPR can dose a wide range of solids (from sub-milligram to several grams) with high precision (<10% deviation at low masses, <1% at >50 mg) and significantly reduce weighing time compared to manual operations [17].

- Liquid Handling Robots: These systems automate the dispensing of solvents, liquid reagents, and catalysts, ensuring consistent volumes across hundreds of reactions [13] [17].

- Reaction Environment Control: Experiments are often conducted within inert atmosphere gloveboxes to exclude moisture and oxygen, which is crucial for air- and moisture-sensitive chemistry [13] [17]. Agitation is provided by tumble stirrers or other systems to ensure proper mixing in small volumes [13].

- Reactor Blocks: Temperature-controlled blocks hold 96-well plates or vials, allowing parallel reactions to be run under defined thermal conditions [13].
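The dosing tolerances quoted above for solids can be turned into a simple acceptance check. The sketch below is a minimal example under one stated assumption: the text gives <1% deviation above 50 mg and <10% "at low masses", so the code treats every target at or below 50 mg as a low mass. The function name and the threshold parameter are hypothetical and not part of any vendor software.

```python
def within_dosing_tolerance(target_mg, actual_mg, threshold_mg=50.0):
    """Return True if a dispensed mass is within the assumed tolerance band.

    Assumption: targets above `threshold_mg` must deviate by < 1 %,
    all other targets by < 10 % (relative to the target mass).
    """
    if target_mg <= 0:
        raise ValueError("target mass must be positive")
    limit = 0.01 if target_mg > threshold_mg else 0.10
    deviation = abs(actual_mg - target_mg) / target_mg
    return deviation < limit


low_mass_ok = within_dosing_tolerance(2.0, 2.15)        # 7.5 % off a 2 mg target
high_mass_fail = within_dosing_tolerance(100.0, 102.0)  # 2 % off a 100 mg target
high_mass_ok = within_dosing_tolerance(100.0, 100.5)    # 0.5 % off a 100 mg target
```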

Implementation and Impact

The integration of these components into a cohesive workflow is demonstrated by industrial and academic implementations:

- AstraZeneca's HTE Evolution: Over 20 years, AstraZeneca implemented global HTE capabilities, co-locating specialists with medicinal chemists to foster a cooperative model. This led to a dramatic increase in screening capacity, from ~20-30 screens per quarter to ~50-85, and the number of conditions evaluated surged from under 500 to about 2000 per quarter [17].

- Automated Chemical Synthesis Platform (AutoCSP): One study established an AutoCSP that integrated dispensing, transfer, reaction, and analysis functions. The platform successfully screened 432 organic reactions for the anticancer drug Sonidegib, demonstrating results comparable to manual operation but with superior consistency, providing high-quality data for AI analysis [18].

Table 1: Key Automation Hardware in HTE Workflows

| Component | Example System/Model | Key Function | Performance Specifications |

|---|---|---|---|

| Solid Dosing | CHRONECT XPR [17] | Automated powder dispensing | Range: 1 mg - several grams; Dosing time: 10-60 sec/component |

| Liquid Handling | Various liquid handlers [17] | Dispensing solvents & liquid reagents | Handles 96-well plate formats; precise µL-scale dispensing |

| Reaction Agitation | Tumble Stirrer (e.g., VP 711D-1) [13] | Homogeneous mixing in small vials | Consistent stirring in parallel format |

| Reactor System | Paradox reactor [13] | Parallel execution of reactions | Holds 96x 1mL vials with temperature control |

Analysis, Data Management, and Machine Learning

The value of an HTE campaign is fully realized only through robust analysis, effective data management, and the application of machine learning to extract predictive insights from the large datasets generated.

High-Throughput Analysis Techniques

Rapid, automated analysis is essential for evaluating the outcome of hundreds to thousands of experiments.

- Liquid Chromatography-Mass Spectrometry (LC-MS/UPLC-MS): This is the workhorse analytical technique for HTE. Platforms like the Waters Acquity UPLC are standard, allowing for the rapid determination of conversion, yield, and identification of byproducts [13] [14].

- Analysis Workflow: At the end of the reaction, samples are typically diluted automatically, often with a solution containing an internal standard (e.g., biphenyl) for semi-quantitative analysis. Aliquots are then transferred to an analysis plate for injection into the LC-MS [13].

- Alternative Ultra-High-Throughput Analysis: For DNA-encoded libraries, reaction outcomes are analyzed not by chromatography but by high-throughput DNA sequencing, which can decode the results of hundreds of thousands of reactions in a single run [15].
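The internal-standard step in the LC-MS workflow above amounts to dividing each product peak area by the standard's peak area in the same well, which gives a relative (not absolute) measure for comparing wells. The sketch below shows that arithmetic; the function names and the peak areas are made up for illustration, and no response-factor calibration is applied.

```python
def relative_response(product_auc, standard_auc):
    """Ratio of product peak area to internal-standard peak area."""
    if standard_auc <= 0:
        raise ValueError("internal standard peak area must be positive")
    return product_auc / standard_auc


def rank_wells(aucs):
    """Sort wells by product/standard AUC ratio, best first.

    `aucs` maps a well label to (product_auc, standard_auc).
    """
    ratios = {well: relative_response(p, s) for well, (p, s) in aucs.items()}
    return sorted(ratios.items(), key=lambda item: item[1], reverse=True)


# Hypothetical peak areas for three wells.
example_aucs = {
    "A1": (1200.0, 1000.0),
    "A2": (300.0, 1000.0),
    "A3": (900.0, 500.0),
}
ranking = rank_wells(example_aucs)
```

Normalizing by the standard compensates for well-to-well differences in dilution and injection volume, which is why A3 ranks above A1 here despite its smaller raw product area.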

Data Management and Machine Learning

The data generated by HTE is a valuable asset for building predictive models.

- Datasets for AI: Large, self-generated HTE datasets are used to train machine learning models. For instance, one study created a dataset of 11,669 distinct acid-amine coupling reactions to train a Bayesian neural network model, which achieved 89.48% accuracy in predicting reaction feasibility [14].

- Uncertainty and Robustness: Advanced ML models can disentangle uncertainty, identifying whether a reaction is likely to fail due to its intrinsic chemistry or due to sensitivity to subtle environmental variations. This allows for the prediction of reaction robustness, a critical factor for scaling up industrial processes [14].

- Active Learning: ML models can guide subsequent experimental design. By analyzing prediction uncertainty, an active learning strategy can be implemented, which has been shown to reduce data requirements by up to 80% for effective model training [14].
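One common way to act on prediction uncertainty is uncertainty sampling: run next the candidate reactions the model is least sure about. The sketch below is a simplified stand-in, not the method of the cited study. It scores each candidate by the binary entropy of a predicted feasibility probability, whereas a Bayesian neural network would supply richer uncertainty estimates. The reaction identifiers and probabilities are invented.

```python
import math


def binary_entropy(p):
    """Entropy (in bits) of a feasible/infeasible prediction with probability p."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -(p * math.log2(p) + (1.0 - p) * math.log2(1.0 - p))


def select_most_uncertain(predicted, batch_size):
    """Return the `batch_size` reaction IDs with the highest predictive entropy.

    `predicted` maps a reaction ID to its predicted feasibility probability.
    """
    ranked = sorted(predicted, key=lambda rxn: binary_entropy(predicted[rxn]), reverse=True)
    return ranked[:batch_size]


# Hypothetical model outputs for four untested reactions.
predictions = {"rxn_a": 0.97, "rxn_b": 0.52, "rxn_c": 0.10, "rxn_d": 0.45}
next_batch = select_most_uncertain(predictions, batch_size=2)
```

Reactions the model already predicts confidently (such as `rxn_a`) are skipped, which is the intuition behind the reduced data requirement.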

Table 2: Core Analytical and Data Management Techniques in HTE

| Technique | Primary Function | Throughput & Scale | Key Outcome Measures |

|---|---|---|---|

| LC-MS/UPLC-MS [13] [14] | Quantitative reaction analysis | 96/384-well plates; ~minutes/sample | Conversion, yield (via AUC), byproduct formation |

| DNA Sequencing [15] | Analysis of DNA-encoded libraries | 504,000+ reactions in a single run | Relative reaction efficiency for vast condition matrices |

| Bayesian Deep Learning [14] | Predict feasibility & robustness | Trained on 10,000+ reactions | Feasibility accuracy, robustness estimation, uncertainty quantification |

| Random Forest Algorithm [18] | Data quality control & anomaly detection | Accurate anomaly identification (98.3%) | Identifies experimental outliers, ensures dataset integrity |

The Scientist's Toolkit: Essential Research Reagents and Materials

Successful HTE relies on a suite of specialized reagents, materials, and software tools that form the basic toolkit for researchers.

Table 3: Essential Research Reagent Solutions and Materials for HTE

| Item | Function/Description | Example/Specification |

|---|---|---|

| 96-Well Plate Reactor | Platform for running parallel reactions in microliter volumes. | 1 mL vials in an 8x12 array (e.g., Paradox reactor) [13]. |

| Condensation Reagents | Facilitate amide bond formation, a critical reaction in medicinal chemistry. | A set of 6 different reagents screened to find the optimal one for a given acid-amine pair [14]. |

| Photoredox Catalysts | Enable photochemical reactions by absorbing light and engaging in single-electron transfer. | A library of 24 catalysts screened for a fluorodecarboxylation reaction [16]. |

| Transition Metal Catalysts & Ligands | Core components for cross-coupling and other catalytic reactions. | Screened in arrays to identify active catalytic systems [13] [17]. |

| Internal Standard (e.g., Biphenyl) | Added post-reaction for semi-quantitative analysis by LC-MS. | Allows for calculation of relative yield/conversion based on Area Under the Curve (AUC) ratios [13]. |

| HTE Design Software | Software for designing the experiment layout and tracking variables. | In-house software (e.g., HTDesign) or commercial packages for planning reaction matrices [13]. |


Addressing Reproducibility Challenges Through Standardized Protocols and Automated Platforms

Reproducibility forms the cornerstone of the scientific method, yet synthetic chemistry faces significant challenges in this domain. Irreproducible synthetic methods consume substantial time, financial resources, and research momentum across both academic and industrial laboratories [19]. The manifestations of irreproducibility are varied, including fluctuations in reaction yields, inconsistent selectivity in organic transformations, and unpredictable performance of newly developed catalytic materials [19]. In materials chemistry, this problem is particularly acute, with quantitative analyses revealing that a substantial fraction of newly reported materials are synthesized only once, providing no opportunity to assess the reproducibility of their properties [20]. Within the specific context of metal-organic frameworks (MOFs) research, for example, significant variations in synthetic and characterization practices hinder comparative analysis and replication efforts [21]. The fundamental thesis of this whitepaper is that addressing these challenges requires a dual approach: implementing standardized reporting protocols and leveraging automated high-throughput experimentation (HTE) platforms, which together can transform reproducibility in organic synthesis research.

Quantitative Assessment of Reproducibility Challenges

A systematic analysis of synthesis reproducibility in materials chemistry reveals distinct patterns. Literature meta-analysis of metal-organic frameworks (MOFs) indicates that the frequency of repeat syntheses follows a power-law distribution, where the fraction θ(n) of materials synthesized exactly n times is modeled as θ(n) = fn^(-α), with f representing the fraction of materials synthesized only once [20]. This mathematical framework helps quantify the reproducibility challenge and identifies a small subset of "supermaterials" that are replicated far more frequently than the model predicts [20].
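To make the power-law expression concrete, the sketch below evaluates θ(n) = f·n^(−α) for a few values of n. Note that θ(1) = f by construction, which is why f can be read as the fraction of materials synthesized only once. The value f = 0.5 matches the figure reported for MOFs, but α = 2.0 is a placeholder chosen purely for illustration; the exponent is not stated in this text.

```python
def theta(n, f, alpha):
    """Fraction of materials synthesized exactly n times under theta(n) = f * n**(-alpha)."""
    if n < 1:
        raise ValueError("n must be a positive integer")
    return f * n ** (-alpha)


F_ONCE = 0.5          # fraction synthesized only once (reported for MOFs)
ALPHA_ASSUMED = 2.0   # hypothetical exponent, not taken from the source

fractions = {n: theta(n, F_ONCE, ALPHA_ASSUMED) for n in (1, 2, 4)}
```

With these inputs the predicted fraction falls from 0.5 at n = 1 to 0.125 at n = 2 and about 0.03 at n = 4, showing how quickly repeat syntheses become rare under such a model.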

Table 1: Quantitative Analysis of Synthesis Reproducibility for Metal-Organic Frameworks (MOFs)

| Metric | Finding | Implication |

|---|---|---|

| Power-law parameter (f) | ~0.5 (50% of MOFs synthesized only once) [20] | Half of reported materials lack independent verification |

| Most replicated MOFs | Small number of "supermaterials" exceed predicted replication [20] | Highlights materials with exceptional reproducibility |

| UiO-66 synthesis variations | 10 different protocols across 10 publications [21] | Standardization lacking even for well-studied materials |

| Reaction concentration variance | 8-169 mmol L⁻¹ for UiO-66 syntheses [21] | Critical parameters vary widely between laboratories |

| BET surface area variance | 716-1456 m² g⁻¹ for UiO-66 [21] | Material properties differ significantly between batches |

The observed variations in synthetic protocols for even well-established materials like UiO-66 demonstrate the standardization deficit in the field. Across ten recent publications examining UiO-66 for biomedical applications, researchers employed different reaction stoichiometries (varying from 1:1 to 1:4.5 metal-to-ligand ratios), different modulators (acetic acid, HCl, formic acid, benzoic acid, or none), and significantly different reaction concentrations [21]. These synthetic discrepancies naturally lead to variations in key material properties such as particle size, porosity, and defectivity, which subsequently influence experimental outcomes and application performance [21].

Standardized Reporting Protocols for Enhanced Reproducibility

Comprehensive Procedural Documentation

Standardized reporting represents the foundational element of reproducible synthesis. Leading journals and organizations have established detailed guidelines to ensure critical experimental parameters are documented. Organic Syntheses, for instance, requires procedures with significantly more detail than typical journals, including explicit specifications for reaction setup—describing the size and type of flask, how every neck is equipped, drying procedures, and atmosphere maintenance methods [22]. Specific requirements include avoiding balloons for inert atmospheres in most cases, defining room temperature and vacuum pressures explicitly, and providing justification when specialized equipment like gloveboxes is employed [22]. Furthermore, they mandate that authors identify the most expensive reagent or starting material and estimate its cost per run, with a general threshold of $500 for any single reactant in a full-scale procedure [22].

Reagent and Characterization Standards

Complete documentation of reagent sources and purification methods is essential for reproducibility. Authors must indicate the purity or grade of each reagent, its commercial source (particularly for chemicals where trace impurities may vary between suppliers), and detailed descriptions of any purification, drying, or activation procedures [22]. For characterization, sufficient data must be provided to support claims and confirm the identity and purity of molecules and materials produced [19]. This includes peak listings, solvent information, and spectra for spectroscopic analyses, with raw data files ideally provided in non-proprietary formats to enable comparison [19]. When quantitative NMR is employed for purity determination, the internal standard must be identified and calculation-printed spectra included [22].

Data Accessibility and Code Sharing

Enhancing reproducibility requires making underlying data and analysis methods accessible. Nature Synthesis, for example, mandates data availability statements describing how research data can be accessed and encourages deposition in repositories rather than "available on request" status [19]. When unpublished code or software central to the work is developed, authors must adhere to specific code and software guidelines, release the associated version upon publication, and deposit it at a DOI-minting repository [19]. These practices ensure that both the experimental and computational aspects of research can be examined and replicated by the community.

Automated Platforms and High-Throughput Experimentation

Intelligent Automated Synthesis Platforms

Automation and high-throughput techniques provide a technological foundation for addressing reproducibility challenges while dramatically increasing research efficiency. Intelligent automated platforms for high-throughput chemical synthesis offer distinctive advantages of low consumption, low risk, high efficiency, high reproducibility, high flexibility, and excellent versatility [23]. These systems integrate various capabilities including automated combinatorial materials synthesis, high-throughput screening, and optimization for large-scale data generation [24]. At Pacific Northwest National Laboratory (PNNL), for instance, HTE equipment enables researchers to perform more than 200 experiments per day—a dramatic increase from the handful possible with manual approaches—while maintaining comprehensive data tracking [24]. These systems typically include solid dispensers, liquid handlers with positive displacement pipetting for viscous liquids/slurries, capping/uncapping stations, on-deck magnetic stirrers with heating/cooling, vortex mixers, centrifuges, and multiple optimization sampling reactors [24].

Universal Chemical Programming Languages

A transformative approach to standardization involves the development of universal chemical programming languages. Recent work demonstrates the use of χDL (chiDL), a human- and machine-readable language that standardizes synthetic procedures and enables their execution across automated synthesis platforms [19]. This language allows synthetic procedures to be encoded and performed across different automated platforms, as demonstrated through transfers between systems at the University of British Columbia and the University of Glasgow [19]. The approach enables synthetic procedures to be shared and validated between automated synthesis platforms in host-to-peer or peer-to-peer transfers, similar to BitTorrent data file sharing, establishing a framework for truly reproducible protocol distribution [19].

Integrated HTE Workflows and Data Management

Complete HTE workflows support researchers from experiment submission through to results presentation. Systems like HTE OS provide open-source, freely available workflows that integrate reaction planning, execution, and data analysis [25]. These platforms typically utilize core spreadsheets for reaction planning and communication with robotic systems, while funneling all generated data into analysis environments where users can process and interpret results [25]. The workflow includes tools for parsing LCMS data and translating chemical identifiers, providing essential data-wrangling capabilities to complete the experimental cycle [25]. When applied to organic synthesis and methodology development, these integrated systems help address challenges posed by diverse workflows and reagents through standardized protocols, enhanced reproducibility, and improved efficiency [1].

Integrated Framework: Connecting Standardization and Automation

The power of standardized protocols and automated platforms multiplies when these elements are integrated into a cohesive workflow. The combination creates a virtuous cycle where standardized reporting informs automated execution, which in turn generates consistent data that further refines standards. This integration represents the future of reproducible synthetic research.

This integrated workflow demonstrates how standardized protocols become machine-readable through digital encoding using systems like χDL, enabling seamless transfer between automated HTE platforms. The automated execution generates high-quality, reproducible data that serves dual purposes: validating and refining community standards while simultaneously training AI/ML models for reaction optimization. These models, in turn, provide predictive insights that further enhance standardized protocols, creating a continuous improvement cycle for synthetic reproducibility.

The Scientist's Toolkit: Essential Research Reagent Solutions

Table 2: Essential Components for Reproducible High-Throughput Experimentation

| Component | Function | Implementation Example |

|---|---|---|

| Liquid Handling Systems | Precise reagent dispensing with minimal cross-contamination | Positive displacement pipetting for viscous liquids/slurries [24] |

| Solid Dispensers | Automated powder weighing and distribution | Modular robotic platforms with analytical balance [24] |

| Reaction Stations | Controlled environment for parallel reactions | Multi-well microarray substrates with stirring and heating/cooling [24] |

| Universal Chemical Language | Standardized protocol sharing across platforms | χDL for human- and machine-readable procedures [19] |

| Open-Source HTE Software | Integrated workflow management | HTE OS for experiment submission to results presentation [25] |

| High-Throughput Analysis | Rapid characterization of reaction outcomes | Compatible property measurements (solubility, conductivity, etc.) [24] |


Implementation of these toolkit components requires careful consideration of system configuration. For general experiments, modular robotic platforms operated in nitrogen purge boxes provide flexibility, while for highly sensitive experiments, completely configurable systems within argon glove boxes maintain appropriate environments [24]. The integration of these components enables the characterization of basic physical properties such as solubility, conductivity, and viscosity alongside electrochemical measurements, providing comprehensive data for reaction optimization and validation [24].

The convergence of standardized reporting protocols and automated high-throughput platforms presents a transformative opportunity for addressing longstanding reproducibility challenges in synthetic chemistry. Through comprehensive documentation standards, universal chemical programming languages, and integrated robotic systems, the research community can establish a new paradigm of reproducible synthesis. The power-law model of material resynthesis suggests that systematic efforts to increase replication rates could significantly enhance the reliability of synthetic research [20]. Future developments will likely focus on increasingly intelligent platforms that leverage artificial intelligence techniques not just for execution, but for synthetic route design and outcome prediction [23]. As these technologies mature and become more accessible, they will reshape traditional disciplinary approaches, promote innovative methodologies, redefine the pace of chemical discovery, and ultimately transform materials manufacturing processes across pharmaceutical, energy storage, and specialty chemicals sectors.

The discovery and optimization of organic synthetic routes are fundamental to progress in the pharmaceutical, materials, and agricultural industries. Historically, this process has been characterized by labor-intensive, time-consuming experimentation guided primarily by human intuition and one-variable-at-a-time (OVAT) approaches. This traditional methodology requires exploring high-dimensional parametric spaces and presents a significant bottleneck in the iterative design–make–test–analyze (DMTA) cycle [26]. However, a profound paradigm shift is occurring, driven by the convergence of high-throughput experimentation (HTE) and data-driven machine learning (ML) algorithms. This transformation enables multiple reaction variables to be synchronously optimized, significantly reducing experimentation time and minimizing human intervention [26]. The bridging of academic research and industrial implementation represents the most significant advancement in synthetic methodology in decades, moving the field from artisanal practice to automated, data-driven science.

Core Methodologies: HTE and Machine Learning Integration

High-Throughput Experimentation Platforms

HTE has emerged as a foundational technology for systematically interrogating reactivity across diverse chemical spaces. Modern HTE platforms leverage lab automation to execute thousands of reactions in parallel, generating expansive datasets that capture complex relationships between reaction components and outcomes [27]. These platforms provide several distinct advantages over traditional approaches:

- Broad Reaction Space Exploration: HTE enables comprehensive investigation of numerous variables simultaneously, including catalysts, solvents, bases, temperatures, and concentrations, across hundreds to thousands of discrete experiments [27].

- Valuable Negative Data: Unlike conventional literature sources that predominantly report successful reactions, HTE datasets capture valuable failure data that provide crucial boundaries for reaction feasibility [27].

- Real-World Relevance: HTE data typically samples reaction spaces of direct interest to medicinal and process chemistry, ensuring that data-driven findings possess immediate practical relevance [27].

Recent disclosures of large-scale HTE datasets, such as the release of over 39,000 previously proprietary HTE reactions covering cross-coupling reactions and chiral salt resolutions, have dramatically improved the data landscape for method development [27].

Machine Learning Algorithms for Reaction Optimization

Machine learning algorithms serve as the analytical engine that transforms HTE data into predictive models and actionable chemical insights. Several ML approaches have demonstrated particular utility for reaction optimization:

- Random Forests: These non-linear algorithms effectively handle the complex, interdependent nature of reaction parameters without requiring data linearization, providing robust variable importance metrics that identify which reaction components (catalysts, solvents, etc.) most significantly influence outcomes [27].

- Bayesian Optimization: This sequential design strategy efficiently navigates high-dimensional parameter spaces to identify optimal reaction conditions with minimal experimentation, benefiting from literature-informed initializations that outperform random sampling [28].

- Multi-label Classification: This approach predicts multiple condition components simultaneously, treating condition recommendation as a structured output problem over a fixed set of reaction roles [28].

- Sequence-to-Sequence Models: These models generate structured condition recommendations using open vocabularies (e.g., SMILES strings), providing flexibility beyond predefined chemical lists [28].

Table 1: Key Machine Learning Approaches in Reaction Optimization

| Algorithm Type | Primary Function | Advantages | Limitations |

|---|---|---|---|

| Random Forests | Variable importance analysis | Handles non-linear data; No requirement for linearization | Limited extrapolation beyond training data |

| Bayesian Optimization | Efficient parameter space navigation | Minimal experiments required; Excellent for optimization campaigns | Requires careful initialization; Computationally intensive |

| Multi-label Classification | Predicts multiple condition components | Structured outputs; Handles fixed reaction roles | Limited to predefined chemical lists |

| Sequence-to-Sequence Models | Generates agent identities | Open vocabulary; Potential for novel agent prediction | May generate impractical or non-executable suggestions |

Analytical Frameworks: Extracting Chemical Insights from HTE Data

The HiTEA Statistical Framework

The High-Throughput Experimentation Analyzer (HiTEA) represents a significant advancement in statistical frameworks for extracting chemical insights from HTE datasets. HiTEA employs three orthogonal statistical methodologies that work in concert to elucidate the hidden "reactome" – the complex network of relationships between reaction components and outcomes [27]:

- Random Forests identify which reaction variables (catalyst, solvent, temperature, etc.) exert the most significant influence on reaction outcomes, answering the question: "Which variables are most important?" [27]

- Z-score ANOVA-Tukey analysis pinpoints statistically significant best-in-class and worst-in-class reagents, enabling robust performance comparisons across chemical classes [27].

- Principal Component Analysis (PCA) visualizes how these best-in-class and worst-in-class reagents populate the chemical space, revealing clustering patterns and selection biases within datasets [27].

This combination of statistical analyses requires no assumptions about underlying data structure, accommodates non-linear and discontinuous relationships, and functions effectively with the sparse, non-combinatorial data typical of chemical datasets [27].
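The z-score step can be illustrated with a short sketch. This is not the HiTEA implementation: the record fields (`campaign`, `ligand`, `yield`), the reagent names, and the toy yields are assumptions made for illustration, and the ANOVA-Tukey significance test is left out. The sketch only shows why yields are normalized within each screening campaign before reagents are compared across campaigns.

```python
from collections import defaultdict
from statistics import mean, pstdev


def zscore_yields(records):
    """Z-score each yield against the mean and spread of its own campaign."""
    by_campaign = defaultdict(list)
    for rec in records:
        by_campaign[rec["campaign"]].append(rec["yield"])
    stats = {c: (mean(v), pstdev(v)) for c, v in by_campaign.items()}

    scored = []
    for rec in records:
        mu, sigma = stats[rec["campaign"]]
        # A campaign with identical yields carries no ranking information.
        z = 0.0 if sigma == 0 else (rec["yield"] - mu) / sigma
        scored.append({**rec, "z": z})
    return scored


def rank_reagents(scored, role):
    """Return (mean z-score, reagent) pairs for one role, best first."""
    groups = defaultdict(list)
    for rec in scored:
        groups[rec[role]].append(rec["z"])
    return sorted(((mean(z), name) for name, z in groups.items()), reverse=True)


# Toy data (hypothetical): two campaigns with different overall yield levels.
records = [
    {"campaign": "A", "ligand": "ligand_1", "yield": 10.0},
    {"campaign": "A", "ligand": "ligand_2", "yield": 50.0},
    {"campaign": "A", "ligand": "ligand_3", "yield": 90.0},
    {"campaign": "B", "ligand": "ligand_1", "yield": 20.0},
    {"campaign": "B", "ligand": "ligand_2", "yield": 40.0},
    {"campaign": "B", "ligand": "ligand_3", "yield": 60.0},
]
ranking = rank_reagents(zscore_yields(records), "ligand")
for score, name in ranking:
    print(f"{name}: mean z = {score:+.2f}")
```

Normalizing per campaign keeps a reagent from looking good merely because it was tested mostly on easy substrates; a full analysis would follow this with a significance test before calling any reagent best-in-class.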

The QUARC Framework for Quantitative Condition Recommendation

Bridging the gap between qualitative recommendations and executable procedures, the QUARC (QUAntitative Recommendation of reaction Conditions) framework extends condition prediction beyond agent identities and temperature to include quantitative details essential for experimental execution [28]. QUARC formulates condition recommendation as a four-stage prediction task:

- Agent Identity Prediction: Identifying suitable catalysts, solvents, and additives.

- Temperature Prediction: Recommending optimal reaction temperatures.

- Reactant Amount Prediction: Determining appropriate equivalence ratios for reactants.

- Agent Amount Prediction: Specifying quantities for non-reactant components [28].

This sequential inference approach captures natural dependencies between conditions (e.g., temperature selection depends on catalyst identity) while maximizing data usage across incomplete reaction records [28]. The framework's reaction-role agnosticism avoids ambiguities in classifying chemical agents and provides structured outputs readily convertible into executable instructions for automated synthesis platforms [28].
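The four stages above can be sketched as a chain in which each predictor sees the partial result of the earlier ones. The code below is a structural illustration only, not QUARC's code or API: the `Conditions` class, the stage functions, the placeholder agent names, and the fixed rules inside each stage are all hypothetical stand-ins for trained models.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Conditions:
    """Structured output that is filled in stage by stage."""
    agents: list = field(default_factory=list)
    temperature_c: Optional[float] = None
    reactant_equiv: dict = field(default_factory=dict)
    agent_equiv: dict = field(default_factory=dict)


def predict_agents(reaction, partial):
    # Stage 1 (placeholder rule): choose agent identities.
    return ["catalyst_X", "solvent_Y"]


def predict_temperature(reaction, partial):
    # Stage 2 (placeholder rule): depends on the agents chosen in stage 1.
    return 100.0 if "catalyst_X" in partial.agents else 25.0


def predict_reactant_amounts(reaction, partial):
    # Stage 3 (placeholder rule): first reactant is limiting, others in excess.
    reactants = reaction.split(">")[0].split(".")
    return {r: (1.0 if i == 0 else 1.2) for i, r in enumerate(reactants)}


def predict_agent_amounts(reaction, partial):
    # Stage 4 (placeholder rule): equivalents relative to the limiting reactant.
    return {a: (0.05 if a.startswith("catalyst") else 10.0) for a in partial.agents}


STAGES = [
    ("agents", predict_agents),
    ("temperature_c", predict_temperature),
    ("reactant_equiv", predict_reactant_amounts),
    ("agent_equiv", predict_agent_amounts),
]


def recommend(reaction):
    """Run the stages in order; each one can read what came before."""
    cond = Conditions()
    for attr, predictor in STAGES:
        setattr(cond, attr, predictor(reaction, cond))
    return cond


rec = recommend("c1ccccc1Br.NCC>>c1ccccc1NCC")
print(rec)
```

Keeping the output in one typed record is what makes the later translation into instrument instructions straightforward: every field has a defined meaning and unit.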

QUARC Workflow: Sequential prediction pipeline for quantitative reaction conditions.

Research Reagent Solutions

Successful implementation of HTE workflows requires specialized materials and platforms designed for high-throughput screening. The table below details essential components of a modern HTE toolkit:

Table 2: Essential Research Reagent Solutions for HTE Workflows

| Tool/Reagent | Function | Implementation Role |
|---|---|---|
| Automated Synthesis Platforms | Parallel reaction execution | Enables rapid empirical testing of thousands of reaction conditions [26] |
| Broad Catalyst/Ligand Libraries | Diverse metal complexes and organocatalysts | Provides structural diversity for discovering optimal catalytic systems [27] |
| Solvent Collections | Diverse polarity, coordinating ability, and green credentials | Screens solvent effects on yield and selectivity [27] |
| HTE Reaction Blocks | Specialized vessels for parallel reactions | Standardizes format for automated screening campaigns [27] |
| Analytical Integration | HPLC, GC-MS, SFC for rapid analysis | Provides high-throughput outcome quantification [27] |

Data Processing and Visualization Tools

Effective data management and visualization are critical for interpreting HTE results. Key computational tools include:

- Color Palette Generators: Specialized tools for creating perceptually uniform color schemes that ensure accessibility (WCAG 2.1 contrast ratios of at least 4.5:1) and effective data visualization [29].

- Statistical Analysis Packages: Implementations of PCA, random forests, and ANOVA for identifying significant trends and outliers in multivariate HTE data [27].

- Chemical Space Visualization: Dimensionality reduction techniques (PCA, t-SNE) for mapping reagent relationships and performance clusters [27].
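The 4.5:1 contrast threshold mentioned for color palettes can be checked programmatically. The sketch below follows the relative-luminance and contrast-ratio definitions published in WCAG 2.x; the function names are our own, and the two grey values are only test colors, not part of any tool cited here.

```python
def _linearize(channel_8bit):
    """Convert an 8-bit sRGB channel to linear light (WCAG 2.x definition)."""
    c = channel_8bit / 255.0
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color):
    """Relative luminance of a '#rrggbb' color, from 0 (black) to 1 (white)."""
    h = hex_color.lstrip("#")
    r, g, b = (int(h[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _linearize(r) + 0.7152 * _linearize(g) + 0.0722 * _linearize(b)


def contrast_ratio(color_a, color_b):
    """Contrast ratio (lighter + 0.05) / (darker + 0.05), ranging from 1 to 21."""
    lighter, darker = sorted(
        (relative_luminance(color_a), relative_luminance(color_b)), reverse=True
    )
    return (lighter + 0.05) / (darker + 0.05)


def passes_aa(foreground, background, threshold=4.5):
    """True if the pair meets the 4.5:1 threshold for normal-size text."""
    return contrast_ratio(foreground, background) >= threshold


for fg in ("#000000", "#767676", "#777777"):
    print(fg, round(contrast_ratio(fg, "#ffffff"), 2), passes_aa(fg, "#ffffff"))
```

A check like this is most useful for axis labels and annotations on heatmaps, where a palette that looks fine on screen can fall below the threshold against a light background.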

Case Studies: Successful Academic-Industrial Integration

Cross-Coupling Reaction Optimization

The application of HiTEA to Buchwald-Hartwig coupling datasets comprising approximately 3,000 reactions demonstrated the framework's ability to extract nuanced structure-activity relationships [27]. The analysis confirmed the known dependence of yield on ligand electronic and steric properties while also identifying unexpected reagent interactions that would be difficult to detect through traditional experimentation [27]. Temporal analysis of the dataset revealed evolving best-practice conditions while demonstrating that inclusion of historical data expands the investigational substrate space, ultimately providing a more comprehensive understanding of the reaction class [27].

QUARC Performance Evaluation

In comparative studies, the QUARC framework demonstrated modest but consistent improvements over popularity-based and nearest-neighbor baselines across all condition prediction tasks [28]. The model's practical utility was particularly evident in its ability to recommend viable conditions for diverse reaction classes, including those with limited precedent in the training data [28]. By incorporating quantitative aspects of reaction setup, QUARC provides more actionable recommendations than models limited to qualitative agent identification, effectively bridging the specificity gap between retrosynthetic planning and experimental execution [28].

HTE-ML Integration: Cyclical workflow from data generation to industrial implementation.

Challenges and Future Directions

Despite significant progress, several challenges remain in fully bridging academic research and industrial implementation:

- Data Quality and Standardization: Yields in HTE are often derived from uncalibrated ultraviolet absorbance ratios, which makes them more qualitative than yields determined by quantitative nuclear magnetic resonance spectroscopy or by isolation [27].

- Dataset Biases: HTE datasets may be subject to selection biases in reactant and condition choice, creating regions of substantial data sparsity that limit model generalizability [27].

- Interpretability Gap: While models can successfully predict high-performing conditions, translating these predictions into fundamental chemical principles and mechanistic understanding remains challenging [27].

- Integration with Automated Synthesis: Converting model recommendations into executable code for robotic platforms requires additional layers of translation and validation [28].

Future developments will likely focus on closing the loop between prediction and experimental validation through autonomous discovery systems, improving model interpretability to extract novel chemical insights, and developing standardized protocols for data sharing and comparison across institutions [26] [27]. As these technologies mature, the bridge between academic research and industrial implementation will strengthen, fundamentally transforming how organic molecules are designed and synthesized.

Advanced HTE Methodologies and Real-World Applications in Drug Discovery and Development

High-Throughput Experimentation (HTE) has fundamentally transformed the landscape of organic synthesis research, enabling a paradigm shift from traditional one-variable-at-a-time (OVAT) approaches to massively parallelized experimentation. This evolution is characterized by the progressive miniaturization and automation of reaction platforms, moving from established 96-well plate systems to advanced 1536-well nanoscale configurations. Within organic chemistry, HTE serves as a powerful tool for accelerating reaction discovery, optimizing synthetic methodologies, and generating comprehensive datasets for machine learning applications [1] [13]. The core principle of HTE involves the miniaturization and parallelization of chemical reactions, allowing researchers to explore a vast experimental space—encompassing catalysts, solvents, reactants, and conditions—with unprecedented speed and efficiency while conserving valuable resources [12].

The drive toward miniaturization, from microliter volumes in 96-well plates to nanoliter-scale reactions in 1536-well systems, is motivated by the need to enhance screening efficiency, reduce material consumption, lower costs, and minimize chemical waste [13] [30]. This transition is particularly crucial in fields like pharmaceutical development, where HTE has proven instrumental in derisking the drug discovery and development (DDD) pipeline by enabling the rapid testing of numerous relevant molecules [13]. Furthermore, the standardized, reproducible datasets generated by HTE workflows are invaluable for building predictive models that augment chemist intuition and guide the development of new synthetic methodologies [31] [1]. This technical guide examines the architecture, capabilities, and applications of different HTE platform configurations, providing a framework for their implementation in modern organic synthesis research.

Core HTE Platform Specifications and Comparisons

Quantitative Comparison of HTE Platforms

The choice of HTE platform is a critical determinant of experimental strategy, impacting throughput, resource requirements, and operational complexity. The following table summarizes the key specifications of standard HTE formats.

Table 1: Technical Specifications of Standard HTE Platform Configurations

| Platform Format | Well Volume Range | Well Count | Typical Reaction Scale | Primary Applications & Notes |
|---|---|---|---|---|
| 96-Well Plate | ~300 μL [16] | 96 | Micromole scale [13] | Standard Screening: Accessible, semi-manual or automated; ideal for initial reaction discovery and optimization [13]. |
| 384-Well Plate | Information missing | 384 | Information missing | High-Throughput Screening: Used for screening many reactions or substrates simultaneously [16]. |
| 1536-Well Plate | 1 to 10 μL [32] | 1536 | Nanomole scale [13] | Ultra High-Throughput Screening (uHTS): Designed for automation and robotics; maximizes screening efficiency in minimal space [32] [30]. |

Analysis of Platform Selection

The 96-well plate format represents a highly accessible entry point into HTE. It operates at the micromole scale, typically using 1 mL vials in a 96-well plate format, which strikes a balance between manageable manual liquid handling and a significant increase in throughput over traditional round-bottom flasks [13]. This format is versatile and can be implemented in both automated and semi-manual setups, making it a mainstay in both academic and industrial laboratories for initial reaction discovery and optimization campaigns [13].

In contrast, the 1536-well plate configuration is engineered for ultra high-throughput screening (uHTS) where maximizing data points per unit area is paramount. With working volumes as low as 1 to 10 μL and reactions run at the nanomole scale, this format drastically reduces the consumption of often precious reagents and compounds [13] [32]. These plates are manufactured to international standards (e.g., ANSI/SBS) for compatibility with automated robotic systems [32]. Due to the precision required for dispensing and handling at this scale, their use is predominantly confined to core laboratories, pharmaceutical research and development labs, and contract research organizations equipped with specialized liquid handling robots [30].

The progression from 96-well to 1536-well systems is not merely a matter of increasing well count; it represents a fundamental shift in workflow philosophy. While 96-well plates offer a bridge between traditional and high-throughput methods, 1536-well plates are a cornerstone of fully industrialized, data-driven chemical research, enabling the rapid generation of very large datasets essential for robust machine learning and cheminformatics [1] [13].
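To make the format comparison concrete, the sketch below counts how many plates a full-factorial screen would need in each format and maps a linear well index to a well label. The row-by-column layouts (8 x 12, 16 x 24, 32 x 48) are the standard microplate geometries; the function names and the example screen of 12 catalysts, 8 solvents, and 4 bases are assumptions made for illustration.

```python
import math

# Rows x columns for the standard microplate formats.
PLATE_SHAPES = {96: (8, 12), 384: (16, 24), 1536: (32, 48)}


def row_label(row_index):
    """0 -> 'A', 25 -> 'Z', 26 -> 'AA', 31 -> 'AF' (needed for 1536-well plates)."""
    label = ""
    n = row_index + 1
    while n > 0:
        n, rem = divmod(n - 1, 26)
        label = chr(ord("A") + rem) + label
    return label


def well_label(index, plate_format):
    """Map a 0-based, row-major well index to a label such as 'H12'."""
    rows, cols = PLATE_SHAPES[plate_format]
    if not 0 <= index < rows * cols:
        raise ValueError(f"index {index} is outside a {plate_format}-well plate")
    row, col = divmod(index, cols)
    return f"{row_label(row)}{col + 1}"


def plates_needed(n_conditions, plate_format):
    """Number of plates required if every well holds one condition."""
    return math.ceil(n_conditions / plate_format)


# Hypothetical full-factorial screen: 12 catalysts x 8 solvents x 4 bases.
n_conditions = 12 * 8 * 4
for fmt in PLATE_SHAPES:
    last = well_label(fmt - 1, fmt)
    print(f"{fmt}-well: {plates_needed(n_conditions, fmt)} plate(s), last well {last}")
```

The count ignores wells reserved for controls and replicates, which in practice reduce the number of conditions per plate.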

Advanced HTE Configurations and Enabling Technologies

Integration of Flow Chemistry with HTE

While plate-based HTE is highly effective for screening discrete reaction conditions, it faces limitations in handling continuous variables like temperature, pressure, and reaction time with high precision [16]. The integration of flow chemistry with HTE principles has emerged as a powerful solution to these challenges, creating a complementary and enabling technology platform [16]. In flow chemistry, reactions are performed in continuously flowing streams within narrow tubing or microreactors, rather than in batch wells. This approach provides several distinct advantages for HTE, including superior heat and mass transfer, the ability to safely use hazardous reagents, and access to wider process windows (e.g., high temperatures and pressures) [16].

A key benefit of flow-based HTE is the ability to dynamically and continuously vary parameters throughout an experiment. This allows for the high-throughput investigation of continuous variables in a way that is not feasible in static batch plates [16]. Furthermore, scale-up from a screening campaign is often more straightforward in flow: instead of re-optimizing for a larger batch vessel, output can be increased by running the optimized flow process for a longer duration (often called scaling out) or by operating several identical reactors in parallel (numbering up) [16]. This technology has been successfully applied across diverse areas of organic synthesis, including photochemistry, electrochemistry, and catalysis [16]. For instance, flow HTE has been used to safely screen photoredox reactions, where the short path lengths in microreactors ensure uniform irradiation, overcoming the light penetration issues common in batch photochemistry [16].
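The arithmetic behind increasing output by running longer is simple enough to sketch. The example assumes an idealized steady-state reactor, ignoring start-up, dispersion, and fouling; the function names and the numbers (a 10 mL reactor, 0.5 mL/min, 0.1 M feed, 80% yield) are illustrative assumptions rather than values from the cited work.

```python
def residence_time_min(reactor_volume_ml, flow_rate_ml_min):
    """Mean residence time: reactor volume divided by total flow rate."""
    return reactor_volume_ml / flow_rate_ml_min


def product_output_mmol(conc_mol_l, flow_rate_ml_min, run_time_min, yield_fraction):
    """Product formed at steady state over a given run time.

    1 mol/L equals 1 mmol/mL, so concentration x flow rate gives mmol/min.
    """
    return conc_mol_l * flow_rate_ml_min * run_time_min * yield_fraction


def run_time_for_target_min(target_mmol, conc_mol_l, flow_rate_ml_min, yield_fraction):
    """Run time needed to reach a target amount without changing conditions."""
    return target_mmol / (conc_mol_l * flow_rate_ml_min * yield_fraction)


tau = residence_time_min(reactor_volume_ml=10.0, flow_rate_ml_min=0.5)
one_hour = product_output_mmol(0.1, 0.5, run_time_min=60.0, yield_fraction=0.8)
needed = run_time_for_target_min(100.0, 0.1, 0.5, yield_fraction=0.8)

print(f"residence time: {tau:.0f} min")
print(f"output in 1 h:  {one_hour:.1f} mmol")
print(f"time for 100 mmol: {needed:.0f} min")
```

Because residence time, temperature, and mixing are unchanged, the conditions found during screening carry over directly; only the run time grows with the target amount.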

Nanoscale and Magnetic Catalytic Systems

The push towards miniaturization in HTE is paralleled by the adoption of advanced nanoscale materials as catalysts, which align perfectly with the reduced reaction volumes of systems like 1536-well plates. Nanoparticles and Magnetic Catalytic Systems (MCSs) are two prominent classes of materials that enhance HTE workflows [33] [34].